The latest version of Apple's Mac OS contains some excellent new features aimed at musicians and audio engineers. Is this one OS upgrade you won't mind performing on your studio computer?

Without wishing to be too nostalgic, it's hard to believe that Mac OS X has been with us in a final, released state for over four years: v10.0 was released on March 24th 2001 after many public and developer previews. 2001 also saw the release of Microsoft's Windows XP operating system, but while musicians and audio engineers were up and running pretty quickly on this operating system (an evolution of Windows ME and 2000), Mac OS X represented a more radical change for both users and developers of Mac audio and MIDI software, breaking away from 17 years of the 'classic' pre-OS X Mac OS tradition.

However, while some Mac-based musicians clung to OS 9 for the first few years of OS X, the new operating system, though not initially great from a MIDI and audio developer's standpoint, just got better and better. With the later releases of 10.1 (Puma) and, more significantly, 10.2 (Jaguar), the audio and MIDI features of OS X matured and stabilised. The release of Pro Tools 6 probably helped, but Jaguar was really when Mac-based musicians began migrating to the new operating system in serious numbers, and the release of 10.3 (Panther) made everyone feel much more confident. Considering the functionality now in Mac OS 10.4 (codenamed Tiger), it's amazing how far Apple have come in just four years.

Bonjour, Monsieur Le Tigre!

It's worth mentioning right away (as we'll be coming back to it later) that one well-known technology introduced back in Jaguar has been renamed following a trademark settlement. Rendezvous, the technology for automatically locating computers, devices (such as printers) and services (such as the one used to share users' iTunes libraries across a network), is now known as Bonjour in Tiger, and every reference to Rendezvous on Apple's web site and in the operating system has been changed to Bonjour.

Most of Tiger's new general features will be fairly well known by the time you read this, since there's been a large amount of coverage in the mainstream computer press and, of course, on the Internet. Among the headline non-musical features you'll probably have heard about already is Spotlight, a new search technology that allows you to find files based on their content and metadata. For example, if you click on the new blue, circular Spotlight icon at the top right of the menu bar and type someone's name, Spotlight will find all the information related to that name in every supporting application on your Mac: Address Book will give you a link to the relevant entry, Mail will give you links to any emails to and from this person, and so on.

There's also Dashboard, which is activated by pressing F12 (by default) and displays a collection of Widgets on the screen that you can move around, remove, or add to. A Widget is a small application that displays information or provides access to a commonly used service on the Internet, and among the Widgets included with Tiger are a calculator, a weather display, a calendar, a world clock and a word translator. Automator is a new AppleScript-based application that enables you to create automated workflows more easily; but while it looks pretty neat, most music and audio applications don't support AppleScript directly, so for the moment it's mostly going to be useful for creating workflows for encoding audio files or burning them to CD.

As discussed in September 2004's preview of Tiger in Apple Notes, Tiger also offers significant improvements for those writing 64-bit applications for the G5 processor, and applications can now address much more physical and virtual memory than in Panther. Another significant technological improvement in Tiger, and one more relevant to music applications (or at least to their developers), is Quartz 2D Extreme, which accelerates 2D graphics by moving the drawing operations over to the GPU (graphics processing unit) on the video card. As long as you have a video card capable of running Quartz Extreme, this offers 2D drawing the same kind of substantial performance improvement that the original Quartz Extreme brought to window compositing in Jaguar. It's therefore especially important for music applications, whose user interfaces rely much more heavily on 2D than 3D graphics. In order to make use of Quartz Extreme, Apple state that you need a video card with at least 16MB of RAM and either an Nvidia GeForce2 MX (or better) or an ATI Radeon GPU.

Changed To The Core

So far, this is pretty much what any magazine will have told you about Tiger. So what of the features that will improve the lives of SOS readers? These can broadly be summed up under two headings, which we'll cover in detail over the next few pages. Firstly, and perhaps most importantly, Tiger finally removes the OS-level restriction on the number of simultaneous audio I/O devices that can be addressed by the Mac OS, restoring the ability to use multiple interfaces for audio I/O that has been missing in action since the switch from OS 9 to OS X. (Users of MOTU's Digital Performer are the exception, since it got around the problem at the application level; this new development is a permanent, OS-level fix, so any application can now benefit from it.) Secondly, Tiger introduces many new features that make networking music computers much more viable than before. If you want to set up multiple Macs to handle complex, processor-heavy audio tasks, or to stream MIDI easily from one central computer to others that contain all your library sounds or CPU-hungry virtual instruments, it's now easier than ever.

Tiger's Dashboard is activated by pressing F12, and displays various Widgets you can move and arrange as you like for translating words, looking up words in a dictionary, checking the weather and more. More Widgets can be added and many others can be downloaded from Apple and various third-party developers.

Before going into detail, the first thing to say is that the basic foundations of Mac OS X's MIDI and audio technologies have remained the same since the publication of the original SOS article on Mac OS X For Musicians, which looked at Mac OS 10.2 (see SOS April 2003). So, if you want to know more about the background to these technologies, you might want to check out that article.

The audio and music-related abilities in OS X are represented by three different technologies: Core Audio, Core MIDI, and Audio Units. Core Audio deals with how applications send and receive audio data from either your Mac's built-in audio hardware or other devices you might attach via USB, Firewire or PCI, while Core MIDI handles how OS X deals with MIDI devices attached to your system. The Core prefix is adopted by the majority of OS X's frameworks that provide functionality (such as audio and MIDI) to applications — other examples introduced in Tiger include Core Data (a data-modelling framework for providing database-like features) and Core Image (which is to image processing what Core Audio is to audio processing).

Finally, Audio Units is a format for developing audio instrument and effects plug-ins that work in compatible audio and music applications under OS X (Apple's nomenclature, incidentally, is consistent — so plug-ins for image processing that work with the new Core Image format are called Image Units). Audio Units are equivalent to DirectX plug-ins in Windows, providing a non-application-specific format for audio plug-ins that means developers can produce one plug-in and have it work without alteration in applications from multiple vendors.

From a user's perspective, the details of configuring how different audio and MIDI devices are used by Core Audio and Core MIDI are taken care of by the Audio MIDI Setup (or AMS) tool, which can be found in the Applications/Utilities folder of your boot disk. The first change you'll notice in version 2.1 of AMS, supplied with Tiger, is cosmetic: the brushed-metal look has been replaced with a regular Aqua window appearance, a modest change that rather belies the significant new Core Audio and Core MIDI features that can be configured in this application. Also on the subject of appearance, you'll notice that, in keeping with other areas of Tiger, the various windows and sheets of AMS now contain lilac-coloured circular buttons with question marks, indicating that help documentation is available for that part of the program via OS X's Help Viewer.

Core MIDI Networks

OS X has always had a well-thought-out MIDI subsystem in Core MIDI, and this is perhaps no surprise given that one of its architects is Doug Wyatt, who previously worked for Opcode and developed, amongst other things, OMS (the most widely used MIDI subsystem in the days of OS 9) and Acadia, the audio engine used by later versions of Opcode's old Vision sequencer. Indeed, as has been discussed in the past, the structure of the MIDI Devices page of AMS bears a resemblance to the OMS Studio Setup application of yesteryear, and, from the user's perspective, the most visible new MIDI-related feature added to OS X with Panther was the IAC (Inter-Application Communication) Driver device. The IAC device (or buss) was a concept originally introduced in OMS to provide MIDI ports for sending and receiving MIDI data between multiple applications running on the same computer.

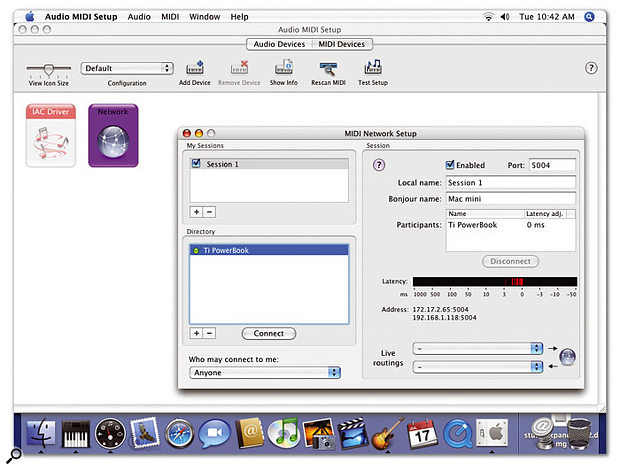

This is what the MIDI Network Setup window looks like on a Mac receiving MIDI data over a network under Tiger. The Local name also appears in the Participants list on the transmitting Mac (see the screengrab overleaf). The Latency monitor shows the timing of incoming MIDI events.

Core MIDI in Tiger takes the idea of sharing MIDI data between applications one step further with the addition of a Network device (shown above), which makes it possible to send MIDI data between applications running on different Macs via a network — so long as each machine is running Tiger, of course. This feature has many possible uses, the most significant being the idea of using other Macs to run software instruments that can be played from your main sequencing application, either with hosts such as Steinberg's V-Stack or instruments that can run as stand-alone applications, like NI's Kontakt. Another possible use is when you want to send MIDI timecode from one Mac to another Mac. For me personally, this is usually the only reason I have a MIDI interface on a Pro Tools rig, so hopefully using the new MIDI networking facilities will enable yet more hardware to disappear from the studio.

The configuration of a MIDI network is fairly easy. In AMS, you open the MIDI Devices page by clicking on the MIDI Devices tab at the top of the main window, and double-click the Network device to open the MIDI Network Setup window (see the screenshot below). The MIDI Network is based on the concept of Sessions, where computers on your network can participate in a given Session to send and receive MIDI data. Each Session you have in the MIDI Network Setup window represents a single pair of MIDI input and output ports on your system.

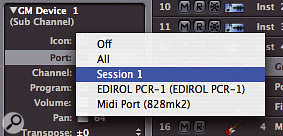

To create a new Session, you click the '+' button in the 'My Sessions' pane at the top left of the MIDI Network Setup window. The settings for the Session selected in 'My Sessions' are configured in the 'Session' pane on the right-hand side of the same window. The name of the Session is displayed under the 'Local Name' setting and can be changed by simply typing in a new one; this is also the name that will identify the Session's MIDI port in your MIDI applications. You can also specify a Bonjour name for the Session, which is the name OS X's Bonjour service will use to publish that Session on your local network (by default, your computer's name from the Sharing preferences panel is used here). Underneath the 'My Sessions' pane is the Directory, which lists all the available Sessions you can join on your network, again listed by their Bonjour names.

The name of the MIDI Networking Session is used as the name for the corresponding MIDI input and output ports in Core MIDI applications, as seen here in Logic Pro 7, where 'Session 1' shows up as an available MIDI Output port.

The next step is to select a Session in the Directory from another computer on the network to connect with the Session you're currently setting up. If the connection is successful, the Session name from the Directory will be listed in the Participants list in the right-hand Session pane. At this point, you should have a functioning MIDI network.

If you send MIDI data to an active Session on one Mac (by selecting the name of that Session as your MIDI output port in whatever MIDI application you're running; the screenshot above shows what this looks like in Apple's Logic), it should be received by all the other Macs participating in that same Session. The neat thing is that if you have two other Macs participating in the same Session, both will receive the same data from the 'master' Mac, which is useful for slaving multiple machines to the same timecode stream, or for layering instruments from two computers playing the same music.
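The fan-out behaviour described above can be modelled in a few lines of Python. This is a minimal sketch, not Core MIDI code: the `Session` and `Participant` classes and the Mac names are hypothetical, and the network is reduced to an in-memory list, so it only illustrates that one send reaches every participant.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical stand-in for a Mac that has joined a Session."""
    name: str
    received: list = field(default_factory=list)

    def deliver(self, message: bytes) -> None:
        # A real receiver would pass these bytes on to a MIDI application.
        self.received.append(message)


@dataclass
class Session:
    """Hypothetical model: one Session behaves like one MIDI port pair."""
    local_name: str
    participants: list = field(default_factory=list)

    def join(self, participant: Participant) -> None:
        self.participants.append(participant)

    def send(self, message: bytes) -> None:
        # Every participant gets identical data, so two slave Macs can follow
        # one timecode stream or layer the same part.
        for participant in self.participants:
            participant.deliver(message)


session = Session("Session 1")
mac_mini = Participant("Mac Mini")
power_mac = Participant("Power Mac G5")
session.join(mac_mini)
session.join(power_mac)

note_on = bytes([0x90, 60, 100])  # Note On, channel 1, middle C, velocity 100
session.send(note_on)
```

Both participants end up holding the same Note On message, which is exactly the property that makes shared timecode and layered instruments possible.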

The latency of incoming MIDI data for a selected Session is plotted as red lines on the black Latency bar in the MIDI Network Setup window, and ideally you want the latency of events to be as close to 0ms as possible. If you notice timing errors in the MIDI events that are sent across the network, you can double-click in the 'Latency adj.' column of the Participants list, next to the Participant you want to offset, and enter a number of milliseconds by which to offset incoming events to smooth out the timing errors. Entering a positive value will cause all incoming events from that Mac to be delayed by the given number of milliseconds.
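One plausible way to see how a fixed offset smooths out jittery arrivals is sketched below; it's an assumption for illustration, not Apple's documented algorithm. Each event is scheduled at its send time plus the offset, and as long as the offset exceeds the worst network delay, the original spacing between events is restored. The function name `play_times` and the delay figures are hypothetical.

```python
def play_times(events, adjust_ms):
    """Return the time at which each incoming event is played.

    events is a list of (sent_ms, network_delay_ms) pairs. Each event is
    scheduled for sent_ms + adjust_ms, but it can never play before it has
    actually arrived at sent_ms + network_delay_ms.
    """
    return [max(sent + adjust_ms, sent + delay) for sent, delay in events]


# Three events sent 10ms apart, arriving after 1, 4 and 2ms of network delay.
events = [(0, 1), (10, 4), (20, 2)]
print(play_times(events, 0))  # [1, 14, 22]: uneven spacing
print(play_times(events, 5))  # [5, 15, 25]: original 10ms spacing restored
```

The trade-off is that every event now arrives 5ms later, which is why you'd want the smallest offset that covers the worst delay shown on the Latency bar.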

A nice touch in the MIDI Network Setup window is the 'Live routings' option at the bottom of the window, which allows you to route the input or output of a Network MIDI Session to or from another MIDI Device on your Mac without having to run any other MIDI software. For example, if you want to play MIDI input from a keyboard and send this via a Session to another computer on the network (but without running a sequencer like Logic, for example), you select the appropriate Session, and then, in the 'Live routings' section at the bottom right of the MIDI Network Setup window, you set the uppermost pop-up menu (with the arrow pointing to the network symbol) to the MIDI port to which you've connected your keyboard.

Alternatively, if you want MIDI output from a Session on the network to be output to a sound module, or some other hardware sound source, then again, you simply choose the appropriate Session and, in the lower pop-up menu in the bottom right of the window, select the MIDI port where the sound source is connected. Any MIDI data received from this Session across the network will then be automatically output to the selected MIDI port.
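The two 'Live routings' menus amount to a small forwarding rule per Session. The Python sketch below is a hypothetical model of that rule, not the way Core MIDI implements it; the `LiveRouting` class and the port names 'PCR-1' and 'Sound Module' are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LiveRouting:
    """Hypothetical model of the two 'Live routings' menus for one Session."""
    to_network_from: Optional[str] = None  # upper menu: local port -> Session
    from_network_to: Optional[str] = None  # lower menu: Session -> local port


def live_route(routing: LiveRouting, source: str, message: bytes):
    """Return (destination, message) for an incoming message, or None.

    `source` is "network" for data arriving over the Session, otherwise the
    name of the local MIDI port the data came in on.
    """
    if source == "network":
        if routing.from_network_to is not None:
            return (routing.from_network_to, message)
        return None
    if source == routing.to_network_from:
        return ("network", message)
    return None


routing = LiveRouting(to_network_from="PCR-1", from_network_to="Sound Module")
# Keyboard data is forwarded to the Session; no sequencer needs to be running.
live_route(routing, "PCR-1", bytes([0x90, 60, 100]))
```

Leaving either menu unset (`None`) simply disables that direction, mirroring the way each pop-up menu can be left without a port selected.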

Here you can see the MIDI Network Setup page for a Mac sending MIDI data over a network. The receiving Mac Mini, from which the grab on the previous page was taken, is listed under Participants. Note the 'Live routings' settings that enable the MIDI data from an Edirol PCR1 keyboard to be transmitted across the Network via 'Session 1'.

Finally, if your Macs are connected to a network that's populated by many other people's Macs (as in many larger studio environments), there are some permission controls you can exert by using the MIDI Network Setup's Directory list. In addition to displaying available Bonjour Sessions, the Directory lets you add systems manually: click the '+' button underneath it, enter a Name to identify a Session on another computer and the host address of that computer, and click OK. The host address must include a port number to identify a specific Session on the computer, since the computer could be hosting multiple Sessions, potentially with other Macs. A neat side-effect of being able to enter a specific host address in this sheet is that you can even add the address of a computer that's not connected to your local network, and exchange MIDI data with another Mac somewhere else in the world!
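To show why a port number is needed alongside the host address, here's a minimal sketch of a manually added Directory entry, assuming the address is typed as `host:port`. The `DirectoryEntry` class, `parse_entry()` and the address `192.168.1.20:5004` are illustrative assumptions rather than anything taken from the AMS dialog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectoryEntry:
    name: str
    host: str
    port: int

    def __post_init__(self):
        # One Mac can host several Sessions, so the port is what identifies
        # the particular Session on that host.
        if not 0 < self.port < 65536:
            raise ValueError(f"invalid port number: {self.port}")


def parse_entry(name: str, address: str) -> DirectoryEntry:
    """Split an address typed as 'host:port' into a DirectoryEntry."""
    host, separator, port = address.rpartition(":")
    if not separator or not host:
        raise ValueError("address must include a port, as in host:port")
    return DirectoryEntry(name, host, int(port))


entry = parse_entry("Studio G5", "192.168.1.20:5004")
print(entry.host, entry.port)  # 192.168.1.20 5004
```

Because the entry only needs a reachable host and port, nothing in this model ties it to the local network, which is what makes the long-distance case mentioned above possible.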

Once you've added the other Sessions to the Directory, you can control who connects to Sessions on your Mac by selecting an option from the 'Who may connect to me' pop-up menu below the Directory. Setting this option to 'Anyone' will allow any other system to connect to a Session on that Mac, while selecting 'Only computers in my Directory' allows only those computers with the specified addresses and port numbers to connect to a Session. In this way, you can allow only a specific Mac to join a specific Session, blocking access to all other Sessions you might be hosting. Finally, you can set this permission option to 'No one', which doesn't allow anyone to connect to a Session on your Mac.
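The three 'Who may connect to me' options reduce to a simple check. This sketch is a hypothetical model, not Apple's code; peers are represented as assumed `(host, port)` pairs matching the Directory entries.

```python
from enum import Enum


class WhoMayConnect(Enum):
    ANYONE = "Anyone"
    DIRECTORY_ONLY = "Only computers in my Directory"
    NO_ONE = "No one"


def may_connect(policy: WhoMayConnect, peer: tuple, directory: set) -> bool:
    """Return True if a peer, given as (host, port), may join a local Session."""
    if policy is WhoMayConnect.ANYONE:
        return True
    if policy is WhoMayConnect.DIRECTORY_ONLY:
        # Both the address and the port must match a Directory entry.
        return peer in directory
    return False  # NO_ONE


directory = {("192.168.1.20", 5004)}
print(may_connect(WhoMayConnect.DIRECTORY_ONLY, ("192.168.1.20", 5004), directory))  # True
print(may_connect(WhoMayConnect.DIRECTORY_ONLY, ("192.168.1.20", 5006), directory))  # False
print(may_connect(WhoMayConnect.NO_ONE, ("192.168.1.20", 5004), directory))  # False
```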

Networking in Core MIDI is definitely a great addition to Mac OS X, and the only slight disappointment is that there's no compatibility with Windows-based systems, which would be perfect for those who use Mac-based sequencers with computers running the Windows-only Gigastudio software. I guess Apple's idea is that everyone needs to swap to entirely Mac-based systems...!

Installation & Requirements

The installation process for Tiger is pretty much the same as for previous versions of Mac OS X: you can either boot from the supplied DVD directly, or run the application provided on the disc that will restart your Mac and boot from the DVD automatically without you having to hold down the 'C' key. You read correctly; it's a DVD-ROM, not a CD-ROM. In fact, Tiger is supplied only on DVD, so those with older systems should note that they'll need a DVD drive in their Mac in order to install it. Other minimum requirements from Apple include having a PowerPC G3, G4 or G5 processor, a Firewire port, and 256MB of RAM. On this latter point, I would say 512MB is probably a more practical minimum for day-to-day applications, and 1GB for doing anything with audio or MIDI applications.

It's possible to upgrade an existing version of Mac OS X or to perform a clean install, either by archiving your previous System folder or by erasing your entire boot disk. I tried both procedures and had no problem upgrading Macs running Panther to Tiger, with no obvious issues or lost data arising from the upgrade. While upgrading might be the better option for general-purpose Macs, I still favour the clean install on dedicated audio and music systems. Wiping a general-purpose computer and starting again is a pain, but audio and music workstations tend to have less installed on them in the first place, so there is less to reinstall. And remember, many applications, and certainly drivers, will need upgrading for Tiger anyway, so why not get a completely clean system while you move to a new OS, new application versions and new drivers? Just remember to clone the disk when you've finished, using a utility such as Carbon Copy Cloner (www.bombich.com/software/ccc.html), to avoid having to repeat this tedious process in the future.

Before the installation process proper begins, you'll have the option of performing an Easy or Custom install, and, as with Panther (see Apple Notes November 2004 for more information), it's best to choose a Custom install to eliminate a large number of files you'll probably never need. Unless you plan on using your Mac in multiple languages or require fonts for Chinese, Korean, Arabic and so on, you can save a considerable amount of space on your hard drive: disabling Language Translations saves you 449MB, skipping Additional Fonts saves 129MB, and it's well worth weeding through the 1.2GB of printer drivers OS X would like to install by default. I usually keep the drivers for the printer brands I use myself, such as HP, and also the Gimp drivers required by some applications; installing just the HP, Canon and Gimp printer drivers requires 404MB.

In the end, my basic Tiger installation required just 1.7GB (only 200MB more than my basic Panther installation), saving an entire gigabyte on the drive. I later added the X11 window server (required for some applications ported from UNIX), which took up around 80MB, although it isn't necessary for any audio and MIDI software that I've seen.

After you've installed Tiger, and your Mac boots up for the first time running the new operating system, you'll need to enter the usual registration information and confirm various time, date and network settings. Users upgrading from a previous version of OS X will notice an Assistant appear so you can confirm your registration information, although it's possible to quit this Assistant application without completing the information if you wish. After this, if you've performed an upgrade rather than a clean install, you might notice your hard drives spinning away rather feverishly; this is due to the new Spotlight feature that basically needs to index your drives before it can work. I didn't see a way of skipping this step, but you can check on the progress by clicking the Spotlight icon at the top right of the menu bar. Spotlight can be useful, so your patience will be rewarded.

Core Audio Aggregation

As mentioned earlier, Core Audio under Tiger finally resolves the OS X restriction on using multiple simultaneous audio I/O devices. Rather than providing this solution such that each application developer has to rewrite their software to support it individually, Apple have elegantly implemented a new type of audio device that can be created by users from the Audio MIDI Setup window: the Aggregate device.

In many ways, Aggregate devices simply extend ideas that were already in Mac OS X, namely those based on the Hardware Abstraction Layer (or HAL). In computer science, a HAL is a software layer between the physical hardware and the software running on a system, so that the software can talk to the hardware in a consistent way without having to know the specifics of the actual hardware device. At the heart of Core Audio is a HAL, so that no matter what audio hardware device is connected to your Mac, and irrespective of its individual features, the device's Core Audio driver allows Core Audio to present the device to an application in exactly the same way as it would any other device. In other words, so long as an application supports Core Audio devices, it doesn't matter which Core Audio device you use: the application handles playing audio through your Mac's built-in headphone port no differently from playing it through a USB audio device.
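As a rough illustration of the HAL idea (in Python, not the Core Audio API), the sketch below defines one hypothetical `AudioDevice` interface and two made-up drivers with different internals. The application-level `play_silence()` function works with either without knowing which one it has been given.

```python
from abc import ABC, abstractmethod


class AudioDevice(ABC):
    """Hypothetical uniform interface: every driver presents the same methods."""

    def __init__(self, name: str, output_channels: int):
        self.name = name
        self.output_channels = output_channels

    @abstractmethod
    def write(self, samples: list) -> int:
        """Accept interleaved samples and return the number of frames written."""


class BuiltInAudio(AudioDevice):
    def __init__(self):
        super().__init__("Built-in Output", 2)
        self.dac_buffer = []  # stands in for the Mac's own audio hardware

    def write(self, samples):
        self.dac_buffer.extend(samples)
        return len(samples) // self.output_channels


class UsbAudio(AudioDevice):
    def __init__(self, name: str, output_channels: int):
        super().__init__(name, output_channels)
        self.packets = []  # stands in for transfers over the USB bus

    def write(self, samples):
        self.packets.append(tuple(samples))
        return len(samples) // self.output_channels


def play_silence(device: AudioDevice, frames: int) -> int:
    """Application code: identical whichever device the user picks."""
    return device.write([0.0] * frames * device.output_channels)


print(play_silence(BuiltInAudio(), 64))  # 64
print(play_silence(UsbAudio("USB Interface", 2), 32))  # 32
```

The driver-specific detail lives entirely inside each `write()` method, which is the separation a HAL is meant to provide.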

The Aggregate Device Editor sheet in Audio MIDI Setup enables you to configure Aggregate Devices. The upper list shows the available Aggregate Devices, where you can create new Devices or remove existing ones, while in the lower part of the sheet you can configure the Structure of the currently selected Aggregate Device.

As their name suggests, Aggregate devices simply combine multiple audio devices connected to your Mac as if they were one virtual audio device. So if you have two audio devices connected to your Mac, each offering two inputs and two outputs, you can now create an Aggregate Device in AMS that combines these two devices into one device with four inputs and four outputs. Core Audio, thanks to the HAL, presents an Aggregate Device to an application as if it were any other audio device, so when you select the Aggregate Device in your application, the application essentially thinks it's talking to a device with four inputs and four outputs. It's also possible for an application to know that it's talking to an Aggregate device, so that it can gather more information about the individual devices in the Aggregate for clocking and other properties, but the basic operation is exactly as described above.
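The channel arithmetic of an Aggregate device can be sketched as follows. It is a hypothetical model: the `Device` class and device names are invented, and the assumption that the members' channels are numbered one device after another reflects the order of the structure list rather than a documented Core Audio guarantee.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    inputs: int
    outputs: int


def make_aggregate(name, members):
    """Present several devices as one: the channel counts simply add up."""
    return Device(
        name,
        inputs=sum(d.inputs for d in members),
        outputs=sum(d.outputs for d in members),
    )


def output_channel_map(members):
    """Map each aggregate output channel (numbered from 1) to the member device
    and that device's own channel number, assuming list order is kept."""
    mapping = {}
    channel = 1
    for device in members:
        for local_channel in range(1, device.outputs + 1):
            mapping[channel] = (device.name, local_channel)
            channel += 1
    return mapping


interface_a = Device("Interface A", inputs=2, outputs=2)
interface_b = Device("Interface B", inputs=2, outputs=2)
aggregate = make_aggregate("Aggregate Device", [interface_a, interface_b])
print(aggregate)  # Device(name='Aggregate Device', inputs=4, outputs=4)
print(output_channel_map([interface_a, interface_b])[3])  # ('Interface B', 1)
```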

To create an Aggregate device, you select the Audio Devices page in AMS and select the Open Aggregate Device Editor option from the Audio menu, or hold down Shift and Command/Apple and hit the 'A' key. Clicking the button marked '+' below the (initially empty) Aggregate Devices list adds a new Aggregate device to that list. Similarly, you remove an Aggregate device by selecting it on this list and clicking the '-' button. It's worth noting that there's no warning when you do this, or any other way to bring back the Aggregate device afterwards other than by recreating it from scratch.

The structure (or configuration) of the currently selected Aggregate Device is shown in the lower part of the Aggregate Device Editor sheet and is a list of the audio hardware available to Core Audio (see the screenshot above). To add audio devices to the Aggregate device, you click the Use tick box in the structure list for every audio device you want to include, and as you do this, you'll notice that the number of inputs and outputs changes for the Aggregate device listed in the top part of the sheet.

Each Aggregate device uses one of the included hardware devices as a master clock source, and by default this is usually the clock of the Mac's built-in audio hardware. However, you can change the clock source by simply clicking the Clock radio button in the structure list for the device whose clock you want to act as the master. If you run into problems with clocking Aggregate devices, note that each device in the structure list also has a Resample option, which performs a sample-rate conversion at the current sample rate that effectively reclocks that device's incoming audio to the master clock.
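To see why a single master clock (or resampling) matters, consider two devices that are both nominally running at 44.1kHz but whose clocks differ slightly. The 50ppm mismatch used below is an assumed figure for illustration, not a measurement of any particular interface, and `resample_ratio()` only shows the ratio a sample-rate converter would have to apply; it is not Core Audio's resampler.

```python
def drift_samples(sample_rate_hz: int, seconds: int, ppm_error: int) -> int:
    """Whole samples by which a free-running device drifts away from the master
    clock after `seconds`, given a clock mismatch in parts per million."""
    return sample_rate_hz * seconds * ppm_error // 1_000_000


def resample_ratio(master_hz: float, device_hz: float) -> float:
    """Output samples per input sample needed to reclock a device's audio onto
    the master clock."""
    return master_hz / device_hz


# Assumed 50ppm mismatch between two nominally 44.1kHz devices.
print(drift_samples(44_100, 3_600, 50))  # 7938 samples (about 0.18s) after one hour
print(resample_ratio(44_100.0, 44_102.205))  # slightly below 1.0
```

Even a tiny mismatch adds up to an audible offset over a long session, which is why an Aggregate device either needs its members locked to one clock or needs the unlocked ones resampled.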

Once you've configured your Aggregate device, you simply click the Done button to close the Aggregate Device Editor sheet and return to the main AMS Audio Devices page. One of the neat things about Aggregate devices is that it's possible to create multiple Aggregates on the same system comprising different combinations of the audio devices attached to your computer, for different possible tasks.

Aggregate Devices can be selected in applications just the same as any other Audio Device, as you can see here in Apple's Logic Pro 7.

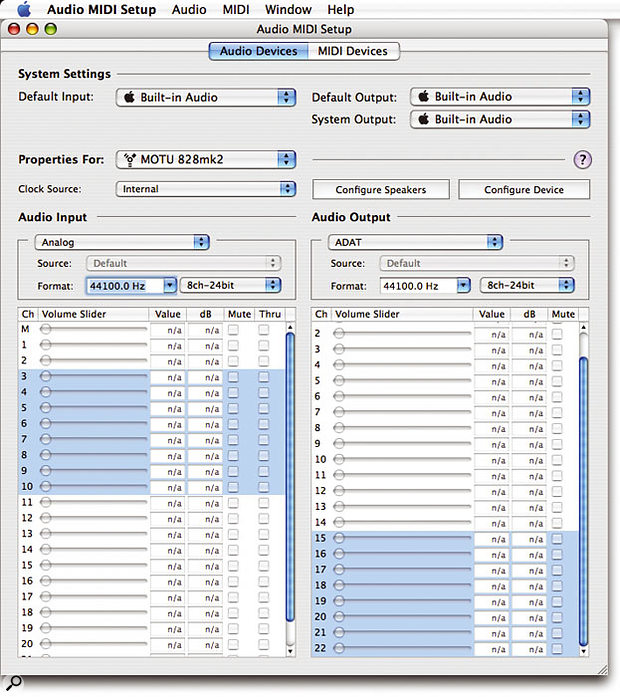

At the top of the Audio Devices page in AMS is a System Settings section where you can choose the audio devices used for the Default Input and Output by Mac OS X applications that don't specifically let you set which audio devices to use, such as iTunes (see the screenshot on page 146). You can also set an audio device to use as the System Output, which is the device through which you'll hear various system sounds, such as alerts and user-interface sound effects, as configured in the Sound Effects page of the Sound preference panel. While you can select an Aggregate device for the Default Input, the Default Output, or both, you can't choose an Aggregate device for the System Output, which some might consider a blessing!

The Audio Devices page also offers controls for setting various audio device properties, such as master levels, sample rate and bit depth. You can see the properties for an audio device by choosing that device from the 'Properties For' pop-up menu underneath the System Settings section, which lists all Core Audio audio devices — including Aggregate Devices. If you select an Aggregate, you can modify the structure properties for this device without opening the Aggregate Device Editor sheet by clicking the Configure Device button in the main Audio Devices page instead. While this also opens a sheet, you don't get the option of adding or removing Aggregate devices, which provides a slightly safer way of configuring these devices.

For devices that have their own configuration window or sheet, the Configure Device button will also be visible when that device is selected in the 'Properties For' pop-up menu. You can also select the clock source (where the device supports different clock sources) underneath the 'Properties For' heading, although this will be greyed out if an Aggregate device is selected, even if the master clock device in that Aggregate offers a choice of clock sources. In this case, you need to switch the 'Properties For' menu back to the individual device before you can change its clock source.

Two columns in the lower part of the Audio Devices page show the Audio Input and Audio Output properties for the device selected in the 'Properties For' pop-up menu, both of which are laid out identically. At the top of the pane is a pop-up menu that selects the Stream to be configured. Which brings me to...

OpenAL

Tiger's Open Audio Library, or OpenAL, is designed to make it easier for developers to add audio to applications, especially where sound sources need to be placed in a three-dimensional space. Games have been the main driving force behind OpenAL's development, the aim being a common, cross-platform way for developers to provide 3D sound in applications. There have been plenty of non-Apple solutions to this problem, such as Creative Labs' EAX and Microsoft's DirectSound3D technologies, but using these means that developers have to rewrite parts of their code for different platforms.

The development of OpenAL started in 1998-99 at Loki Entertainment Software, with Creative Labs getting involved for the 1.0 specification, which was released in 2000. OpenAL is similar in conception to OpenGL (Open Graphics Library), a cross-platform standard for 3D graphics that has hardware acceleration support in most graphics cards on the market today. When you want to draw a cube in OpenGL, for example, the basic code looks the same no matter what computer or graphics hardware you're using. And just as video cards usually offer hardware acceleration for OpenGL, there has also been hardware support for OpenAL from manufacturers such as Creative Labs and Nvidia.

Mac developers have been able to download and use OpenAL for some time now, and OpenAL was even supported by Creative Labs back in the OS 8 and 9 days with the company's Sound Blaster Live! card. The Mac OS X OpenAL library for developers available from Creative Labs requires at least Mac OS 10.2.8, along with the Core Audio enhancements available with QuickTime 6.4, although Apple now include OpenAL v1.2 with Tiger, and you'll notice an OpenAL framework if you go lurking in the System/Library/Frameworks folder.

For more information about OpenAL, visit www.openal.org, and for more about OpenAL in Mac OS X, take a look at Apple's developer FAQ at http://developer.apple.com/audio/openal.html.

Streams & Streaming

In Core Audio speak, each audio Device is regarded as being made up of multiple audio Streams, which deal with how audio data is passed between the Core Audio driver and the application. The reason for this hierarchy of Devices and Streams is easier to understand if you consider an audio device that supports sampling rates of up to 96kHz, with eight channels of analogue I/O and a single pair of ADAT ports. At a sampling rate of 44.1kHz you get 16 channels of audio (eight analogue plus eight ADAT); however, if you change the sampling rate to 88.2kHz, two ADAT channels are required to send and receive a single channel's worth of 88.2kHz audio. Therefore, at 88.2kHz you get 12 channels of audio from your device (eight analogue plus four ADAT).
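
The channel arithmetic can be sketched in a few lines of Python. This assumes the hypothetical interface described above (eight analogue channels and one eight-channel ADAT port) and the usual dual-wire scheme, often called S/MUX, in which a pair of ADAT channels carries one channel at 88.2 or 96kHz; the function names are illustrative only.

```python
def adat_channels(sample_rate_hz, ports=1):
    """Audio channels carried by 8-channel ADAT ports at a given sampling rate."""
    if sample_rate_hz <= 48_000:
        return 8 * ports
    if sample_rate_hz <= 96_000:
        # Dual-wire (S/MUX): two ADAT channels per audio channel.
        return 4 * ports
    raise ValueError("this hypothetical interface stops at 96kHz")


def total_channels(sample_rate_hz, analogue=8, adat_ports=1):
    """Analogue channels are unaffected; only the ADAT count changes."""
    return analogue + adat_channels(sample_rate_hz, adat_ports)


for rate in (44_100, 48_000, 88_200, 96_000):
    print(f"{rate / 1000:g}kHz: {total_channels(rate)} channels")
```

Because only the ADAT bank's channel count changes with the sampling rate, it makes sense for a driver to expose the ADAT channels as a separate Stream from the analogue ones.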

The Audio Input and Output properties for the Audio Device selected in the 'Properties For' pop-up menu are listed in the lower part of the Audio MIDI Setup application's Audio Devices page. Notice how the channels in the currently selected input and output Streams are highlighted with a blue background: using a MOTU 828 MkII, the Analogue stream is selected for the Input and the ADAT stream is selected for the Output.

The sampling rate and bit depth are described as the Format in Core Audio, and each Stream within a Core Audio Device can theoretically have a different Format. So when writing a Core Audio driver, developers split the different banks of inputs and outputs into different Streams within the single device so that each Stream can contain a different number of channels with a different Format. If you take MOTU's 828 MkII Firewire audio device as an example, this device has five input Streams (Mic/Guitar, Analogue, S/PDIF, Mix1 and ADAT) and five output Streams (Main Out, Analogue, S/PDIF, Phones, ADAT) to support its capabilities.

Underneath the pop-up menu where you select a Stream are two pop-up menus to configure the sampling rate and bit depth for that Stream; however, despite the notional ability for different Streams to have different Formats, changing the format for one Stream will change other Streams to the same Format in most current Core Audio drivers. As already mentioned, the best example of different Streams having different Formats is where you need to select dual-wire operation for ADAT ports, which really shouldn't affect any other I/O ports.
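
To make the Device/Stream/Format relationship concrete, here's a small Python model. The class names, the two-Stream device and the linked_formats switch are all hypothetical, standing in for the two driver behaviours described above: most current drivers apply a Format change to every Stream, whereas a driver that honoured per-Stream Formats would leave the other Streams alone.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Format:
    sample_rate: float
    bit_depth: int


@dataclass
class Stream:
    name: str
    channels: int
    format: Format


@dataclass
class Device:
    name: str
    streams: list = field(default_factory=list)
    linked_formats: bool = True  # how most current drivers behave

    def set_format(self, stream_name, new_format):
        """Change one Stream's Format; a 'linked' driver applies it to all Streams."""
        for stream in self.streams:
            if self.linked_formats or stream.name == stream_name:
                stream.format = new_format

    def formats(self):
        return {s.name: s.format for s in self.streams}


def make_device(linked):
    start = Format(44_100.0, 24)
    return Device("Hypothetical interface",
                  [Stream("Analogue", 8, start), Stream("ADAT", 8, start)],
                  linked_formats=linked)


hi_res = Format(88_200.0, 24)
for linked in (True, False):
    dev = make_device(linked)
    dev.set_format("ADAT", hi_res)
    print("linked" if linked else "independent", dev.formats())
```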

Below the Stream parameters in the System Settings is a list of the total input or output channels available with the device, and you'll notice in the screenshot overleaf that the channels belonging to the currently selected Stream are highlighted with a blue background. Depending on the functionality offered by the Core Audio driver, it's possible to change the gain of channels, mute and unmute channels, and set the through option to automatically pass input channels to the corresponding output channels on the audio hardware. Options the driver doesn't support are greyed out.

Part of what makes it easy for Aggregate Devices to be supported in Core Audio is the ability for a single Device to have multiple Streams. For example, if you have two audio Devices, each with single input and output Streams, the Aggregate Device will be presented as a single Device with two Streams for both input and output: one for each Device. If you create an Aggregate Device with your Mac's built-in audio hardware and a MOTU 828 MkII, for example, you'll end up with a Device consisting of six Streams for both input and output. The only thing to bear in mind is that not all Core Audio drivers name Streams appropriately, notably those that only contain single Streams: in these cases you end up with unhelpful names such as 'Stream n', where n is the number of the Stream in that Device.
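
Here's a rough Python model of how an Aggregate Device might gather up its members' Streams. It's a sketch rather than Core Audio's actual implementation: the helper names are hypothetical, the 828 MkII Stream names come from the list earlier in this section, and I've assumed that the built-in audio driver leaves its single Streams unnamed, so they pick up a generic 'Stream n' label numbered by position in the aggregate.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    inputs: list   # one entry per Stream; None where the driver gives no name
    outputs: list


def label_streams(names):
    """Replace missing Stream names with a generic 'Stream n' label (1-based)."""
    return [name or f"Stream {n}" for n, name in enumerate(names, start=1)]


def make_aggregate(name, devices):
    """Present several Devices as one, keeping every member's Streams in order."""
    inputs = [s for dev in devices for s in dev.inputs]
    outputs = [s for dev in devices for s in dev.outputs]
    return Device(name, label_streams(inputs), label_streams(outputs))


built_in = Device("Built-in Audio", [None], [None])
motu = Device("828 MkII",
              ["Mic/Guitar", "Analogue", "S/PDIF", "Mix1", "ADAT"],
              ["Main Out", "Analogue", "S/PDIF", "Phones", "ADAT"])

agg = make_aggregate("Aggregate Device", [built_in, motu])
print(len(agg.inputs), agg.inputs)
print(len(agg.outputs), agg.outputs)
```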

Compatibility

So far it seems that Tiger has broken fewer music and audio applications and drivers than previous releases; many third-party developers were ready at the release of Tiger to supply the necessary patches and driver updates. Generally speaking, Core MIDI drivers seem unaffected by the move to Tiger, but here's a brief rundown on the current state of Tiger compatibility from various manufacturers at the time of writing (mid-May 2005).

- APPLE

As one would expect (or at least hope), Logic v7.0 and v7.01 seem to work fine under Tiger; sadly, I didn't have a chance to try 7.1 in time to comment. On the hardware side, there has been no mention of driver updates or incompatibilities for Emagic's previous interfaces, and using an MT4 MIDI interface with a laptop running Tiger didn't seem to be a problem.

- BIAS

Both the current shipping versions of Sound Soap 2 and Sound Soap Pro are Tiger-compatible, while Peak and Deck will require 4.14 and 3.5.4 updates respectively that should be available by the time you read this. Users of Peak 4.13 and Deck 3.5.3 can apparently experience problems when recording via a USB audio device in Tiger, although it's possible to work around this issue by creating an Aggregate Device (as described elsewhere in this article) containing just the USB audio device with which you're trying to record.

- DIGIDESIGN

At the time of writing, Pro Tools, the Digidesign Core Audio driver, and the Digidesign Audio Engine (DAE) used by other applications such as Logic Pro and Digital Performer are not yet qualified for 10.4, although hopefully this situation will have changed (or be about to change) by the time you read this. On the plus side, I did successfully install the 10.3 version of Digidesign's Core Audio driver on 10.4 and had audio output to an Mbox from iTunes.

- EDIROL

While Edirol's previous Mac OS X MIDI drivers remain compatible with Tiger, and some of the company's audio devices use the default Core Audio driver included with Tiger (FA101, FA66, UA1X, UA1D and UA3D), Edirol have posted Tiger-compatible versions for all other audio and MIDI + Audio devices.

- M-AUDIO

At the time of writing, M-Audio were verifying compatibility of the company's MIDI and audio devices with Tiger, saying that Tiger-compatible drivers would, where necessary, be available shortly.

- MOTU

Users of MOTU's Firewire-based audio interfaces will need to download v1.2.5 of the Firewire audio driver and console package, while those with either a PCI324 or PCI424 audio card will need v1.08 of the PCI driver and console package, which offers support for devices going back to the original 2408 audio interface. MOTU's current v1.31 MIDI interface driver is already Tiger-compatible, and so is the current v4.52 shipping version of Digital Performer. In fact, version 4.52 is the first version of DP to be fully compatible with Tiger, so those who haven't downloaded the update will need to do so, and MOTU recommend removing and reinstalling DP when upgrading an existing system to Tiger.

A public beta of MOTU's Mach Five sampler v1.22 can be downloaded from the company's web site and offers Tiger compatibility for the MAS, VST and Audio Units versions. The only incompatibility with this version is when it runs as an Audio Unit in Logic 7.0.1 under Tiger, although MOTU are hoping to resolve this issue fairly quickly. MX4 v2 is compatible with Tiger for the Audio Units and MAS formats, while an RTAS-compatible v2 is expected to ship when Pro Tools becomes available for Tiger.

Of MOTU's remaining applications, Unisyn v2.11 is already Tiger-compatible and users should make sure they have the latest version to ensure compatibility. Audiodesk users can download a Tiger-compatible v2.04 public beta and, as with DP v4.52, MOTU recommend removing the current version of Audiodesk before installing the new version if you've chosen to upgrade to Tiger from a previous version of Mac OS X.

As a side note, all Tiger-compatible versions of MOTU's applications, plug-ins and drivers are backwardly compatible with previous versions of Mac OS X, including Jaguar.

- NATIVE INSTRUMENTS

Most products from Native Instruments are already Tiger-compatible, with Traktor DJ Studio 2.6 and Traktor FS 2 requiring updates that can be downloaded from Native Instruments' Update Manager on the company's web site. The company advise users of Final Scratch v1.x that the Scratch Amp drivers are not approved for Tiger, or Panther for that matter, and that they may experience the odd hardware initialisation error before they can start using the application. Nice!

A small update is also required for users wishing to perform a clean install of Komplete 2 on Tiger (rather than those upgrading to Tiger on a machine that already has Komplete 2 installed) and fixes for Audio Units validation errors are apparently on the way for Battery 2 users and those with products based on Kontakt Player, such as Garritan Personal Orchestra.

- SIBELIUS

Sibelius claim that all of the company's products have been tested with Tiger and that no serious compatibility problems have been found, with the exception of Sibelius Starclass, which apparently reports problems playing sounds with Quicktime when Adobe's Acrobat Reader v7 is installed. The solution is to remove version 7 and download and install the older version 6 from www.adobe.com/products/acrobat/alternate.html instead.

- STEINBERG

While Steinberg have yet to comment on testing with the final versions of Tiger, they've indicated in their support forum that Cubase, Nuendo and other products have been tested with pre-release versions of Tiger and seem to be fine. Products that showed problems with the pre-release version of Tiger are Halion Player, the Nuendo Dolby Digital Encoder and the driver for the MI4 audio hardware that's part of the Cubase System 4 bundle. Full compatibility information will be available from Steinberg's web site in due course, and the company has also indicated that future Cubase and Nuendo releases will support Quartz 2D, which should noticeably speed up the user interface.

Speaker Output Configuration

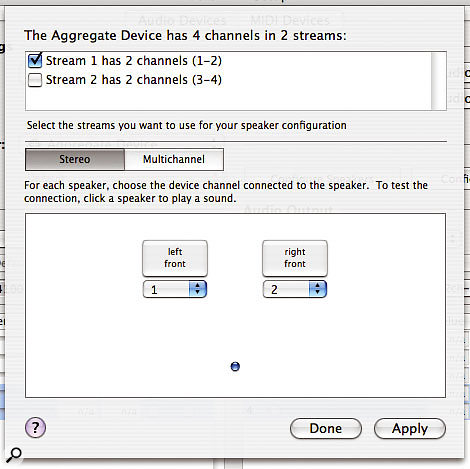

Panther introduced a way to configure which outputs on your audio devices are assigned to which speakers in audio applications with a single stereo or multi-channel audio output. This is the Configure Speakers sheet, which is opened from the Configure Speakers button for the currently selected audio device in the 'Properties For' pop-up menu. While this doesn't have too many uses in programs like Logic that deal with specific input and output audio channels on your hardware, it is useful to know about, since musicians and audio engineers are the ones most likely to need to manage multi-channel audio devices on a Mac.

One simple example where Configure Speakers can be useful is with an application like iTunes. Say you have an audio device with eight outputs: by default, iTunes will always play out of the first two outputs, but what if you want to monitor via outputs seven and eight instead?

The Configure Speakers sheet enables you to specify what outputs on your Audio Device are used by applications that output audio to Mac OS X's stereo or multi-channel speaker arrangement. Notice how multiple Streams can be selected in the upper part of the sheet if the Audio Device has multiple Streams, as you can see here for an Aggregate Device.

In the Configure Speakers sheet, if you're using a device with multiple Streams, you enable the Stream with the outputs you want to assign in the upper part of the sheet; in this example, you need to enable the Stream that encompasses outputs seven and eight on your audio device. Next, in the lower part of the sheet, you'll see a graphical representation of the speaker arrangement Mac OS X applications use when outputting audio: when an application like iTunes outputs stereo audio, it's this model that's used for the audio output.

The speakers are labelled with their configuration (left front and right front in the case of simple stereo) and are positioned in relation to the listener, who is illustrated with a blue dot (see the screenshot on the right). Clicking on the speaker button sends white noise to that speaker until you click it again, and you can set which output on your audio device is used for this speaker in the pop-up menu below that speaker. In this example, you'd select '7' in the pop-up menu for the left speaker and '8' for the right speaker, and click Apply. All stereo output from basic audio applications that don't specify audio outputs themselves (iTunes, Quicktime Player, DVD Player and so on) will now take place via outputs seven and eight on your audio hardware. Programs that address specific outputs on Core Audio devices, like Logic, will ignore these settings.
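
Conceptually, the speaker assignment is just a lookup from each speaker to an output number. The Python sketch below models that lookup for the iTunes example; the function and dictionary names are hypothetical, and it says nothing about how Core Audio actually stores the setting (as far as I know, the stereo pair is exposed to developers as a device property, kAudioDevicePropertyPreferredChannelsForStereo).

```python
def route_stereo(frame, speaker_map, num_outputs):
    """Place one stereo sample frame (left, right) on a multi-output device.

    speaker_map maps each speaker to a 1-based output number, as chosen in
    the pop-up menus of the Configure Speakers sheet."""
    out = [0.0] * num_outputs
    left, right = frame
    out[speaker_map["Left Front"] - 1] = left
    out[speaker_map["Right Front"] - 1] = right
    return out


default_map = {"Left Front": 1, "Right Front": 2}
monitor_map = {"Left Front": 7, "Right Front": 8}

frame = (0.5, -0.5)
print(route_stereo(frame, default_map, 8))  # [0.5, -0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(route_stereo(frame, monitor_map, 8))  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, -0.5]
```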

The process of setting the assignments for applications that output basic multi-channel audio (such as DVD Player and the new version 7 Quicktime Player) is the same as for stereo configurations. In the Configure Speakers sheet, click the 'Multi-channel' button and select a multi-channel configuration from the pop-up menu that now appears beside the button. You'll only be able to select multi-channel configurations that fit the number of output channels on your audio device, so a device with four outputs will only allow you to set stereo and quadraphonic configurations, for example. As before, getting it all to work is a question of enabling the required Streams at the top of the sheet, selecting the multi-channel configuration from the pop-up menu, and then assigning the outputs to the speakers in the lower part of the sheet.
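
The rule for which multi-channel configurations are offered can be sketched as a simple filter on the number of available outputs. The configuration names and speaker counts below are an illustrative list, not a definitive copy of Audio MIDI Setup's menu, and the function name is hypothetical.

```python
# Illustrative speaker counts for common configurations (not Apple's exact menu).
CONFIGURATIONS = {
    "Stereo": 2,
    "Quadraphonic": 4,
    "5.1 Surround": 6,
    "6.1 Surround": 7,
    "7.1 Surround": 8,
}


def available_configurations(output_channels):
    """Configurations that need no more speakers than the device has outputs."""
    return [name for name, speakers in CONFIGURATIONS.items()
            if speakers <= output_channels]


print(available_configurations(4))  # ['Stereo', 'Quadraphonic']
print(available_configurations(8))
```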

Tiger's Built-In Audio Units

Apple include many Audio Units with Tiger, most of which are self-descriptively named, as detailed in the following list. You'll notice many Audio Units that don't usually get presented in your Audio Units host applications. Audio Units included with Panther are indicated with an asterisk.

OUTPUT

- HALOutput.

- Default Output.

- System Output.

- Generic Output.

MUSIC DEVICE

- DLSSynth (plays back a Downloadable Sounds file containing sample-based instruments, such as the Quicktime Music Synthesizer bank).

FORMAT CONVERTER

- AUConverter.

- Varispeed.

- DeferredRenderer.

- TimePitch.

- Splitter (takes an input and splits it into multiple outputs, such as a mono input to a stereo output).

- Merger (the opposite of Splitter: takes multiple inputs and merges them into one output, such as a stereo input to a mono output).

EFFECT

- AUBandpass.

- AUDelay.

- AUDynamicsProcessor.

- AUFilter.

- AUGraphicEQ.

- AUHighShelfFilter.

- AUHipass.

- AULowpass.

- AULowShelfFilter.

- AUMatrixReverb.

- AUMultibandCompressor.

- AUNetSend.

- AUParametricEQ.

- AUPeakLimiter.

- AUPitch.

- AUSampleDelay.

MIXER

- StereoMixer.

- 3DMixer.

- MatrixMixer.

GENERATOR

- AUAudioFilePlayer.

- AUNetReceive.

- AUScheduledSoundPlayer.

Audio Units

Audio Units, as the OS X audio plug-in standard, has had a somewhat tumultuous journey compared with other plug-in formats. A great deal of development was carried out during the early 10.0 and 10.1 releases of OS X, which, coupled with third-party developer feedback, culminated in a v2 revision of Audio Units with the release of Mac OS 10.2. Since few v1 Audio Units plug-ins existed, this didn't create too many problems for developers, and the specification settled down during 10.2 and most of 10.3, until Apple pushed developers into making their Audio Units plug-ins pass Apple's validation tool by making validation part of Logic 7's start-up procedure. Plug-ins that failed were set to one side, requiring manual user activation to bring them back into the application. Although this created a few problems for users in the interim, overall it was a good move, and encouraged the development of 'fully legal' Audio Units plug-ins to improve overall system stability.

While many audio plug-in formats were created for specific purposes, such as processing incoming audio to create an effect, the Audio Units format was created to be a broad container for a variety of different audio tools. There were actually seven different types of Audio Units in the specification released with Panther, and there are nine in Tiger. The original seven types are: Effect (one of the most common types, this is a standard process that takes an incoming audio signal and creates an outgoing audio signal), Music Device (the other common type, which is used to implement software instruments), Music Effect (which is similar to Effect, but allows a MIDI input for controlling the effect's parameters), Output (which can send an incoming audio signal to an output stream on a Core Audio Device or to a file), Format Converter (to implement a utility that converts audio in one format into another, such as a sample-rate converter), and the Mixer and Panner types, which are fairly self-explanatory.

A full list of the Audio Units plug-ins that ship with Tiger can be found in the box opposite, and Tiger also adds two new Audio Units types. There's Offline Effect, for creating effects that work on an off-line audio stream, such as when processing files, and there's Generator, which generates an audio output but doesn't require an audio or MIDI input to do so (like a test-tone signal generator). Tiger ships with two examples of the new Generator type, AUAudioFilePlayer and AUNetReceive, the latter of which I'll discuss in the next section. In order to use these Audio Units, applications need to be written to support these new types. However, as you might expect, Apple's own applications, Garage Band and Logic, both support AU Generators in their latest versions, 2.01 and 7.1 respectively.
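
Under the hood, a host tells these types apart by a four-character type code. The Python sketch below lists the codes behind Apple's kAudioUnitType_* constants for all nine types and packs one into the 32-bit value Core Audio actually uses; the dictionary and helper names are my own. A host that supports Generators simply has to accept components of type 'augn' as well as the familiar 'aufx' and 'aumu'.

```python
import struct

# Four-character codes behind Apple's kAudioUnitType_* constants.
AU_TYPES = {
    "Output": "auou",
    "Music Device": "aumu",
    "Music Effect": "aumf",
    "Format Converter": "aufc",
    "Effect": "aufx",
    "Mixer": "aumx",
    "Panner": "aupn",
    "Offline Effect": "auol",  # new in Tiger
    "Generator": "augn",       # new in Tiger
}


def four_char_code(code):
    """Pack a four-character code into a big-endian 32-bit integer (an OSType)."""
    if len(code) != 4:
        raise ValueError("four-character codes must be exactly four characters")
    return struct.unpack(">I", code.encode("ascii"))[0]


for kind, code in AU_TYPES.items():
    print(f"{kind:<16} '{code}' = 0x{four_char_code(code):08X}")
```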

Here you can see AUNetSend in Garage Band where it's used as an insert effect on the Drum Kit Track. The Status indicates 'Connected' when the plug-in is connected to a corresponding AUNetReceive plug-in.

Potentially the most intriguing Audio Units plug-ins included in Tiger are AUNetSend and AUNetReceive, which, as their names imply, can be used to send and receive audio streams across a network. Basically, audio passes through the AUNetSend plug-in, which can be used as an insert effect, for example, and while this plug-in carries out no processing on the actual audio signal, it does pass any incoming audio to a connected instance of AUNetReceive running within any Audio Units-compatible application on the network. However, you don't have to have a network of Macs for these Audio Units to be useful, as you can also send audio from AUNetSend to AUNetReceive with both plug-ins running under the same application on the same computer.

To set up AUNetSend, you simply add it as an insert effect to an audio channel you want to transmit over the network. You can set a data format for the audio being transmitted: although it defaults to 16-bit integer PCM, you can also choose from higher bit-depth PCM formats, 16- or 24-bit Apple Lossless, µ-Law, IMA 4:1, or AAC compression at data rates from 32 to 128 kilobits per second. The Bonjour Name, as with MIDI networking in Core MIDI, is the name that will identify this audio stream to AUNetReceive, and AUNetSend won't actually start sending audio until it's connected to an instance of AUNetReceive.
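
Why the choice of data format matters becomes clear with a little arithmetic: fixed-width PCM needs sample rate × bits per sample × channels bits every second. Here's a small Python helper (its name is hypothetical) comparing the PCM-style options for a stereo stream at 44.1kHz; the compressed formats trade some CPU time, and in AAC's case some quality, for a far lower data rate.

```python
def pcm_kbps(sample_rate_hz, bits_per_sample, channels):
    """Data rate of a fixed-width PCM stream in kilobits per second."""
    return sample_rate_hz * bits_per_sample * channels / 1000


for label, bits in [("32-bit PCM", 32), ("24-bit PCM", 24),
                    ("16-bit PCM", 16), ("µ-Law (8 bits per sample)", 8)]:
    print(f"{label}: {pcm_kbps(44_100, bits, 2):.1f} kbps")
```

Even 16-bit stereo PCM, at roughly 1.4Mbit/s, fits comfortably on a 100Mbit Ethernet LAN, so the compressed formats are most attractive over slower links, such as a connection to a computer outside your LAN.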

Here's the corresponding AUNetReceive plug-in running as a Generator in Garage Band, but on a different Mac.

All you need to do now is open an instance of AUNetReceive on another computer on the network, or the same computer — but remember that you need a host application compatible with Audio Units Generator plug-ins to do this. The Directory will list all available AUNetSend streams by their Bonjour names, and you can join a stream by selecting it and clicking Select Host. As with MIDI networking, you can also add computers and streams to the Directory manually by clicking the plus '+' button, which also means that it's possible to specify the address of a computer that's not connected to your LAN.

Core Audio Format (CAF) Files

Tiger introduces a new file format for storing audio data known as CAF (Core Audio Format), which is a 64-bit file format capable of storing 'a thousand channels of audio for a thousand years in a single file' according to Apple, in both uncompressed and compressed formats such as Apple Lossless and AAC. Quicktime 7 offers support for CAF files, although there is no support in any other application, including Apple's own offerings, at the time of writing.

While little information is available yet as to the specifics of this new audio file format, CAF is pretty similar to other audio file formats, such as the Broadcast Wave file format, and consists of a series of data chunks. One chunk stores the actual audio data, while another describes the format of the audio, such as the sample rate, bit depth, number of channels, and other settings associated with streaming the file across a network. Further chunks describe other characteristics of the audio stored in the file, such as an overview, so that the data for displaying the audio on screen is stored with the audio rather than each application having to create it from scratch when it opens the file.
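
To show what 'a series of data chunks' means in practice, here's a Python sketch that builds a tiny CAF file in memory and walks its chunks. It assumes the layout given in Apple's Core Audio Format specification: a 'caff' file type, a 16-bit version and 16-bit flags, then chunks that each start with a four-character type and a signed 64-bit big-endian size, including a 32-byte 'desc' (audio description) chunk and a 'data' chunk that begins with a 32-bit edit count. The helper names are hypothetical; overview ('ovvw'), instrument ('inst'), marker ('mark') and region ('regn') chunks would simply show up as further entries.

```python
import struct

DESC = ">dIIIIII"  # sample rate, format ID, flags, bytes/packet, frames/packet, channels, bits


def chunk(kind, body):
    """A CAF chunk: four-character type, signed 64-bit big-endian size, body."""
    return kind + struct.pack(">q", len(body)) + body


def build_caf(sample_rate, channels, bits, pcm):
    """Build a minimal big-endian integer linear PCM CAF file in memory."""
    header = b"caff" + struct.pack(">HH", 1, 0)  # version 1, flags 0
    frame_bytes = channels * bits // 8
    desc = struct.pack(DESC, float(sample_rate), int.from_bytes(b"lpcm", "big"),
                       0,  # format flags 0: big-endian integer samples
                       frame_bytes, 1, channels, bits)
    data = struct.pack(">I", 0) + pcm  # edit count, then the samples
    return header + chunk(b"desc", desc) + chunk(b"data", data)


def read_chunks(blob):
    """Return (type, body) pairs in file order."""
    if blob[:4] != b"caff":
        raise ValueError("not a CAF file")
    pos, chunks = 8, []
    while pos + 12 <= len(blob):
        kind = blob[pos:pos + 4].decode("ascii")
        (size,) = struct.unpack(">q", blob[pos + 4:pos + 12])
        pos += 12
        if size == -1:  # a final 'data' chunk of unknown length runs to end of file
            size = len(blob) - pos
        chunks.append((kind, blob[pos:pos + size]))
        pos += size
    return chunks


def describe(desc_body):
    rate, fmt, _flags, _bpp, _fpp, channels, bits = struct.unpack(DESC, desc_body)
    return {"sample_rate": rate, "format": fmt.to_bytes(4, "big").decode("ascii"),
            "channels": channels, "bits": bits}


caf = build_caf(44_100, 2, 16, bytes(40))  # ten frames of 16-bit stereo silence
for kind, body in read_chunks(caf):
    print(kind, len(body))
print(describe(read_chunks(caf)[0][1]))
```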

There's also an Instrument chunk to store data such as MIDI note values, velocities, gain adjustments and so on, if the file is to be used as part of a sampled instrument, and further chunks define a list of regions within the file or marker locations for storing loop points, program start and end points, marker start and end points, and so on. As well as storing marker points as positions in terms of the number of samples from the start of the file, CAF also allows SMPTE times to be recorded with sample-level sub-frame offsets (if required) and the original frame rate. This is an improvement over Broadcast Wave, where the time stamp is usually only represented in samples and the original frame rate is rarely stored, leaving it up to the programmer to reconstruct the original SMPTE time from a guess at the correct frame rate.
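
The difference that storing the frame rate makes is easy to demonstrate. The Python sketch below (the helper names are hypothetical, and it assumes non-drop-frame timecode at frame rates that divide evenly into 48kHz) converts a SMPTE time plus a sub-frame sample offset into a sample position, then reads that position back at the correct and at an incorrect frame rate.

```python
def samples_per_frame(fps, sample_rate):
    if sample_rate % fps:
        raise ValueError("sketch assumes a whole number of samples per frame")
    return sample_rate // fps


def smpte_to_samples(hh, mm, ss, ff, fps, sample_rate, offset=0):
    """Non-drop-frame SMPTE time plus a sub-frame sample offset -> sample position."""
    frames = ((hh * 60 + mm) * 60 + ss) * fps + ff
    return frames * samples_per_frame(fps, sample_rate) + offset


def samples_to_smpte(position, fps, sample_rate):
    """Sample position -> (hh, mm, ss, ff, sub-frame sample offset)."""
    frames, offset = divmod(position, samples_per_frame(fps, sample_rate))
    seconds, ff = divmod(frames, fps)
    minutes, ss = divmod(seconds, 60)
    hh, mm = divmod(minutes, 60)
    return hh, mm, ss, ff, offset


pos = smpte_to_samples(1, 0, 0, 12, fps=25, sample_rate=48_000, offset=100)
print(pos)                                # 172823140
print(samples_to_smpte(pos, 25, 48_000))  # (1, 0, 0, 12, 100): correct rate
print(samples_to_smpte(pos, 30, 48_000))  # (1, 0, 0, 14, 740): wrong rate guessed
```

The same sample position reads as 01:00:00:12 plus 100 samples at 25fps but 01:00:00:14 plus 740 samples at 30fps, which is exactly the ambiguity that storing the original frame rate removes.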

It will be interesting to see how this new file format develops as it gains support from other Mac applications in the audio, music and video markets.

Summary

As this article hopefully demonstrates, Mac OS X is the most feature-rich operating system for music and audio. That's not to say other operating systems are less suitable or perform less well for these purposes; it's just that Mac OS X includes more features specifically designed for professional audio and MIDI users, leaving developers free to use the OS-based features provided for them rather than having to take time to create their own, application-specific ones. You might ask why this matters to end users, but it means that under Mac OS X there is a more consistent approach to handling audio and MIDI hardware, along with plug-ins. Or rather there should be: unfortunately, major applications like Cubase still don't support Audio Units under Mac OS X, meaning that if you use Cubase for your music production and Final Cut Pro for video, you need a set of VST plug-ins for Cubase and a set of Audio Units for Final Cut Pro, whether wrappers are involved or not.

While no MIDI or audio applications are becoming Tiger-dependent at this point, Tiger is still a must-have upgrade for musicians and audio engineers. The general OS X improvements are nice, but the improved 64-bit support is going to help samplers and other memory-intensive applications running on G5-based Macs, and you can expect to see your applications' interfaces redrawing a little quicker if you have a graphics card that can take advantage of Quartz 2D Extreme. More specifically, I expect a great many people are going to be taking advantage of Core MIDI Networking and Aggregate Devices in Core Audio. It's nice to see Apple designing their OS with the professional audio market in mind.