Can you hear the difference between an MP3 and a WAV file? We explain how lossy audio data compression works, and how to spot the tell-tale signs it leaves behind.

Analogue consumer dissemination formats for audio have all had their problems. Listen to some vinyl and cassette and you're going to experience (on one format or the other) limited dynamic range, wow and flutter, clicks and pops, background noise, tonal inconsistencies, and degraded quality, to name a few. When Compact Disc came along, it offered a relatively convenient format with stable and consistent playback speeds, an increased dynamic range, no added format-induced background noise, and no degradation of the storage medium each time it was played. However, it was often criticised for sounding 'brittle' or 'harsh' in the early days — a characteristic that can be blamed on the early generations of analogue-to-digital (A-D) and digital-to-analogue (D-A) converters. Later generations of converters have sounded much better, making the CD a pretty good format compared to its predecessors.

The MP3 player, iPod, iTunes and various download formats are now ubiquitous parts of many consumers' listening experience, and let's not forget that many people experience music and other multimedia entertainment via streaming sources such as YouTube, Pandora, Spotify and SoundCloud. All these sources use 'lossy' data-compressed audio formats. So what does this compression do to your audio, what are the limitations of these formats, and to what audible artifacts does the data compression give rise?

Frequency Response & Dynamic Range

Let's first identify some key audio concepts and terminology, specifically as they relate to the notion of sound quality. Regarding frequency response, the theoretical range of human hearing is said, broadly, to be 20Hz-20kHz. Obviously, the lower the lowest frequency that an audio system can reproduce, and the higher the highest frequency an audio system can reproduce, the better. This applies both to the audio file format and to the recording and playback hardware, though in practice, the lower frequencies are usually more of a problem for the playback system's loudspeakers or headphones than for the format in which the music is stored and disseminated.

Frequency response isn't the only important factor, though. 'Real' acoustic sounds (ie. not those captured and then played back) can have an incredibly wide dynamic range, and the theoretical dynamic range of human hearing extends from 0dB SPL (Sound Pressure Level) to somewhere between 130 and 140 dB SPL. The dynamic range of an audio system is the span between the quietest sound it can store or reproduce (the point at which quiet material becomes buried or masked by the system's background noise) and the loudest sound it can store or reproduce before it overloads and distorts. By way of example, analogue tape can capture a dynamic range of anywhere between 50 and 70 dB (with 70dB rarely achievable even on the best systems).

Digital Audio Terminology

To put these frequency and amplitude units and specifications in a more modern context, let's define 'CD-quality' audio. The audio is stored digitally on a CD via a technique known as PCM, or Pulse Code Modulation. PCM data consists of snapshots of an audio waveform's amplitude measured at specific and regular intervals of time. The CD format takes 44,100 measurements of the waveform's amplitude per second, so is said to have a sample rate or sampling frequency (fs) of 44.1kHz. This is important, because the Nyquist Theorem states that the high-frequency limit of a PCM digital audio system is dictated by the sample rate: the sample rate must be more than double the highest frequency that will be recorded. So a 44.1kHz sample rate can theoretically store frequencies up to 22.05kHz; in practice, the anti-aliasing filters needed to keep higher frequencies out of the converter limit the usable range to a little above 20kHz, approximating the theoretical upper limit of the best human hearing.

1. PCM-encoded audio. The grey boxes represent samples, amplitude measurements taken at regular intervals of time. The captured or reconstructed analogue signal is depicted by the red line that runs through the first point of the grey box (ie. the point in time at which the sample begins).
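The Nyquist relationship can be checked with a couple of lines of arithmetic. The sketch below (the function names are mine, purely for illustration) computes the theoretical limit for a given sample rate, and shows where a tone above that limit would end up if no anti-aliasing filter removed it first: it 'folds back' into the audio band as an unrelated frequency. That is why converters filter out content above roughly 20kHz before sampling at 44.1kHz.

```python
def nyquist_limit(fs_hz: float) -> float:
    """Highest frequency a PCM system sampled at fs_hz can represent, in theory."""
    return fs_hz / 2.0


def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency at which a pure tone f_hz appears after sampling at fs_hz,
    assuming nothing filtered it out beforehand."""
    # Sampling cannot tell f apart from f + k*fs, nor f from fs - f,
    # so fold the tone into the range 0..fs/2.
    f = f_hz % fs_hz
    return fs_hz - f if f > fs_hz / 2 else f


fs = 44_100
print(nyquist_limit(fs))            # 22050.0
print(alias_frequency(18_000, fs))  # 18000: below the limit, unchanged
print(alias_frequency(25_000, fs))  # 19100: folds back into the audible band
```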

CD-quality audio uses 16 binary digits to represent the amplitude value of each measurement, or sample. This is known as the word length (often incorrectly described as 'bit depth'), and the greater the word length, the greater the dynamic range that can be captured. The 16-bit word length used on audio CDs allows for a theoretical signal-to-noise ratio of 93dB (96dB minus 3dB for dither). The dynamic range is actually slightly greater than this, as we can perceive sounds below the measured noise floor. Roughly, each 10dB increase in level equates to a perceived doubling of loudness, so although the CD is better than analogue tape in this respect, its dynamic range falls far short of the 140dB dynamic-range capability of the human ear.

The above should not be confused with bit rate, which is expressed in kbps (kilobits per second) and is a measure of the amount of data required to represent one second of audio. CD-quality stereo audio has a bit-rate of 1.4 Mb/second, as the following calculation shows:

16 (bits) x 44,100 (fs) x 2 (two channels in a stereo signal) = 1411.2kbps.

Remember this, because it will be significant later in the article.
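The same calculation, written as a small helper (a hypothetical name, just for illustration), makes it easy to compare other PCM formats, such as the 24-bit, 192kHz recording systems mentioned below:

```python
def pcm_bit_rate_kbps(word_length_bits: int, sample_rate_hz: int, channels: int) -> float:
    """Bit rate of uncompressed PCM audio in kilobits per second (1kbit = 1000 bits)."""
    return word_length_bits * sample_rate_hz * channels / 1000


print(pcm_bit_rate_kbps(16, 44_100, 2))   # 1411.2 (CD-quality stereo)
print(pcm_bit_rate_kbps(24, 192_000, 2))  # 9216.0 (24-bit, 192kHz stereo)
```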

PCM is a 'raw' or uncompressed format, meaning that all of the data that is captured is stored and all of it is used to recreate the waveform on playback. In other words, the bit-stream entering the system when recording is, theoretically, identical to the bit-stream reproduced by the system on playback. To put CD quality in context, most professional PCM audio recording systems currently use 24-bit word lengths — resulting in a theoretical 141dB (144 minus 3dB for dither) signal-to-noise ratio — and sample rates of up to 192kHz, for increased high-frequency response.
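The 93dB and 141dB figures follow from the rule of thumb that each bit adds about 6dB: the ratio between full scale and one quantisation step is 20log10(2^N). A minimal sketch, assuming the flat 3dB dither penalty quoted in this article (formulas quoted for a full-scale sine wave add a further 1.76dB or so):

```python
import math


def theoretical_snr_db(word_length_bits: int) -> float:
    """Full scale relative to one quantisation step: 20*log10(2**N), about 6dB per bit."""
    return 20 * math.log10(2 ** word_length_bits)


DITHER_PENALTY_DB = 3.0  # the article's round figure for the cost of adding dither

for bits in (16, 24):
    raw = theoretical_snr_db(bits)
    print(f"{bits}-bit: {raw:.1f}dB, about {round(raw) - DITHER_PENALTY_DB:.0f}dB with dither")
# 16-bit: 96.3dB, about 93dB with dither
# 24-bit: 144.5dB, about 141dB with dither
```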

PCM data is commonly stored as WAV or AIFF files, which are relatively large files when compared with compressed formats. That's no surprise, of course, because the whole point of audio data compression is to reduce file sizes, so that content can be more quickly downloaded over the Internet, and so that more songs can be stored on your iPod.

Compressed audio formats can be categorised as either 'lossy' (these include MP3, AAC, WMA, and Ogg Vorbis) or 'lossless' (such as FLAC, Apple's ALAC and MP3 HD). The lossy formats listed are examples of what we call perceptual audio encoding formats, which exploit the psychoacoustics of human hearing: assumptions are made about what we might hear and what we might not in different contexts, in order to determine which elements of data can be 'lost'.

Simply put, lossy formats always exhibit some quality loss, because the audio content exiting the decoder on playback is not the same as the audio content that originally went into the encoder. Whether an individual can actually perceive that quality loss is another matter, so let's explore what the effects of lossy data compression are, and how they manifest themselves in terms of audible artifacts.

Exploiting Psychoacoustics

Psychoacoustics is the study of how humans perceive sound, and it's relevant here because advocates of lossy data compression argue that when listening to CD-quality audio, it is impossible for our brains to perceive all the data reaching our ears. It is, therefore, unnecessary — the argument goes — to store and reproduce all of that data. But which data can be removed is another question, and this is why various psychoacoustic principles are exploited in different amounts by different perceptual audio coding algorithms. The main principles used are described below.

• Minimum Audition Threshold: The human ear is not equally sensitive to all frequencies. It is most sensitive at 1-5 kHz, and gets less sensitive in the extremes either side. By middle age, few people can hear above 16kHz, and even young people with good hearing perceive these high frequencies far less efficiently. Therefore, quieter content in frequency ranges to which we are less sensitive may be deemed imperceptible by the encoder — so it's not encoded.

However, our perception of how loud a frequency is relative to other frequency content occurring simultaneously changes with playback volume. For example, try listening to a track at a high playback level, and then turn it down and listen again. It's not just the overall level that sounds different: the quieter version will appear to have less bass and less crisp high-frequency detail. Minimum audition thresholds can therefore only be an indicator, not an absolute, because our ability to perceive details changes with playback volume, and the encoding algorithm cannot know how loud you might play something back! (For more information, search the Web for Fletcher-Munson equal loudness curves, or see the feature in SOS March 2012 on mixing for loudness at /sos/mar12/articles/loudness.htm, which explored this concept in more depth.)

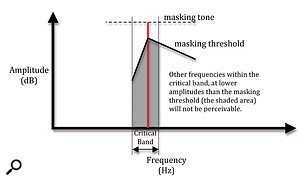

• Simultaneous Masking: Research has shown that the human ear responds to frequency content using what are known as critical bands. These are narrow divisions of the 20Hz-20kHz frequency spectrum. If a loud frequency component exists in one of these critical bands, that component creates a masking threshold that can render quieter frequencies in the same critical band imperceptible.

2. The masking threshold around a loud tone within a critical band.


• Temporal Masking: A loud sonic event will mask a quieter one if they both occur within a small interval of time, even if the louder event actually occurs after the quieter one. The closer in time the quieter sound is to the louder, masking event, the louder it needs to be in order to remain perceivable.
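The first two principles can be sketched numerically. The code below combines two widely quoted textbook approximations, Terhardt's curve for the threshold of hearing in quiet and Zwicker's formula for the critical-band (Bark) scale, with a deliberately crude triangular spreading function whose offset and slopes are my own illustrative assumptions. It is not the psychoacoustic model of any particular encoder, and it ignores temporal masking and playback level entirely.

```python
import math


def bark(f_hz: float) -> float:
    """Zwicker's approximation of the critical-band (Bark) scale."""
    return 13 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500) ** 2)


def threshold_in_quiet_db(f_hz: float) -> float:
    """Terhardt's approximation of the absolute threshold of hearing, in dB SPL.
    Only meaningful across roughly 20Hz-20kHz."""
    khz = f_hz / 1000
    return (3.64 * khz ** -0.8
            - 6.5 * math.exp(-0.6 * (khz - 3.3) ** 2)
            + 1e-3 * khz ** 4)


# Hypothetical masking parameters, for illustration only.
MASK_OFFSET_DB = 10.0   # threshold sits this far below the masker at its own frequency
SLOPE_BELOW_DB = 27.0   # dB per Bark for quieter components below the masker
SLOPE_ABOVE_DB = 10.0   # dB per Bark above it (masking spreads further upwards)


def masking_threshold_db(f_hz: float, masker_hz: float, masker_db: float) -> float:
    """Triangular spread of one masker's threshold across the Bark scale."""
    dz = bark(f_hz) - bark(masker_hz)
    slope = SLOPE_ABOVE_DB if dz > 0 else SLOPE_BELOW_DB
    return masker_db - MASK_OFFSET_DB - slope * abs(dz)


def deemed_audible(f_hz, level_db, masker_hz=None, masker_db=None) -> bool:
    """True if a component rises above the threshold in quiet and, when a
    masker is given, above that masker's threshold too."""
    threshold = threshold_in_quiet_db(f_hz)
    if masker_hz is not None:
        threshold = max(threshold, masking_threshold_db(f_hz, masker_hz, masker_db))
    return level_db > threshold


print(deemed_audible(16_000, 40))                                # False: threshold is ~66dB up there
print(deemed_audible(1_100, 50))                                 # True on its own
print(deemed_audible(1_100, 50, masker_hz=1_000, masker_db=80))  # False: masked by the 1kHz tone
```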

What Are You Listening To?

Amazon and iTunes are, at the time of writing, probably the most commonly used legal music download services. Amazon offers MP3 files at 256kbps (it used to only offer 128kbps), and iTunes now offers AACs at 256kbps, though some older tracks may still only be available at 128kbps. Many more consumers listen to free Internet radio stations, which use different bit rates, often determined by whether a desktop or mobile device is being used, or whether the subscriber has paid for premium audio quality. Pandora streams 64kbps AAC files for desktop use, and can stream lower bit rates for mobile devices. Spotify's standard free service uses the Ogg Vorbis format at 160kbps for computers and 96kbps for mobile devices, while their Premium subscription service can stream at 320kbps. Slacker streams at 128kbps MP3 for computers, and can go down to 40kbps and 64kbps AACs for its mobile formats... and how many hours do people spend on sites such as YouTube, where the audio quality varies hugely? At its very lowest quality, YouTube audio was encoded as mono 22.05kHz 64kbps MP3 files, though most new material is now encoded to 44.1kHz stereo AAC or Ogg Vorbis formats.

As you can see, there's quite a lot of variation — and for that reason I've used several different formats in creating the audio examples that accompany this article, and which are discussed later on. Some are presented at deliberately low bit rates to make the artifacts more obvious on a casual listen on most playback systems, but as you get more tuned into and aware of the characteristics being discussed when listening at lower bit rates, you should begin to identify them at higher bit rates too. The audio files themselves are available at /sos/apr12/articles/compressionartifactsmedia.htm.

It's worth noting that some online vendors have been improving the quality of the sources they offer. The number of sites offering lossless downloads is increasing, and the number of titles offered as lossless downloads (and even some hi-res downloads) is also steadily increasing. However, the majority of consumers seem to be unaware of this, or of the audio quality they're missing out on! They spend endless hours experiencing audio at sub-128kbps bit rates, at the mercy of whoever uploaded the material, without knowing what it should sound like, without realising how bad it sounds, and unaware of the artifacts they're hearing that shouldn't be there.

How Encoders Work

Most encoders work by splitting the full-range frequency content into around 32 narrower bands (loosely similar to the critical bands I mentioned earlier when discussing the human hearing system, although in MP3 these bands are of equal width, whereas critical bands widen with frequency) and then into variable numbers of finer sub-bands. The frequency content of each sub-band is then analysed over successive short sections of time to determine what content is perceivable and what might be imperceptible to the listener. Only frequency content deemed perceivable, and possible to store at the given bit rate, is encoded.
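As an illustration of that band splitting, here is the arithmetic for MPEG-1 Layer III (MP3) at 44.1kHz, based on my understanding of that format: 32 equal-width subbands, each further divided into 18 spectral lines with long blocks or 6 with short blocks. Other codecs differ; AAC, for instance, uses a single MDCT filterbank without the 32-band stage. The helper names are hypothetical.

```python
FS = 44_100                  # sample rate in Hz
SUBBANDS = 32                # MP3 polyphase filterbank
LINES_PER_SUBBAND_LONG = 18  # finer split within each subband, long blocks
LINES_PER_SUBBAND_SHORT = 6  # ... and with short blocks


def subband_width_hz(fs=FS, n=SUBBANDS):
    """Width of each of n equal-width subbands spanning 0..fs/2."""
    return (fs / 2) / n


def subband_index(f_hz, fs=FS, n=SUBBANDS):
    """Which subband (counting from 0) a frequency falls into."""
    return min(int(f_hz // subband_width_hz(fs, n)), n - 1)


print(subband_width_hz())                                   # 689.0625
print(subband_index(1_000), subband_index(16_000))          # 1 23
print(subband_width_hz() / LINES_PER_SUBBAND_LONG)          # 38.28125 Hz per line
print(subband_width_hz() / LINES_PER_SUBBAND_SHORT)         # 114.84375 Hz per line
```

Note how coarse the equal-width bands are at the bottom end: everything from 0Hz to about 689Hz, which spans several critical bands, lands in the first subband.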

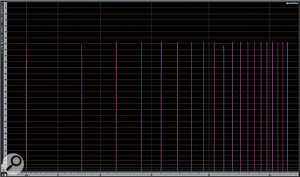

Dominant frequency components within each of these narrow bands are detected, and masking thresholds are calculated for them. Only frequency content with amplitudes above the masking threshold is then 'sent' for encoding; anything below it is discarded. Obviously, this makes the output frequency content slightly different from that of the input signal. If the data sent for encoding still exceeds the bit rate selected for the encoding, this process is repeated as necessary until the bit-rate requirements are met, resulting in more and more data (frequency content) being discarded. Note that the algorithm can still end up discarding content that the encoder itself deemed audible, in order to satisfy finite bit-rate requirements.

3. Frequency content that is deemed to be masked by louder sounds is often discarded during lossy encoding processes.
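The repeat-until-it-fits loop described above can be sketched as follows. This is a toy under stated assumptions, not a real encoder: each kept component is assumed to cost one unit of a hypothetical budget, and the whole frame shares one safety margin that is raised on every pass. Real MP3 encoders instead adjust quantiser step sizes and scale factors in nested rate-control and noise-control loops.

```python
from typing import List, Tuple

# (frequency in Hz, level in dB, masking threshold in dB) for one frame.
Component = Tuple[float, float, float]


def select_components(components: List[Component], budget: int, step_db: float = 3.0):
    """Keep components that clear their masking threshold by more than a margin,
    raising the margin by step_db each pass until the result fits the budget."""
    if budget < 0:
        raise ValueError("budget must be non-negative")
    margin = 0.0
    while True:
        kept = [c for c in components if c[1] - c[2] > margin]
        if len(kept) <= budget:
            return kept, margin
        margin += step_db


components = [
    (100, 70, 20),     # bass note, 50dB above its threshold
    (1_000, 60, 30),   # vocal harmonic, 30dB above
    (1_200, 32, 30),   # quiet detail, only 2dB above
    (5_000, 25, 30),   # below its threshold: gone on the first pass
    (12_000, 45, 35),  # cymbal content, 10dB above
]

kept, margin = select_components(components, budget=3)
print([c[0] for c in kept], margin)  # [100, 1000, 12000] 3.0
```

The 1.2kHz component clears its masking threshold, so the model itself considers it audible, yet it is still dropped on the second pass to meet the budget: exactly the compromise described above.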

However, before this analysis occurs, the incoming narrow bands of audio must be 'windowed'. Windows are overlapping, crossfaded chunks of audio that are analysed for their frequency content. Different window lengths are used: longer windows when the current frequency content is similar to adjacent content, and shorter windows when the current content differs from adjacent content (at a sudden transient, for instance). Shorter windows increase the temporal accuracy of the encoding at the expense of spectral resolution; in other words, frequencies are resolved less finely. Longer windows increase the spectral resolution, but at the expense of temporal accuracy.
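The trade-off can be put into numbers. The sketch below assumes AAC-style windows of 2048 and 256 samples, each yielding half that many frequency lines, and adds a hypothetical block-switching rule (a simple jump in energy between consecutive blocks) to show the idea. Real encoders use more elaborate attack detection.

```python
FS = 44_100  # sample rate in Hz


def window_tradeoff(window_samples: int, fs: int = FS):
    """Duration (ms) of a window, and the spacing (Hz) of the window_samples // 2
    frequency lines an MDCT of that window produces."""
    duration_ms = 1000 * window_samples / fs
    line_spacing_hz = (fs / 2) / (window_samples // 2)
    return duration_ms, line_spacing_hz


def choose_window(prev_block, block, ratio=10.0):
    """Hypothetical block-switching rule: pick a short window when the energy
    jumps sharply from one block to the next, as it does at a transient."""
    e_prev = sum(x * x for x in prev_block) + 1e-12  # avoid dividing by zero
    e_now = sum(x * x for x in block)
    return "short" if e_now / e_prev > ratio else "long"


for n in (2048, 256):  # AAC-style long and short windows
    ms, hz = window_tradeoff(n)
    print(f"{n:4d} samples: {ms:4.1f}ms, lines every {hz:5.1f}Hz")
# 2048 samples: 46.4ms, lines every  21.5Hz
#  256 samples:  5.8ms, lines every 172.3Hz
```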

Practical Options

Common lossy audio compression formats work at sample rates between 8 and 48 kHz, and remember that the standard audio CD sample rate is 44.1kHz, which gives a frequency response up to 20kHz. Audio that's encoded at 22.05kHz has a high-frequency limit of about 10kHz (11.025kHz in theory), and audio encoded at 8kHz only up to about 4kHz.

Some codecs have the option of being either constant bit rate (CBR) or variable bit rate (VBR) within the 8kbps-320kbps range. VBR files lower the bit rate when the waveform and frequency content are 'simpler', allowing the encoded file to be made smaller, which means that more songs fit on your iPod or hard drive. However, some people argue that the VBR approach creates more undesirable artifacts.
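The MPEG-1 Layer III standard defines a fixed set of bit rates a frame may use (32 to 320kbps), and a VBR encoder can choose a different one for each frame. The sketch below assumes a hypothetical per-frame 'demand' figure; a real encoder would derive this from its psychoacoustic analysis rather than being handed it.

```python
# Bit rates (kbps) a single MPEG-1 Layer III frame may use.
MP3_BITRATES = [32, 40, 48, 56, 64, 80, 96, 112, 128, 160, 192, 224, 256, 320]


def vbr_frame_bitrate(demand_kbps):
    """Pick the lowest allowed rate that satisfies a frame's (hypothetical) demand."""
    for rate in MP3_BITRATES:
        if rate >= demand_kbps:
            return rate
    return MP3_BITRATES[-1]  # very complex frames are capped at 320kbps


# Hypothetical demands: near-silence, a sparse verse, then a dense chorus.
demands = [20, 20, 70, 90, 150, 300, 400]
vbr = [vbr_frame_bitrate(d) for d in demands]
average = sum(vbr) / len(vbr)
print(vbr)                 # [32, 32, 80, 96, 160, 320, 320]
print(round(average, 1))   # 148.6
```

A 160kbps CBR file would spend the same 160kbps on the silent frames as on the chorus; the VBR sequence spends less on average, yet still hits the 320kbps ceiling on the densest frames.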

Remember that the bit rate for uncompressed, CD-quality audio is 1411kbps. The initial goal of MP3 was to produce 'acceptable' results when coding at 128kbps. That's a data reduction of over 90 percent, producing a file about one eleventh the size of the raw 16-bit, 44.1kHz PCM file. This is undeniably a commendable and significant achievement in terms of audio quality versus bit rate, but unfortunately, at this bit rate, many side-effects are present and audible. Higher bit rates result in less information being discarded and fewer undesirable sonic artifacts, but fewer songs will fit into the same storage space.
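Here is that arithmetic, plus the storage cost of a four-minute song at a few common bit rates (the song length and the function name are just illustrative choices; sizes are in decimal megabytes):

```python
CD_KBPS = 16 * 44_100 * 2 / 1000  # 1411.2kbps


def compression_stats(encoded_kbps: float, minutes: float = 4.0):
    """Size ratio vs CD, percentage of data removed, and file size in MB."""
    ratio = CD_KBPS / encoded_kbps
    reduction_pct = 100 * (1 - encoded_kbps / CD_KBPS)
    size_mb = encoded_kbps * 1000 * minutes * 60 / 8 / 1_000_000
    return ratio, reduction_pct, size_mb


for kbps in (128, 256, 320, CD_KBPS):
    ratio, saved, mb = compression_stats(kbps)
    print(f"{kbps:7.1f}kbps: 1/{ratio:.1f} of CD size, {saved:4.1f}% less data, {mb:4.1f}MB")
#   128.0kbps: 1/11.0 of CD size, 90.9% less data,  3.8MB
#   256.0kbps: 1/5.5 of CD size, 81.9% less data,  7.7MB
#   320.0kbps: 1/4.4 of CD size, 77.3% less data,  9.6MB
#  1411.2kbps: 1/1.0 of CD size,  0.0% less data, 42.3MB
```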

Different encoders can also use different stereo encoding modes. With some encoders, it's possible to specify which stereo mode will be used; others dictate the mode themselves. At higher bit rates, most encoders, including MP3, AAC and WMA, use M/S (Mid/Sides) stereo mode. With M/S encoding, there are two channels, just as with Left/Right stereo. However, one channel (the Mid) carries the sum of the left and right channels, ie. the information they have in common, while the other (the Sides) carries the difference between them. For most material, the Sides signal contains much less information than a typical left or right channel, so it can be coded with fewer bits, reducing file size.
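A minimal sketch of the M/S idea follows. It uses the 'halve the sum and difference' convention; codecs differ on scaling (some divide by the square root of two instead), but either way the conversion itself is lossless, and for a mostly centred mix the Sides channel carries very little energy.

```python
def to_mid_side(left, right):
    """Mid = average of L and R (what they share); Side = half their difference."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


def from_mid_side(mid, side):
    """Exact inverse of to_mid_side()."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


def energy(x):
    return sum(v * v for v in x)


# A mostly centred mix: the right channel is almost identical to the left.
left = [0.5, -0.3, 0.8, -0.6, 0.1]
right = [0.48, -0.31, 0.79, -0.58, 0.12]
mid, side = to_mid_side(left, right)
print(energy(side) / energy(mid))  # well under 1%: the Sides channel is nearly empty
```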

At lower bit rates, some MP3 encoders offer the choice of 'Intensity Stereo' or 'Independent Channel' encoding. Intensity Stereo mode sums the channels together at higher frequencies, retaining only their relative levels, so it should only be used at low bit rates where file size is critical. In Independent Channel mode the channels are not linked, and processing is discrete on each channel. This can negatively impact stereo imaging accuracy, as details fundamental to the stereo image may not be resolved identically for each channel.
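To see what intensity stereo throws away, consider a toy version of a single high-frequency band: keep one summed waveform plus the share of energy that belonged to the left channel. This is a simplification of my own (real encoders work per scale-factor band and transmit a quantised position value), but it shows the essential loss: the level balance survives, while the two channels become scaled copies of the same waveform.

```python
import math


def intensity_encode(left, right):
    """Toy intensity-stereo band: one summed waveform, plus the fraction of the
    band's energy that belonged to the left channel."""
    mono = [l + r for l, r in zip(left, right)]
    e_left = sum(v * v for v in left)
    e_total = e_left + sum(v * v for v in right)
    share = e_left / e_total if e_total > 0 else 0.5
    return mono, share


def intensity_decode(mono, left_share):
    """Both outputs are scaled copies of the same waveform: the left/right
    level balance survives, but waveform differences between channels do not."""
    gain_l = math.sqrt(left_share)
    gain_r = math.sqrt(1 - left_share)
    return [gain_l * m for m in mono], [gain_r * m for m in mono]


def left_energy_share(left, right):
    e_left = sum(v * v for v in left)
    return e_left / (e_left + sum(v * v for v in right))


# Two different high-frequency parts: a louder one on the left, a quieter one on the right.
left = [0.9, 0.0, -0.9, 0.0, 0.9, 0.0]
right = [0.0, 0.4, 0.0, -0.4, 0.0, 0.4]

mono, share = intensity_encode(left, right)
new_left, new_right = intensity_decode(mono, share)
print(round(share, 3), round(left_energy_share(new_left, new_right), 3))  # 0.835 0.835
print(left[1], round(new_left[1], 3))  # 0.0 0.366: right-only content now leaks left
```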

Common Artifacts Of Lossy Compression

The following sections, each with accompanying audio examples, demonstrate some of the most common and obvious artifacts of various perceptual audio encoding technologies.

• Loss Of Bandwidth

Not all encoders are created equal, and even those of the same format do not perform identically at identical settings! The previous 'standard' of 128kbps is inadequate for fully representing the frequency spectrum, and thus the intentions of the artist and engineer.

4. Pink noise encoded by iTunes MP3 at 128kbps.

4. Pink noise encoded by iTunes MP3 at 128kbps. 5. LAME MP3 at 128kbps.

5. LAME MP3 at 128kbps. 6. iTunes AAC at 128kbps.

6. iTunes AAC at 128kbps. 7. Audio Example AAt 128kbps, MP3s and AACs show the effects of a brickwall filter on the upper frequencies, removing high-frequency content above about 16kHz from the pink-noise source material. Apple's iTunes MP3 encoder even creates anomalies and distortions in this frequency range, which are not apparent using the LAME encoder or iTunes' AAC encoder. To retain full bandwidth, iTunes needs to encode MP3s at or above 256kbps VBR, or the AAC format has to be used.

7. Audio Example AAt 128kbps, MP3s and AACs show the effects of a brickwall filter on the upper frequencies, removing high-frequency content above about 16kHz from the pink-noise source material. Apple's iTunes MP3 encoder even creates anomalies and distortions in this frequency range, which are not apparent using the LAME encoder or iTunes' AAC encoder. To retain full bandwidth, iTunes needs to encode MP3s at or above 256kbps VBR, or the AAC format has to be used.

• Pre-Echoes (And Post-Echoes)

Encoded audio content can become time-smeared by the action of the critical band filtering and processing, resulting in a sound signal occurring before or after the wanted sonic event. A short, sharp transient event demonstrates this. If these smeared sounds exist beyond the pre- and post-masking thresholds of the human auditory system, then they become audible as pre- and post-echoes. If a short transient burst of noise is encoded to different formats, we see and hear smearing and pre- and post-echoes. The following audio examples highlight this:

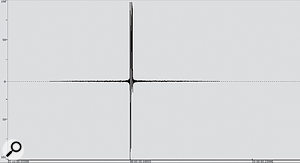

8. Audio Example BAudio Example A: A raw, uncompressed AIFF file with a duration of <2ms.

8. Audio Example BAudio Example A: A raw, uncompressed AIFF file with a duration of <2ms.

9. Audio Example CAudio Example B: 256kbps VBR MP3. Pre- and post-echoing are clearly seen, smearing the event over a 35ms duration.

9. Audio Example CAudio Example B: 256kbps VBR MP3. Pre- and post-echoing are clearly seen, smearing the event over a 35ms duration.

10. Audio Example DAudio Example C: 256kbps VBR AAC. The amplitude of the pre- and post-echoes has increased, but over a shorter duration (<15ms).

10. Audio Example DAudio Example C: 256kbps VBR AAC. The amplitude of the pre- and post-echoes has increased, but over a shorter duration (<15ms).

11. A spectragraph plot of an AIFF file on the left and a compressed format on the right.Audio Example D: A 256kbps VBR WMA smears the event over 130ms — the horizontal scale of the waveform had to be changed to make it all visible!

11. A spectragraph plot of an AIFF file on the left and a compressed format on the right.Audio Example D: A 256kbps VBR WMA smears the event over 130ms — the horizontal scale of the waveform had to be changed to make it all visible!

Even at these relatively high bit rates, this artifact gives the short, sharp sound a softer, smoother, less abrupt character, with differing noise content occurring either side of the transient burst depending on the encoding type. What does this mean in the real world? Well, this short event could well be the snare-drum, kick-drum or acoustic-guitar attack transient that took expensive mics and preamps, and hours of careful work, to record accurately!
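The mechanism behind pre-echo can be demonstrated with a toy transform coder. The sketch below is my own simplification rather than any real codec: it uses a plain DCT on non-overlapping blocks (real codecs use an overlapped MDCT) and one coarse uniform quantiser for every coefficient (real codecs shape the noise band by band). Because the quantisation error lands on the transform coefficients, it is spread across the whole block once transformed back, including the silence before the click. Shorter blocks confine that noise to a shorter stretch of time, which is why encoders switch to short windows at transients.

```python
import math


def dct(x):
    """Orthonormal DCT-II (O(N^2), fine for a demo)."""
    n = len(x)
    return [
        (math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n))
        * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]


def idct(c):
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(c)
    return [
        sum((math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)) * c[k]
            * math.cos(math.pi * (i + 0.5) * k / n) for k in range(n))
        for i in range(n)
    ]


def code_in_blocks(signal, block, step):
    """Transform each block, quantise every coefficient coarsely, transform back."""
    out = []
    for start in range(0, len(signal), block):
        coeffs = dct(signal[start:start + block])
        quantised = [step * round(c / step) for c in coeffs]
        out.extend(idct(quantised))
    return out


def first_sample_above(x, floor=1e-6):
    """Index of the first sample whose magnitude exceeds floor."""
    return next(i for i, v in enumerate(x) if abs(v) > floor)


# Silence followed by a single-sample click at index 200 (of 256).
signal = [0.0] * 256
signal[200] = 1.0

long_blocks = code_in_blocks(signal, block=256, step=0.05)
short_blocks = code_in_blocks(signal, block=64, step=0.05)
print(first_sample_above(long_blocks))   # far earlier than 200: noise smeared back in time
print(first_sample_above(short_blocks))  # no earlier than 192, where the click's block starts
```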

Roughness & 'Double-speak'

Audio content timing errors tend to occur more with low bit rates, and the effect is particularly noticeable on the human voice. You've probably heard this before: a single voice can sound as though it has been double-tracked and made robotic. Listen to audio examples E and F and you'll hear what I mean:

Audio Example E: A raw WAV file of some speech.

Audio Example F: A 56kbps MP3 file, demonstrating grainy roughness and robot-like 'double-speak'.

Audio Examples G-I demonstrate that pre-echoes and roughness are easily heard in the sound of applause:

Audio Example G: A raw WAV file of applause. Pay attention to the foreground (the loud, obvious claps) and the background (the details of the unfocused individual claps).

Audio Example H: In an MP3 at 128kbps, the definition and clarity of the background applause suffers, and it becomes more of a smooth, undetailed, fluttery 'wash'.

Audio Example I: At 64kbps, this is an extreme example, but it's actually not uncommon when watching downloaded videos or YouTube-type material. The temporal displacement and time-smearing results in audible ringing at different frequencies.

Transients, Transparency, Detail & Stereo Imaging

The following two music examples demonstrate some artifacts that should be apparent on a casual listen via a decent playback system:

Audio Example J: A raw 16-bit, 44.1kHz WAV file.

Audio Example K: A 128kbps MP3 file.

Bear in mind that this bit rate is higher than in the formats offered by many streaming multimedia sources. On Now Now Sleepyhead's track 'Milestones', which I've used as an example, the following differences can be heard:

• The clarity and attack of the snare drum are lost. Lacking crispness, punch and bite, it sinks back into the mix, behind the vocals. This is particularly noticeable under the lyrics "as long as you and I are on the page" (5-9 seconds), and "racing as fast as whatever it takes" (14-19 seconds).

• The vocal reverb tails lose detail and texture, becoming less smooth and transparent, and grainier. In places, the reverb is made less obvious, for example under "as you and I" at about 6 seconds, and "I'd assume that you'd have come to find..." at about 25 seconds.

• The vocal exhibits several changes depending upon its context in the mix. It becomes thick and woolly (for example, "go away" at 3 seconds), and coarse and hollow, missing body, depth and timbral details (for example, "that you would come to" at 26 seconds).

The following example, from the Barclay Martin Ensemble's 'Eyes Of A Child', reveals how the stereo image and details carefully crafted by the recording, mix and mastering engineers are changed:

Audio Example L: A raw 16-bit/44.1kHz WAV file.

Audio Example M: A 128kbps MP3 file.

• The bongos panned hard left and right lose their crack and punch.

• The shakers, panned to the left and right, and the centred hi-hat lose their focused, 'point-source' precision. They become smudged or blurred horizontally, and sound a little distorted and crunchy.

• The strummed chords on the acoustic guitar become grungey, and less crisp and detailed. Their transparency, clarity and energy are changed.

• The bass towards the end is less full, and lacks its original power.

• Overall, the stereo image loses its focus, precision and sense of envelopment.

For more obvious artifacts, try encoding some music from a CD you own at about 96kbps. Once you are 'tuned in' to a specific artifact, encode the same track at a higher bit rate to see if you can still notice it. These artifacts do occur less as the bit-rate is increased, but many remain noticeable to a certain extent, particularly as you train your ear to notice them.

Lack Of Bass Solidity

The MP3 format has a reputation for making bass and low-frequency content sound weak: that slammin' bass line can easily lose its phatness! (See audio examples L and M referenced above.) Low frequencies are harder for DSP algorithms to analyse because their periods are long, and amplitude differences over the short analysis windows used by the encoders may only be slight: the analysis system doesn't get an entire cycle of a low frequency per analysis window. In some situations, the encoder will be presented with less than half a cycle of any frequency below 114Hz. The AAC format fares much better in bass resolution, and is thus much more forgiving to the bass.
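The 114Hz figure can be reproduced if we assume the analysis window in question is an MP3 short block of 192 samples at 44.1kHz. That is my assumption; the text doesn't say which window it refers to. Half a cycle of frequency f lasts 1/(2f) seconds, so any frequency below fs/(2N) fits less than half a cycle into an N-sample window:

```python
FS = 44_100  # sample rate in Hz


def half_cycle_frequency(window_samples: int, fs: int = FS) -> float:
    """Below this frequency, less than half a cycle fits in the window."""
    window_seconds = window_samples / fs
    return 1 / (2 * window_seconds)


def full_cycle_frequency(window_samples: int, fs: int = FS) -> float:
    """Below this frequency, less than one full cycle fits in the window."""
    return fs / window_samples


short_block = 192  # assumed MP3 short-block length, in samples
print(round(half_cycle_frequency(short_block), 1))  # 114.8
print(round(full_cycle_frequency(short_block), 1))  # 229.7
```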

Dynamics & Phase Shift

Because perceptual audio coding schemes remove frequency content, our masking perceptions and our sense of the amplitude of the remaining frequency content can be changed. The energy and dynamic range of some sounds can be attenuated or compressed. Other content can appear to be boosted, simply because what surrounds it, both spectrally and temporally, is attenuated. Stereo imaging and the clarity/transparency of material can be affected when the relative phase (timing) of frequency content is changed. When frequency content is smeared over time, its average level over time increases slightly. This can result in the listener perceiving it as louder, with further consequences for timbre and frequency masking.

12. Audio Example Q: spaced test tones.

Image 11 shows high-frequency content missing (red arrows): hot colours which represent greater amplitudes are either missing in the output of the compressed format or smeared and less precise (green, blue and black arrows). Generally, the hot-coloured frequency content shapes of the original are less clear in the compressed format, and we can see that the time and amplitude components of the material have been changed.

The Dreaded 'Swirlies'

Another frequent artifact, commonly referred to as 'swirlies', is caused by lower-level frequency content that is stable in the raw file rapidly coming and going in the compressed version.

Audio Example N: A 16-bit/44.1kHz excerpt from Now Now Sleepyhead's 'How The Hunter Hawk Hung In There'. Pay close attention to the hi-hat and cymbals throughout the excerpt, and the sibilant consonants of the vocal, all of which are consistent and smooth.

Audio Example O: An MP3 encoding in which the hi-hat and cymbals exhibit moderate grainy swirling during the first half of the example, and more severe, gurgling swirlies during the second half. There is a general 'chattering' instability throughout. Additionally, the vocal sibilance that was smooth in the uncompressed example is now harsh and detached from what is now a less clear, more woolly, darker vocal timbre.

Audio Example P: A standard 'free' Pandora-quality AAC encoding exhibits different swirling artifacts. The hi-hats and cymbals are dull, grainy and raspy – 'rough' in a different way. The vocals, and indeed the entire mix, are flat, lifeless, and lacking in clarity and definition.

Frequency Content & Noise Addition

Perceptual coding relies on our ears' inability to hear frequency content obscured by other frequency content. It also allows the format to generate a certain amount of by-product noise before we are capable of hearing that additional noise.

Audio Example Q: 16-bit, 44.1kHz spaced test tones at multiples of 500Hz (500Hz, 1kHz, 1.5kHz, 2kHz, 2.5kHz, 3kHz, and so on to 20kHz) should look and sound like this.

13. Audio Example R: spaced test tones encoded as MP3.

Audio Example R: The effects of 128kbps CBR MP3 encoding show the 16kHz brickwall HF roll-off, and added fluctuating frequency content around and in between the original tones, resulting in clearly audible artifacts.

14. Audio Example S.

Audio Example S: AAC, at a similar bit rate, produces better results by concentrating the added content in the upper frequencies, where our hearing is less sensitive to it.

15. Even a 320kbps CBR MP3 encoding (what some dub 'CD quality') does not approach the noise floor of the raw PCM file, even though the noise floor is clearly lower than at lower bit rates.

16. AAC looks like it measures up much better in its 320kbps CBR format. However, increasing the analyser resolution (not shown) does reveal an arguably inaudible increased noise floor. But how many consumers are downloading or encoding material to their iPods in the 320kbps AAC format?

Most people don't even think about changing their default iTunes import settings! Make sure you do if you want to hear the record as intended.
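If you'd like to run a similar test on your own encoder, a signal along the lines of Audio Example Q can be synthesised with nothing more than Python's standard library. This sketch rests on my own assumptions, since the article doesn't say how its tones were generated: equal-level sines at every multiple of 500Hz up to 20kHz, scaled so that their sum can't clip, written as a mono 16-bit, 44.1kHz WAV file. The function names are hypothetical.

```python
import io
import math
import struct
import wave

FS = 44_100  # sample rate in Hz


def spaced_tones(step_hz=500, top_hz=20_000, seconds=1.0, fs=FS, peak=0.9):
    """Equal-level sines at step_hz, 2*step_hz, ... up to top_hz, each scaled so
    that their sum can never exceed `peak` (as a fraction of full scale)."""
    freqs = list(range(step_hz, top_hz + 1, step_hz))
    amp = peak / len(freqs)
    samples = [
        amp * sum(math.sin(2 * math.pi * f * n / fs) for f in freqs)
        for n in range(int(seconds * fs))
    ]
    return freqs, samples


def write_wav_16bit(fileobj, samples, fs=FS):
    """Write mono, 16-bit PCM using only the standard library's wave module."""
    frames = b"".join(struct.pack("<h", round(s * 32767)) for s in samples)
    with wave.open(fileobj, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16 bits
        w.setframerate(fs)
        w.writeframes(frames)


freqs, samples = spaced_tones(seconds=0.1)
print(len(freqs), freqs[:3], freqs[-1])  # 40 [500, 1000, 1500] 20000
buf = io.BytesIO()  # or: with open("tones.wav", "wb") as f: write_wav_16bit(f, samples)
write_wav_16bit(buf, samples)
```

Write a longer version to a real file, encode it at the bit rate and format you want to test, and compare the original and the decoded result in a spectrum analyser.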

Musical Content Removed & Noise Added

The audio examples linked here, from the Barclay Martin Ensemble's 'Tumbling Down', allow one to hear the actual audio content that is discarded by lossy encoders. In addition, varying levels of added fluctuating noise content can be heard.

Audio Example T: The content removed (and added) by iTunes' 128kbps VBR MP3 encoder. Almost every element of the track is audible! This shows how much content is removed in order for the encoder to achieve its goal of a 90 percent data reduction. The lack of low-frequency solidity in MP3 discussed earlier is explained by the continuity and clarity of the bass line (and bass-drum 'woof') present in the 'removed' signal. Also of note is the amount of transient content removed from the snare-drum hits.

Audio Example U: The artifacts produced by iTunes' AAC encoding at 128kbps VBR. The amplitude of the removed content is much less than in the comparable MP3. Also, the bass here is much more 'grungey', and less solid and continuous, showing that less of it is removed, and less continuously, which is why AAC is much more forgiving of low frequencies. The noise content is very different from that added by MP3: it sounds more obvious and perhaps louder than in the MP3 version, but this is an illusion caused by the see-saw effect of the amplitude of the musical content removed being lower. The added noise is, in fact, lower in level than the noise added by a similar bit rate MP3 encoding.

Audio Example V: So that we don't end on such a bad-sounding example (and so that the musicians who kindly gave permission for me to abuse their music get some good-quality exposure!), here's the original 16-bit, 44.1kHz file.

Abridged Too Far?

The average consumer, downloading, purchasing or stealing their MP3s or AACs from Internet sources, has little control over the encoding quality of the material they download. They get to choose MP3 or AAC by purchasing or streaming from this site or that site. If a consumer is getting content posted online by other individuals from one of the many free download sites, who knows what quality of files they are listening to? Some files may even have been converted from one format to another more than once, which will bring about even greater losses in quality with each generation!

MP3 was the original consumer format in the genre, and there are still many MP3-only players in use, as there will be for quite some time. Even though Amazon and iTunes now offer 256kbps VBR MP3 and AAC files respectively, many people grew up listening to the lower-quality 128kbps files originally offered and now accept the format's limitations as the norm. Unfortunately, most consumers will probably continue to do so, because they won't pay to upgrade the music they already own to a higher bit-rate format.

Also, remember that these artifacts are not only experienced via music downloads: listen closely the next time a DJ is 'spinning' from a computer-based system, when you're unfortunate enough to be in a karaoke bar, or when you're watching or listening to news reports on a broadcaster's web site.

The consumer encoding from their own CDs at home does have control, of course — but how many average consumers know about the merits and disadvantages of MP3 or AAC, of different bit rates, of CBR versus VBR, and different stereo modes? How many haven't thought to change the encoding settings on their iTunes software? And we haven't even discussed teenagers happily listening to bad audio through their built-in mobile-phone speakers, or 50p ear-buds. Before you encode your next batch of CDs for iPod use, please check your preferences!

These compressed formats are not going anywhere for a while, so what is the best type and what are the best settings to use? If one must encode at 128kbps, AAC clearly creates less distortion and adds less noise. However, there are still many devices being used by consumers that will not play AAC files. At higher bit rates, preferences should be based on more qualitative listening tests to determine which format you prefer and what your bit-rate/quality tolerances are. At 320kbps, AAC is clearly the winner, but the sound will be different from the raw PCM format — things will just not quite sound the same, and the stereo image will probably not be as clear, defined or wide as it should be.

The bottom line is that higher bit rates produce better-sounding encoded files, and ultimately your ears must be the judge, based on the type of material you are encoding. Spend an afternoon encoding the same song to different formats and at different constant and variable bit rates. Listen for the subtle and not-so-subtle changes that the lossy compression introduces, and keep referring back to the raw PCM file to remind yourself what it should sound like. Of the lossy options, 320kbps CBR AAC files seem the best to my ear; but if file size really isn't an issue, there's nothing wrong with the PCM files themselves, or with lossless codecs! If storage space is limited, you have to balance your preferences and tolerances. It all comes down to this: do you want more songs, or more accurate frequency content?
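To put rough numbers on the 'more songs' side of that trade-off, here's a sketch of file sizes at the bit rates discussed in this article. It assumes a hypothetical four-minute song, decimal megabytes and gigabytes, and no container overhead or metadata; for VBR files the nominal bit rate is only an average, so real sizes will vary. The helper names are illustrative only.

```python
import math

# Bit rate of 16-bit, 44.1kHz stereo PCM (CD audio), in kbps: 1411.2
CD_KBPS = 44_100 * 16 * 2 / 1000


def megabytes_per_minute(kbps: float) -> float:
    """Approximate size of one minute of audio at a given bit rate (decimal MB)."""
    return kbps * 1000 / 8 * 60 / 1_000_000


def songs_per_gigabyte(kbps: float, song_minutes: float = 4.0) -> int:
    """Whole songs of the assumed length that fit in one decimal GB."""
    song_bytes = kbps * 1000 / 8 * song_minutes * 60
    return math.floor(1_000_000_000 / song_bytes)


for label, kbps in [("128kbps", 128), ("256kbps", 256), ("320kbps", 320), ("PCM", CD_KBPS)]:
    print(f"{label:>8}: {megabytes_per_minute(kbps):5.2f} MB/min, "
          f"{songs_per_gigabyte(kbps):3d} songs/GB")
```

Under these assumptions, a gigabyte holds about 260 four-minute songs at 128kbps, about 104 at 320kbps, and only about 23 as CD-quality PCM, which is the storage cost of choosing the more accurate formats.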

Audio examples © 2011 Ian Corbett, except:

Now Now Sleepyhead: 'Milestones' and 'How The Hunter Hawk Hung In There', from the album …For Science. Written by Aaron Crawford, Brian McCourt & Phil Park. © 2011 Now Now Sleepyhead. Used by permission. All rights reserved.

www.facebook.com/nownowsleepyhead

Barclay Martin Ensemble: 'Eyes Of A Child' and 'Tumbling Down', from the album Pools That Swell With The Rain. Written by Barclay Martin. © & ℗ 2010 Lucky Kiwi Music, LLC. Used by permission. All rights reserved.