A digital mixer can be a great advantage in a stage setup, but if your set is highly automated you could have a big problem in the event of a crash.

It could be argued that technology hasn't encroached as much on the average gig as it has on the typical project studio. Dave Shapton would like to change all that...

I was musing with some friends the other day about the band we used to play in. We got together in 1989 and played sporadically until a couple of years ago. There was absolutely nothing remarkable about the band except, perhaps, our first gig, which should stand as an abject (sic) lesson in how not to do it. Which provides a somewhat tenuous link to my subject for this month — how I think we may set up live gigs in the future.

Live & Dangerous

Gibson's GMICS digital interconnection protocol could revolutionise live performance.

I've dabbled with electronic music ever since I discovered that by putting my fingers on the circuit board of an early battery-powered transistor tape recorder I could make it break into oscillation. With my fourth and third fingers in a certain position I could make it sweep through a couple of octaves. (Obviously, don't try this on anything mains-powered!) Moving my fingers around made random squeaks and farts that were inordinately satisfying to a 10-year-old, if not necessarily to his parents. With much of my creative life spent making bleeps and bloops, I came very late to the art of playing live.

So when a mate suggested we should put a band together to play at his thirtieth birthday bash at a cricket club, I figured we should do it properly. I was in the business of selling digital audio workstations at the time, so I managed to blag some kit. The trouble is, so did the other members of the band, and we ended up with enough gear to play Wembley Arena.

It's not that we did anything wrong, particularly: it was just a matter of scale. What was, essentially, a glorified pub gig required 12 car-loads for the equipment alone. Most spectacular of all was the lighting rig. With a total power consumption of 30kW, it blew every fuse in the building instantly (and probably a few on the national grid as well). But we were prepared for this. Luckily, the drummer had a degree in electrical engineering. What he did next, you should never, EVER think about doing yourselves, because it's so dangerous. He put on some Wellington boots (for insulation), walked into the ladies' toilet and, without turning off the power, wired an extension cable to the national grid side of the fuse box. He ran it across the roof and into the kitchen behind where the band were setting up. Nice job. You could have seen the lights from outer space.

The gig was a triumph, if getting to the end of most of the songs could be used as a measure of success, and the guests were surprisingly understanding about the band and its kit taking up three-quarters of the venue.

As our experience grew, we managed to reduce our setup time from nine hours to a couple. And we always wondered how the other bands managed to do it in 10 minutes. The secret, it turned out, was simplicity: taking only the gear you actually need, such as a small (possibly powered) mixer and cable looms that plug in simply and unambiguously.

Fixing The Mix

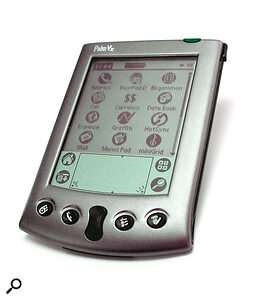

Live mixing on a PDA? It could happen!

I started wondering how I would do it today, 10 years on from our original efforts to be a very average covers band. I think for starters I'd look at mixing. Of course, I'd downsize the desk. And I'd dispense with the front-of-house sound-mixing person, since a dedicated engineer is impractical in very small venues anyway. I think I'd be very tempted by digital mixing, but I'm not completely convinced I'd use it in a live context.

What's good about digital mixing is very good indeed. Virtually all digital mixers have memories that can store 'snapshots' of a complete setup at any given time. This is useful not so much for individual levels, but for all the other facilities you get with a digital desk, like reverb, dynamics, and, of course, EQ.

It's quite possible that you could have different settings for each song, or even for parts of a song: it all depends how structured your performances are. It's also a question of how much control the individual musicians want over their own sound. If you're running a sequencer, then of course you can dynamically automate just about everything within a song.
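
To make the idea of per-song snapshots concrete, here is a minimal sketch of a snapshot memory. It assumes a hypothetical desk whose channel settings are just a fader level, a two-band EQ and a reverb send; real desks store far more, and none of these names come from any actual product. The point it illustrates is that a snapshot is a complete copy of the desk state, so recalling it for a song and then tweaking levels live doesn't corrupt what was stored.

```python
from __future__ import annotations

from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class ChannelSettings:
    # Hypothetical per-channel parameters; a real desk stores far more.
    fader_db: float = 0.0                  # 0 dB = unity gain
    eq_low_db: float = 0.0                 # simple two-band EQ
    eq_high_db: float = 0.0
    reverb_send_db: float = float("-inf")  # -inf dB = send off


@dataclass
class Snapshot:
    song: str
    channels: dict[str, ChannelSettings] = field(default_factory=dict)


class SnapshotBank:
    """Stores a complete desk setup per song and recalls it on demand."""

    def __init__(self) -> None:
        self._memories: dict[str, Snapshot] = {}

    def save(self, song: str, channels: dict[str, ChannelSettings]) -> None:
        # Deep-copy, so later tweaks on the desk don't alter the stored memory
        self._memories[song] = Snapshot(song, deepcopy(channels))

    def recall(self, song: str) -> dict[str, ChannelSettings]:
        # Hand back a copy, so live adjustments don't overwrite the memory
        return deepcopy(self._memories[song].channels)
```

In use, you would call `save()` once per song during rehearsal and `recall()` at the top of each number; a sequencer-driven rig would do the same thing at finer granularity within a song.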

But, however attractive these capabilities may be, I'm still not convinced that I'd want to work that way. There are several reasons.

Firstly, it takes a long time to set up an automated performance. It all depends on how deeply you want to get involved with setting up every aspect of the show in advance. I can certainly see the advantage of setting up a mix (and its associated effects) on a per‑song basis. I used to do something similar with my keyboard rig. Once I'd figured out that the 'Combinations' on my Korg M1 could be accessed by external MIDI devices, I had a different setup for virtually every number we did, with the M1's sounds mapped between two keyboards, or with the selected combination on the M1 also selecting a patch on another keyboard or my M1R.
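
Selecting a setup on one instrument and having it change patches elsewhere relies on the standard MIDI Program Change message: a status byte of 0xC0 plus the channel number (0 to 15 on the wire, shown as 1 to 16 on front panels), followed by a single program number from 0 to 127. The sketch below builds those bytes for a per-song map. The song names and program numbers are hypothetical, and it makes no claim about how the M1 itself numbers its Combinations; getting the bytes onto a MIDI port is left out.

```python
from __future__ import annotations


def program_change(channel: int, program: int) -> bytes:
    """Build a MIDI Program Change message.

    channel: 1-16, as shown on front panels; program: 0-127 (wire value).
    """
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channel must be 1-16")
    if not 0 <= program <= 127:
        raise ValueError("program number must be 0-127")
    return bytes([0xC0 | (channel - 1), program])


# Hypothetical per-song setups: MIDI channel -> program to select
SONG_SETUPS: dict[str, dict[int, int]] = {
    "Song 1": {1: 12, 2: 40},  # main keyboard plus a second module
    "Song 2": {1: 3},
}


def messages_for_song(song: str) -> list[bytes]:
    # One Program Change per instrument, in channel order
    return [program_change(ch, prog)
            for ch, prog in sorted(SONG_SETUPS[song].items())]
```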

The upside of this was that I was able to use much more complex sets of instruments than if I had had to punch in several patch changes and keyboard zones manually during a song. I even allocated sound effects to single notes on an auxiliary controller keyboard. The downside was demonstrated to me when I lent my M1 to a fellow musician and didn't think to check it when it came back: in the course of my next gig, I found that my friend had overwritten all my Combinations.

After this experience, I found that it was actually much more useful to have a good knowledge of the individual sounds on the keyboards, and to select them manually at the start of each song. Although not as polished, it was more flexible, especially once our repertoire ran into hundreds of songs and the idea of a setlist had become a distant memory.

I suppose it's for the same reason that I would hold back from programming a whole gig into a digital mixer. Losing your settings from a digital desk could be worse than losing them from your keyboard. With a keyboard, you can always bring up a Hammond organ or a piano — you'll always get a sound, even if it's not the one you intended.

But if you lose your mix settings you might just get nothing. The last thing you want to worry about as the venue is (hopefully) filling up with punters is which menu you need to poke around in to get anything out at all! What might change my mind is if all small digital desks defaulted to a very simple and obvious routing, such that anyone used to conventional mixing desks could have a go at sorting it out.
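
One way a desk could behave as wished for here is to treat a missing or unreadable memory as a cue to load a plain, analogue-style default rather than nothing. This is a minimal sketch of that policy only; the JSON storage format, the scene fields and the function names are all hypothetical, not taken from any real mixer.

```python
from __future__ import annotations

import json
from typing import Optional


def default_scene(num_inputs: int) -> dict:
    """A deliberately plain, analogue-style scene: every input goes to the
    main stereo bus at unity gain, panned centre, EQ flat, effects off."""
    return {
        f"in{n}": {"fader_db": 0.0, "pan": 0.0, "eq": "flat",
                   "fx_send_db": None, "route": "main_lr"}
        for n in range(1, num_inputs + 1)
    }


def load_scene(raw: Optional[str], num_inputs: int) -> tuple[dict, bool]:
    """Return (scene, used_fallback).

    raw is the stored memory as JSON text (a hypothetical format), or None
    if the memory is empty. Anything missing or unreadable falls back to
    the default scene rather than leaving the desk silent.
    """
    if raw is None:
        return default_scene(num_inputs), True
    try:
        scene = json.loads(raw)
    except json.JSONDecodeError:
        return default_scene(num_inputs), True
    if not isinstance(scene, dict) or not scene:
        return default_scene(num_inputs), True
    return scene, False
```

The design choice is that failure degrades to something any engineer used to a conventional desk would recognise: every channel up, straight to the main outputs.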

Gigs Of The Future

Putting aside any doubts about reliability and usability for a while, let's look at how we could use some of the new, emerging technologies to make the life of a gigging band easier. Those of you with long memories may remember a Cutting Edge report on GMICS (Global Music Instrument Communication Standard), a digital audio interconnect protocol designed specifically for live environments (see SOS December 1999). Based on the computer-industry-standard Ethernet, it was bi-directional and capable of carrying at least 16 channels of digital audio per cable. Well, judging from the GMICS website, not much seems to have happened in the last year, which is a shame because Gibson (the guitar people behind GMICS) defined and solved many of the problems presented by a digital live environment.

Still, now we have MLAN. It's rather different from GMICS, being based around IEEE 1394 (FireWire) rather than Ethernet. If you've read Paul Wiffen's excellent articles in SOS about MLAN, you'll have a good appreciation of its potential.

The first, and perhaps most obvious, way to use a digital interconnect system is to replace the cables between the instruments and the mixer. This would bring several benefits. For a start, you would be virtually immune to interference. Any digital system is, by definition, less prone to disruption by transient analogue phenomena, and, where errors do happen, there is robust error correction. Digital stage-boxes have been feasible for years, and MADI (Multichannel Audio Digital Interface) would be fine for that job, but it's not a network protocol, so you couldn't have one cable winding its way around several instruments.

And, of course, you would start from a position of unity gain throughout the system: you wouldn't have any problem with levels, as you'd be receiving digits and not voltages, which would make the sound person's job easier. The only discussion about levels would thus relate to the artistic rather than the technical — for example, how loud the keyboard player thinks he should be during solos.

So far, so dull, because we've only talked in terms of replacing analogue 'string' with digital 'string'. But when we move on to the next stage of the technology things get a lot more interesting. The new digital interconnect technologies are bi‑directional and can carry many channels. MLAN can carry at least 50 digital audio channels, and double that in ideal conditions. So you're no longer limited to a setup where the individual instruments have to send their audio to a central mixer. You could, for example, daisy‑chain instruments and devices together.

There should easily be enough channels available for a small to mid‑sized stage setup, which is good for simplicity — although I would worry about someone tripping over the one cable that connects everything together. You'd need to build in some redundancy somewhere — perhaps have two cables, taking different routes?
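
The two-cable idea can be made hitless if every audio packet carries a sequence number and is sent down both routes: the receiver keeps the first copy of each packet it sees and discards the duplicate, so a packet is only lost if both cables drop it. This approach is sometimes called seamless protection switching. The sketch below assumes that hypothetical packet format and, for clarity, merges already-received lists rather than handling packets in real time.

```python
from __future__ import annotations

from typing import Iterable


def merge_redundant(path_a: Iterable[tuple[int, bytes]],
                    path_b: Iterable[tuple[int, bytes]]) -> list[tuple[int, bytes]]:
    """Combine packets received over two cables into one stream.

    Each packet is (sequence_number, payload), and the sender transmits
    every packet on both paths. One copy of each sequence number is kept,
    so a packet survives if it arrived on either cable.
    """
    received: dict[int, bytes] = {}
    for seq, payload in list(path_a) + list(path_b):
        received.setdefault(seq, payload)  # first copy wins, duplicate dropped
    return sorted(received.items())
```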

Decentralised Control

Modern keyboards and effects processors tend to be based on Digital Signal Processors (DSPs). Signal paths to and from these DSP devices are limited because of the cost of building inputs and outputs. Keyboards, these days, despite massive polyphony and multitimbrality, rarely have more than a stereo output. This restricts their usability in any context, including live, where it might be desirable to bring a bass part out of the keyboard into its own mixer channel, so it won't have to be drowned in reverb or whatever. Using MLAN, or something like it, should reduce the cost of I/O enormously, because at some point it will be as cheap to have 100 channels in and out of a device as two.

Once this is in place, we just need one more technological leap to completely revolutionise live setups (and possibly studio configurations, for that matter — but that's a separate discussion).

If we could free up any spare DSP on each device so that it could be used to do general‑purpose digital signal processing, we could, for example, dispense with a central mixer altogether. You'd need to put in place a protocol that could analyse available DSP on the network, and co‑ordinate processing resources to create a 'distributed' virtual mixer. With suitable software, you could use control panels on any of the devices in the network to set up the mix. Put a web server in there somewhere (manufacturers should be thinking about building web servers into all their equipment now) and you could even set up the mix from a mobile phone or Personal Digital Assistant. Or you could connect a control surface that looks and behaves like a mixer but has no audio processing itself — on balance, I think I'd prefer this arrangement to doing a live mix on a mobile phone!
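
The "analyse available DSP and co-ordinate processing" step could start as simply as the greedy allocator sketched below. Each device reports how much spare DSP it has, each mixer job (a channel strip, a reverb) has a cost, and jobs are placed largest first on whichever device has the most capacity left. The cost units, device names and function are hypothetical; a greedy heuristic like this can fail to find a placement even when one exists, so a real protocol would need something smarter.

```python
from __future__ import annotations


def allocate(jobs: dict[str, int], spare: dict[str, int]) -> dict[str, str]:
    """Assign processing jobs to devices on the network.

    jobs:  job name -> DSP cost, in arbitrary hypothetical 'units'
    spare: device name -> spare DSP units the device reports as free
    Greedy heuristic: place the most expensive jobs first, each on the
    device with the most spare capacity left (ties broken by name).
    """
    if not spare:
        raise RuntimeError("no devices with spare DSP on the network")
    remaining = dict(spare)
    placement: dict[str, str] = {}
    for job, cost in sorted(jobs.items(), key=lambda kv: (-kv[1], kv[0])):
        device = min(remaining, key=lambda d: (-remaining[d], d))
        # If the emptiest device can't take the job, nothing else can
        if remaining[device] < cost:
            raise RuntimeError(f"not enough spare DSP anywhere for {job!r}")
        remaining[device] -= cost
        placement[job] = device
    return placement
```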

If all the above actually came to pass, we would be able to turn up to a gig, string our instruments (including microphones and guitars) together with one cable, connect the stereo outputs from a keyboard or effects unit to the main PA speakers, the outputs from another to the monitors, and mix the whole thing from a control panel or even from a web browser.
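
Feeding the PA from one device and the monitors from another amounts to running the same inputs into two separate mix buses with different send levels. The sketch below shows that mix matrix for mono channels, converting each send level from dB to a linear gain (10^(dB/20)) and summing. The bus and channel names are hypothetical, and panning and stereo buses are left out to keep it short.

```python
from __future__ import annotations


def db_to_gain(db: float) -> float:
    # -inf dB means the send is switched off
    return 0.0 if db == float("-inf") else 10 ** (db / 20)


def mix_buses(inputs: dict[str, list[float]],
              sends: dict[str, dict[str, float]]) -> dict[str, list[float]]:
    """Mix mono input channels to several output buses.

    inputs: channel -> block of samples (all blocks the same length)
    sends:  bus -> {channel: send level in dB}; unlisted channels are off
    """
    length = len(next(iter(inputs.values())))
    out: dict[str, list[float]] = {}
    for bus, levels in sends.items():
        acc = [0.0] * length
        for ch, db in levels.items():
            gain = db_to_gain(db)
            for i, sample in enumerate(inputs[ch]):
                acc[i] += gain * sample
        out[bus] = acc
    return out
```

For example, `sends = {"main": {"vox": 0.0, "keys": 0.0}, "monitor": {"vox": -6.0}}` puts everything at unity in the PA while the singer hears only their own voice, about 6dB down.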

Getting this to work wouldn't be easy. It would demand extraordinary cooperation between manufacturers, and some very clever software. But I think we will get there. This idea makes MIDI look like child's play. But don't forget that MIDI was revolutionary in its own time.

Celestial Harmony?

I was speaking to someone recently who used to work at an Antarctic research station. His job was analysing the ionosphere (the layer of charged particles in the atmosphere that allows short‑wave radio to travel round the world), and, bizarrely, he therefore had an interest in the lightning that happens at the North Pole. Apparently, the lines of magnetic flux surrounding the earth act like pipes, or channels, that can carry the electromagnetic vibrations caused by the atmospheric discharges all the way from the North Pole to the South Pole.

You can hear lightning on AM radios. It's a very short burst of white noise. But these magnetic flux conduits, running from the top of the Earth to the bottom, also act like prisms, except that they work at audio frequencies. A prism splits up white light into its component frequencies, creating rainbow effects. And that's exactly what happens to the noise of the lightning. The magnetic flux lines transport high frequencies faster than low ones, and so what started as a splat of white noise ends up as a sine‑wave sweep, starting with a high note and ending with a low one, over a period of about half a second. A kind of celestial Syn Drum, in fact.
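
The falling tone can be sketched with Eckersley's approximation for this kind of dispersion: a component at frequency f arrives d/√f seconds after the flash, where d is the dispersion of the particular path. Inverting that gives the frequency heard at time t as (d/t)², which the code turns into a sine sweep. The value d = 45 and the 8kHz to 2kHz range are illustrative assumptions, picked only to give roughly the half-second sweep described above, not measured figures.

```python
from __future__ import annotations

import math


def arrival_time(f: float, d: float) -> float:
    """Eckersley's approximation: a component at f Hz arrives d / sqrt(f)
    seconds after the flash (d is the path's dispersion, in s^0.5)."""
    return d / math.sqrt(f)


def freq_at(t: float, d: float) -> float:
    """Invert the relation above: the frequency arriving at time t."""
    return (d / t) ** 2


def whistler(d: float = 45.0, f_hi: float = 8000.0, f_lo: float = 2000.0,
             sample_rate: int = 44100) -> list[float]:
    """Synthesise a falling, whistler-like sine sweep from f_hi to f_lo."""
    t_start = arrival_time(f_hi, d)  # high frequencies arrive first
    t_end = arrival_time(f_lo, d)    # low frequencies arrive last
    n = int((t_end - t_start) * sample_rate)
    phase = 0.0
    samples = []
    for i in range(n):
        t = t_start + i / sample_rate
        samples.append(math.sin(phase))
        # Advance the phase by the instantaneous frequency at time t
        phase += 2 * math.pi * freq_at(t, d) / sample_rate
    return samples
```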

It bestows a kind of cosmic splendour on the works of Kelly Marie and Rose Royce, doesn't it?