The head of the design team behind the Lexicon Studio talks to Paul White about the Lexicon sound and the benefits of the Studio approach — and offers some practical tips on optimising PCs for audio recording.

Bob Reardon headed the team that designed the Lexicon Studio digital system, but he's also the right guy to ask about the technology behind the legendary Lexicon reverb sound. Before talking about the new Studio system, I just had to see if I could get any closer to understanding what makes Lexicon reverbs sound the way they do.

Why is it that Lexicon reverbs sound different to the competition?

Bob Reardon: "The core stuff is based on David Griesinger's [distinguished acoustics specialist whose work has been central to the Lexicon sound] research, and it really comes from not trying to sample a room, analyse it, and then recreate it technically from that point of view. That's not going to produce all the right characteristics over time, and even if you do a more in‑depth analysis over time, and improve the model, is it still going to sound right when you start to adjust things like the room size? David uses the same techniques as everybody else for analysing acoustic spaces around the world, but when the analysis is complete, he's looking for some fairly easy code ways to execute the job. He tries to reduce the problem to simple variables.

"In terms of the basic blocks that make up reverb, those are now fairly well known. It's more to do with how they are connected together and how they interact, especially when you change parameters."

I remember when I spoke to David a number of years ago, he told me that the shape of the reverb build up and decay is very important in defining a space, and very often the decay isn't a simple exponential.

"That's exactly what I'm talking about — decay shape is very important, as are the diffusion characteristics, and how that relates to reverb time. If you take a look at our programs, there's actually a link parameter that links shape size and spread."

There seems to be something very different about the way a Lexicon reverb starts because, with a lot of competing designs, if you take the dry sound out of the mix you're left with a rather disembodied sound. With a Lexicon, however, as you fade out the dry sound, it just seems the source moves further away, but it still appears to be there.

"It's based on a lot of listening experience, and when I talk to David about it, he always goes back to the ears. There has been a big increase in the understanding of sound behaviour over the past couple of decades, and David has intuitively put together some of these interactions, often before other acousticians.

"The onset of reverb is also very important, and if you look at what other people have done, they've taken an early reflections section, and if you turn it off, it sounds as though everything comes all at once. Maybe the decay is OK, but the onset doesn't sound realistic. You have to add the early delays back in to create the illusion of a real acoustic space.

"Although we have delay shells around our large hall algorithm to simulate early reflections and to add detail, those aren't required to be there to have it sound like an acoustic space. What happens with early reflections in the first 50mS is particularly important."

So what you're saying is that early reflections also feature as an integral part of your algorithm, rather than just being superimposed over the reverberation the way most of your competitors do it?

"That's correct. And to do reverberation properly requires a great deal of processing power, which is one reason we decided to add hardware reverb to the Studio system."

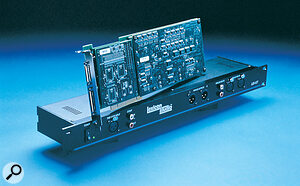

Lexicon Studio

"I'm personally very much a believer in what you can do with a computer, but there are certain things that can be done more nicely in specialised custom hardware. We've developed our own custom LexiChip ASICs, optimised for reverb, and what it comes down to is that different types of processors are good for different things. For example, the Motorola 56K family of DSPs is very good for linear maths and so is good for doing multiply‑accumulates — for doing mixers and things like that. You can even do a pretty good filter, though floating‑point will always yield better results on filter design.

"We made a custom chip to do our reverb because none of the processors out there are optimised for the job, so it's not as if we could have done it some other way. Reverb is a complex process that combines time processing with filtering — you're trying to recreate the acoustics of a real room, and that's a very complex algorithm. If you look at axial room modes that involve just two surfaces, the math is well known, but as soon as you get oblique or tangential modes that include multiple surfaces, the math gets very complex.

"With Lexicon Studio, we talked to a number of software companies and said, 'If you had somebody who was good at the hardware portion of this equation, what would you like them to do?' The answer was that, as well as I/O synchronisation of word clock and timing — which are big issues — they wanted good reverb that didn't depend on the host processor."

The Computer Studio

David Griesinger's research has been influential in Lexicon's unique approach to simulating reverberation.

"What I notice is that there are lots more computers around in music and production, and they're increasing in number. What people need is a way to connect their computer to the real working world, and the concept of Studio is really summed up by a couple of things.

"We say that Studio is intelligent hardware for your software. There are software companies who say you can do everything in your computer, but in most cases that isn't true. For example, in post‑production you're probably going to use external samplers for short duration sound effects, and of course for music you have synths run by sequencers. The challenge for most people is how do they intelligently interface their computer to the outside world? Also, people are using multiple software packages depending on whether they're doing audio, sequencing, PQ coding, mastering and so on, and there are some very nice offerings out there in any number of these areas. The point is that people don't want to have to buy a different set of proprietary hardware every time they want to use another nice software package, so we're there trying to be one way to address that problem by providing good synchronisation, good sample alignment, good quality outboard I/O and DSP effects.

"You have to get the converters out of the computer, because inside it's like a giant radio station! You also have to be careful when you buy these systems that your converters really are outside and that you haven't bought on‑card converters attached to a breakout box. Lexicon has not traditionally been a first time buyer's company — people come to us when they understand the difference in quality between our products and what they bought first time around. Then they understand what they're getting for their money.

"Another problem with inexpensive cards is that you have to have a mixer to deal with your cue mix — you can't listen to your source signal because the travel from the input, up onto the buss, onto the RAM and back means you'll get a delay. You'll strike a note and it will sound late, sometimes up to a second. There are problems with Windows 95 as to what the minimum delay can be and that's determined by the task‑switching granularity of Windows, which has roughly a 20mS heartbeat. It works a bit like audio sampling where your sampling rate has always got to be at least twice as fast as your highest audio frequency, so it ends up that the practical limit is in the low 40s of milliseconds. This is true for everybody out there, so what we did is come up with a mode where you can mix the monitoring signal with the playback, right on the card, so you have a zero latency for overdubs."

So you're intelligently handling the delays to make the system transparent to the user. In fact you should only be aware of latency when you either start or stop the audio, and with your short latency, even that shouldn't be a problem.

"Latency is one area where we've been able to make a lot of improvements, and this has to do with another aspect of soundcards. There's a lot of work involved to make a card that's both a PCI buss master — which that means you're controlling the traffic on buss and dealing with modem cards, network cards and all that kind of thing — to do that and still get a lot of channels takes some very serious coding. One way to test latency is to hit play, hit stop, hit play... Do you hear audio as soon as you hit play or do you have time to make a cup of coffee?"

It seems that a lot of your design work involves finding ways around the timing things that the PC doesn't normally handle too well.

"The PC is a challenging machine to write for, but there has to be a distinction between what's a limitation of the hardware and what's a limitation of the operating system. The latency is fundamentally a function of the operating system, and there are some specialist scientific and research operating systems, not widely available, that have very low latencies. Software drivers are also critical, and a lot of our work has been in the area of writing a good driver."

PC Friendly?

This all sounds great, but I know from the reader phone calls we get that a lot of people have problems getting PCs to work properly. Tracks wander out of time, glitches or clicks turn up in the audio, and one piece of software either conflicts with or refuses to communicate with another. What's the ideal PC setup for music?

"Well, that's a big one. There are a number of problem areas, but I can go over some specifics that might be valuable to your readers. What makes a good PC for hard disk audio? Let's start with what people already have, because it's tough to tell people to throw away what they have and buy something new — they always suspect it's a plot to get them to spend more money. So instead of telling you what to buy, let's have a look at ways to optimise what you've got.

"If you're going to do multi‑channel audio, about the minimum system is a Pentium I 166. I did say a Pentium, and a Pentium processor is an Intel product, but I'm not getting paid by Intel to tell you this. However, as a developer, it is likely to be the most solid processor choice. Yes, you can save some money by buying a Cyrix or AMD part, but in either case, the equivalent Intel part typically runs faster and if you buy one of the less expensive alternatives, often what will happen is that the manufacturers speed up the clock rate to make them behave more like an Intel part. That can cause problems where you're out of sync with your PCI buss. This doesn't affect word processors, but if you're streaming audio in real time, timing is critical and you can end up with glitchy audio.

"Let's say I've got a P166 and I want to optimise it. First go into System, go into Advanced Settings and there are three buttons there that control your computer's characteristics. You need to turn off the reader head for your disk. Often setting the machine up as a network server, even though you're not going to use it as a network server, also gives you a speed gain that is good for audio. And graphics cards can also be a real problem. In extreme cases, you run into a card that says it's a 32‑bit card and it actually only uses 8‑bit video transfers, which means it's hogging this big buss and you're not letting the audio or anything else go through. Hardware acceleration is also often a problem, and is not well written, so often you can turn the hardware acceleration off. Also, visit the web sites — don't presume that what you have in the box is the latest driver. You may also be able to find better video drivers.

"If you are going to buy something new, I'd look for an ATX motherboard that has an advanced graphics port which gets the video processing off your PCI buss. You might also look for onboard SCSI so that it keeps that off your PCI buss, so that the PCI buss can be used for your I/O. This way you distribute the load rather than always having everything fighting for space on the PCI buss."

When it comes to drives, nearly everyone advises you use SCSI for audio as it's faster, but is it true?

"Technically yes, but it's a statement in isolation so you have to look at the whole problem. For example, with a P166, if you get a SCSI card it's going to go on your PCI buss along with your audio card and video card. If you use a fast IDE drive instead, it'll reduce the traffic on the PCI buss, which can actually speed up the overall performance. If you get a modern extended IDE drive, you're going to get good performance, and on my P166, I've been able to pull over 24 tracks, 26 tracks off my IDE drive during playback."

Are there hidden aspects of drive speed that people need to be aware of?

"Sure, you have to look for a high sustained data transfer rate, fast access time and so on. A faster rotational rate generally means you'll get data off the drive faster, and check out the sustained transfer rate in burst mode."

So, how many tracks is it realistic to expect to get off a single drive? We see so many systems offering huge numbers of tracks, but does that mean using multiple drives?

"Let's talk about tracks for a minute. An I/O system, like our Studio card, is handling streams of audio, and I find it more useful to think of the number of playable tracks in the same way as you might think of polyphony on a sampler. You might have a whole lot of samples loaded, but how many can you play back at once? During a mix you may be pulling 24 tracks off your hard drive, but if you're mixing internally, it's all coming out via two audio streams. The maximum number of tracks you can play at once is a function of the drive itself — if you're using SCSI, is it SCSI 3, is it IDE? What I can say is that with our hardware and using Cubase VST as a reference system, 24 is a rational number to focus on. With IDE drives, once you do a lot of punch‑ins or overdubs and put other songs on there, you can get at the hairy edge if you don't defragment your drive. With SCSI you'll have a bit more bandwidth, and with SCSI you might be able to play back 32 tracks at once. But again, I must advise caution. It's one thing doing y our tests on a freshly formatted drive, but after you've recorded a few songs and done a few overdubs, the performance is bound to be worse due to fragmentation."

If you have a separate drive for audio, is it sufficient just to erase all the files when you start a new project, or is it important to defragment?

"You can do that, but it's better to defragment or reformat, because as your drive ages sectors occasionally go bad and need to be mapped out. While I'm using a drive I'll do periodic defragmentations to keep additions to the drive efficient, and then when the project is done and you're backed up, reformat the drive. A short format is usually OK."

Being pragmatic, then, should you knock say 25% off the maximum quoted number of tracks and then use that as a guideline?

"With a high‑end machine, 24 tracks is still a rational number to talk about, but with slower machines, you might get 18 to 20 tracks. Slower still you should still be able to get 16 tracks. It's also more work for the computer to handle the record side than the playback side, so another question you hear is, 'How many tracks can I play back while I'm recording how many tracks?' That's also a factor of the I/O system and the drive, and on a fast computer we feel a realistic figure for our system is 16 tracks of recording with eight tracks of playback, or vice versa. We aren't imposing the limit — it's down to the hardware, and though we can get 32 tracks on the fastest hardware, if you use older technology and have your drive fragmented, you should expect rather less. That's why you need a second drive for your audio. I've recorded stuff onto the main boot drive at trade shows where the second drive has been damaged, and it works. I've managed 16 tracks, including overdubs, but I wouldn't recommend it."

Studio Of The Future

People don't want to buy into a closed system, so what are you doing to ensure Studio grows with the needs of the user? At the moment it is only supported by Cubase VST PC, but surely Mac drivers must follow soon, as well as support for other major audio sequencers?

"This is going to be a big year for us, with a number of things coming on line with Studio. We're now out in the market with the PC version working with Steinberg, and the Mac drivers are expected by the end of the summer. We also have the 16S interface, which is the large I/O box expected in autumn. Also this summer there's the MM I/O multi‑channel package that we're working on with SEKD, and of course we have other development parties coming on line, such as Sonic Foundry.

"We're also talking to other sequencer manufacturers — this is an open system with a lot of advantages, and the user wants to be able to use a number of software packages, not be extorted into a single hardware solution."

It would also seem logical to extend the on‑board processing capabilities to include multi‑effects, or even DSP areas where you or other developers can load more software‑driven processes that you don't want to burden the computer with. Do you have plans in these areas?

"Certainly Lexicon is a processing company, and there are plans for expanding Studio with a buss that is actually on the card. We're not announcing any DSP expansion to the system yet, but stranger things have happened. Hardware effects and DSP‑based processes are the two areas of most interest to the user, and there are some nice tools out there that do use generic DSP. Again, some tools work better with proprietary DSP solutions, so we can expand either way. Our expansion buss is 24‑bit and has 288 channels. That's all I can say for now, but the system will evolve in interesting ways."