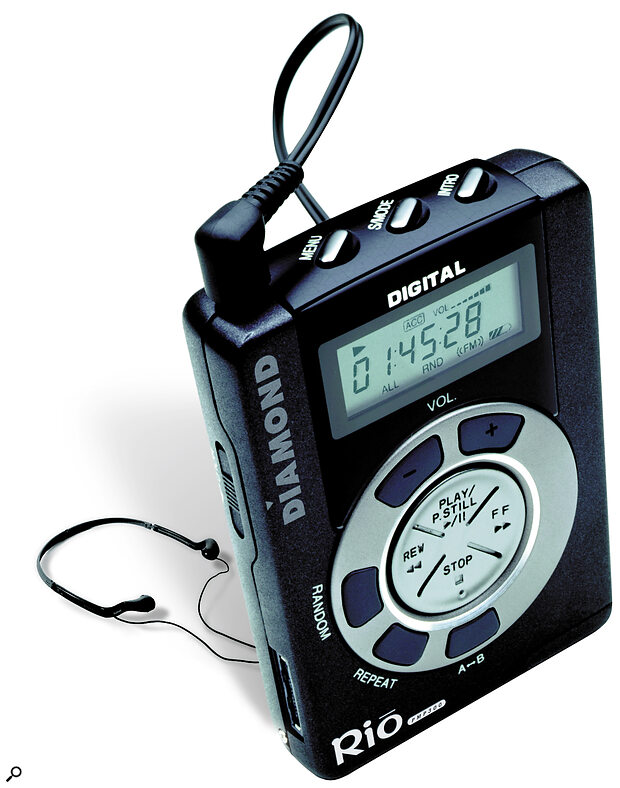

Diamond Rio — perhaps the best‑known MP3 player.

Most of us are aware that MP3 encoding offers a way of drastically reducing the size of digital audio files while preserving reasonable sound quality. But how many of us know how it works? Paul Sellars explains the theory behind MP3, and offers some tips on making your own coded files sound better.

Anybody who takes an interest in music and audio can hardly have failed to notice that 'MP3' is an increasingly popular media buzzword. It is typically mentioned in connection with the Internet, and with the piracy, fraud and corruption that are often associated with it. However, very few commentators take the time to explain either what MP3 actually does, or why it is important. In this article, I'll try to explain exactly what MP3 is and how it differs from conventional digital audio.

Definitions

Let's start by looking at the 'official' definition of MP3. 'MP3' is an abbreviation of MPEG 1 audio layer 3. 'MPEG' is itself an abbreviation of 'Moving Picture Experts Group', the official title of a committee formed under the auspices of the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), in order to develop an international standard for the efficient encoding of full‑motion video and high‑quality audio. The plan was to find a method for compressing audio and video data streams sufficiently that it would be possible to store them on, and retrieve them from, a device delivering around 1.5 million bits per second, such as a conventional CD‑ROM. In 1993, the MPEG committee published the fruits of their labours in the form of the document ISO/IEC 11172. This set out a standard for coding of moving pictures and associated audio for digital storage media at up to about 1.5 Mbit/s, more commonly known as the MPEG 1 standard.

The requirement that encoded video and audio must be retrievable from a medium delivering only 1.5 million bits per second dictated that one of the principal concerns of this document was data compression, since the transmission of uncompressed audio and video streams would require many more bits per second. It is the particular method of data compression employed that makes MP3 interesting — we'll consider this in more detail later.

The MPEG 1 standard was concerned with techniques for compressing both video and audio. What we now call MP3 is really the audio half of the MPEG 1 standard, put to a new use: specifically, it is 'Layer 3' of MPEG 1 audio. Three 'layers' of MPEG 1 audio coding were defined, each being more complex than its predecessor, and each providing better results. The layers are backwards‑compatible, which means that any software or hardware capable of playing (or 'decoding') Layer 3 audio should also be able to decode Layers 1 and 2.

Before we go on to look at what MP3 encoding actually entails, however, it might be helpful to consider the traditional form of digital recording that MPEG 1 audio sought to replace, and why its replacement was deemed necessary.

Conventional Digital Audio

The vast majority of digital recording systems work in broadly the same way. An incoming audio signal is fed into what is known as an Analogue‑to‑Digital (A‑D) converter. This A‑D converter takes a series of measurements of the signal at regular intervals, and stores each one as a number. The resultant long series of numbers is then placed onto some kind of storage medium, from which it can be retrieved. Playback is essentially the same process in reverse: a long series of numbers is retrieved from a storage medium, and passed to what is known as a Digital‑to‑Analogue (D‑A) converter. The D‑A converter takes the numbers obtained by measuring the original signal, and uses them to construct a very close approximation of that signal, which can then be passed to a loudspeaker and heard as sound.

Figure 1: Conventional PCM digital recording aims to store and recall waveforms that are used to represent sounds. Perceptual coding represents a move away from the idea that digital audio systems should accurately reproduce waveforms to the idea that they should be able to reproduce our perception of a waveform.

The generic name for this system is Pulse Code Modulation (PCM), and it is used in all modern samplers, digital recorders and computer audio interfaces. In order to achieve a faithful reproduction of an audio signal, PCM aims to make an accurate record of the waveform of that signal. Anybody who has ever seen an oscilloscope, or is familiar with samplers and audio editing software, will have come across waveforms. They are the interesting‑looking wavy lines that are used to represent sound (see Figure 1). Put simply, a waveform is a kind of graph. The horizontal axis represents time, and the vertical axis represents amplitude. Amplitude, as far as sound is concerned, is related to volume. For example, were a microphone to be attached to an oscilloscope and placed in a noisy environment, the amplitude of the waveforms displayed would correspond with the amount of air pressure acting on the diaphragm of the microphone, and hence the volume of the sound.

Different kinds of sound correspond with characteristically different waveforms. The waveforms of certain pitched instrument sounds may often have clearly visible repeating 'cycles', and the quantity of repeating cycles within a given time‑frame will vary according to the pitch of the sound. Sounds that have no distinct pitch tend, on the other hand, to correspond with more irregular waveforms.

By taking and storing a series of very accurate measurements of a waveform, PCM is able to reconstruct a very close approximation of the sound that corresponds with that waveform. In a high‑quality PCM system, in fact, the approximation can be so close that a recorded sound is practically indistinguishable from its source. However, in order for the system to work well, it has to operate within certain limits. There are essentially two variables. The first of these is known as sampling frequency, and the second is known as bit depth. Sampling frequency describes the number of times that an incoming audio signal is measured or 'sampled' in a given period of time. It is typically specified in kilohertz (kHz, meaning thousands of cycles per second) and, to record so‑called 'CD‑quality' audio, a sampling frequency of 44.1kHz is required.

Bit depth, on the other hand, pertains to the accuracy with which each measurement or 'sample' is taken. When the A‑D converter in a PCM digital audio system measures an incoming signal, and stores the measurement as a number, this number is represented as a series of 0s and 1s, also known as a 'binary word'. Bit depth, therefore, refers to the length of the binary words used to describe each sample of the input signal taken by the A‑D converter. Longer words allow for the representation of a wider range of numbers, and thus for more accurate measurements and more faithful reproductions of a signal. In a 16‑bit system, each sample is represented as a binary word 16 digits long. As each of these 16 digits can be either a 0 or a 1, there are therefore no less than 65,536 (2^16) possible values for each sample.

The Problem Of File Sizes

A 16‑bit system with a sampling frequency of 44.1kHz is widely accepted to be the benchmark for consumer digital audio, and when manufacturers boast of 'CD‑quality' audio, they are basically describing a system that operates within, or is capable of operating within, these limits. However, one down side of PCM audio is that, whilst the sound quality can be excellent, storing recordings will use up substantial quantities of whichever medium is used. This is mathematically inevitable: 44,100 16‑bit samples per second will yield 88,200 'bytes' of data (since there are 8 bits to a byte) per second — and twice that (176,400 bytes per second) for a stereo signal. So, to record one minute of stereo audio requires 10,584,000 bytes (around 10 megabytes) of available space on a storage medium.
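The arithmetic above is easy to check for yourself. The short Python sketch below simply multiplies out the figures quoted in the text; the function name is my own invention for illustration, and the calculation assumes plain uncompressed PCM with no file header.

```python
def pcm_bytes_per_second(sample_rate_hz, bit_depth, channels):
    """Uncompressed PCM data rate in bytes per second (hypothetical helper)."""
    bytes_per_sample = bit_depth // 8  # there are 8 bits to a byte
    return sample_rate_hz * bytes_per_sample * channels


mono = pcm_bytes_per_second(44_100, 16, 1)
stereo = pcm_bytes_per_second(44_100, 16, 2)
one_minute_stereo = stereo * 60

print(mono)               # 88200
print(stereo)             # 176400
print(one_minute_stereo)  # 10584000
```

Changing the arguments shows how quickly the numbers grow: doubling either the sampling frequency or the number of channels doubles the storage required.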

Whilst this is acceptable as far as conventional audio CDs are concerned, for other applications it can be problematic. In situations where sound needs to be recorded and stored as a file on a computer, it is usually considered desirable to reduce the size of said file as far as possible, in order to make the most of limited system resources. When computers are connected to the Internet, the need to minimise file sizes becomes all the more pressing. Space on web servers is limited and can be costly, and domestic telephone lines simply do not have the necessary bandwidth to allow for the transmission of very large files at anything better than excruciatingly slow speeds.

Sampling Frequency

In order to make audio files more manageable it is necessary to reduce their size, and there are a number of ways in which this can be done. One method is to reduce the sampling frequency of the recording system. For example, if the sampling frequency is halved, half as many measurements of the input signal are taken, and thus only half as much data is produced. However, this has some serious side‑effects as far as sound quality is concerned. It would be an exaggeration to say that sound quality per se is reduced by half, but the recording is nevertheless in some respects half as accurate. Specifically, the 'frequency response' of the recording system is halved. In effect this means that much of the high‑frequency content of the sound is lost, leading to recordings lacking in brightness and clarity.

The correlation between sampling frequency and frequency response is explained by what's known as the 'Shannon‑Nyquist Theorem', which states that, in order for a signal to be accurately reproduced by PCM, at least two samples of each cycle of its waveform must be taken. In practice, therefore, the highest frequency that can be accurately recorded is one half of the sampling frequency used. This is known as the 'Nyquist Limit'. A conventional 'CD‑quality' digital recording system uses a sampling frequency of 44.1kHz, and thus can only reproduce frequencies up to 22.05kHz. All frequencies above this limit are discarded. This is not usually considered to be a problem, since research has shown that most human beings are capable of hearing little or nothing above that frequency anyway. If sampling frequency is reduced to 22.05kHz, however, all frequencies above 11.025kHz will be discarded, and this will result in a noticeable degradation of sound quality. Many musical instruments produce frequencies beyond this range, and may sound dull and unpleasant in recordings made with a reduced sampling frequency.
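As a quick illustration of the 'half the sampling frequency' rule, here is a minimal Python sketch. The two function names are hypothetical, and the code does nothing more than restate the Nyquist Limit as described above.

```python
def nyquist_limit_hz(sample_rate_hz):
    """Highest frequency a PCM system at this sampling frequency can record."""
    return sample_rate_hz / 2


def minimum_sample_rate_hz(highest_frequency_hz):
    """Lowest sampling frequency that still takes two samples per cycle."""
    return highest_frequency_hz * 2


for rate in (44_100, 22_050):
    print(rate, "Hz sampling ->", nyquist_limit_hz(rate), "Hz limit")

# To keep everything up to 20kHz, at least 40kHz sampling is needed.
print(minimum_sample_rate_hz(20_000))
```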

Bit Depth

An alternative method of reducing audio file sizes is to reduce the bit depth of the recording system that creates them. For example, 8‑bit samples could be used instead of 16‑bit ones. Just as with a reduction in sampling frequency, this undoubtedly has the desired effect of reducing the amount of data generated by making a recording. If each sample of the input signal is stored as an 8‑bit rather than a 16‑bit binary word, then the recording yields only one byte per sample rather than two. This doubles the capacity of the storage medium used, by halving the file size.

An illustration of the distortion introduced by using a low bit depth — in this case, a 3‑bit recording. Because of the limited range of values available to record each sample, the actual value has to be misrecorded, generating so‑called 'quantising errors'.

A reduction in bit depth, however, also has some undesirable side‑effects as far as sound quality is concerned. As we have seen, a 16‑bit system allows for 65,536 or 2^16 possible values for each sample taken. You might be forgiven for thinking that an 8‑bit system would allow for exactly half this resolution, but this is not the case: an 8‑bit binary word has in fact only 2^8 (256) possible values. This allows for considerably less accurate sampling of the input signal, and thus makes for far inferior recordings. With fewer possible values for each one, an 8‑bit recording system is forced to sometimes 'misrepresent' samples by a quite significant amount (see diagram, above). This misrepresentation can be described as a reduction in the 'signal‑to‑noise ratio' of the system, and leads to recordings that tend to sound harsh and unnatural.
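To get a feel for how quantising errors grow as bit depth falls, the following Python sketch rounds a sine wave to a limited number of levels and measures the resulting signal‑to‑noise ratio. It is a simplified model resting on my own assumptions (a full‑scale test tone, evenly spaced levels, no dither) and makes no attempt to imitate a real A‑D converter; the function names are hypothetical.

```python
import math


def quantise(x, bits):
    """Round a sample in [-1.0, 1.0] to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 2.0 / (levels - 1)
    return round((x + 1.0) / step) * step - 1.0


def snr_db(bits, n=10_000, cycles=7.3):
    """Signal-to-noise ratio, in dB, of a quantised full-scale sine wave."""
    signal_power = 0.0
    noise_power = 0.0
    for i in range(n):
        x = math.sin(2 * math.pi * cycles * i / n)
        error = quantise(x, bits) - x  # the 'quantising error'
        signal_power += x * x
        noise_power += error * error
    return 10 * math.log10(signal_power / noise_power)


for bits in (16, 8, 3):
    print(f"{bits:2d} bits: {2 ** bits:6d} levels, SNR about {snr_db(bits):.0f} dB")
```

Each bit removed costs roughly 6dB of signal‑to‑noise ratio, which is why the drop from 16 bits to 8 is so much more damaging than 'half the resolution' would suggest.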

In spite of the problems inherent in reductions in the sampling frequency and bit depth of PCM audio, these methods are often used in applications where pristine sound quality is considered a lesser priority than the conservation of system resources. Various other technical refinements of the PCM model, such as DPCM (Differential Pulse Code Modulation) and ADPCM (Adaptive Differential Pulse Code Modulation), have also been developed in order to try to reduce file sizes without sacrificing too much in the way of sound quality. These formats basically aim to improve upon ordinary PCM with more effective methods of managing and storing data. The sound quality and efficiency of these methods is generally quite reasonable. However, even at their best, they do not yield sufficient reductions in file sizes to solve the problem of how to deliver full‑length, high‑quality sound recordings in 'multimedia' and Internet applications. In order to do this, quite a different approach is required.

Perceptual Coding

What makes MP3 encoding effective as a method of audio data compression is its deviation from the PCM model. As we have seen, in a PCM system the goal is to digitally reproduce the waveform of an incoming signal as accurately as is practically possible. However, it could be argued that the implicit assumption of PCM — namely that the reproduction of sound requires the reproduction of waveforms — is simplistic, and involves a misunderstanding of the way human perception actually works.

The fact of the matter is that our ears and our brains are imperfect and biased measuring devices, which interpret external phenomena according to their own prejudices. It has been found, for example, that a doubling in the amplitude of a sound wave does not necessarily correspond with a doubling in the apparent loudness of the sound. A number of factors (such as the frequency content of the sound, and the presence of any background noise) will affect how the external stimulus comes to be interpreted by the human senses. Our perceptions therefore do not exactly mirror events in the outside world, but rather reflect and accentuate certain properties of those events.

Frequency‑domain masking: the solid line shows the minimum threshold of hearing against frequency. The dotted line shows how this threshold changes in the presence of three loud tones at 250Hz, 1kHz and 4kHz. Although a quieter 5kHz tone (shown as a vertical line) would be audible on its own, in the presence of these other tones it is 'masked' and therefore inaudible.

We might therefore decide, as our goal is to reproduce a sound for the benefit of a human listener, that it is quite unnecessary to accurately recreate every characteristic of that sound's waveform. Instead we might concentrate on determining which properties of the waveform would be most important to the listener, and prioritise the recording of these properties. This is the theory behind 'perceptual coding'. To put it more simply, we might say that whilst PCM attempts to capture a waveform 'as it is', MP3 attempts to capture it 'as it sounds'.

In order for this to be possible, a certain set of judgements as to what is or isn't meaningful to a human listener has had to be determined. This set of judgements is sometimes called a 'psychoacoustic model'. In order to understand how the psychoacoustic model works, we need to consider two important concepts in digital audio and perceptual coding: 'redundancy' and 'irrelevancy'.

Both words describe grounds on which a certain amount of audio data is deemed to be unnecessary, and sufficiently unimportant that it can be discarded or ignored without an unacceptable degradation in sound quality. We have already seen an example of redundancy in the discussion of PCM waveform coding earlier in this article. CD‑quality PCM audio discards frequencies higher than 22.05kHz: the sampling frequency of 44.1kHz was chosen because frequencies above 22.05kHz were deemed to be beyond the range of human hearing, and therefore redundant. Of course, if we were to decide (as some audiophiles have) that frequencies above 22.05kHz actually do carry important information about the colour and tone of sound and music, we might choose to use an increased sampling frequency, thereby capturing some of the frequencies the CD‑quality system would have treated as redundant. Even if we were to do so, however, we would not have done away with redundancy altogether: we would simply have moved the goalposts (or, more accurately, the 'Nyquist Limit') so that redundancy occurred at higher frequencies than before. Redundancy, in other words, is not new as far as digital audio is concerned: it is in fact an inevitable fact of digital life.

Irrelevancy, however, is a rather more radical concept. The theory behind psychoacoustic coding argues that, because of the peculiarities of human perception, certain properties of any given waveform will be effectively meaningless to a human listener — and thus will not be perceived at all. However, because of its insistence on capturing the entire waveform, a PCM system will end up recording and storing a large amount of this irrelevant data, in spite of its imperceptibility on playback. Perceptual coding aims, by referring to a psychoacoustic model, to store only that data which is detectable by the human ear. In so doing it is possible to achieve drastically reduced file sizes, by simply discarding the imperceptible and thus irrelevant data captured in a PCM recording.

Masking

The psychoacoustic model depends upon a particular peculiarity of human auditory perception: an effect known as masking. Masking could be described as a tendency in the listener to prioritise certain sounds ahead of others, according to the context in which they occur. Masking occurs because human hearing is adaptive, and adjusts to suit the prevailing levels of sound and noise in a given environment. For example, a sudden hand‑clap in a quiet room might seem startlingly loud. However, if the same hand‑clap was immediately preceded by a gunshot, it would seem much less loud. Similarly, in a rehearsal‑room recording of a rock band, the sound of an electric guitar might seem to dominate the mix, up until the moment the drummer hits a particular cymbal — at which point the guitar might seem to be briefly drowned out. These are examples of 'time‑domain' and 'frequency‑domain' masking respectively. When two sounds occur simultaneously or near‑simultaneously, one may be partially masked by the other, depending on factors such as their relative volumes and frequency content.

Time‑domain masking: a loud signal will mask quieter ones which occur both a short period before it starts, and for a longer period after it has ceased.

Masking is what enables perceptual coding to get away with removing much of the data that conventional waveform coding would store. This does not entail discarding all of the data describing masked elements in a sound recording: to do so would probably sound bizarre and unpleasant. Instead, perceptual coding works by assigning fewer bits of data to the masked elements of a recording than to the 'relevant' ones. This has the effect of introducing some distortion, but as this distortion is (hopefully) confined to the masked elements, it will (hopefully) be imperceptible on playback. Using fewer bits to represent the masked elements in a recording means that fewer bits overall are required. This is how MP3 coding succeeds in reducing audio files to around one‑tenth of their original size, with little or no noticeable degradation in sound quality.
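The 'one‑tenth' figure can be sanity‑checked with a little arithmetic. The Python sketch below compares the data rate of CD‑quality stereo PCM with a constant MP3 bit rate. It assumes that 'kbps' means 1,000 bits per second and it ignores any tags or other metadata stored in the file; the function names are hypothetical.

```python
CD_PCM_BITS_PER_SECOND = 44_100 * 16 * 2  # 16-bit stereo at 44.1kHz


def compression_ratio(mp3_kbps):
    """How many times smaller a constant-bit-rate MP3 is than CD-quality PCM."""
    return CD_PCM_BITS_PER_SECOND / (mp3_kbps * 1000)


def mp3_bytes(mp3_kbps, seconds):
    """Approximate size in bytes of the encoded audio alone (no tags)."""
    return mp3_kbps * 1000 * seconds // 8


for kbps in (96, 128, 320):
    print(f"{kbps}kbps: {compression_ratio(kbps):.1f}:1, "
          f"{mp3_bytes(kbps, 60):,} bytes per minute")
```

At 128kbps the ratio works out at roughly 11:1, or about 960,000 bytes per minute against the 10,584,000 bytes needed for a minute of stereo PCM.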

MP3 Encoding

The first requirement for creating an MP3 data stream is an existing PCM audio stream. MP3 should be thought of not as a method of digital recording in its own right, but rather as a process for removing irrelevant data from an existing recording. The audio to be encoded will typically be 16‑bit, and sampling frequencies of 32kHz, 44.1kHz and 48kHz are supported. The first stage of the process involves taking short sections from the original PCM stream and processing them with what's known as an 'analysing filter'. The MPEG 1 standard does not specify exactly how this filter should be constructed, only what it should do. Typically MP3 encoders employ a variation of a mathematical algorithm such as the 'Fast Fourier Transform' (FFT) or 'Discrete Cosine Transform' (DCT) to do the job. We do not need to discuss how these algorithms actually work, only what their effect on the incoming audio is: namely to divide each section up into 32 'sub‑bands'. These sub‑bands represent different parts of the frequency spectrum of the original signal. But why is this necessary?

Well, a random section from a PCM recording is likely to contain a mixture of very different sounds. It might contain a predominantly low‑frequency sound like a bass drum, a predominantly high‑frequency sound like a ride cymbal, and a sound such as a vocal from somewhere in between, all occurring at once. As we know, MP3 needs to separate 'irrelevant' sound from 'relevant' sound, and to process each kind differently. By separating sections of audio into sub‑bands, it is possible for the MP3 encoder to sort different kinds of sounds according to their frequency content — and so to prioritise some over others, according to the requirements of the psychoacoustic model. If, in the above example, some of the low‑frequency sounds of the bass drum were deemed to be irrelevant, the encoder could use fewer bits of data to encode the sub‑bands containing those frequencies, thereby leaving more bits free to encode the sub‑bands carrying some of the frequencies from the vocal — which might be more 'relevant' to a listener, and thus less forgiving of distortion and noise caused by lower bit rate encoding.
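To make the idea of sub‑bands a little more concrete, here is a toy Python sketch that splits a short section of audio into 32 equal‑width frequency bands and reports which bands carry the most energy. It is emphatically not the analysing filter of a real encoder: it uses a plain (and slow) discrete Fourier transform as a stand‑in, and the section length, test tones and function name are all my own assumptions.

```python
import cmath
import math

SAMPLE_RATE = 44_100
SECTION_LENGTH = 512  # samples per section (an arbitrary choice for this sketch)
NUM_BANDS = 32


def band_energies(section):
    """Sum the spectral energy of a section into NUM_BANDS equal-width bands."""
    n = len(section)
    half = n // 2  # only frequencies up to the Nyquist Limit are distinct
    bins_per_band = half // NUM_BANDS
    energies = [0.0] * NUM_BANDS
    for k in range(half):
        coefficient = sum(
            section[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        energies[k // bins_per_band] += abs(coefficient) ** 2
    return energies


# A loud 100Hz tone (think bass drum) plus a quieter 10kHz tone (think cymbal).
section = [
    math.sin(2 * math.pi * 100 * t / SAMPLE_RATE)
    + 0.3 * math.sin(2 * math.pi * 10_000 * t / SAMPLE_RATE)
    for t in range(SECTION_LENGTH)
]

energies = band_energies(section)
band_width_hz = SAMPLE_RATE / 2 / NUM_BANDS
loudest = sorted(range(NUM_BANDS), key=lambda b: energies[b], reverse=True)[:2]
for band in sorted(loudest):
    low = band * band_width_hz
    print(f"band {band}: {low:.0f}Hz to {low + band_width_hz:.0f}Hz")
```

With the two sounds separated into different bands like this, an encoder is free to treat them differently, which is exactly what the psychoacoustic model requires.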

In the next stage of the process the sub‑band sections are grouped together into 'frames'. The encoder examines the contents of these frames, and attempts to determine where masking in both the frequency and time domains will occur, and thus which frames can safely be allowed to distort. The encoder calculates what's known as a 'Mask‑to‑Noise' ratio for each frame, and uses this information in the final stage of the process: bit allocation.

During bit allocation, the encoder decides how many bits of data should be used to encode each frame. The more bits allowed, the more effective the encoding can be. The encoder therefore needs to allocate more bits to frames where little or no masking is likely to occur, but can afford to allocate fewer bits to frames where more masking is likely to occur. The total number of bits available varies according to the desired bit rate for transmission, which is chosen before encoding begins according to the needs of the user. Where sound quality is a high priority, a rate of 128 kilobits per second (kbps) is often used.
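The principle of bit allocation can be sketched in a few lines of Python. What follows is a toy 'greedy' scheme of my own devising, not the procedure laid down in the MPEG 1 standard: it hands out bits one at a time to whichever item currently has the most noise poking above its masking threshold, on the assumption that each extra bit lowers quantising noise by about 6dB. The input figures and all names are hypothetical.

```python
DB_PER_BIT = 6.02  # assumed noise reduction for each extra bit


def allocate_bits(headroom_over_mask_db, total_bits, max_bits_each=15):
    """Share total_bits among items; a higher headroom figure means less masking."""
    bits = [0] * len(headroom_over_mask_db)
    for _ in range(total_bits):
        # Noise still audible above the masking threshold, given bits so far.
        audible = [
            headroom - DB_PER_BIT * b
            for headroom, b in zip(headroom_over_mask_db, bits)
        ]
        candidates = [i for i, b in enumerate(bits) if b < max_bits_each]
        if not candidates:
            break
        neediest = max(candidates, key=lambda i: audible[i])
        if audible[neediest] <= 0:
            break  # everything left is already hidden by masking
        bits[neediest] += 1
    return bits


# Hypothetical figures: the third item is fully masked (negative headroom).
allocation = allocate_bits([30.0, 12.0, -5.0, 20.0], total_bits=8)
print(allocation)  # [4, 1, 0, 3]
```

Notice that the fully masked item receives no bits at all in this toy version, whereas the text above suggests that a real encoder would normally give masked material fewer bits rather than none.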

When encoding is finished, all the frames are written out, each with some bytes of header data, to form an MP3 file. The resulting file can then be read by an MP3 decoder, and played as audio. An MP3 decoder performs a simplified reverse form of the encoding process. The sub‑band frames are 'resynthesized' into time‑domain sections (using an inverse form of the analysing filter), and joined up to recreate an audio stream. However, as the encoder provides information about bit allocation in the frame headers for the decoder to read, the decoder does not have to make such decisions itself. Consequently it has much less work to do. A decoder can therefore be implemented in a somewhat simpler program or device than an encoder, which probably explains why there is so much more decoding software available than encoding software.

The Future

MP3 thrives because it is an open, cross‑platform format with a user base of many millions world‑wide, but it no longer represents the pinnacle of perceptual coding for audio. Since 1993, the MPEG committee has gone on to develop various new standards for audio, video and multimedia applications. MPEG 2 AAC ('Advanced Audio Coding'), for example, provides higher‑quality audio at lower bit rates than MP3, but is not backwards‑compatible with MPEG 1 audio. Furthermore, various software developers have attempted to launch their own proprietary 'replacements' for MP3. The most serious contenders to date are so‑called 'MP4' (actually nothing to do with MPEG 4, but rather a derivative of MPEG 2 AAC) and Microsoft's WMA (Windows Media Audio) format, which can also provide impressive sound quality at low bit rates.

Whether or not any such format will succeed in overthrowing MP3 in the near future is impossible to predict. Certainly it will not be easy to tempt people away from a format which provides them with good sound quality and impressive data compression, which allows them to make clean digital copies of whatever they like (regardless of legal technicalities) and for which there is a variety of good, free software available. In the final analysis, however, it may prove to be irrelevant.

The MPEG 1 standard was devised at a time when CD‑ROM‑based multimedia was being touted as the future of home entertainment and education, and the final nail in the coffin of the printed media. In this Internet age the ambitions of less than 10 years ago already seem somewhat quaint, and no doubt 10 years from now the 56kbps modems and slow domestic telephone lines which make MP3 encoding a practical necessity will seem just as archaic. At the time of writing, BT are preparing to release ADSL ('Asymmetric Digital Subscriber Line') packages for both business and home users, which will provide dramatically faster Internet connections, with far greater bandwidth than could be achieved with a conventional connection. Technology being what it is, however, it surely won't be long before ADSL is itself replaced by cheaper, better, more powerful alternatives.

As bandwidth restrictions become a distant memory, and RAM and hard disk space become cheaper and more plentiful, might we find ourselves living in a world where better‑than‑CD‑quality audio streams from every teenager's home page, and the cheapest entry‑level sampler records hours of 96kHz, 24‑bit PCM audio into a multiple‑gigabyte reservoir of RAM? If so, what need will we have for data‑compression techniques such as MP3? Or will perceptual coding become ever more advanced and efficient, with near‑perfect psychoacoustic models allowing us to banish every single bit of irrelevant data from an audio stream — without a hint of discoloration or distortion in the sound? In which case, who would want to waste resources by recording megabytes of irrelevant PCM data?

Who knows? By then we'll probably all be living on the moon anyway...

Paul Sellars is the author of the forthcoming Wizoo Guide To MP3.

Getting The Most Out Of MP3

MP3 is what's known as a 'lossy' data‑compression technique (so‑called because a certain amount of the original data is removed and lost forever during compression), and consequently it is inevitable that an MP3 is never going to sound quite as good as the PCM source from which it was created. As a musician and producer, you obviously take pride in the quality of your recordings, and will be unwilling to compromise too much in the way of sound quality for the sake of data compression. There are a few things you can do to ensure that you are getting the best possible performance out of the format.

Xing Technology's Audio Catalyst is one of few MP3 encoding programs to offer variable bit‑rate coding.

- First of all, you can try experimenting with higher bit rates. It has become conventional on the web to offer 'lo‑fi' MP3s as 96kbps files and 'hi‑fi' MP3s as 128kbps files. However, these are by no means the only possibilities. A good encoder such as Xing Technology's Audio Catalyst will allow you to encode at bit rates as high as 224, 256 and 320kbps. These higher rates can yield MP3s that come close to living up to the claims of 'CD‑quality audio' that are all too often inappropriately made for the format. The downside, of course, is that higher bit rates yield greater file sizes. Taking as an example a 16‑bit 44.1kHz AIFF‑format drum loop sample, the original 426K PCM file can be reduced to a 204K 320kbps MP3 or a 93K 128kbps MP3. Imagine scaling those file sizes up to cover a full‑length track, and it is apparent that what may, with some material, seem only to be a quite subtle improvement in sound quality can come at a high price in terms of poor data compression.

- Another possibility to consider is Variable Bit Rate encoding, or 'VBR', which is a method of dynamic encoding wherein the bit rate is altered continuously according to the content of the music. Complex passages can thus be encoded at higher bit rates, whilst simpler passages are encoded at lower bit rates. In this way, VBR aims to strike a balance between better sound quality and better data compression. The primary advantage of this method is that it allows for better encoding of music with a very wide dynamic range, such as certain orchestral pieces. The primary disadvantages are that VBR invariably yields somewhat larger file sizes, the improvement in sound quality is often quite imperceptible, and VBR is by no means universally implemented at the present time. Only a few encoders (most notably Audio Catalyst) support VBR encoding, and some decoders and hardware players may be confused by VBR files, incorrectly reporting track times in some cases, and simply refusing to play in others.

- Finally, you might want to consider the various stereo mode options offered by some encoders. Typically most encoders will default to a 'Joint Stereo' setting, where information about the differences between the left and right channels in the PCM source is encoded in one channel, whilst information that is identical in both the left and right channels is encoded in another (this is sometimes known as middle/side stereo). Another possibility is so‑called 'simple' stereo encoding, where the two channels of a stereo PCM recording are encoded independently. With simple stereo encoding the overall bit rate is constant, but bit allocation is dynamic between the two channels according to the complexity of the audio in each channel at any given moment. It's impossible to say categorically which method is 'better'; however, it may be worth experimenting with each of them to see which suits a particular track best.
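For the curious, the middle/side idea mentioned in the last tip can be illustrated with a short Python sketch. It uses one common textbook formulation (middle is the average of left and right, side is half their difference), which I am assuming here purely for illustration; it should not be taken as a description of what any particular encoder does internally, and the function names are hypothetical.

```python
def to_middle_side(left, right):
    """Convert left/right samples into middle (shared) and side (difference)."""
    middle = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return middle, side


def to_left_right(middle, side):
    """Undo the conversion, recovering the original left and right samples."""
    left = [m + s for m, s in zip(middle, side)]
    right = [m - s for m, s in zip(middle, side)]
    return left, right


left = [0.5, 0.25, -0.75]
right = [0.5, 0.25, -0.25]

middle, side = to_middle_side(left, right)
print(middle)  # [0.5, 0.25, -0.5]
print(side)    # [0.0, 0.0, -0.25]
print(to_left_right(middle, side) == (left, right))  # True
```

Where the two channels are identical the side signal is zero, which hints at why this approach can save bits on material with a strong central image.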