You name it, they can model it: the studio in a PC comes ever closer.

The totally software studio, with sound quality at least as good as that offered by studio hardware, is now more feasible than ever before. But what are the factors to consider if you're going to go completely 'soft'?

A few years ago lots of PC musicians started to get excited about the possibility of being able to run all the audio and MIDI tracks required by a song, plus effects, on a single PC. After all, we were being offered lots of new software synths, samplers and plug-in effects, all claiming to sound as good as their hardware equivalents. At the time, the only thing holding this concept back was lack of CPU 'grunt' — it was just so easy for everything to grind to a halt when a decent reverb plug-in could consume 50 percent of your entire processing capability. However, the arrival of dual-core PCs has resulted in a colossal leap in processing power, so is it now time to sell our remaining hardware and go totally 'soft'?

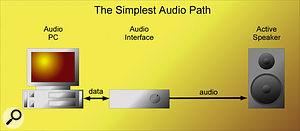

The simplest possible audio path requires a single stereo pair of cables between the output of your audio interface and your active speakers (or power amp and passive speakers), but relies on your audio interface providing an analogue output-level control to easily adjust speaker volume.

Audio Quality Benefits

Until a few years ago, most musicians started their musical journey with an instrument, such as a guitar or keyboard. When they wanted to record some music, they bought a reel-to-reel tape deck or cassette multitracker. Once they had two or three instruments to record, they bought a small mixing console, and perhaps a microphone or two for recording vocals or other acoustic instruments. The mixer allowed them to connect all these sources, tweak their sounds using its EQ controls, plug in hardware effects units to add reverb, chorus, and so on, and then mix the sounds together, using the channel faders, to produce the final stereo output.

Even if you want to record multiple hardware synths and vocals or acoustic instruments, as long as your audio interface provides enough inputs you won't necessarily need to buy a hardware mixing console.

Today, many musicians start this process in reverse, by first buying their PC and then looking at what needs to be added to it for making music. Ironically, freed from the preconception that a mixing console is required, many adopt the much simpler approach of relying totally on the facilities of their audio interface, which may result in better audio quality. When I started recording my music, many years ago, I learned the hard way that even keyboards and hardware synths can sound significantly better if you listen to them plugged straight into a power amp/speakers rather than first passing them through a budget mixing console, as handy and versatile as these are.

Every device you pass your audio through affects its sound quality slightly, even when EQ controls are set to their central positions or bypassed. Some expensive devices can add some desirable 'fairy dust' to the proceedings during recording, but we want playback to be as neutral and transparent as possible, so that we can mix our songs knowing that they will 'travel well' and essentially sound the same on other systems.

Thus one of the incidental advantages of recording directly to the inputs of your audio interface and playing back directly from its outputs to a pair of loudspeakers or headphones is that the audio signal path often ends up considerably less complex, and this may mean that the end result sounds cleaner and more transparent. Some musicians also find that replacing a large mixing desk with a comprehensive multi-channel audio interface gets rid of nasty early reflections between their speakers and listening position, thus improving the acoustics of their studio! You can see various suggested setups with simple audio paths in the diagrams opposite.

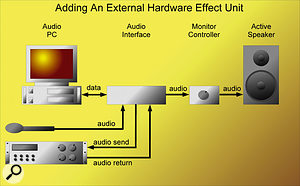

As long as you have some spare inputs and outputs you can plumb in external effect boxes if you need to, although they will be subject to buffer delays (see main text).

Soft Synths: Poor Relations?

If you're considering creating all your music inside a PC, it's important to make sure that the software synths and plug-ins you choose provide audio quality on a par with their hardware counterparts. When I first broached the subject of the software studio, way back in SOS November 2000, many musicians remained unconvinced that a software version could ever sound as good as the 'real thing'. However, my view remains exactly the same as it did then: there's absolutely no fundamental reason why a software synth should sound any different from a hardware synth containing DSP chips.

My only reservation at the time was that, unlike developers programming for dedicated DSP chips, who could splurge processing power on a few luxury plug-ins, those designing for native (computer) processing had to keep overheads as low as they reasonably could, to avoid accusations that their products were 'CPU hungry'. This could result in corner-cutting. However, whereas in 2000 we were getting excited about 750MHz processors, we now have dual-core versions in which each core is clocked more than four times as fast. With two cores each running four times faster, we have roughly eight times the processing power, and corner-cutting can become a thing of the past.
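The 'eight times' figure is just clock speed multiplied by core count. This sketch assumes a 3GHz clock as a stand-in for 'over four times as fast', and, like the rough arithmetic in the text, it ignores architectural improvements and assumes the audio software can split its work evenly across both cores.

```python
def relative_throughput(old_mhz, old_cores, new_mhz, new_cores):
    """Crude processing-power ratio: clock speed multiplied by core count.

    Ignores architectural improvements and assumes the audio software can
    spread its load evenly across every core.
    """
    return (new_mhz * new_cores) / (old_mhz * old_cores)


# Assumed figures: a 750MHz single-core CPU from 2000 versus a
# hypothetical dual-core CPU clocked at 3GHz (3000MHz) per core.
ratio = relative_throughput(old_mhz=750, old_cores=1, new_mhz=3000, new_cores=2)
print(f"Roughly {ratio:.0f} times the raw processing power")
```

In practice, real-world gains depend on how well a given sequencer and its plug-ins make use of the second core.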

However, one area does catch people out. While many hardware synths incorporate multi-effects that result in all their presets sounding polished and complete (but which sometimes have to be disabled because they can be overpowering when heard in the context of a complete mix), software synths can sometimes sound raw in isolation (other descriptions I've noticed on forums include "tinny" and "thin", or just "bad", but they are all referring to the same thing). This is simply because their developers expect that you'll mostly be using plug-in effects to mould them to perfectly fit your songs.

Moreover, polished tracks generally require a lot of creative input, so it's perhaps not surprising that some beginners feel disappointed when they create a track featuring a clutch of soft synths and it sounds a bit flat in comparison with commercial music. This isn't because software "sucks"; it's more the case that a little more effort may be required in honing the sounds and effects.

Monitor Controllers: Some Options

If your sound creation is done entirely in software, the very simplest hardware setup is to connect the output of your PC's audio interface directly to a pair of active monitor speakers, or to a power amp and pair of passive speakers (see the first diagram on the previous page). With some acoustic treatment in your studio, the results obtainable via this simple audio path can be superb.

However, such a minimalist approach has one possible drawback: your audio interface really needs an analogue output-level control so that you can easily adjust the speaker volume. Using the digital faders found in the software Control Panel utilities included with many audio interfaces not only throws away digital resolution, but also runs the risk of a full-strength signal accidentally reaching your speakers or your ears. Some active speakers do have level controls, but jumping up and down to adjust them is tedious, especially when there are usually two controls (one on the back panel of each speaker) whose positions you have to keep in sync to maintain a balanced stereo image.
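Why does a digital fader throw away resolution? Roughly, every 6dB of digital attenuation leaves the signal using one fewer bit of the converter's word length. The sketch below applies that rule of thumb to a 24-bit output. It is a simplified model that ignores dither and the converter's own noise floor, which in practice typically limit usable resolution well before the nominal word length.

```python
import math

# Each bit of word length is worth about 6.02dB of dynamic range: 20 * log10(2).
DB_PER_BIT = 20 * math.log10(2)


def effective_bits(word_length, attenuation_db):
    """Approximate resolution left after turning a full-scale signal down digitally.

    Simplified model: ignores dither and the converter's own noise floor.
    """
    return word_length - attenuation_db / DB_PER_BIT


for atten in (0, 12, 30, 48):
    print(f"24-bit output turned down {atten}dB: about {effective_bits(24, atten):.1f} bits left")
```

An analogue level control after the converter avoids this, because the signal leaves the D-A converter at full resolution and is only turned down afterwards.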

Few audio interfaces provide an analogue output-level control, so if you dispense with a hardware mixer you may need a monitor controller to provide this function.

Sadly, few audio interfaces currently provide a dedicated analogue output-level knob, so many musicians are instead buying a 'monitor controller' to perform this function. Recommended models include the Samson C-control (www.samsontech.com) for about £80, Mackie's Big Knob (www.mackie.com) for about £250, and the £400 Presonus Central Station (www.presonus.com). All offer three sets of switched outputs so that you can connect up to three pairs of speakers to hear how your mixes translate to other systems, plus talkback functions with built-in microphones, allowing you to talk to musicians in your live room, if you have one. The Presonus model also features a totally passive audio path (no power supply required, and containing no transistors, FETs or integrated circuits), so it can't add any background noise, distortion or other colouration to your audio as it passes through, although the relatively simple active path of the other two should only have a tiny effect on your sounds.

If you don't have multiple speakers or require talkback functions there are several simpler products available, all of which feature high-quality passive components. The £99 PVC (Passive Volume Control) from NHT (www.nhthifi.com) features a clearly calibrated, large rotary knob in a small one-third-rack width or desktop unit. It allows precise 1dB level adjustments over a 40dB range and features completely balanced operation via its Neutrik combo XLR/TRS input jacks and XLR outputs. Another, slightly more sophisticated, alternative is SM Pro's M-Patch (www.smproaudio.com). Once again, this one costs about £99, and it has identical I/O socketry, but also offers two switched inputs, each with its own rotary level control (albeit with smaller knobs), and two switched outputs, all housed in a half-rack-width case. SM Pro have also just launched a new M-Patch 2, at about the same price, with the same passive main signal path, but with larger knobs, additional stereo/mono and mute switches and a budget headphone amp with its own level control.
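To put a '40dB range' in perspective, a decibel figure converts to a voltage ratio as 10^(dB/20). The sketch below assumes the control runs from 0dB down to -40dB in whole-decibel steps (the exact detent layout of any particular unit is an assumption here), and shows that the bottom of the range passes just one hundredth of the input voltage.

```python
def db_to_voltage_ratio(db):
    """Convert a level change in decibels to a voltage (amplitude) ratio."""
    return 10 ** (db / 20)


# Assumed layout: whole-decibel positions from 0dB down to -40dB.
positions = [-step for step in range(0, 41)]
print(f"{len(positions)} positions")
for db in (0, -1, -6, -20, -40):
    print(f"{db:>4}dB -> output is {db_to_voltage_ratio(db):.3f} x the input voltage")
```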

While we're talking about hardware, there's also a lot to be said for the dedicated control surface. A knob for each function makes real-time sound-tweaks far more intuitive. Once audio reaches your MIDI + Audio sequencer, you can use one of the many available control surfaces to do your mixing, sound editing, synth tweaks, and so on, just like you did with hardware.

Software Studio Advantages

The biggest advantage of the software studio is undoubtedly that each song is stored along with all the sounds it uses, plus effect settings, mix settings, and so on — in other words you have total recall, just like really expensive analogue mixing desks with motorised faders that remember their positions. Your entire sound library also resides on the computer, making it very easy to audition many different sounds with a few mouse clicks, instead of laboriously loading sample and synth banks into your hardware. Nearly all software sequencers also let you capture real-time movements of on-screen faders and other controls, alterations to sounds, or tweaks to plug-in effects. This automation goes far beyond what was possible a few years back, and enables far more creative possibilities.
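Capturing a fader movement essentially boils down to storing timestamped values and replaying them. Here's a minimal sketch; the class and method names, the use of beats for time, the 0.0 to 1.0 value range and the straight-line interpolation between points are all assumptions for illustration, not how any particular sequencer stores its automation.

```python
from bisect import bisect_right


class AutomationLane:
    """Hypothetical automation lane: timestamped values for one control.

    Times are in beats and values run from 0.0 to 1.0; both are illustrative
    assumptions, as each sequencer stores automation in its own format.
    """

    def __init__(self):
        self.times = []   # kept sorted
        self.values = []  # values[i] belongs to times[i]

    def record(self, time, value):
        """Capture one control movement, keeping points in time order."""
        idx = bisect_right(self.times, time)
        self.times.insert(idx, time)
        self.values.insert(idx, value)

    def value_at(self, time):
        """Replay: interpolate linearly between points, holding the end values."""
        if not self.times:
            raise ValueError("no automation recorded")
        idx = bisect_right(self.times, time)
        if idx == 0:
            return self.values[0]
        if idx == len(self.times):
            return self.values[-1]
        t0, t1 = self.times[idx - 1], self.times[idx]
        v0, v1 = self.values[idx - 1], self.values[idx]
        return v0 + (v1 - v0) * (time - t0) / (t1 - t0)


# A fader pushed from silence to full level over one 4/4 bar.
fader = AutomationLane()
fader.record(0.0, 0.0)
fader.record(4.0, 1.0)
print(fader.value_at(2.0))
```

Because the lane is just data, it is saved along with the song, which is what gives the software studio its total recall.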

With the audio quality from soft synths such as Arturia's Moog Modular V being so good, is there any longer a need to worry about replacing hardware with software?

Being able to memorise absolutely everything means that you never have to worry about losing track of a song: even if you have to leave it for months, when you come back to it every setting and sound will be perfectly preserved. This is great not only for the home studio owner, but also for professional bands, producers and recording engineers. Anyone who requires lots of different mixes and remixes to suit different markets round the world also benefits: they can load up any song from any album within seconds, start on a new version and save it under a different file name, without disturbing the original.

The Other Side Of The Coin

It's only fair to point out that the rise in popularity of the software studio has had some repercussions in the wider musical world, both good and bad. Those who have started to replace their old hardware synths with software equivalents and now want to sell the hardware to make more space in the studio will find that second-hand prices seem ridiculously low (I just can't bear to part with my immaculate Korg M1 for £50, for instance). On the other hand (as you'll see if you visit the SOS Forum), there are still plenty of musicians who swear by hardware rather than at it, so now's the time to snap up lots of bargains.

One-box Songwriting

Many PC musicians prefer the all-in-one approach of 'software studio' applications such as Propellerheads' Reason, Cakewalk's Project 5, Arturia's Storm and Image Line's FL Studio, where a single program contains a virtual version of everything you might find in an electronic music studio, including synths, sample players, drum machines, effects, a sequencer to record and play back the notes, and an audio mixer to mix them all together. However, for a more open-ended approach, the flexible MIDI + Audio sequencer still reigns supreme, with Cubase and Sonar probably the most popular on the PC.

Some PC audio applications, such as Gigastudio and Audiomulch, prefer to run in stand-alone mode rather than inside a sequencer, so if you have to run them alongside a MIDI + Audio sequencer you'll have to find a way of combining their audio outputs. The traditional way is to allocate the final stereo output of each application to a different stereo output on your audio interface and then plug them into different channels on a hardware mixing console (I've worked in this way for years).

However, there are various options if you don't have a mixing console and want to keep your signal path as short as possible. One is to buy an interface with multi-client drivers that allow different applications to be allocated to the same pair of stereo outputs. You can't always tell this is possible from the documentation, but I've done it with quite a few interfaces over the years.

If you want to run several music software applications side by side, there are various ways to combine their outputs. One of the most elegant is ESI Pro's Direct WIRE utility, which lets you plumb the output of one application into the input of another, allowing one app to record the contribution of the other.

Other interfaces may provide several stereo playback channels that can be individually allocated to different applications, but have a DSP mixer utility that lets you mix these playback outputs to emerge from a single physical output socket on the interface. Examples of these on the PC include most models from Edirol, Emu, ESI, M-Audio and Terratec. A few, like Echo's Mia, provide several virtual outputs that you can allocate to different applications and then mix, to emerge from its single physical stereo output.
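Conceptually, an interface's DSP mixer adds the playback streams together, sample by sample, before they reach the output converters. This sketch shows the idea in plain Python; the function name, the floating-point samples normalised to ±1.0 and the clip-at-full-scale behaviour are assumptions, and real interfaces do this in their own DSP hardware.

```python
def mix_streams(streams, gains):
    """Sum several stereo playback streams into one stereo output pair.

    streams: one list of (left, right) samples per application, normalised
             to +/-1.0 full scale and all the same length (an assumption).
    gains:   one linear gain per stream, standing in for the mixer's faders.
    """
    if len(streams) != len(gains):
        raise ValueError("need one gain per stream")
    length = len(streams[0])
    if any(len(s) != length for s in streams):
        raise ValueError("streams must be the same length")
    mixed = []
    for i in range(length):
        left = sum(g * s[i][0] for s, g in zip(streams, gains))
        right = sum(g * s[i][1] for s, g in zip(streams, gains))
        # Clamp to full scale, as a fixed-point output stage would clip.
        mixed.append((max(-1.0, min(1.0, left)), max(-1.0, min(1.0, right))))
    return mixed


# Two hypothetical applications sharing one physical stereo output.
sequencer = [(0.5, 0.5), (0.25, -0.25)]
stand_alone_sampler = [(0.25, 0.0), (0.5, 0.5)]
mixed_output = mix_streams([sequencer, stand_alone_sampler], gains=[1.0, 0.5])
print(mixed_output)
```

The clamp is why it pays to leave some headroom on each playback channel: two healthy streams can easily sum past full scale.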

The only disadvantage of these approaches is that although you hear all the applications mixed together through your speakers, at some stage you'll need to record this combined output. Some interfaces provide very useful internal loopback options, the best known being the Direct WIRE utility functions of the ESI range (see screen above), which let users route the inputs and outputs of any of its MME, WDM, ASIO or GSIF driver formats to each other, so they can record the output of one application in another and capture it as an extra audio track.

Other interfaces with integral 'zero latency' DSP mixers may let you re-record the output of their mixer, so you can capture any combination of the software playback channels from different applications, plus additional signals arriving at the interface inputs, as a new 'input' in your sequencer. Failing this, you can nearly always loop cables from the interface outputs back to its inputs, to record the combined signal, although if you have to rely on analogue I/O your final audio mix will have to pass through both D-A and A-D converters, lengthening the audio path and compromising audio quality slightly.

If your particular interface doesn't provide any of these options, there may yet be another internal mixing solution, this time thanks to software. Rewire is a technology introduced by Propellerheads that's now included in quite a few audio applications, including Ableton Live, Adobe Audition, Apple Logic Pro, Arturia Storm, Cakewalk Sonar and Project 5, Digidesign Pro Tools 6.1, MOTU Digital Performer, Propellerheads' own Reason, RMS Tracktion, Sony Acid, Steinberg Cubase and Nuendo, and Tascam Gigastudio 3. If your audio applications support Rewire, one can act as the 'synth' to generate sounds, while the other becomes the 'mixer', receiving one or more output streams from the synth while keeping both applications locked in perfect sync.

Software Studio Monitoring

For the very simplest audio path, look for an audio interface that provides a dedicated and extremely handy analogue output level control, like the Focusrite Saffire shown here, which also offers built-in DSP effects that you can use during recording, with no latency.

Nowadays, nearly all audio interfaces offer monitoring functions, so you needn't be too apprehensive about abandoning a traditional hardware mixer when 'going soft'. So-called zero-latency monitoring basically lets you hear the signals you're recording almost instantly, by providing a direct link between the output of the audio interface's A-D converters and the input of its D-A converters. Incoming audio is therefore only subject to conversion delays, which generally amount to about 2ms (1ms for each converter). This is negligible, roughly equivalent to listening to audio from a loudspeaker just two feet away, and even vocalists shouldn't have many problems hearing their voices on headphones with such a short delay.
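The loudspeaker comparison is easy to check, since sound travels at roughly 343 metres per second in air (the exact figure varies with temperature). This short sketch converts monitoring delays into equivalent listening distances; 2ms works out at about two and a quarter feet.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # in air, at roughly 20 degrees C
METRES_PER_FOOT = 0.3048


def latency_to_distance_ft(latency_ms):
    """How far sound travels through air in the given delay, in feet."""
    metres = SPEED_OF_SOUND_M_PER_S * latency_ms / 1000
    return metres / METRES_PER_FOOT


for delay_ms in (2, 5, 8, 14):
    print(f"{delay_ms}ms delay is like standing {latency_to_distance_ft(delay_ms):.1f} feet from the speaker")
```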

Unfortunately, if you want to add plug-in effects to the signals being recorded (for instance, many vocalists like to hear some reverb on their performance to help them with pitching, while electric guitarists may want to pass their instruments through distortion or amp-modelling plug-ins), the signals have to make the additional journey into the PC's CPU and back out again. With a fairly typical audio interface latency setting of 6ms, the total delay becomes 14ms (1ms through the A-D converter, 6ms through the interface's input buffer, a further 6ms through its output buffer, and a final 1ms through the D-A converter).

This 14ms delay is borderline acceptable for most players of fretted and keyboard instruments, but way above the comfort zone for vocalists. You can try reducing your audio interface buffer size to 3ms, for a total path delay of around 8ms, while some musicians even try a 1.5ms setting, for a total delay of around 5ms. The only disadvantage of this drop in latency is that your CPU overhead may rise considerably.
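These delay figures are a simple sum, which the sketch below reproduces. It assumes, as the text does, 1ms per converter and identical input and output buffers; real drivers may add further safety buffering, so treat the results as a best case. The second function converts a buffer size in samples to milliseconds, since many driver control panels express buffer size that way; the 256-sample example is hypothetical.

```python
def monitoring_delay_ms(buffer_ms, converter_ms=1.0):
    """Total delay when monitoring through plug-ins: A-D, input buffer, output buffer, D-A."""
    return converter_ms + buffer_ms + buffer_ms + converter_ms


def buffer_length_ms(buffer_samples, sample_rate_hz):
    """Convert a buffer size in samples to milliseconds."""
    return 1000 * buffer_samples / sample_rate_hz


for setting in (6, 3, 1.5):
    print(f"{setting}ms buffers -> {monitoring_delay_ms(setting):g}ms total")

# A hypothetical 256-sample buffer at 44.1kHz.
print(f"256 samples at 44.1kHz = {buffer_length_ms(256, 44100):.1f}ms")
```

Halving the buffer halves the buffer contribution, but the CPU then has to process audio in smaller chunks more often, which is where the extra overhead comes from.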

One way around this situation is to choose an audio interface with built-in DSP effects, such as Focusrite's Saffire or one of Emu's DAS models. The beauty of these effects is that they also have zero latency, and you can apply them to vocals and instruments when monitoring, even if you intend to actually record the signals dry. Another clever twist can be found in M-Audio's Firewire 1814 interface, which features an Auxiliary buss that you can either use for setting up a separate monitor mix to send to headphones, or for adding external hardware effects to live inputs.

Outside Help

Even if you want to generate the bulk of your sounds using software applications, you may still want to occasionally plumb in some hardware. If you've got a hardware synth that offers unique sounds, you can record its output directly into your PC's audio interface as an audio track. If you've got several such synths, you simply need an extra mono or stereo input for each one (see the third diagram on page 161) and can then record or audition them all simultaneously, in real time, with added plug-in effects.

There may also be times when you want to treat one or more software-generated tracks with hardware effects that you can't duplicate in software — perhaps a favourite EQ, compressor or reverb, for instance. To connect external effect boxes, you once again need some spare inputs and outputs on your audio interface to act as send and return channels (see the last diagram on page 161). I covered this topic in more depth back in SOS March 2004, in a feature on using hardware effects with your PC software studio.

If you like the idea of the software studio but want to give your PC a helping hand with effects processing, you could consider installing one or more dedicated audio DSP cards, such as the TC Powercore or Universal Audio UAD1, inside your PC, to provide 'hardware quality' effects that simply appear to your PC as extra VST plug-ins.

If you find that a single PC still doesn't have enough clout to run all the software synths and plug-ins you fancy, you could create a PC network to share the load, as described in SOS August 2005 in my PC Musician feature on spreading your music across networked computers. One of the options outlined there, using FX Teleport (www.fx-max.com), relies on LAN (Local Area Network) connections to ferry both MIDI and audio to your additional PCs, with all the mixing still being carried out digitally by your main PC sequencer application, so your analogue audio path can still remain simple for optimum quality.

Finally, with sufficient spare I/O channels on your audio interface you could even patch in an analogue summing mixer such as the Audient Sumo (reviewed SOS February 2006), to replace software digital mixing of all your audio channels with analogue hardware mixing, while still avoiding a traditional mixing console. However, I suspect this is going too far. In most setups your audio will sound significantly better if you put the money you'd have spent on a summing mixer towards an interface with better converters in the first place, and keep the shortest possible analogue signal path.