The advice of professional acousticians can be hard to evaluate on paper — but what if you could actually hear the results of their work before building starts?

"If you have a recording space that is 100 square metres and four metres high, and you tell the client 'You're gonna have a 0.7 second reverberation time, is that OK for you?', the client usually says 'I don't know. How does it sound?' Because it's hard to relate these kinds of numbers to a sound, even for some professionals. They can say 'I like that room, that sounds great.' But is it 0.6? Is it 1 second? It makes it a lot easier to actually listen to it."

Dirk Noy is the founder of WSDG's Swiss operation.

Gabriel Hauser joined soon after WSDG was set up, and both he and Dirk have been involved in hundreds of projects since.

Gabriel Hauser is explaining the motivation behind the AcousticLab, an innovative tool developed by the acousticians and studio design team at the Walters-Storyk Design Group. The idea is that clients no longer have to make choices on the basis of visual mock-ups and dry data. Instead, they can actually hear what WSDG's work will achieve, and audition different choices that are better made at the design stage than after the fact.

"Let's say you have a client here and you want to discuss materials for the ceiling treatment, for example," continues WSDG's Dirk Noy. "You have a choice between the expensive ceiling cloud, which is super reflective or super absorptive, a medium-priced version, and a cheap version that the carpenter from around the corner could make. You can have the client make a decision based on numbers and graphs, but you can also have them listen to it. We can model all the three cases, and we can have an intelligent conversation about it. The thinking behind it is that we can have the dialogue with people that have no acoustical background, and don't know the terminology, but they still know how to listen."

Real & Virtual Models

At present, there are three AcousticLabs, located in New York, Berlin and Basel. I visited Gabriel and Dirk at the Swiss site, to experience the lab for myself and find out more about how it works.

Acoustical simulations of one sort or another have actually been around for a surprisingly long time. From the 1930s onwards, the acoustic properties of a building would be tested by constructing a miniature version of that building at scales of 1:20, 1:10 or even 1:8. High-frequency test signals would then be fed into these models. "People built little scale models and took measurements in them," says Dirk. "It had a tiny source, usually a spark generator; you had little receivers with microphones and then you could measure and then scale the measurement result and learn something about the absorption characteristics, reverb times et cetera of that space. And it's still being done for bigger projects."

There are, however, obvious drawbacks to this approach. For one thing, the need to scale the results down by a factor of 10 or 12 means that you need to generate and record test signals that extend to 30 to 40 kHz, and even then, you won't learn much about how the real building will behave at frequencies higher than 3 or 4 kHz. For another, it's expensive and time-consuming, as Dirk relates: "Unfortunately, when you have to change the front of the balcony railing or something, you have to actually bring in real stuff to make the change, or perforate it for real. Then you find, 'Oh, no, I made too many holes and I have to redo the whole thing,' so another three days are lost! So this is a nice approach, but very few projects actually support this type of procedure."
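The frequency limit follows from simple scaling: sound in the model behaves like sound in the real building at a frequency lower by the scale factor. As a rough illustration only, the arithmetic can be sketched in Python. The function names here are made up for this example and are not part of any tool WSDG use.

```python
def full_scale_frequency(model_frequency_hz, scale_factor):
    """Real-building frequency that a scale-model measurement represents."""
    return model_frequency_hz / scale_factor


def required_model_frequency(full_scale_hz, scale_factor):
    """Test-signal frequency needed in the model to probe a real frequency."""
    return full_scale_hz * scale_factor


# Scale factors and test-signal limits quoted in the text above.
for scale_factor, model_khz in [(10, 30), (10, 40), (12, 40)]:
    real_hz = full_scale_frequency(model_khz * 1000, scale_factor)
    print(f"1:{scale_factor} model, {model_khz} kHz test signal "
          f"-> about {real_hz / 1000:.1f} kHz in the real building")
```

Run the other way, the same relationship shows why the approach runs out of road: probing 10 kHz in the real building with a 1:10 model would call for test signals and microphones that work at 100 kHz.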

On all but the largest projects, physical scale models have now been replaced by virtual counterparts. Of course, architects too use CAD tools to model their structures; in theory, these architectural models could be used as a basis for acoustic calculations, but WSDG almost always prefer to create their own. "You can take an AutoCAD drawing and put it into the acoustical simulation environment, but the level of detail is too high in the architectural drawing," says Dirk. "There might be a door handle that you don't really want to have in your acoustical model because it will increase the calculation time tenfold without giving any additional information. So we usually make the models ourselves."

"Creating the model is an acoustician's work, really," agrees Gabriel. "For example, there could be a screen that I don't need to include in an acoustic model because it's acoustically transparent, it's just a visual screen. Or if a column is really small, say 10 centimetres in diameter, I just leave it out, because for the wavelengths that we're looking at, it's too small. So creating the model is the first step that already needs acoustic knowledge. That's why we don't use architectural models, except as a baseline."

Conversely, an acoustic model can also include data that might not be part of a standard architectural model, most obviously the reflective and absorptive properties of the materials involved.

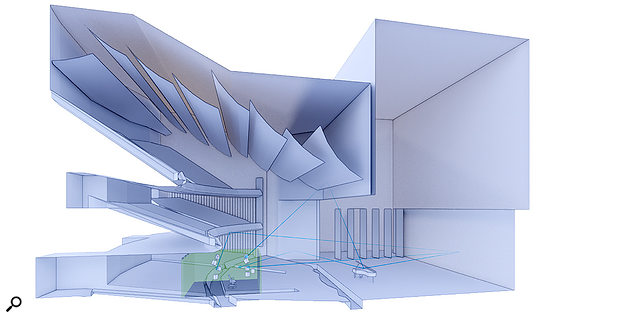

WSDG use a program called EASE to generate 3D models of building interiors.

Rays, Cones & Pyramids

Once all the acoustically significant surfaces have been incorporated into the model, it can be used to generate not only a visual but also an auditory simulation of the space. Key to this is the use of a technique derived from ray-tracing. The user specifies where in the room a hypothetical source (such as a musician or a PA speaker) should be placed, along with the location and directivity of a virtual multi-channel microphone array capturing that source. WSDG's software will then project paths extending outwards in all directions from the source. If these do not reach the receiver either directly or after a given number of reflections from walls and other surfaces, they are disregarded. If they do reach the receiver, they are adjusted for the absorption coefficients of the materials they've encountered along the way, and an impulse response is generated. Eventually, all of these responses are summed to create a single impulse response that represents the complete sound captured by that virtual microphone for the chosen source.
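To make the summing step concrete, here is a deliberately simplified Python sketch. It is not WSDG's software or the algorithm used in EASE. It assumes the tracing has already been done and that each surviving path is described only by its total length and the absorption coefficients of the surfaces it hit. The `Path` structure, the single broadband coefficient per surface, the 1/r spreading loss and the speed of sound value are all assumptions made for illustration; a real simulation would work per frequency band and take account of source and microphone directivity.

```python
import math
from dataclasses import dataclass

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 °C


@dataclass
class Path:
    """One traced route from source to receiver (hypothetical structure)."""
    length_m: float     # total distance travelled along the path
    absorption: tuple   # absorption coefficient (0 to 1) of each surface hit


def path_amplitude(path):
    """Pressure amplitude arriving at the receiver along one path."""
    gain = 1.0
    for alpha in path.absorption:
        # A surface absorbs a fraction alpha of the energy, so the
        # pressure amplitude is scaled by sqrt(1 - alpha) per reflection.
        gain *= math.sqrt(1.0 - alpha)
    return gain / path.length_m  # simple 1/r spreading loss (assumption)


def impulse_response(paths, sample_rate, max_reflections):
    """Sum the contributions of all usable paths into one impulse response."""
    # Paths with more reflections than the chosen limit are disregarded.
    kept = [p for p in paths if len(p.absorption) <= max_reflections]
    if not kept:
        return []
    # Arrival time of each path, rounded to the nearest sample.
    delays = [round(p.length_m / SPEED_OF_SOUND * sample_rate) for p in kept]
    ir = [0.0] * (max(delays) + 1)
    for p, d in zip(kept, delays):
        ir[d] += path_amplitude(p)
    return ir


# Direct sound plus one reflection from a fairly absorptive surface.
example_paths = [Path(3.43, ()), Path(6.86, (0.75,))]
example_ir = impulse_response(example_paths, sample_rate=48000, max_reflections=2)
```

The direct sound shows up as the first spike in `example_ir`, and the reflection arrives later and quieter, having travelled twice as far and lost three quarters of its energy at the surface.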

This graphic explains, conceptually, the principles behind the AcousticLab. Paths that sound can travel are traced from a hypothetical source (represented by the piano) to a number of simulated microphones located around a hypothetical listening position. The positions and orientations of these microphones correspond exactly to the placement of the loudspeakers around the sweet spot in the AcousticLab itself.

"The ray-tracing or cone-tracing or pyramid-tracing algorithms radiate energy from the source in a certain room area and see what happens with it, if it bounces back towards the receiver or not," explains Gabriel. "So they actually follow cones of sound, so to speak, and if one of those hits the receiver, you start to narrow it down and look at that cone a bit more precisely."

When suitable impulse responses have been obtained, these can be convolved with test tones for measurement, but they can also be applied to anechoic recordings, to create an auralisation that recreates the experience of standing in the as-yet-unbuilt concert hall, live room or railway station. The directivity, position and number of the virtual microphones are directly related to the intended playback system. For example, the AcousticLab in WSDG's Berlin office uses an Ambisonics speaker array to recreate the soundfield at the virtual listening position. Modelling a space for playback on a first-order Ambisonics rig would involve creating impulse responses for three orthogonal figure-8 microphones and one omni, all positioned at the same point in space. In theory, you could also create a binaural auralisation for headphone listening, by mimicking what would be captured on a pair of in-ear mics.
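As a rough sketch of what this means in practice, the Python below shows the two ingredients: the gains with which one omni and three orthogonal figure-8 microphones would pick up a sound arriving from a given direction, and the convolution of a dry recording with one impulse response per microphone. The function names and the W/X/Y/Z channel labels are choices made for this example, and the omni channel is given unit gain. Ambisonics conventions differ on normalisation and channel order, so this should not be read as the format used in the AcousticLab.

```python
import math


def first_order_gains(azimuth_deg, elevation_deg):
    """Pickup gains of one omni and three figure-8 mics for one arrival."""
    az = math.radians(azimuth_deg)   # 0 = straight ahead, 90 = to the left
    el = math.radians(elevation_deg) # 0 = horizontal, 90 = directly above
    return {
        "W": 1.0,                          # omni: equal from all directions
        "X": math.cos(az) * math.cos(el),  # figure-8 pointing forwards
        "Y": math.sin(az) * math.cos(el),  # figure-8 pointing left
        "Z": math.sin(el),                 # figure-8 pointing upwards
    }


def convolve(signal, impulse_response):
    """Direct-form convolution of two sample lists."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def auralise(dry_signal, channel_irs):
    """Apply one impulse response per virtual microphone to a dry recording."""
    return {name: convolve(dry_signal, ir) for name, ir in channel_irs.items()}


# A sound arriving from straight ahead is picked up by the omni and the
# forward-facing figure-8, but not by the sideways or upward ones.
front = first_order_gains(0, 0)

# Toy example: a two-sample "recording" through a direct sound plus one
# half-strength reflection two samples later, on the omni channel only.
result = auralise([1.0, 2.0], {"W": [1.0, 0.0, 0.5]})
```

The nested-loop convolution is written for readability rather than speed. Impulse responses for real rooms run to tens of thousands of samples, where FFT-based convolution would be the usual choice.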