After 85 years of active service, the humble VU meter remains as useful as ever in today’s digital studios — despite BBC engineers nicknaming it ‘virtually useless’!

As I was listening to David Mellor’s SOS Podcasts about gain‑staging (www.soundonsound.com/author/david-mellor) recently, my ears pricked up at his passing comment that “BBC engineers refer to the VU meter as virtually useless.” It wasn’t a surprise, as I was told exactly that during my initial BBC training in the early 1980s, and I believed it for years. But I came to understand that the claim was, in fact, based on a fundamental misunderstanding of how the VU meter was designed to be used!

My view today — and it’s shared by many professional mastering and recording engineers all around the world — is that the VU meter remains very useful, even in today’s digital studios. So, in this article, I’ll take you through the virtues and practical benefits of the octogenarian VU meter, and explain how the BBC got it so wrong. To do that, we need to start with a little history...

Origins: PPMs & SVIs

The SVI meter (the first to be scaled in Volume Units) was described in a paper published in 1940, and its core specification remains in use today.

The VU meter was conceived in 1939, through a collaboration between research company Bell Labs and the American broadcasters CBS and NBC, and a paper they published in 1940 described what they called the Standard Volume Indicator (SVI). As the SVI meter’s scale was calibrated in ‘volume units’ (and marked ‘VU’), it became known popularly as the VU meter, in much the same way that the modern BS.1770 Integrated Loudness meter is often called simply an ‘LUFS meter’. It’s testament to the genius of those 1940s engineers that the SVI’s core specification lives on today, virtually unchanged, as IEC 60268‑17:1990 Sound System Equipment. Part 17: Standard Volume Indicators.


Of course, various audio meters were already available in the 1930s, so why exactly did CBS, NBC and Bell Labs feel the need to design their own? Well, in the late 1930s, radio broadcasting was a rapidly expanding business globally, and there was a requirement to monitor and control broadcast programme levels consistently and reliably. Over in Europe, many national broadcasters had developed their own metering systems, and nearly all were Peak Programme Meters (PPMs or, more accurately, QPPMs; see the ‘Quasi‑PPM’ box for more on this). To protect the transmitters from overload, European engineers believed it was necessary to monitor peak programme levels, and their work resulted in the DIN (German), Nordic (Scandinavian) and BBC (British) PPMs. Although these each had different scales, they all employed similarly complicated, valve‑based active electronics to detect and display pseudo‑peak levels.

Such complex metering systems, though, were relatively expensive to manufacture, so although the American broadcasters were aware of the European designs, they thought them impractical for use across the vast US radio industry. What they wanted was a simpler, more affordable, passive solution that, ideally, would indicate the average signal level in a way that reflected how listeners perceive volume.

The result was the SVI, which comprised just three passive elements: an adjustable attenuator, a copper‑oxide rectifier and a bespoke moving‑coil meter, built to detailed specifications in terms of its sensitivity and needle ballistics. The rectifier was required to convert the AC audio signal into a DC voltage that could move the meter, and the passive copper‑oxide type they specified avoided the expensive valves employed in European PPMs, as well as the associated power supply. The bespoke moving‑coil meter’s relatively slow (300ms) needle rise and fall times ensured the meter would register low‑frequency and sustained sounds better than brief transient peaks, so its readings correlated reasonably closely with perceived audio volume; hence the decision to use the Volume Unit scale. By far the most complex and expensive element was the constant‑impedance variable attenuator, which had to maintain a consistent 600Ω load across the line being metered.
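To illustrate how slow ballistics favour sustained sounds over brief peaks, here’s a minimal Python sketch of a VU‑style envelope follower. It’s a simplification under stated assumptions: full‑wave rectification followed by a single first‑order smoothing filter, tuned so that a steady signal reaches 99% of its final reading after 300ms. Real VU movements behave as second‑order mechanical systems, specified with a small overshoot, so the function name vu_envelope and its rise_time_s parameter are purely illustrative.

```python
import math

# For a sine wave, RMS = (pi / (2 * sqrt(2))) * rectified average, so scaling the
# averaged envelope by this factor makes a steady sine read its RMS value.
SINE_CAL = math.pi / (2.0 * math.sqrt(2.0))


def vu_envelope(samples, sample_rate, rise_time_s=0.3):
    """Approximate VU-style envelope: full-wave rectify, then one-pole smoothing.

    Assumption: a first-order low-pass whose step response reaches 99% of its
    final value after rise_time_s. Real VU movements are second-order systems
    with a small specified overshoot, so this is only a sketch.
    """
    tau = rise_time_s / math.log(100.0)  # 1 - exp(-t / tau) reaches 0.99 at t = rise_time_s
    alpha = 1.0 - math.exp(-1.0 / (sample_rate * tau))
    env = 0.0
    out = []
    for x in samples:
        env += alpha * (abs(x) - env)  # abs() plays the rectifier's role
        out.append(env)
    return out


fs = 48000
step = vu_envelope([1.0] * round(0.3 * fs), fs)
print(f"Step response after 300ms: {step[-1]:.3f}")  # 0.990

tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]
print(f"1kHz sine (peak 1.0) reads about {vu_envelope(tone, fs)[-1] * SINE_CAL:.3f}")
```

Because the rectified average of a sine is 2/π of its peak value, the SINE_CAL factor scales the envelope so that a steady sine of peak 1.0 reads very close to its RMS value of about 0.707, mirroring the way VU meters are calibrated with steady tone.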

A glimpse inside SIFAM’s old MkIV VU meter. Note that, as with all VU meters, there are two scales on the front: one in Volume Units, and the other showing modulation from 0 to 100%. Photo: SIFAM

Back in the 1930s (and well into the ’70s), professional audio interfacing used a matched‑impedance format rather than the matched‑voltage approach of today, and the variable attenuator was an essential element of this meter: it adjusted the moving‑coil meter’s native sensitivity so that the level present on the line being monitored could be assessed accurately. Today, we expect metering to indicate the signal level directly: if you look at a conventional sample‑peak bar‑graph in your DAW, you can see whether the signal is peaking at 0dBFS or ‑10dBFS, or whatever. But the SVI was designed to be used differently, and this explains its relatively limited and cramped scale range. In practice, the SVI was wired across the signal line to be monitored, and the attenuator was adjusted until the meter hovered just below the 0VU mark (nearly 100% modulation). It was actually the resulting attenuator setting, rather than the meter display itself, that told the user the nominal signal level. The SVI’s attenuator covered levels from 0 to +24dBm, which made it suitable both for standard studio lines (which typically operated at +8dBm) and for telecoms lines (which operated at +16dBm).

Although often overlooked, both the SVI meter and the VU meters that evolved from it have two scales. The primary calibration, with which we’re all no doubt familiar, is marked in decibels relative to 0VU. But there’s a secondary scale beneath that shows the programme modulation level between 0 and 100%. ‘Modulation’ is a term rooted in AM radio broadcasting, and it refers to the strength of the audio signal being broadcast: 0% modulation means that the carrier is present but conveying no audio signal, while 100% modulation means it’s carrying as much audio amplitude as is possible without overload. On an SVI/VU meter, the 100% modulation level aligns with 0VU (this is coincidental; it relates to the meter’s slow ballistics) and 0% is a little below the ‑20VU mark. In use, a steady 1kHz or 440Hz tone at the desired Operating Level (whatever that may be) should read 0VU, while a varying audio programme should stay below the 100% mark most of the time.
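Because the needle’s deflection is proportional to the (rectified) signal voltage, the percentage scale is linear while the VU scale is logarithmic. A short Python sketch of the conversion, assuming only that 100% coincides with 0VU, shows why 0% sits to the left of the ‑20VU mark: ‑20VU corresponds to just 10% deflection. The function names are hypothetical.

```python
import math


def vu_to_percent(vu):
    """Position on the percentage (modulation) scale for a VU reading.

    Deflection is proportional to voltage, so the percentage scale is linear
    in voltage, with 100% at 0VU.
    """
    return 100.0 * 10 ** (vu / 20.0)


def percent_to_vu(percent):
    """Inverse conversion: percentage-scale position back to a VU reading."""
    return 20.0 * math.log10(percent / 100.0)


for mark in (-20, -10, -6, -3, 0, 3):
    print(f"{mark:+d}VU = {vu_to_percent(mark):.0f}%")
```

On this basis, ‑10VU is about 32%, ‑6VU roughly 50%, ‑3VU about 71%, and the +3VU end of the scale about 141%.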

Studio Operating Level

Another important aspect of the VU is its default alignment level. With the original SVI’s variable attenuator set to 0dBm (ie. no attenuation applied), a steady tone of +4dBm at the input (yes, it’s dBm rather than dBu, because this was designed for a 600Ω environment!) gave an indication of 0VU on the meter, and so this is the reference level for the moving‑coil meter itself. The SVI was widely employed in broadcasting and, later, in American music studios, and it was calibrated to the relevant system operating level, whatever that happened to be. By the 1960s and ’70s, though, multitrack recording was becoming popular, and the associated equipment needed lots and lots of audio meters for the mixing consoles and the tape machines. The PPM was far too expensive to meet that need, but a simpler, more affordable version of the SVI meter might do the job, if only that expensive attenuator could be omitted. So that’s what the manufacturers did. Dropping the attenuator meant the meter sensitivity couldn’t (easily) be adjusted, but that was thought not to matter in the context in which these meters would be used: if you wanted to record ‘hot’, you just had to put up with the meter being pinned to the right.

As I’ve explained, the basic meter’s inherent sensitivity gave a 0VU reading for an input level of +4dBm. This became the de facto console operating level simply because it was convenient. As American recording and signal‑processing equipment fitted with VU meters spread around the world, so too did this ‘standard’ +4dBm studio operating level. By the end of the 1970s, the 600Ω matched‑impedance interconnection format had faded away, to be replaced by matched‑voltage interfacing. But the same reference voltage (1.228V RMS) remained, so today there’s an almost universal association between 0VU on the meter and a +4dBu standard operating level.
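The arithmetic behind those numbers is easy to check. In this Python sketch (the function names are my own, purely for illustration), dBm is a power level referenced to 1mW, so the voltage it implies depends on the circuit impedance, whereas dBu is referenced directly to 0.7746V, the voltage that dissipates 1mW in 600Ω. That’s why +4dBm and +4dBu coincide at 1.228V only in a 600Ω system.

```python
import math

# 0dBm is 1mW; in a 600-ohm circuit that takes sqrt(0.001 * 600) = 0.7746V RMS,
# which is where the dBu reference voltage comes from.
DBU_REF_VOLTS = math.sqrt(0.001 * 600.0)


def dbm_to_volts(dbm, impedance_ohms=600.0):
    """RMS voltage across impedance_ohms for a power level in dBm."""
    watts = 0.001 * 10 ** (dbm / 10.0)
    return math.sqrt(watts * impedance_ohms)


def dbu_to_volts(dbu):
    """RMS voltage for a level in dBu (voltage-referenced, impedance-independent)."""
    return DBU_REF_VOLTS * 10 ** (dbu / 20.0)


def volts_to_dbu(volts):
    return 20.0 * math.log10(volts / DBU_REF_VOLTS)


def volts_to_dbv(volts):
    return 20.0 * math.log10(volts / 1.0)  # dBV is referenced to 1V RMS


print(f"+4dBm into 600 ohms: {dbm_to_volts(4):.3f}V")
print(f"+4dBm into 150 ohms: {dbm_to_volts(4, 150):.3f}V")
print(f"+4dBu: {dbu_to_volts(4):.3f}V ({volts_to_dbv(dbu_to_volts(4)):+.2f}dBV)")
print(f"-10dBV: {10 ** (-10 / 20):.3f}V ({volts_to_dbu(10 ** (-10 / 20)):+.2f}dBu)")
```

Running it should show +4dBm giving 1.228V in 600Ω but only about 0.614V in 150Ω, +4dBu equating to about +1.78dBV, and the consumer ‑10dBV level (0.316V) sitting about 7.8dB below 0dBu.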

The Virtually Useless BBC!

In a parallel universe, given a different design of moving‑coil meter, 0VU could just as easily have been specified for a signal level of 1V RMS or ‑10dBV, or any other entirely arbitrary value determined purely by the mechanics of an available moving‑coil meter. And this is a subtle, yet critically important distinction that lies at the heart of the BBC’s misunderstanding.


A lot of engineers still mistakenly believe that 0VU is defined as +4dBu, but this is absolutely not the case! 0VU does equate to +4dBu in most commercial studios and in equipment designed for use in that environment, but only because that was an easy alignment to adopt, given the native sensitivity of the VU meters originally employed in such equipment. 0VU is actually defined as the system Operating Level, whatever that may be for the equipment and system being metered. For example, 0VU is often aligned to ‑2dBu in parts of France, to 0dBu by most European broadcasters, to ‑20dBFS in a SMPTE‑calibrated digital system, and to ‑18dBFS in an EBU‑calibrated digital system. All of these are perfectly legitimate.
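Put another way, a steady‑tone VU reading is just the difference between the signal level and whatever Operating Level 0VU has been aligned to. This hypothetical Python sketch (the dictionary labels are informal, not formal standard names) tabulates the alignments listed above and shows the same ‑18dBFS tone reading 0VU on an EBU‑aligned meter but +2VU on a SMPTE‑aligned one. It assumes a steady tone, so the meter’s ballistics play no part.

```python
# The 0VU alignments mentioned above. Labels are informal; each level is in the
# unit given alongside it (dBu for analogue, dBFS for digital).
ALIGNMENTS = {
    "Typical commercial studio": ("dBu", 4.0),
    "Parts of France": ("dBu", -2.0),
    "Most European broadcasters": ("dBu", 0.0),
    "SMPTE-calibrated digital": ("dBFS", -20.0),
    "EBU-calibrated digital": ("dBFS", -18.0),
}


def vu_reading(tone_level, operating_level):
    """Steady-tone VU reading: 0VU simply means 'at the Operating Level'."""
    return tone_level - operating_level


def digital_headroom_db(operating_level_dbfs):
    """Headroom between a digital Operating Level and 0dBFS."""
    return 0.0 - operating_level_dbfs


for name, (unit, level) in ALIGNMENTS.items():
    extra = f", {digital_headroom_db(level):.0f}dB headroom" if unit == "dBFS" else ""
    print(f"{name}: 0VU = {level:+.0f}{unit}{extra}")

# The same -18dBFS tone reads differently depending on the alignment:
print(vu_reading(-18.0, -18.0))  # 0.0 on an EBU-aligned meter
print(vu_reading(-18.0, -20.0))  # 2.0 on a SMPTE-aligned meter
```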

Fortunately, this wide variety of VU calibration standards is easily accommodated because almost all modern VU meter implementations employ active electronics to drive the VU meter — in effect, the original SVI’s passive matched‑impedance attenuator has been replaced by active adjustable buffer circuitry that makes adjusting the meter’s sensitivity to suit any desired Operating Level very straightforward in most cases.

Now that you’re armed with an understanding of how 0VU is supposed to be aligned to the local Operating Level, consider what would happen if it were mis‑calibrated 4dB too high. That’s exactly what the BBC did, institutionally, when accepting commercial tape recorders and other equipment factory‑calibrated such that 0VU = +4dBu. The BBC’s standard studio Operating Level was 0dBu, and in that situation the standard VU meter constantly under‑reads and, because of the small and compressed range of the meter, the needles barely move at all. So it’s no wonder that confused BBC operators decided that the VU was “virtually useless” compared with the wonderful in‑house BBC PPM (scaled, somewhat mysteriously, from 1 to 7).
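The size of the BBC’s problem is easy to quantify. Assuming the needle’s deflection is proportional to voltage (so 0VU = 100% on the modulation scale), this small Python sketch, with a hypothetical needle_percent() helper, shows that a 0dBu line‑up tone on a meter aligned for +4dBu moves the needle only about 63% of the way to the 0VU mark, and programme running 10dB below the Operating Level reaches only around 20%.

```python
def needle_percent(tone_dbu, zero_vu_dbu):
    """Deflection on the percentage scale (100% = 0VU) for a steady tone.

    Deflection is proportional to voltage, so a VU reading of v gives
    100 * 10^(v / 20) percent.
    """
    vu = tone_dbu - zero_vu_dbu
    return 100.0 * 10 ** (vu / 20.0)


# A line-up tone at the BBC's 0dBu Operating Level:
print(f"Factory alignment (0VU = +4dBu): {needle_percent(0.0, 4.0):.0f}%")  # about 63%
print(f"Correct alignment (0VU = 0dBu): {needle_percent(0.0, 0.0):.0f}%")   # 100%

# Programme 10dB below the Operating Level on the mis-aligned meter:
print(f"{needle_percent(-10.0, 4.0):.0f}%")  # about 20%
```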

The real problem, then, was the BBC’s inept alignment regime rather than the VU meter itself. When aligned correctly to the BBC’s 0dBu Operating Level, the VU meter instantly provides useful, meaningful indications of perceived volume, just as intended. So if you come across anyone who tells you the VU is “virtually useless,” perhaps give them a Paddington Bear‑style ‘hard stare’ and tell them not to be so silly!


VUs In Modern Productions

That’s enough of ancient history and embarrassing misunderstandings: what you really need to know is how useful the VU meter might be in a modern computer‑based studio. Today, we’re spoiled for choice, with many different meter types available that can focus the user’s attention on different aspects of the signal. For example, broadcast PPMs focus mostly on (quasi) peaks. True Peak meters focus on the absolute level of transient peaks when a discrete digital signal is reconstructed as a continuous waveform. The BS.1770 Integrated Loudness meter indicates the perceived loudness over an entire song or programme... and so on. (Speaking of which, it’s no accident that the Momentary option in the BS.1770 Loudness Metering suite has a very similar specification to that of the original SVI.)

A VU meter is far simpler than all of those. It’s a basic form of RMS meter that conveys a rough‑and‑ready impression of the instantaneous volume, measured, effectively, over the last third of a second. Also, it really only works properly if the average signal level is close to 0VU. But while that might sound like a disadvantage, in practice it can be very beneficial, because the numbers on the scale are largely irrelevant. Really, it’s the angle of the needle that conveys the important signal level information, and this is interpreted subconsciously and instantly, so reading it doesn’t demand much mental processing (unlike bar‑graph meters or numerical readouts). Consequently, your attention isn’t diverted from the sound in order to process what your eyes are seeing, and listening is what this business is all about!

If the signal falls too low, the needle drops quickly to the left, and if it’s too hot the needle will soon be ‘pinned’ to the right. In between, it’s only really the change from black to red at 0VU that matters. For clean signals, vertical is generally about right, 30 degrees over to the right (“into the red”) is too hot, and 30 degrees to the left is too cold. Of course, it’s common today to ‘drive’ equipment into distortion for effect, but if you start by getting the signal to the normal operating level and add gain from there, you’ll generally find it easier to hit that distortion sweet spot. And if you tend to use a piece of gear only for such effects, the ‘proper’ approach would be to calibrate its VU meter so that 0VU is aligned with that sweet spot!

Okay, so the VU meter might not be a one‑stop solution for every query concerning signal levels, but it does provide a very good instant impression of the average programme level/volume, and everyone knows instinctively when the meter needle is bouncing around in the right area. I’ve never had to explain to anyone how to interpret a VU meter because it’s inherently so obvious! The meter’s simple scale, and the way its needle settles on a steady signal within about a third of a second, also mean that calibrating the input and output levels within a system, whether a digital, analogue or hybrid one, is unbelievably fast. Source a steady tone and adjust the level to read 0VU, with remarkable precision and zero ambiguity. It’s much easier than with a bar‑graph meter, for example.

Meter Calibration

As you’ll have gathered by now, the many benefits of the VU really only apply if it is calibrated accurately to the system’s Operating Level or desired mixing level, and this means that some form of calibrated input level adjustment facility is an absolute necessity. Thankfully, most VU meter plug‑ins have a way of adjusting the (digital) sensitivity over a range of, say, ‑12 to ‑20 dBFS but, sadly, most hardware VU meters — and especially the cheaper ones — do not. Nevertheless, my preference is for hardware meters where possible, because they don’t take up valuable screen space, and if you wire them to a monitor controller’s record output then one meter can be used for all your audio sources, even when the computer is switched off or doing something else. (Of course, big VU meters can also look very cool in the studio!)

The challenge is to find a hardware VU meter with a calibrated adjustable input attenuator, as well as the correct VU meter ballistics: the needle rise and fall times are critical to the accuracy and usefulness of the display, and just putting a VU scale on any old moving‑coil meter won’t do for mixing applications! Having scoured the audio manufacturers’ product catalogues, I’m currently aware of only two manufacturers whose hardware meters meet both requirements. Crookwood Audio Engineering (https://crookwood.com/vu-meters/2u-stereo-vu-meter) offer three VU meter variations using excellent SIFAM movements, all with a switchable attenuator covering a 15dB range. Alternatively, Japanese manufacturer Hayakumo (https://en.hayakumo.tokyo) offer their Foreno VU meters fitted with NHK‑approved Fuso meter movements, along with a switchable 15dB attenuation range. (There may be other meters out there that meet the requirements, but if so I’ve not yet found them.)

Using VU Meters When Mixing

If tracking through an analogue console, the factory‑set alignment of 0VU = +4dBu is a good option that optimises headroom and signal‑to‑noise performance. If working entirely in‑the‑box in a DAW, we might choose to track with a nominal operating level of ‑20dBFS (the American SMPTE standard) or ‑18dBFS (the European EBU standard), and it’s easy to select the desired calibration in most VU meter plug‑ins, thus keeping signal levels in the right ball‑park. When mixing or mastering, though, it’s often desirable to work with a higher overall mix reference level, and this is really where the adjustable meter calibration becomes critical.

For example, if mixing for a streaming service the desired Loudness might be ‑14LUFS, and you could dial that into a loudness meter and work just with that. However, the constantly varying LUFS numbers aren’t as easy or fast to interpret as a simple VU meter, and I find that simply by adjusting the VU meter’s sensitivity by the appropriate amount, I can tell instantly when I’m mixing in the right ball‑park for that target loudness. It’s also much easier to check if the chorus is sufficiently louder than the verse, if the snare drum is balanced to everything else, and whether the vocal needs to be pushed up or down a smidgen — just from the experience of looking at different material on VU meters.

So, the pertinent question is: what’s the right VU alignment for mixing? Obviously, it depends on your target level, but a simple approach is to run 30 seconds of pink noise through the mixer (whether a physical one or your DAW) with both a VU meter and an Integrated Loudness meter registering the output level. Adjust the level of pink noise through the mixer until the Integrated Loudness meter indicates the desired target level (‑14LUFS, say). With the pink noise now at the target level, adjust the VU meter’s alignment to place the needle close to the ‑2VU mark (ie. vertical).
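The final step of that procedure is simple arithmetic, sketched here in Python. It assumes a VU plug‑in whose alignment is expressed as ‘0VU = x dBFS’ (as with the ‑12 to ‑20 dBFS adjustment range mentioned earlier), and that the pink‑noise generator and loudness meter do the real work; the function name and the example reading of +1.5VU are hypothetical. The offset between a LUFS figure and a VU reading depends on the signal, which is why it’s measured with the noise rather than calculated.

```python
def realigned_reference_dbfs(current_ref_dbfs, noise_reading_vu, target_reading_vu=-2.0):
    """New 0VU reference level (dBFS) so the calibration noise reads target_reading_vu.

    current_ref_dbfs:  where the plug-in currently places 0VU (e.g. -18.0)
    noise_reading_vu:  what the VU meter shows once the pink noise has been set
                       to the target on the Integrated Loudness meter
    """
    # Express the noise level on the meter's own averaged dBFS scale...
    noise_level_dbfs = current_ref_dbfs + noise_reading_vu
    # ...then move 0VU so that this level lands on the target mark.
    return noise_level_dbfs - target_reading_vu


# Hypothetical example: with 0VU at -18dBFS, the -14LUFS pink noise reads +1.5VU.
new_ref = realigned_reference_dbfs(-18.0, 1.5)
print(new_ref)  # -14.5: the noise (at -16.5 on the meter's scale) now reads -2VU
```

Moving 0VU down to a lower dBFS value makes the meter more sensitive, so the same noise now sits 2dB below 0VU, with the needle close to vertical.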

Once calibrated in this way, it should be relatively easy to keep the mix levels very near the desired target level just by glancing at the VU meter. Naturally, this will take a little practice and experience, and you may need to fine‑tune the exact alignment for your particular mixing techniques. But this solution should work well and most people will find it much easier and less stressful than focusing on a loudness meter.

So, despite being more than 80 years old, the VU isn’t ‘virtually useless’ at all: it’s still a very practical, highly appealing and valuable tool for every audio mix engineer.

Quasi‑PPM

The PPM — or rather QPPM! — is a meter design that pre‑dates the SVI, but QPPM meters are still widely used today. They’re arguably more accurate than VUs for gauging signal levels in broadcast, but they were far more expensive in the 1930s and ’40s, and their behaviour and scale don’t bear the same resemblance to human perceptions of loudness.


Despite their name, none of the European peak‑programme meters (PPMs) developed in the 1930s measured what we’d now call ‘true peak’ levels, so they’re more accurately known as ‘quasi‑peak meters’ (QPPMs). During their design it was found that very loud but brief transients were of little technical risk to the analogue transmitters or tape recorders, and that (analogue) transient distortion lasting less than a millisecond or two is inaudible (the generated harmonic overtones are beyond human hearing). Also, registering such brief transients on the meter generally resulted in the broadcast operators setting average levels unacceptably low, reducing transmission ranges and degrading the signal‑to‑noise ratio. So a little under‑reading of transient levels was (and in analogue systems still is) seen as a positive benefit rather than a negative, and none of these PPMs respond to the very fastest transients; most dramatically under‑read peaks shorter than 10ms or so.
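The under‑reading of short transients can be illustrated with a deliberately crude model. This Python sketch treats the meter’s attack as a single first‑order integrator acting on a rectangular tone‑burst envelope and, purely for illustration, sets its time constant so that a 10ms burst reads 2dB below a steady tone. Those parameters are assumptions, not any particular PPM’s specification, and real designs differ in detail.

```python
import math


def qppm_burst_reading_db(burst_ms, integration_ms=10.0, shortfall_db=2.0):
    """Reading (dB relative to a steady tone) for a burst lasting burst_ms.

    Assumption: a first-order attack whose time constant is chosen so that a
    burst of integration_ms reads shortfall_db below the steady-tone reading.
    """
    target_fraction = 10 ** (-shortfall_db / 20.0)  # about 0.794 for 2dB
    tau_ms = integration_ms / -math.log(1.0 - target_fraction)
    reached = 1.0 - math.exp(-burst_ms / tau_ms)  # fraction of full deflection reached
    return 20.0 * math.log10(reached)


for ms in (0.5, 1.0, 5.0, 10.0, 50.0):
    print(f"{ms:5.1f}ms burst reads {qppm_burst_reading_db(ms):6.1f}dB")
```

With these settings, a 1ms transient reads around 17dB low, while anything lasting 50ms or more registers almost fully.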

The same is not true in digital systems, because even the briefest transient overload results in aliasing artefacts that have an unnatural harmonic content and so are easily detected by the listener. This is why True Peak (TP) metering is now mandatory for services adhering to the Loudness Normalisation standards, and true peaks may not be higher than ‑1dBTP, thus guaranteeing no transient overloads whatsoever.
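Inter‑sample peaks are why a sample‑peak reading isn’t enough in digital systems. This Python sketch builds a full‑scale sine at a quarter of the sample rate whose samples all fall 45° away from the waveform’s crests, so the sample peak reads about ‑3dBFS although the reconstructed waveform reaches 0dBTP. The true_peak_estimate() function is a hypothetical stand‑in using DFT interpolation, valid only for a block holding whole cycles; real True Peak meters use an oversampling interpolation filter such as the one described in BS.1770.

```python
import cmath
import math


def to_db(x):
    return 20.0 * math.log10(x)


def sample_peak(block):
    """Largest absolute sample value: what a sample-peak meter sees."""
    return max(abs(v) for v in block)


def true_peak_estimate(block, oversample=32):
    """Peak of the band-limited waveform the samples represent (periodic block only).

    DFT (trigonometric) interpolation is exact for a block holding a whole number
    of cycles of a band-limited signal with no energy at exactly half the sample
    rate. Real True Peak meters use an oversampling filter instead.
    """
    n = len(block)
    spectrum = [
        sum(block[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
        for k in range(n)
    ]
    peak = 0.0
    for step in range(n * oversample):
        t = step / oversample  # position in samples, including points between samples
        value = 0.0
        for k, coeff in enumerate(spectrum):
            freq = k if k < n // 2 else k - n  # upper bins are negative frequencies
            value += (coeff * cmath.exp(2j * math.pi * freq * t / n)).real
        peak = max(peak, abs(value) / n)
    return peak


# A full-scale sine at a quarter of the sample rate, sampled 45 degrees off its crests.
x = [math.sin(2 * math.pi * i / 4 + math.pi / 4) for i in range(16)]
print(f"Sample peak: {to_db(sample_peak(x)):.2f}dBFS")       # about -3.01
print(f"True peak:   {to_db(true_peak_estimate(x)):.2f}dBTP")  # about 0.00
```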

K‑System Metering

Mastering engineer Bob Katz created the K‑System, which is, broadly speaking, a digital equivalent of the VU meter with several refinements, not least catering for three different alignment levels. Photo: Picture Mantaraya36 (Creative Commons 3.0)


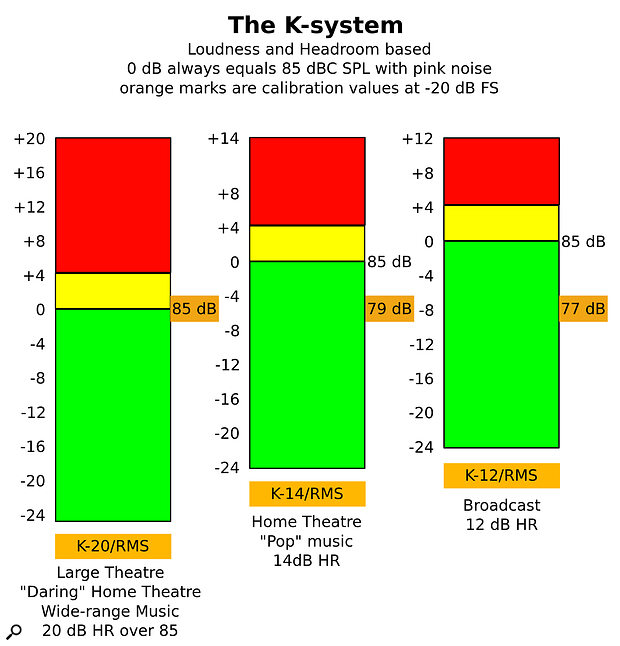

In 1999, mastering engineer Bob Katz conceived the K‑System Meter, in effect a digital replacement for the SVI. I won’t go into all its design goals and benefits here, but two key aspects are directly relevant to this article. First, it establishes a defined operating level in the digital environment. Second, there are three alignment options to cater for alternative desired mix levels. On the downside, it usually employs a bar‑graph rather than an angled pointer (as explained in the main text, I find the latter easier to use). The K‑20 option places its 0 mark at ‑20dBFS, making it equivalent to a standard analogue VU aligned so that 0VU = +4dBu in a SMPTE‑calibrated system, with 20dB of headroom, and is intended for tracking wide‑range music. K‑14 and K‑12 move the mix reference level higher (to ‑14 and ‑12 dBFS respectively), with correspondingly reduced headroom and dynamic range, much the same as mixing for ‑14 or ‑12 LUFS for streaming.
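In other words, each K scale simply offsets the reading so that its 0 mark sits N dB below digital full scale. Here’s a hypothetical Python sketch, assuming the input is already the meter’s averaged level in dBFS; it ignores the details of the K‑System’s RMS calibration and ballistics.

```python
K_SCALES = (12, 14, 20)  # K-12, K-14 and K-20


def k_meter_reading(level_dbfs, k=20):
    """Reading on a K-N scale, whose 0 mark sits at -N dBFS (leaving N dB of headroom).

    Simplification: level_dbfs is taken to be the meter's averaged level; the
    details of the K-System's RMS calibration and ballistics are ignored here.
    """
    if k not in K_SCALES:
        raise ValueError(f"K-{k} is not one of K-12, K-14 or K-20")
    return level_dbfs + k


for k in K_SCALES:
    print(f"K-{k}: {k_meter_reading(-14.0, k):+.0f}, headroom {k}dB")
```

A mix averaging ‑14dBFS therefore reads 0 on K‑14, ‑2 on K‑12 and +6 on K‑20.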

Metering & Headroom

In the days of analogue audio systems, all of the available metering (VU, PPM, Nordic, DIN etc.) inherently only provided a view through a small window of the audio system’s total dynamic range. This was intentional, and encouraged users to optimise signal levels around the operating level highlighted in the meter, which essentially hid the available safety headroom margin. When Sony introduced their first professional digital audio recording systems, the PCM‑1600 and 1610 CD mastering processors, their intended operating level was indicated by a ‘0’ on the bar‑graph meters, with 20dB of headroom above, exactly in line with analogue practices. But, unlike analogue meters, the full headroom margin was actually shown because the use of pre‑emphasis made it depressingly easy to accidentally overload the system!

The Sony PCM‑1630 was the first digital device to align the meter’s ‘zero point’ with digital full scale, and since then, digital meters have tended not to have headroom built in. Photo: Akakage1962 (Creative Commons 3.0)


Oddly, when the improved PCM‑1630 model was launched, Sony re‑scaled the digital bar‑graph meter to have ‘0’ at the top (the clipping level). The intended operating level was then indicated with a user‑adjustable LED marker, which could be set anywhere between ‑10 and ‑20 dBFS. Virtually every digital device since has used that same metering paradigm, showing the entire headroom margin with a vast metered dynamic range, yet without any explicit operating level reference point! So it’s no wonder that users tended to peak close to 0dBFS, with all the associated hassles: the meter scaling has inherently encouraged them to do so.

On more modern digital devices with sample peak meters that support personalised meter colours, I always configure my meters to show green up to ‑20 or ‑18 dBFS, yellow from there to ‑10dBFS, and red above ‑10dBFS, and it’s remarkable how such a simple scheme automatically encourages correctly optimised recording levels with decent headroom margins.
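That colour scheme amounts to a simple threshold function, sketched here in Python. It isn’t any particular DAW’s configuration syntax; the function and parameter names are hypothetical, and the top of the green zone is a parameter so it can be set to ‑20 or ‑18 dBFS to match the Operating Level in use.

```python
def meter_colour(peak_dbfs, green_top_dbfs=-18.0, yellow_top_dbfs=-10.0):
    """Colour zone for a sample-peak reading.

    Green up to green_top_dbfs (-20 or -18dBFS to suit the Operating Level),
    yellow from there up to yellow_top_dbfs, and red above that.
    """
    if peak_dbfs <= green_top_dbfs:
        return "green"
    if peak_dbfs <= yellow_top_dbfs:
        return "yellow"
    return "red"


for level in (-30.0, -18.0, -14.0, -10.0, -6.0):
    print(level, meter_colour(level))
```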

Finally, it’s interesting to note that with the passage into 32‑bit floating‑point recording, the available digital meters (which still stop at 0dBFS) no longer show the considerable (safety) headroom margin hidden above. Just like ye olde analogue meters! What goes around, comes around...
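A quick Python sketch shows the difference. It pushes a sample to about +12dBFS, stores it as a 32‑bit float and as 24‑bit integer PCM, then applies about 12dB of gain reduction. The float value survives intact, whereas the integer version has already been clipped at full scale. The helper names and the simple 24‑bit quantiser are illustrative assumptions.

```python
import struct

FULL_SCALE_24 = 8388607  # largest positive 24-bit integer sample


def through_float32(x):
    """Round-trip a sample through IEEE 754 single precision (as in a 32-bit float file)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def through_int24(x):
    """Quantise to 24-bit integer PCM, clipping anything beyond full scale."""
    code = round(x * FULL_SCALE_24)
    code = max(-FULL_SCALE_24 - 1, min(FULL_SCALE_24, code))
    return code / FULL_SCALE_24


hot_sample = 4.0  # about +12dBFS, well above digital full scale
trim = 0.25       # about -12dB of gain applied afterwards

print(through_float32(hot_sample) * trim)  # 1.0: back at full scale, undamaged
print(through_int24(hot_sample) * trim)    # 0.25: the overload was already clipped
```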