Standard test signals can be incredibly useful when configuring and aligning audio equipment — and SOS's Technical Editor Hugh Robjohns has created a set of properly calibrated test files just for you! Read on to find out how to use them and where to find them.

Audio test signals can be produced by proper audio test equipment, generated by audio editing software or DAW platforms, or found as pre‑made audio files online, from a wide range of sources. In this article I’m going to describe a selection of bespoke, specially created test signals and explain how to use them to align audio systems or check that things are working correctly.

First, though, a brief warning: if misused, test signals can potentially be dangerous to your ears, your loudspeakers, and even to equipment being tested (particularly sensitive equipment like mic preamps). So, always be cautious and careful when using these test signals, particularly in respect of monitor speaker or headphone volumes, and especially when using the full‑level and high‑frequency test signals. Use of these test signals is entirely at your own risk: neither Sound On Sound Ltd nor the author can accept any responsibility whatsoever for any harm to persons or equipment!

The majority of test signals described here are 24‑bit WAV files created using Adobe Audition, and they are available to download as three ZIP files from the online resource page at https://sosm.ag/sos-audio-test-files. There are three sub‑folders, each containing identical audio signals at one of three different sample rates (44.1, 48 and 96 kHz) to suit common DAW project configurations. A few test signal files are not available in all three formats, simply because some are not relevant or appropriate at certain sample rates.

This table lists the included audio test files, their purposes, levels, frequencies and formats. Download the ZIP files to get your hands on them!


Without further ado, let me take you through what these files are and what you can use them for.

Operating Level Sine Tones

Probably the most basic test signal, but also an incredibly useful one, is a 1kHz sine wave tone at the nominal ‘operating level’. A sine wave is a pure signal that contains only a single fundamental frequency, and a system’s operating level is the default recording level (0VU or +4dBu in analogue terms), which is well above the system noise floor yet still leaves adequate headroom to cope with transient peaks.

In the digital audio world, there are two standard operating levels. The most common in professional and project studios is ‑20dBFS (SMPTE RP155 standard), while European broadcasters use ‑18dBFS (EBU R68). The latter has been included here, as it often works better with semi‑pro analogue equipment than the SMPTE standard.

Sometimes, though, it’s more appropriate to work with an alternative (and usually higher) operating level, for example if mastering within a peak‑normalised environment such as for a CD. As it happens, the EBU also specify a CD Exchange tone level (EBU RP89) for broadcasters working with commercial CDs. This sets a reference level at ‑12dBFS, so I’ve included a test signal at that level in the folders for convenience. For any other personalised local reference level requirements, the amplitude of one of these standard test tones can be altered simply by introducing a known level change using a fader, plug‑in or native gain process.
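To make the level arithmetic concrete, here is a minimal Python sketch (not how the supplied files were made, which was in Adobe Audition) that converts a dBFS figure to linear amplitude, builds a short sine tone at that level, and works out the gain change needed to move from one reference level to another. The function names are my own and purely illustrative.

```python
import math

def dbfs_to_amplitude(level_dbfs):
    """Convert a peak level in dBFS to linear amplitude (1.0 = full scale)."""
    return 10 ** (level_dbfs / 20)

def sine_tone(freq_hz, level_dbfs, sample_rate, seconds):
    """Return float samples of a sine tone whose peak sits at level_dbfs."""
    amp = dbfs_to_amplitude(level_dbfs)
    count = int(sample_rate * seconds)
    return [amp * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(count)]

def gain_change_db(source_dbfs, target_dbfs):
    """Gain (in dB) to apply to a tone at source_dbfs to reach target_dbfs."""
    return target_dbfs - source_dbfs

# A 1kHz tone at -20dBFS peaks at one tenth of full scale.
tone = sine_tone(1000, -20.0, 48000, 0.01)
print(round(max(tone), 4))

# Turning the -18dBFS tone into a -12dBFS reference needs 6dB of gain.
print(gain_change_db(-18.0, -12.0))
```

In practice the same gain change would simply be dialled in on a fader or gain plug‑in; the sketch only shows where the numbers come from.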

System calibration using any form of audio metering is always performed using a sine‑wave test tone. Audio programme material (music or speech) and pink noise are simply not appropriate for this application because different metering systems, and even different meter designs of the same nominal type, inherently have substantially different dynamic responses and may be measuring different things (eg. quasi‑peak or RMS). Consequently, different meter types often register quite different levels, even on the same source material. In contrast, a sine‑wave tone provides a known, stable, consistent signal level that will always read accurately on all meter types.

When connecting multiple devices, it is important that the equipment input and output levels are adjusted to maintain a consistent headroom margin and low noise floor through all stages of the signal path. A standard operating level test tone allows this very easily, and the process starts with playing a sine tone at the appropriate operating level and sample rate from your DAW. In most cases, a ‑20dBFS tone is the easiest to work with, since virtually all digital meter systems have a suitable mark corresponding to ‑20dBFS.

Both mono and stereo operating level test tones are provided in the folder, and for general signal path checking the stereo file is often the easiest one to use. That’s because a mono channel’s pan‑pot attenuates the signal level reaching each side of the stereo output, with the amount of attenuation being defined by the DAW’s current ‘pan law’. In most cases the default pan law is ‑6dB (so that if summed back to mono the level would read the same as the original mono source file), but alternative pan‑law values including ‑4.5dB, ‑3dB, or even 0dB may be employed or available. There are good reasons why these different options exist, but one effect is that a ‑20dBFS reference tone played from a mono channel is likely to read lower on the left and right masters (eg. ‑26dBFS), and this can be confusing when you’re trying to calibrate your gear!

Obviously, it’s possible to compensate for the pan‑law attenuation by raising the source channel fader by 6dB (or whatever the pan‑law attenuation is), thus ensuring the stereo output meters read the required ‑20dBFS. The aim is simply to make sure the DAW outputs are at the appropriate operating level so that you can then check the signal level through external analogue equipment connected to the DAW, or even through internal plug‑ins. However, as I said previously, using a stereo file avoids the need for compensation and is thus considerably easier and quicker to use.
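The pan‑law arithmetic above can be sketched in a few lines of Python. This is only an illustration of the sums for a centre‑panned mono channel; the function names are hypothetical, and a real DAW's pan law may behave differently away from the centre position.

```python
def centre_panned_level(source_dbfs, pan_law_db):
    """Level on each side of the stereo bus for a centre-panned mono source."""
    return source_dbfs - abs(pan_law_db)

def fader_compensation_db(pan_law_db):
    """Fader boost needed to restore the original level on the stereo meters."""
    return abs(pan_law_db)

# A -20dBFS mono tone under the pan laws mentioned in the text.
for law in (-6.0, -4.5, -3.0, 0.0):
    reading = centre_panned_level(-20.0, law)
    boost = fader_compensation_db(law)
    print(f"pan law {law}dB: meters read {reading}dBFS, raise fader by {boost}dB")
```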

In the analogue world, the standard operating level is normally indicated by the zero mark on a VU meter. The SMPTE (RP155) standard equates a ‑20dBFS digital tone with an analogue signal voltage of +4dBu, but in most cases the actual analogue signal voltage is irrelevant – the important thing is that the outboard equipment registers the tone at 0VU on its own meters, and that is achieved by adjusting the device’s input level controls as necessary. Similarly, device output level controls (or interface input levels) can be adjusted so that the return signal into the DAW also registers ‑20dBFS on its input meters.

It’s worth noting that the SMPTE alignment means a full digital level (0dBFS) equates to a whopping +24dBu in the analogue world. That’s almost 35V peak to peak, and while professional equipment is designed to handle that kind of signal level much semi‑pro equipment doesn’t have sufficient headroom and might clip at much lower levels. For that reason it may be more appropriate to employ the EBU digital operating level of ‑18dBFS. This is associated with a lower analogue operating level of 0dBu (instead of +4dBu) and, as a result, the EBU alignment sets a peak level which is 6dB lower than the SMPTE requirement (0dBFS = +18dBu). Most semi‑pro equipment can accommodate that and will sound rather better. For added real‑world context, Mackie’s analogue consoles are aligned such that the meter zero equals 0dBu, so they’re easy to align to the EBU format. Similarly, RME’s interfaces all support the EBU alignment, but only the very latest high‑end models can meet the SMPTE spec.
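For anyone who wants to check those voltages, the following Python sketch converts dBu to volts and maps a digital level to its analogue equivalent under a given alignment. It assumes the usual definition of 0dBu as roughly 0.775V RMS and a sine‑wave signal; the helper names are illustrative only.

```python
import math

# 0dBu is the voltage that dissipates 1mW in 600 ohms: about 0.775V RMS.
DBU_REF_VOLTS = math.sqrt(0.001 * 600)

def dbu_to_vrms(level_dbu):
    """Convert a level in dBu to volts RMS."""
    return DBU_REF_VOLTS * 10 ** (level_dbu / 20)

def sine_peak_to_peak(vrms):
    """Peak-to-peak voltage of a sine wave with the given RMS voltage."""
    return 2 * math.sqrt(2) * vrms

def dbfs_to_dbu(level_dbfs, align_dbfs, align_dbu):
    """Analogue level for a digital level, given one alignment point."""
    return align_dbu + (level_dbfs - align_dbfs)

smpte_max = dbfs_to_dbu(0, -20, 4)   # SMPTE: -20dBFS = +4dBu
ebu_max = dbfs_to_dbu(0, -18, 0)     # EBU:   -18dBFS = 0dBu

print(smpte_max, round(sine_peak_to_peak(dbu_to_vrms(smpte_max)), 1))
print(ebu_max, round(sine_peak_to_peak(dbu_to_vrms(ebu_max)), 1))
```

The first line of output confirms the "almost 35V peak to peak" figure for +24dBu, and the second shows how much gentler the EBU alignment is on the analogue electronics.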

Although a 1kHz tone is the international standard for level testing, other tone frequencies can be useful. For example, a 440Hz tone is handy for reference tuning, and a 3kHz tone is a better choice for detecting the clicks and glitches that occur if a digital system’s clocking isn’t configured correctly. I have included tones at both of these frequencies within the test file folders (both at ‑20dBFS).


Sample & True Peak Meter Test

Having mentioned metering systems already, there’s an important distinction to note when it comes to digital meters. Most DAWs and digital hardware are equipped with ‘sample peak’ meters, which work by analysing a block of audio samples, registering the highest sample amplitude value occurring within that block and indicating that level on the meter display. Although a very simple technology, it provides a perfectly adequate indication of signal levels, and in the case of sine‑wave test tones it’s totally accurate too. But when it comes to time‑varying signals like music or noise, the sample peak amplitude doesn’t accurately correspond to the true amplitude of the original or reconstructed sound wave — this is due to something we call inter‑sample peaks. Real‑world testing of music recordings has found reconstructed sample peaks reaching as much as 9dB or so above the highest registered sample peak, and +3dB inter‑sample peaks are remarkably common. While this potential metering inaccuracy is of no real concern if recording and mixing with a sensible headroom margin (which I’d recommend you do, for this reason and others), it is a serious problem in a mastering environment, especially if trying to get the signal level as close to 0dBFS as possible (as per the dreaded Loudness Wars!). The issue is that a sample peak meter might indicate the signal at or just below 0dBFS, which will be perfectly fine within the digital environment, yet the reconstructed analogue waveform might peak well over 0dBFS and a D‑A converter obviously can’t recreate that — this results in peak transient distortion on playback devices, and it happens a lot!

In such level‑critical applications a ‘true peak’ meter, such as the type included within ITU‑R BS.1770 (EBU R128) loudness metering applications, should always be used. A true peak meter works by oversampling the digital signal to calculate many intermediate samples that follow the waveform amplitude in between the original samples more accurately, allowing the true amplitude of the reconstructed waveform to be estimated much more precisely. Most true peak meters use 4x oversampling, and this reduces the maximum potential inter‑sample peak error to less than 1dB. This is why the loudness normalisation standards insist that the maximum true peak value must never exceed ‑1dBTP; it maintains a worst‑case safety headroom margin to account for potential meter under‑reads. It’s impossible to identify whether a digital meter is a sample peak type or a true peak type with only a simple sine‑wave test tone, although the giveaway clue is often that a true peak meter has calibration marks above 0dBFS.

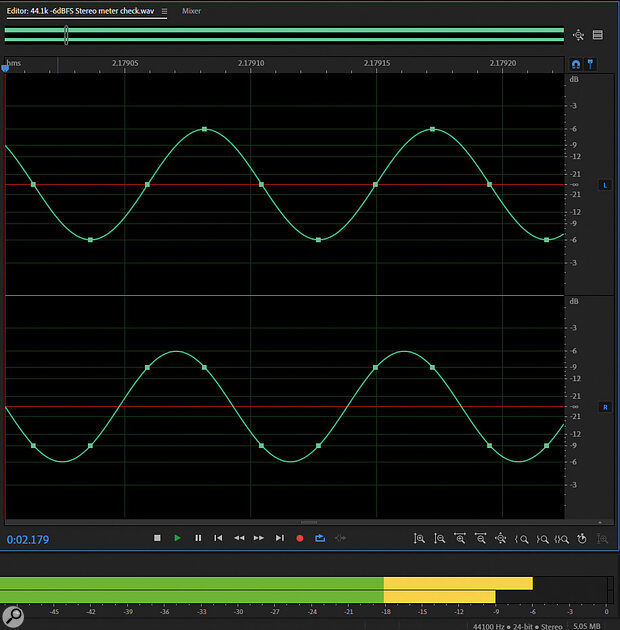

Included in the SOS Audio Test Files is a specially created stereo meter test file which instantly identifies the type of meter. This stereo meter test file employs a high‑pitched tone on both channels, and in both the frequency is exactly a quarter of the sample rate. This relationship ensures that there are precisely four samples per wavelength. For the 44.1kHz file the tone is at 11.025kHz, while the 48kHz version is 12kHz. Given the critical relationship between tone and sample rate, the metering test files are only available at these two sample rates. At the 96kHz sample rate, the tone would be 24kHz and that could damage speaker tweeters without anyone hearing it — so I’ve not created it! On the left channel of the stereo meter test file the audio tone is locked to the phase of the word clock, to ensure that sample instances fall exactly at the zero crossing, positive peak, zero crossing (again) and negative peak of the reconstructed waveform (see Figure 1 — sample instances are indicated by square green blocks on the waveform). The right channel carries the same tone, but phase‑shifted by 45 degrees relative to the sample clock. This results in two samples 3dB down from the positive peak, and two samples 3dB up from the negative peak.

Figure 1: A tone frequency at a quarter of the sample rate, resulting in four sample instances per wavelength (green blocks). The left‑channel tone (top) is synchronous with the sample rate, resulting in samples at the zero crossings and the waveform’s positive and negative peaks. The right‑channel tone is phase‑shifted by 45 degrees, resulting in samples 3dB off the waveform peaks. When displayed on a sample peak meter, the right channel registers 3dB lower than the left (as shown here), even though both signals really have exactly the same peak amplitude when reconstructed.


Clearly, the reconstructed waveform amplitude is exactly the same on both channels, and a true peak meter (and all analogue meters) will therefore register the same signal level on both channels. However, a sample peak meter will indicate the right channel 3dB lower in level than the left. So, if the left and right meters register the same level you’re looking at a true peak meter or an analogue meter, and if the right channel is lower than the left you are viewing a sample peak meter. If the latter, beware of peaking too close to 0dBFS because the actual (reconstructed) signal level will definitely be higher than it looks!
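The 3dB difference is easy to verify numerically. The Python sketch below models what a sample peak meter would see for a tone at exactly a quarter of the sample rate, first locked to the sample clock and then offset by 45 degrees. It is a simplified model of the idea rather than a recreation of the actual test file, and the function name is my own.

```python
import math

def sample_peak_db(phase_deg, n_samples=64):
    """Sample-peak reading, in dB relative to the tone's true peak, for a
    tone at one quarter of the sample rate offset by phase_deg degrees."""
    phase = math.radians(phase_deg)
    # At fs/4 each successive sample advances the tone by 90 degrees.
    peak = max(abs(math.sin(math.pi / 2 * n + phase))
               for n in range(n_samples))
    return 20 * math.log10(peak)

left = sample_peak_db(0)     # samples fall on zero crossings and peaks
right = sample_peak_db(45)   # samples fall 45 degrees either side of each peak

print(f"left reads {left:.2f}dB, right reads {right:.2f}dB")
```

Both tones have identical reconstructed amplitude, yet the second never has a sample at its crest, which is exactly why a sample peak meter under‑reads it.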

In addition to stereo meter test files at the two sample rate options, I’ve provided two different signal levels. The ‑20dBFS version should be speaker‑safe but may be difficult to read on some compact meters, while the ‑6dBFS version will be much easier to read on most meters but could damage speakers and/or ears if replayed at high level. I strongly recommend muting speakers for these tests — no one ever needs 12kHz tones in their ears!

Phase Meter Test

I’ve also included a test for phase meters and audio vectorscopes (Goniometers). This stereo test signal comprises a constant stereo 1kHz tone at the standard ‑20dBFS, but the phase of the right channel is shifted continuously over 180 degrees relative to the left channel. As a result, the two channels drift in and out of phase with each other over 10 seconds, and this can be seen clearly on a phase meter or audio vectorscope.

The pointer or marker on a simple phase meter will move smoothly from +1 through zero to ‑1 and then back again, while a vectorscope display will start as a thin vertical line, open out into a full circle, collapse into a horizontal line, and then return again to the vertical line. For old‑school analogue equipment, traditional left‑right meters will show a constant level throughout the cycle, while any sum (Mid) and difference (Sides) meters will rise and fall in opposition to each other: as the sum (M) meter falls the difference meter (S) rises, and vice versa.
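For two equal‑level sine tones, the behaviour described above follows from simple trigonometry, as this Python sketch shows. It assumes an idealised correlation meter and M/S signals derived as (L+R)/2 and (L‑R)/2; real meters have their own ballistics and scaling, and the function names are illustrative.

```python
import math

def phase_meter_reading(phase_deg):
    """Correlation of two equal-level sine tones with the given phase offset."""
    return math.cos(math.radians(phase_deg))

def mid_side_amplitudes(phase_deg):
    """Amplitudes of M = (L+R)/2 and S = (L-R)/2, relative to one channel."""
    half = math.radians(phase_deg) / 2
    return abs(math.cos(half)), abs(math.sin(half))

for phase in (0, 45, 90, 135, 180):
    mid, side = mid_side_amplitudes(phase)
    print(f"{phase:3d} deg: correlation {phase_meter_reading(phase):+.2f}, "
          f"M {mid:.2f}, S {side:.2f}")
```

As the phase offset grows, the correlation falls from +1 towards ‑1 while the M amplitude shrinks and the S amplitude grows, matching the opposing movement of the sum and difference meters.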

Since this test uses a sine‑wave tone, the relative phases of the two channels are notoriously difficult to detect by ear, so don’t be surprised if when listening on speakers you can’t tell when the two channels are in and out of phase. For an aural phase check, I’ve recorded a spoken word channel and phase ident file, which is much easier to judge by ear. This starts with a dual‑mono, phantom‑centre signal, then identifies each channel in turn and ends with the two channels in opposite polarity, with the monologue describing each stage of the test. The opposite‑polarity section should sound very unpleasant and unnatural, almost like your ears are being sucked out, with the voice not having a clear location. The better the listening room acoustics (particularly in terms of early reflections around the listening area) the more dramatic and better defined this test will be.

Channel Ident Tones

Identifying the left and right channels in a stereo system brings me neatly on to ‘stereo ident’ tones. These are used to confirm that a stereo source is being recorded or received with the channels in the right orientation. Broadcasters and professional studios commonly employ, for this purpose, a dedicated stereo ident tone that combines the functions of both channel identification and operating level line‑up.

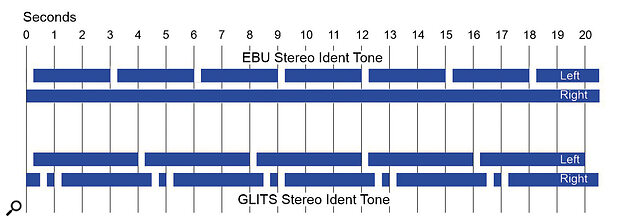

The EBU defined a stereo ident tone (EBU R 49‑1999) that uses a standard 1kHz sine wave on both channels, to allow relative phase to be checked, at the standard European broadcast operating level of ‑18dBFS. To identify the correct channel order, the left channel has a quarter‑second (250ms) interruption every three seconds (see Figure 2). A mono sum of this stereo tone should create a tone at the correct ‑18dBFS level, but with brief level dips of ‑6dB every three seconds. If one channel of the sum arrives polarity‑inverted, the mono sum would instead produce a 250ms pip at ‑24dBFS every three seconds!
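The mono‑sum levels quoted above can be checked with a short Python sketch. It assumes the mono sum is formed as (L+R)/2, which is what the figures in the text imply, and uses None to stand for a silent channel; the function names are hypothetical.

```python
import math

def to_linear(level_dbfs):
    """Convert dBFS to linear amplitude; None stands for silence."""
    return 0.0 if level_dbfs is None else 10 ** (level_dbfs / 20)

def mono_sum_dbfs(left_dbfs, right_dbfs, right_inverted=False):
    """Level of (L+R)/2 for two phase-coherent tones; None means silence."""
    sign = -1.0 if right_inverted else 1.0
    total = abs(to_linear(left_dbfs) + sign * to_linear(right_dbfs)) / 2
    return None if total < 1e-12 else 20 * math.log10(total)

print(mono_sum_dbfs(-18, -18))                         # both channels present
print(mono_sum_dbfs(None, -18))                        # left interrupted
print(mono_sum_dbfs(-18, -18, right_inverted=True))    # inverted: cancels
print(mono_sum_dbfs(None, -18, right_inverted=True))   # inverted, left interrupted
```

With correct polarity the sum sits at about ‑18dBFS and dips by roughly 6dB during the interruption; with one channel inverted the sum is silent except for the brief pip at about ‑24dBFS.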

Figure 2: Timelines showing the interrupted tone arrangements for EBU tone (top) and GLITS tone (bottom). In both cases the interruptions last 250ms (with 250ms pauses in the GLITS version), and there’s a repetition rate of 3 seconds for the EBU format and 4 seconds for GLITS.


Although the EBU stereo tone is widely employed, there’s an inherent ambiguity, since if the left channel were lost the right channel would sound exactly the same as a standard mono test tone, with no indication that it belonged to a stereo pair. In the late 1980s Graham Haines, a BBC TV Senior Sound Supervisor, developed an unequivocal version to avoid this potential confusion, and it became known as GLITS (Graham’s Leg Identification Tone System, ‘leg’ being the BBC term for an audio channel). GLITS uses a standard 1kHz tone at ‑18dBFS (although different tone frequencies have been used in different applications) and retains the EBU’s single 250ms interruption to the left channel. This is complemented by two subsequent interruptions at quarter‑second intervals in the right channel. The entire sequence is repeated every four seconds to further differentiate it from the EBU stereo ident tone. The benefits are that the left and right channels are identified explicitly, and if only one channel arrives at the destination it’s obvious that it belongs to a stereo pair. A mono sum has three ‑6dB level dips at quarter‑second intervals.

Identifying channels correctly in a multi‑channel surround sound system is even more critical, of course, and the film and TV industries have since developed various schemes for that purpose. One of the most comprehensive and widely employed ident signals used across the European TV industry builds on the ideas of GLITS, and is known as BLITS. See the ‘BLITS’ box for more on that.

Sweep Tones

It’s often useful to have a test signal known as a ‘sweep tone’, that changes in level or frequency in a specific way. I’ve included such a signal that starts at ‑30dBFS (10dB below standard operating level) and rises progressively all the way up to 0dBFS. Digital equipment will handle this test signal without any difficulty. The louder stages, though, may prove challenging for some analogue equipment and loudspeakers, so this sweep tone is useful for identifying if any analogue signal paths are struggling to cope with high peak levels.

As I mentioned earlier, most professional analogue equipment will clip around +24dBu, but traditionally the Permitted Maximum Level (PML) of music and speech, as measured on analogue meters, is normally only 8 or 9 dB above the operating level. Obviously, ultra‑fast peak transients won’t register on analogue meters but they are kept safe within the remaining 12dB of headroom (that’s why it’s there!).

Thanks to the so‑called Loudness Wars of the last 30‑40 years, standard practice has been to master digital audio to peak near 0dBFS, so if a digital system’s converters are aligned for ‑20dBFS = +4dBu = 0VU then commercial music peak replay levels will sit consistently at or near +24dBu. Again, professional audio equipment should be able to handle that without complaint, but a lot of budget equipment is likely to struggle at such elevated levels, and this can result in the sound becoming ‘hard’ or ‘strained’ — or even obviously distorted.

Running the level sweep test tone enables you to listen to (and if necessary measure) the sound of analogue equipment as the level gets progressively louder. This tone should sound pure and sweet at all levels. Any harmonic or anharmonic distortions, or any sense of hardness or compression, indicate an analogue signal path that can’t cope well with such high analogue signal levels. In such cases, the DAW interface output level should be reduced if possible, so that 0dBFS equates to a lower analogue level of, say, +18dBu (the EBU standard alignment). This will put much less strain on the analogue electronics.

Another kind of sweep tone changes frequency over time and is useful for checking system bandwidth, or as a quick (albeit crude) test of frequency response. The flatness or otherwise of the frequency response can be roughly judged simply by looking at the meter indications during the sweep or by looking at the squareness of the waveform graphic in a DAW. The frequency sweep test file spans the full 10‑octave audio range from 20Hz to 20kHz (and up to 40kHz in the 96kHz file). When played, meters should remain at ‑20dBFS solidly throughout the test, and if recorded into a DAW the waveform block should be rectangular without sloping or any bumps/dips. Any deviations indicate a non‑flat frequency response.

In any non‑linear audio system — a system that involves some element of distortion — two closely spaced high‑frequency tones will intermodulate, typically resulting in sum and difference artefacts. To test for intermodulation problems, I’ve created a test file comprising sine‑wave tones at a level of ‑12dBFS and with frequencies of 11 and 12 kHz. A high‑quality audio system should pass these as a clean, high‑pitched whistle, but any non‑linearity in the system will cause these two tones to intermodulate, resulting in distortion products, appearing primarily at 1kHz and its harmonics. So, if you hear a lower‑pitched tone when playing this file something is awry! Please beware, once again, that high‑level high‑frequency tones aren’t much liked by tweeters, so do take care when auditioning this test signal.
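As a rough guide to where intermodulation products land, this Python sketch lists the second‑ and third‑order sum and difference frequencies for a pair of tones. These are the standard textbook product frequencies rather than anything measured from the test file, and which of them actually appear (and how strongly) depends on the nature of the non‑linearity.

```python
def intermod_products(f1_hz, f2_hz):
    """Second- and third-order intermodulation frequencies for two tones."""
    return {
        "f2 - f1": f2_hz - f1_hz,          # second-order difference
        "f1 + f2": f1_hz + f2_hz,          # second-order sum
        "2*f1 - f2": 2 * f1_hz - f2_hz,    # third-order, just below the pair
        "2*f2 - f1": 2 * f2_hz - f1_hz,    # third-order, just above the pair
    }

products = intermod_products(11_000, 12_000)
for name, freq in products.items():
    print(f"{name}: {freq}Hz")
```

The 1kHz difference product is the one most easily heard, because it falls well below the two test tones in a region where the ear is sensitive and nothing else is playing.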

Acoustic Calibration

We’ve explored various ways of aligning and checking audio equipment with electrical signals. However, another common calibration requirement is when setting up loudspeaker monitoring for stereo or surround‑sound setups, and when aligning subwoofers. For this, we need test signals that can be assessed acoustically.

A standard test for this purpose is to have a pink‑noise signal reproduced by each speaker in turn. Pink noise is a very natural‑sounding form of noise, with equal energy in each octave band. However, as noise is a constantly varying signal, standard level meters generally do a rubbish job of indicating the true RMS level, which is what we need to know for calibration purposes. For that reason, I’ve created and carefully tested these files using precision RMS metering to guarantee the intended level of ‑20dBFS — so do not be concerned if your DAW’s meters show something other than ‑20dBFS!

To establish a reference acoustic monitoring level (a process I described in detail in an article in SOS May 2014: https://sosm.ag/monitor-reference-levels), it’s critically important to first align the DAW replay channel (with the speakers dimmed or muted) using the ‑20dBFS operating level tone I described at the start of this article. That ensures a unity‑gain path from DAW replay channel to the stereo outputs. The noise file can then be substituted for the tone file without making any other changes to the gain structure of the DAW, so it will inherently replay at the correct RMS level. Do not be tempted to tweak the levels based on your DAW’s meters!

Several versions of pink‑noise file are provided. The first contains a full audio bandwidth signal from 20Hz to 20kHz. However, it’s often the case when performing acoustic alignments that low‑frequency standing waves or modes in the room affect measurements at low frequencies, while reflections from studio furniture and hardware strongly affect the measurement at high frequencies. To help reduce measurement errors and give more consistent results when aligning loudspeakers, a popular alternative test signal is band‑limited pink‑noise — that is pink noise that has been filtered to remove content below 500Hz and above 2kHz. This minimises any room‑mode anomalies and local reflections.

Obviously, subwoofers don’t generate signals above 200Hz or so, and I’ve therefore created a dedicated pink‑noise signal band‑pass filtered between 40 and 80 Hz for aligning subwoofers. All of these pink‑noise signals generate an RMS level of ‑20dBFS, which is suitable for calibrating an acoustic reference listening level.

Although it’s always best to align speakers individually and then fine‑tune individual speaker sensitivities to centre phantom images, a stereo version of the full‑bandwidth file is also included. This has a completely non‑correlated noise source in each channel. The RMS noise level on each channel is ‑23dBFS, and with both channels running they should sum acoustically to give approximately the same SPL meter reading as a single speaker replaying the ‑20dBFS mono file (the local acoustic environment will affect the accuracy of that summing).
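The reason two ‑23dBFS channels add up to about ‑20dBFS is that uncorrelated signals sum on a power basis, gaining roughly 3dB, whereas identical (correlated) signals sum on an amplitude basis and gain about 6dB. A minimal Python sketch of both cases, with illustrative function names:

```python
import math

def sum_uncorrelated_db(*levels_db):
    """Combined level of uncorrelated sources (powers add)."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

def sum_correlated_db(*levels_db):
    """Combined level of identical, in-phase sources (amplitudes add)."""
    return 20 * math.log10(sum(10 ** (level / 20) for level in levels_db))

print(round(sum_uncorrelated_db(-23.0, -23.0), 2))  # two independent noise channels
print(round(sum_correlated_db(-23.0, -23.0), 2))    # the same signal on both channels
```

This is the idealised electrical sum; as noted above, the room will affect how closely the acoustic result matches it.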

When installing a subwoofer the first step is to set the subwoofer’s polarity or phase alignment to match that of the satellites at the listening position. To that end, a steady 85Hz tone at ‑20dBFS is provided which should be played over both the subwoofer and satellites simultaneously. The polarity or phase control on the subwoofer is adjusted to make the tone sound as loud as possible at the listening position. If the tones generated by the sats and sub arrive at the listening position in phase their signals will add constructively, gaining in amplitude. If they arrive in different phases they will partially cancel, reducing the volume.

Once phase/time‑aligned, the subwoofer volume needs to be calibrated. This is performed using the 40‑80 Hz band‑limited pink‑noise signal. An additional alignment available with some subwoofers is the nature of the crossover to the satellite speakers — how well the subwoofer output blends with the satellite speakers. Crossover misalignments result in a dip or peak in level at frequencies either side of the nominal crossover point, and this can be revealed using a low‑frequency sweep tone. I’ve provided one spanning 20‑200 Hz at ‑20dBFS. Most subwoofer crossovers are set between 80 and 120Hz so this spans that whole region nicely. Ideally, the sweep should sound smooth and consistent in level all the way through. If not, adjust the crossover controls to fine‑tune the response.

Of course, bumps and dips in the low‑frequency response are commonly due to room‑mode resonances, where the wavelengths of sound become similar to the room dimensions and reflections from the room boundaries interfere with the direct sound from the speakers resulting in constructive additions (peaks) and destructive cancellations (dips) in the response at the listening position. The low‑frequency sweep tone mentioned above can help to identify these room modes, but I find a more useful and relevant test signal is a series of scales of staccato musical bass notes — what’s sometimes described as a ‘bass staircase test’. Varying note volumes indicate problematic room modes (and their centre frequencies), but room‑mode resonances also smear the sound energy over time, which is revealed by specific notes being sustained rather than stopping cleanly. Two bass step tone files are provided: one steps upwards, the other downwards. I usually play both of these back‑to‑back. They are in three chromatic octaves (in semitone steps), each C-C: the first one (in round numbers) runs from 32 to 65Hz, the second 65-130Hz, and the third 130-260Hz.
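To relate a misbehaving note to a frequency, it helps to have the note frequencies to hand. The Python sketch below lists the semitone steps from C1 to C4, assuming standard equal temperament with A4 at 440Hz; the article gives only rounded octave boundaries, so the exact tuning of the supplied files is an assumption here.

```python
# C1 lies 45 semitones below A4 (440Hz) in equal temperament.
C1_HZ = 440.0 * 2 ** (-45 / 12)

def semitone_frequencies(start_hz, semitones):
    """Frequencies of a chromatic run starting at start_hz, inclusive."""
    return [start_hz * 2 ** (n / 12) for n in range(semitones + 1)]

staircase = semitone_frequencies(C1_HZ, 36)   # three octaves, C1 up to C4

for step, freq in enumerate(staircase):
    marker = "  <- C" if step % 12 == 0 else ""
    print(f"step {step:2d}: {freq:7.2f}Hz{marker}")
```

If a particular step booms or rings on, the matching frequency in this list gives a starting point for identifying the room mode responsible.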

When working with multiple speakers in a PA situation, it’s often necessary to time‑align speakers at different distances from the stage. A repeating impulse click signal is useful for helping to dial in a delay to provide compensation for the acoustic sound propagation, and I have included two signals for that purpose. One is a virtual impulse consisting of little more than a single cycle of 1kHz tone, and the other is a brief burst of white noise. Both peak to approximately ‑1dBFS and both have 1 second of silence before and after the signal so that it can be looped without risk of truncation. The tone impulse is best for checking latency through converters and DAW interfaces or as a general timing signal, but I find the noise burst better for time‑aligning delay speakers in a PA situation.
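A sensible starting value for the delay can be calculated from the distance between the main and delay speakers before fine‑tuning by ear with the click or noise burst. This Python sketch uses a common linear approximation for the speed of sound in air; the formula, the default temperature and the function names are my assumptions rather than anything specified with the test files.

```python
def speed_of_sound(temp_c=20.0):
    """Approximate speed of sound in air (m/s) at the given temperature."""
    return 331.3 + 0.606 * temp_c

def alignment_delay_ms(distance_m, temp_c=20.0):
    """Delay (ms) matching the acoustic travel time over distance_m metres."""
    return 1000.0 * distance_m / speed_of_sound(temp_c)

def delay_in_samples(distance_m, sample_rate, temp_c=20.0):
    """The same delay expressed as a whole number of samples."""
    return round(sample_rate * distance_m / speed_of_sound(temp_c))

# Delay speakers placed 25m in front of the main PA on a 20-degree day.
print(round(alignment_delay_ms(25.0), 1), "ms")
print(delay_in_samples(25.0, 48000), "samples")
```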

Asymmetrical Tones

It is sometimes helpful to check whether signals passing through a signal processing device, mixer, interface, or loudspeaker retain their original polarity, or become inverted. To help establish this, I have included a set of intentionally-asymmetrical test tones at different frequencies, all with true peak levels of -20dBTP. The asymmetrical nature of these tones often results in slightly low readings on digital sample peak meters, typically displaying around -21dBFS.

Asymmetrical Signal correct.

To use these files, play a selected test tone through the device to be tested, and either record the output in a DAW to view using its waveform display, or view directly on an oscilloscope (real or virtual).

If the signal is passed without a polarity inversion the equipment is said to maintain ‘absolute polarity’ and the waveform display will resemble a bumpy plain with regular high mountain peaks, as shown opposite.

The alternative condition, where signal polarity is inverted, will display as a high mountain plateau with regular deep valleys!

Asymmetrical Signal inverted.

Each of the five asymmetrical files has a different fundamental frequency to allow testing of individual loudspeaker drivers in multi-way loudspeaker systems. For general equipment testing I’d recommend using the 250Hz or 1kHz tones, simply because these tend to show up well on DAWs without too much display zooming. The 80Hz tone is helpful for subwoofers, while the 80 or 250Hz tones can be used on bass drivers, depending on their low-frequency extension. The 3kHz and 7kHz tones are helpful for tweeters, and either the 1kHz or 3kHz tones can be used for midrange units, depending on the cross-over frequency. As always, beware sending high-frequency tones at high level into a loudspeaker’s tweeters!

Summary

In conclusion, there are a great many different recognised test signals for different applications and situations, but I hope this subset of bespoke, carefully curated audio files will prove useful for day‑to‑day calibration and reference, and for system testing and alignment. Finally, please do heed my warnings about replay levels. To protect your loudspeakers and ears, it’s always better to start at a low level and increase it only when you’re confident it is safe to do so!

BLITS

Figure 3: The structure of BLITS, showing the signals carried on each channel during the complete sequence, which is divided into three sections: multi‑channel ident, stereo ident, and multi‑channel phase alignment.

When multi‑channel (5.1) broadcasting started, a simple channel identification system was needed, providing both aural confirmation and, arguably more importantly, visual confirmation on multi‑channel metering systems from DK‑Technologies and RTW (amongst others). Two Senior Sound Supervisors at Sky Broadcasting, Martin Black and Keith Lane, devised a system which they named BLITS (Black and Lane Ident Tones for Surround), and which was subsequently adopted by the EBU (EBU Tech 3304).

BLITS identifies each channel in a 5.1 array in a distinctively musical way based on the key of A, which both aids channel recognition and reduces listener fatigue. The entire sequence is divided into three sections, with the first section comprising sequential musical tones on each of the six channels. These tones follow the standard broadcast channel order (L, R, C, LFE, Ls, Rs) rather than the standard film order, which runs in a clockwise circle (L, C, R, Rs, Ls). The filmic arrangement might be easier to follow acoustically, but is horrendous to observe on a 5.1‑channel broadcast level meter, whereas BLITS displays with each meter bar‑graph rising and falling in turn from left to right, making channel order errors extremely obvious.

Each channel’s ident tone is at ‑18dBFS and lasts 600ms, with a 200ms gap between adjacent channel tones. The specific tone frequencies are 880Hz (A5) on the front left and right channels, 1320Hz (E6) on the centre channel, 82.5Hz (E2) on the LFE channel, and 660Hz (E5) on the rear left and right surround channels.
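The first section's timing and levels can be laid out in a few lines of Python. This is a rough sketch rather than a reference BLITS generator: the function names are my own, the sequence is assumed to start at time zero with no lead‑in, and the dBFS conversion assumes the common convention that a sine wave peaking at full scale reads 0dBFS.

```python
import math

# Values taken from the text: tone level, tone length, gap between tones.
LEVEL_DBFS = -18.0
TONE_S = 0.6
GAP_S = 0.2

# Broadcast channel order, with each channel's ident frequency in Hz.
IDENT_TONES = [
    ("L", 880.0), ("R", 880.0), ("C", 1320.0),
    ("LFE", 82.5), ("Ls", 660.0), ("Rs", 660.0),
]

def dbfs_to_amplitude(dbfs):
    """Convert a dBFS level to a linear peak amplitude (1.0 = full scale)."""
    return 10.0 ** (dbfs / 20.0)

def ident_schedule():
    """Return (channel, freq_hz, start_s, end_s) for each ident tone in turn."""
    schedule = []
    start = 0.0  # assumption: the first tone begins at time zero
    for channel, freq_hz in IDENT_TONES:
        schedule.append((channel, freq_hz, round(start, 3), round(start + TONE_S, 3)))
        start += TONE_S + GAP_S
    return schedule

def tone(freq_hz, duration_s, sample_rate=48000, level_dbfs=LEVEL_DBFS):
    """Generate one sine burst as floats in the range -1.0 to 1.0."""
    peak = dbfs_to_amplitude(level_dbfs)
    count = int(round(duration_s * sample_rate))
    return [peak * math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
            for i in range(count)]

for channel, freq_hz, start_s, end_s in ident_schedule():
    print(f"{channel:>3}: {freq_hz:7.1f} Hz from {start_s:.1f}s to {end_s:.1f}s")
```

With these figures the six ident tones occupy 4.6 seconds in total. The frequencies are also related by simple ratios: 1320Hz is 3/2 of 880Hz, 660Hz is 3/4 of 880Hz, and 82.5Hz is exactly three octaves below 660Hz.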

The middle section of BLITS provides a 1kHz stereo ident tone at ‑18dBFS, following in the EBU theme with a continuous tone on the right channel and an interrupted tone on the left channel. To differentiate it from both standard EBU and GLITS stereo tones, BLITS has four 300ms interruptions, starting after 1 second, with 2 seconds of steady tone at the end. These first two sections are also used to confirm stereo fold‑down levels and channel routing.

Bringing the BLITS sequence to a close is a section comprising 3 seconds of 2kHz tone on all six channels (including the LFE channel) at ‑24dBFS. The idea of this part is to confirm phase relationships between the channels in a form that suits typical multi‑channel metering systems. Including gaps between the three sections, the entire BLITS signal lasts 13.4 seconds before repeating. As this test file is strictly intended for use with surround‑sound TV applications, it is only available in the 48kHz ZIP folder.
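The phase check in this final section can be approximated in software with a normalised correlation between two channels. The sketch below illustrates the general idea only and does not describe how any particular hardware meter works; the `tone` and `correlation` functions are hypothetical helpers, and the 2kHz frequency and ‑24dBFS level are simply the values quoted above.

```python
import math

def tone(freq_hz=2000.0, level_dbfs=-24.0, duration_s=0.01,
         sample_rate=48000, phase_deg=0.0):
    """Generate a sine tone with an optional phase offset in degrees."""
    peak = 10.0 ** (level_dbfs / 20.0)
    offset = math.radians(phase_deg)
    count = int(round(duration_s * sample_rate))
    return [peak * math.sin(2.0 * math.pi * freq_hz * i / sample_rate + offset)
            for i in range(count)]

def correlation(a, b):
    """Normalised correlation: near +1 in phase, near -1 polarity-inverted."""
    count = min(len(a), len(b))
    a, b = a[:count], b[:count]
    numerator = sum(x * y for x, y in zip(a, b))
    denominator = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    if denominator == 0.0:
        return 0.0  # at least one channel is silent
    return numerator / denominator

reference = tone()
print(correlation(reference, tone()))                 # matching channel
print(correlation(reference, tone(phase_deg=180.0)))  # inverted channel
print(correlation(reference, tone(phase_deg=90.0)))   # quadrature channel
```

A reading close to +1 suggests the two channels are in phase, a reading close to ‑1 suggests a polarity inversion somewhere in the chain, and values in between point to a timing or phase offset.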