As the nails are being hammered firmly into the coffin of competitive loudness processing, we consider the implications for those who make, mix and master music.

In a surprising announcement made at last Autumn's AES convention in New York, the well-known American mastering engineer Bob Katz declared in a press release that "The loudness wars are over." That's quite a provocative statement, and while the reality is probably not quite as straightforward as Katz would have us believe (especially outside the USA), there are good grounds to think he may be proved right over the next few years. In essence, the idea is that if all music is played back at the same perceived volume, there's no longer an incentive for mix or mastering engineers to compete in these 'loudness wars'. Katz's declaration of victory is rooted in the recent adoption by the audio and broadcast industries of a new standard measure of loudness and, more recently still, the inclusion of automatic loudness-normalisation facilities in both broadcast and consumer playback systems.

Mastering engineer Bob Katz, who has long campaigned for the end of hyper-compression in mastering, and who recently declared the loudness wars to have been won, by the right side!

In this article, I'll explain what the new standards entail, and explore what the practical implications of all this will be for the way artists, mixing and mastering engineers (from bedroom producers publishing their tracks online to full-time music-industry and broadcast professionals) create and shape music in the years to come. Some new technologies are involved and some new terminology too, so I'll also explore those elements, as well as suggesting ways of moving forward in the brave new world of loudness normalisation.

The Loudness Wars

Anyone who has contemplated putting their own music onto a CD or online, even if only for family and friends, will be all too aware of the 'Loudness Wars'. This long-standing practice of competing to make one record sound loud in relation to others is usually claimed to be the consequence of an observation made in the 1950s that people tended to play the louder-cut records in jukeboxes more often. Thankfully, there's a physical limit to how loud a vinyl record can be cut without making it unplayable, so even the loudest-cut records managed to retain quite reasonable dynamics. Unfortunately, digital recording removed such constraints — a CD, for example, is playable regardless of the amplitude of the encoded audio data — and that simple technical freedom facilitated the 'war' that has been raging with (arguably) ever more musically destructive power over the last 30 years.

The audible consequence has been that the 'volume' of pop, rock and other music recorded and released on commercial CDs has risen steadily since the late 1980s, with a corresponding reduction in dynamics and, in many cases, a trend towards a more aggressive and fatiguing sound character — all in an attempt to make each track as loud as or louder than the perceived competition. It's bewildering to think that the audio format that offered the greatest dynamic range potential ever made available to the consumer is now routinely used to store music deliberately processed with the least possible dynamic range in the history of recorded music!

If Bob Katz is proved right, this current fashion to 'hyper-compress' music may well be relaxed or even reversed in the months and years to come, and the application of compression and limiting will revert to being a purely artistic and musical decision, rather than being an essential process in making a competitive product.

The key to Katz's claim is an ongoing industry shift into a 'loudness normalisation realm', in which the replay level of individual tracks is adjusted automatically to ensure they all have the same overall perceived loudness. This already happens to some extent on commercial broadcast radio, for example, but the new technology makes it practical to employ it in Internet music-streaming services and, crucially, personal music players too. Within a loudness-normalisation environment, it becomes impossible to make any one track appear to sound louder, overall, than any other, so mastering to maximise loudness inherently becomes completely futile.

A second aspect to this paradigm shift is that the kind of hyper-compressed material which the loudness wars encourage ends up sounding very flat, lifeless and even boring when compared with tracks which retain some musical dynamics — and the loudness-normalisation world really encourages and accommodates the use of musical dynamics.

So, in loudness-normalised environments, which are likely to include the vast majority of music replay systems within the next year or two, the use of heavy compression and limiting to make tracks sound loud will no longer work. Each track is balanced automatically to have the same overall loudness as every other track, and hyper-compressed material actually ends up sounding flat, weak and uninteresting compared with more naturally dynamic material.

Katz argues that the universal adoption of the loudness-normalisation paradigm in the consumer market will inevitably create a strong disincentive for the use of hyper-compression, and instead encourage music creators to once again mix and master their music to retain musical dynamics and transients. If this proves to be the case then the loudness wars may indeed be over, at last... but it will be a while before the last skirmishes are fought!

The Way Things Are

The current trend is all about trying to make a CD or download sound as loud as possible compared with other commercial material. Yet listening loudness is easily determined by the user via the volume control — so this really is just about competitive loudness, which is largely destructive and damaging.

In the all-analogue days, mixing consoles, tape machines, vinyl discs and other consumer replay media all employed a nominal 'reference level' — essentially the average programme signal level. Above this reference level, an unmetered space called 'headroom' was able to accommodate musical peaks without clipping. This arrangement allowed different material to be recorded and played with similar average loudness levels, whilst retaining the ability to accommodate musical dynamics too.

With the move into digital audio, the converters in early CD mastering and playback systems weren't as good as they really should have been, and to maximise audio quality, the signal levels had to peak close to 0dBFS (ie. the maximum digital peak level). Consequently, the 'reference level' effectively became the clipping level, and the notion of headroom fell by the wayside, becoming this war's first casualty!

Music producers (in the broadest sense of the term) quickly realised that they could employ techniques to squeeze as much of the audio signal as possible up towards the digital system's maximum peak level, simply because the CD format didn't complain like vinyl cutters did — and now many people seem to think that this is the primary role of a mastering engineer! The typical way that a track is made to sound loud on a CD (or as a download) is by employing heavy limiting and compression, often combined with some spectral shaping, to increase the average energy level and minimise the crest factor, or peak-to-average ratio. These techniques work because the way we perceive loudness is essentially based on the average energy level of a track; the higher the average energy level, the louder the track will sound. The technical term is 'peak normalisation': raising the level of the signal so that its peaks hit a defined maximum level. The flip side of this coin is that we lose the headroom margin, so there is no longer any room for musical dynamics. We can't raise the peak level any further, only the average level through limiting. In extreme cases, the material will become hyper-compressed.
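To put some rough numbers on crest factor and peak normalisation, here is a minimal Python sketch. The function names, the test signal and the crude clipping 'limiter' are all assumptions made purely for illustration; a real mastering limiter is far more sophisticated, and plain RMS is only a stand-in for perceived loudness.

```python
import math

def db(ratio):
    """Convert a linear amplitude ratio to decibels."""
    return 20.0 * math.log10(ratio)

def peak_and_rms(samples):
    """Return (sample peak, RMS) for samples in the range -1.0..1.0."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return peak, rms

def crest_factor_db(samples):
    """Peak-to-average ratio in dB."""
    peak, rms = peak_and_rms(samples)
    return db(peak / rms)

def peak_normalise(samples, target_dbfs=0.0):
    """Scale the signal so its highest sample peak lands at target_dbfs."""
    peak, _ = peak_and_rms(samples)
    gain = 10.0 ** (target_dbfs / 20.0) / peak
    return [s * gain for s in samples]

def hard_limit(samples, ceiling):
    """Crude stand-in for a limiter: clip anything beyond the ceiling."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]

# Hypothetical 'dynamic' signal: a modest 100Hz sine plus one full-scale transient.
dynamic = [0.25 * math.sin(2 * math.pi * 100 * i / 48000) for i in range(4800)]
dynamic[120] = 1.0

# 'Squashed' version: shave off the transient, then peak-normalise what is left.
squashed = peak_normalise(hard_limit(dynamic, 0.25))
dynamic = peak_normalise(dynamic)

for name, sig in (("dynamic", dynamic), ("squashed", squashed)):
    peak, rms = peak_and_rms(sig)
    print(f"{name}: peak {db(peak):.1f}dBFS, RMS {db(rms):.1f}dBFS, "
          f"crest factor {crest_factor_db(sig):.1f}dB")
```

Both versions peak at 0dBFS, but the squashed one carries an average level about 12dB higher, which is exactly the trade of headroom for loudness described above.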

Hyper-compression isn't an intrinsically bad thing: it has its place, just like any other musical effect, and has become an integral part of the sound character of certain musical genres. That's fair enough, because it's used in a musically informed way. But where it's being used purely to try to make a track louder than the competition, it can be quite damaging, either in the strict technical sense (through clipping, pumping and other distortions), or by compromising the musicality to some degree. The extent of the damage can vary from barely noticeable to blatant, depending on the skill of the mastering engineer and the requirements of the client. But, as a general principle, moving away from this long-established but characteristically destructive peak-normalisation practice would be a good thing from many perspectives. That's certainly what the TV industry felt a few years ago — and that's where the origins of the loudness-normalisation technology came from.

Consistent TV Loudness

This unassuming document lays out a standard measure of loudness that tests show to be a very close approximation to the human perception of loudness.

At the heart of the loudness-normalisation movement lies something mysteriously called ITU-R BS.1770, which was developed in response to years of viewer complaints to television companies all around the world about overly loud adverts. (If there's anyone who hasn't noticed that phenomenon, I've yet to meet them!) So, at this juncture, I need to take you on a short diversion into the dominion of the broadcast industry…

Historically, trained broadcast sound operators worked in 'continuity suites' or 'master control' rooms, using their ears and audio skills (with guidance from their console meters) to balance and control the broadcast sound levels, instinctively matching the perceived loudness of different contributions and programmes. This was, therefore, a 'loudness normalised' environment, and it worked very well indeed. Consumer research has revealed that most people ignore modest loudness variations within a 'comfort zone' of 2.4dB above and 5.4dB below their preferred volume setting, which is easy to maintain with trained sound technicians. I can remember that when my father bought our first colour TV, way back in 1970, we almost never had to adjust the TV's volume control, such was the broadcast consistency of the time.

However, skilled staff are expensive and so the broadcasters gradually swapped staff for automated systems, and these simply played scheduled programmes directly to air. Audio level adjustments would only be made if the material exceeded a defined Permitted Maximum Level (PML). Of course, it didn't take the advertisers long to realise that they could exploit these systems by employing heavy compression and limiting to make their adverts sound much louder than other adverts or the enveloping programmes, while keeping peak levels at the PML limit. Their aim, of course, was to make people take more notice of their ads and thus (hopefully) increase sales.

The TV industry had, in effect, drifted into a peak-normalisation environment and, sadly, we're all familiar with the consequence: the constant need to adjust the TV volume between programmes and adverts, as well as between different channels. The loudest adverts could easily be 4-8dB louder than the adjacent programming, far more than the typical tolerance range, hence the flood of complaints to the broadcasters.
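The 'comfort zone' figures quoted earlier make it easy to see why those adverts provoked complaints. The following Python sketch simply checks a loudness offset against that window; the constant and function names are hypothetical, and treating the comfort zone as a hard threshold is a simplification of what is really a statistical preference.

```python
# Comfort zone quoted in the text: 2.4dB above and 5.4dB below the preferred level.
COMFORT_ABOVE_DB = 2.4
COMFORT_BELOW_DB = 5.4

def within_comfort_zone(offset_db):
    """True if a loudness change of offset_db would probably go unremarked."""
    return -COMFORT_BELOW_DB <= offset_db <= COMFORT_ABOVE_DB

# Offsets relative to the preceding programme, in dB.
for offset in (-5.0, 2.0, 4.0, 8.0):
    verdict = "tolerated" if within_comfort_zone(offset) else "reach for the remote"
    print(f"{offset:+.1f}dB: {verdict}")
```

Even the lower end of the 4-8dB range falls outside the window, so every one of the loudest adverts would have had viewers adjusting the volume.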

Electronic Ear

Various attempts over a decade or more to introduce industry self-regulation to address inconsistent TV loudness failed. America even went as far as to legislate, with its Commercial Advertisement Loudness Mitigation (CALM) Act. Meanwhile, research was well underway to find a technical solution, and that culminated in the International Telecommunication Union's (ITU) release of ITU-R BS.1770. This is a technical recommendation which defines a 'loudness metering' algorithm (see side boxes) which provides a standardised, objective and reliable means of measuring, comparing and adjusting programme loudness.

Note that this is about loudness as perceived by human beings, and not simply 'level'. In other words, the ITU-R 'Loudness Meter' algorithm is essentially an 'electronic ear' that perceives audio loudness in much the same way as the average human listener, and it has proved to be very accurate and very reliable. The primary intention behind its development was to enable broadcast automation systems to measure the perceived loudness of supplied programmes and adverts before they were broadcast, so that their loudness levels could be matched in a similar way to that achieved by those trained continuity operators 30 years ago. Plus ça change!

The ITU-R BS.1770 standard was formally introduced back in 2006, and has been adopted by most of the world's HDTV broadcasters. There have been a few updates along the way, and regional broadcast standards bodies have issued their own application guidelines to suit local practices. For example, in Europe the EBU (European Broadcasting Union) version is called R-128, while the USA's variant is known as ATSC's A/85. In essence, though, they all do the same thing, work the same way, and have virtually identical specifications.

In my native UK, all high-definition TV programming on the BBC, ITV, Channel 4 and Sky now complies fully with the R-128 specifications through a voluntary agreement, and the benefits are quite audible. Adverts no longer deafen the audience, changing channels doesn't require an adjustment to the volume, and the viewer enjoys far more consistent programme levels generally. The introduction of loudness-normalisation has been a positive improvement to the TV viewing experience. (If you're still wondering what all this has to do with releasing music on CDs, stick with me — I am getting there, honest!) The BS.1770 recommendations have been implemented on HDTV channels first because they're all digital, and thus have vast dynamic-range capability, very low noise-floors, and absolute level calibration. This means they can accommodate sensible headroom margins without compromising sound quality, and a digital reference level means the same to everyone.

Headroom Margins

An important fact to note about loudness normalisation is that it is nothing more than a static volume adjustment for each programme, based on an assessment of the programme's perceived loudness level, measured over its full duration. That last point is important. The loudness value is determined across the whole programme duration, whether it's a 30-second commercial or a two-hour feature film, and not moment to moment. Once the loudness value is determined, the replay level can be adjusted for that programme or advert, so that its loudness conforms to the defined 'target loudness level' of the broadcast system.

The programme's internal dynamics aren't changed in any way; it can still have quiet moments and loud explosions, if that's what the action calls for. Neither is the programme's spectral balance changed — there's no radio-style multi-band processing involved. Loudness normalisation just does what most listeners do instinctively with their remote control; it adjusts the replay level to a comfortable volume and maintains that automatically between different programmes or channels.
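The 'static volume adjustment' idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the -14LUFS measurement is an invented figure, and the loudness value is taken as given rather than computed with a real BS.1770 meter.

```python
import math

def normalisation_gain_db(measured_lufs, target_lufs=-23.0):
    """One fixed gain offset for the whole programme."""
    return target_lufs - measured_lufs

def apply_static_gain(samples, gain_db):
    """Multiply every sample by the same factor; nothing is compressed."""
    factor = 10.0 ** (gain_db / 20.0)
    return [s * factor for s in samples]

def level_span_db(samples):
    """Ratio in dB between the largest and smallest non-zero sample magnitudes."""
    magnitudes = [abs(s) for s in samples if s != 0.0]
    return 20.0 * math.log10(max(magnitudes) / min(magnitudes))

# Hypothetical programme: quiet passages and one loud moment.
programme = [0.01, 0.02, 0.5, 0.9, 0.02]
gain = normalisation_gain_db(measured_lufs=-14.0)   # assumed measurement
adjusted = apply_static_gain(programme, gain)

print(f"gain applied: {gain:+.1f}dB")
print(f"span before: {level_span_db(programme):.2f}dB, "
      f"after: {level_span_db(adjusted):.2f}dB")
```

Because every sample is scaled by the same factor, the span between the quietest and loudest moments is identical before and after; only the overall replay level moves.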

However, news programmes or chat shows typically have a fairly small dynamic range, whereas a feature film might have a massive dynamic range. The chosen target loudness level has to accommodate both, and that means building in an adequate headroom margin. There's an old-fashioned concept! The BS.1770 recommendation calls for a target loudness level for HDTV of around -23LUFS (Loudness Units relative to Full Scale, see box), although different regional implementations vary slightly (for instance, the ATSC spec is 1LU lower) and programmes being mixed live are allowed to deviate slightly from the target loudness (typically up to ±1LU).

What this means in practice is that the typical volume of normal dialogue (which makes up the bulk of most programmes) will have roughly the same perceived loudness, whether in a news bulletin or a feature film, and that will typically be about -23LUFS. However, while the signal peaks in a news programme might reach only 4dB higher than the average dialogue level, the occasional explosions in a feature film might reach a whopping 20dB higher. The beauty of the loudness-normalisation paradigm is that it doesn't mind, because the target loudness level has been chosen to provide sufficient headroom to cope with such peaks. Importantly, the listener perceives both programmes as having the same overall loudness, despite their very different dynamic ranges.
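The headroom arithmetic behind that comparison is simple enough to sketch in Python. The function names are hypothetical, and the sketch assumes, as the text does, that peak excursions can be counted in dB directly upwards from a dialogue level of about -23LUFS; loudness units and peak levels are not strictly the same scale, so treat the results as approximate.

```python
def peak_level_dbfs(dialogue_level_lufs, peak_above_dialogue_db):
    """Approximate peak level, counted upwards from the dialogue level."""
    return dialogue_level_lufs + peak_above_dialogue_db

def spare_headroom_db(peak_dbfs, full_scale_dbfs=0.0):
    """How far the peaks sit below digital full scale."""
    return full_scale_dbfs - peak_dbfs

TARGET_LUFS = -23.0
for name, peak_above in (("news bulletin", 4.0), ("feature film", 20.0)):
    peak = peak_level_dbfs(TARGET_LUFS, peak_above)
    print(f"{name}: peaks near {peak:.0f}dBFS, "
          f"{spare_headroom_db(peak):.0f}dB still unused")
```

Both programmes sit at the same dialogue loudness; the film simply uses far more of the available headroom than the news bulletin does, and neither runs out of room.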

So, the TV world, finally getting its house in order, is delivering programming with far more consistent overall loudness. Hurrah! But you're still wondering what that's got to do with the way you mix your music. We're getting closer to that, but our detour must first lead us through the world of broadcast radio and Internet streaming services…

Radio Play

TV isn't the only broadcast sector that has a loudness problem. Reflecting the 'jukebox theory' I mentioned earlier, popular music radio stations have long been trying to make their output sound louder than their competitors', in the hope that listeners would choose 'the loudest station on the dial'. Like the CD, though, analogue radio transmitters have a finite maximum level capability, and so complex, automatic, multi-band compression and limiting techniques are routinely employed to maximise broadcast loudness.

So, here we have another example of a peak-normalisation paradigm, but in this case, the broadcast processing generically known as Optimod (although that's a proprietary brand of the Orban company) alters the source music's spectral balance and dynamics, and often does so quite dramatically. This methodology also causes a volume disparity when listeners retune from more conservatively processed classical music or speech stations. Try switching from BBC Radio 4 to Radio 1 to see what I mean!

As the industry moves further into digital radio broadcasting and Internet streaming, with hundreds of stations accessible at the touch of a button, there's a growing consumer pressure to adopt the loudness-normalisation model instead, and the various audio industry bodies are currently working to ratify a standard. At the time of writing, the optimum target loudness value for broadcast radio is still being debated (although it's likely to end up somewhere between -15 and -23LUFS), and I'm not aware of any broadcast radio station that has taken the leap into loudness-normalisation yet. They're probably all awaiting the release of an industry-wide 'standard' to guarantee a level playing field!

Many popular streaming and consumer music playback services, including iTunes, have already adopted loudness normalisation. In fact, Spotify has used a version of it from day one.

The Internet music streaming service Spotify has been using a form of loudness normalisation from the day it was launched, and Apple have recently made the same leap of faith with their new iTunes Radio music streaming service (which at the time of writing is only available in the USA). Consistent loudness is maintained, regardless of the musical genre being streamed, by using iTunes' Sound Check function (see box), and although Apple haven't stated publicly what target loudness value their Sound Check function currently imposes, Bob Katz claims it to be an entirely credible -16.5LUFS. The adoption of a loudness-normalised paradigm in such a widely used music streaming service could have a massive influence not just on the rest of the music streaming industry, but on music creators as well, simply because consumers will become used to loudness-normalised material and the dynamic range capabilities presented in that paradigm. This is the catalyst for Katz's recent press release claiming the loudness war is over.

Music Loudness

So, after meandering around the broadcast industry's working practices, we arrive at the crux of the whole loudness-normalisation subject. A significant proportion of domestic music consumption is via broadcast radio and Internet streaming, and both of those outlets are adopting loudness normalisation. Most popular personal music players also have loudness-normalisation options, like the Sound Check function on Apple's iPods and iPhones, and on computers when using their iTunes playback software, to maintain consistent loudness when music is played in shuffle or playlist modes. Loudness normalisation means there is no advantage in producing 'loud' CDs or downloads, because the perceived loudness will be the same for everything. The really sobering aspect of loudness normalisation is that very heavily compressed music ends up sounding dull and feeble compared with more naturally dynamic material.

Before the panic sets in, I think it's important to appreciate that we're not facing an overnight step change to music production techniques as we know them! Moreover, no-one is forcing anybody to change the way they mix and master their music; if you like and want to hyper-compress your tracks, you still can. However, you also now have the option to employ musical dynamics without worrying about the CD 'not being loud enough'.

Assuming loudness normalisation catches on — and there are certainly some areas where it may not, such as in the club-music scene — the evolution of production and mastering styles to relax hyper-compression and embrace dynamics will be a gradual process. It will take time for people to become familiar with the new metering technology, and working practices will evolve and spread slowly through the industry. Consumers' acceptance and expectations will also develop slowly, and a new generation of music players may be required before loudness normalisation becomes completely practical.

In that respect, this 'loudness revolution' is really no different from the many other radical changes we've already witnessed — and survived — in the music industry, such as high-fidelity microgroove records replacing shellac 78s in the 1950s, or the introduction of stereo in the '60s, of multitracking and overdubbing in the '70s, of synthesizers in the '80s, of music sequencers in the '90s, and of the Digital Audio Workstation at the turn of this century. All of these technologies (and more besides) have both shaped the way music has been made, and influenced the way it actually sounds. The loudness-normalisation paradigm will impart its own influence on the sound of music too, the most important part of which will be to reinvigorate the creative use of dynamics.

The important thing for SOS readers right now is to be aware of the forces driving these potential changes to the long-established working practices, to understand the technology and terminology involved, to recognise the various benefits and pitfalls, and to ponder upon the creative opportunities that this changing paradigm presents.

The Effect Of Loudness Normalisation

The most pressing question on the mind of most readers right now is probably "How will loudness normalisation affect the sound of my mixes?" The answer is that, unlike Optimod-style broadcast processors, it won't change the sound you create at all; it will only change the volume. Note, however, that this change in volume can dramatically affect your perception of a track! In a peak-normalisation domain, the perceived loudness of a hyper-compressed track counteracts any sense of dynamic constriction. But when that loudness is taken out of the equation in a loudness-normalised environment, the dynamic constriction often becomes glaringly obvious and undesirable.

I mentioned earlier how loudness-normalised systems typically employ a relatively low loudness target level and have a relatively large headroom margin. That headroom margin is there to accommodate dynamic material, and it can be used to creative advantage. While the average energy level of an entire track determines its loudness, it's quite permissible to employ a large peak-to-average ratio to introduce dynamics and transients without affecting the overall loudness. Consequently, tracks with large crest factors will inherently sound far more punchy, dynamic, and musically and aurally interesting than heavily squashed tracks. The differences aren't usually subtle, either! Loudness normalisation really punishes hyper-compressed music, revealing it to be the solid 'audio brick' that it so often is, while music with natural dynamics and transients shines like a beacon by comparison.

These two screen grabs show two different tracks side by side. As you can see from the top screen, where both tracks have been peak-normalised, the track on the right has been treated to significant loudness processing. The second screen shows the same two tracks matched for loudness as defined by the new standards — and the audible differences are as startling as the visual ones!

The two diagrams here hopefully illustrate these points better than my words. These are screen grabs from Cockos Reaper comparing two stereo tracks ripped directly from commercial CDs. The one on the left (coloured here in red) is Chris de Burgh's 'Devil's Eye', taken from an early 1980s CD release, while the one on the right (in green) is Grand Prix's 'Samurai', taken from a much more recent, remastered 'greatest hits' album. I apologise for my (lack of) musical taste, but these tracks highlight the issues nicely.

As you can see, the Chris de Burgh track isn't heavily limited or compressed at all, and it exhibits quite wide-ranging musical dynamics building and falling throughout the track. Using a BS.1770-compatible loudness meter I measured the True Peak level at -1.4dBTP, and the Integrated Loudness measured -18.8LUFS for the entire track. In contrast, the Grand Prix track has a true peak level of +0.5dBTP (in other words, some inter-sample clipping is present!) and an Integrated Loudness of -7.8LUFS, which is 11dB louder than the de Burgh track. This loudness disparity is painfully obvious if you play the two tracks back-to-back. Interestingly, I also have the original CD album containing that Grand Prix track (I was a closet head-banger back in the day!) and that version measures 0dBTP and -9.6LUFS. Clearly, the 'greatest hits' re-release suffered some further destructive compression and peak limiting during its re-mastering!
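A True Peak reading above 0dBTP can seem odd when no individual sample exceeds full scale, so here is a small Python sketch of how inter-sample peaks arise. It uses a contrived worst case (a sine at a quarter of the sample rate, offset by 45 degrees) and cheats by evaluating the known waveform on a finer grid; a real True Peak meter instead reconstructs the waveform from the samples with an oversampling filter. All names and values are assumptions for illustration.

```python
import math

def to_db(amplitude):
    """Linear amplitude to dB relative to full scale (1.0)."""
    return 20.0 * math.log10(amplitude)

FS = 48000                 # sample rate in Hz
FREQ = FS / 4              # a sine at exactly a quarter of the sample rate
PHASE = math.pi / 4        # 45-degree offset: no sample lands on a crest
OVERSAMPLE = 16            # finer grid used to 'see' between the samples

def waveform(t_seconds):
    """The underlying continuous signal, before any scaling."""
    return math.sin(2 * math.pi * FREQ * t_seconds + PHASE)

# Sample the waveform, then peak-normalise the samples to 0dBFS.
raw = [waveform(n / FS) for n in range(64)]
gain = 1.0 / max(abs(s) for s in raw)
samples = [s * gain for s in raw]

# Evaluate the same scaled waveform between the sample instants.
fine = [gain * waveform(n / (FS * OVERSAMPLE)) for n in range(64 * OVERSAMPLE)]

sample_peak_db = to_db(max(abs(s) for s in samples))
true_peak_db = to_db(max(abs(s) for s in fine))
print(f"sample peak: {sample_peak_db:+.2f}dBFS")
print(f"peak between samples: {true_peak_db:+.2f}dBTP")
```

Every stored sample sits at or below full scale, yet the waveform those samples describe swings about 3dB higher between them, which is the kind of overshoot a positive dBTP reading reveals.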

The lower screen grab shows what happens to these same two tracks in a loudness-normalised environment. In this case I employed a target loudness of -23LUFS — the HDTV standard, and a little lower than the current iTunes Radio setting. Now, the incredibly loud Grand Prix track (in green) has been attenuated to bring its loudness in line with the target value, with the result that its peak level is now just -13.7dBTP, with virtually no variation other than for the intro and a break towards the end. The Chris de Burgh track needed less attenuation to match the loudness target and, importantly, its more dynamic nature means its transient peak level reaches -4.5dBTP, a full 9dB higher than the Grand Prix track, despite both having equal overall loudness.

So, the track that was remastered to sound amazingly loud and 'in your face' has actually come out sounding flat, overly compressed and aurally uninteresting compared with the far more dynamic, but originally 11LU quieter, track. Not surprisingly, it's the Chris de Burgh track which comes across as the more interesting and musical, and is a more pleasurable listening experience. I'd encourage anyone to try this sort of comparison for themselves: hearing is believing.
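For anyone wanting to try the comparison, the bookkeeping can be sketched in Python using the loudness and True Peak figures measured above. The function names are hypothetical; the 'peak-to-loudness ratio' here is simply the True Peak minus the Integrated Loudness, a figure that a static gain change cannot alter.

```python
def normalisation_gain_db(integrated_lufs, target_lufs):
    """Static gain needed to bring a track to the target loudness."""
    return target_lufs - integrated_lufs

def peak_to_loudness_ratio(true_peak_dbtp, integrated_lufs):
    """How far the peaks rise above the track's overall loudness, in dB."""
    return true_peak_dbtp - integrated_lufs

TARGET_LUFS = -23.0
# Measurements quoted in the text.
tracks = {
    "Devil's Eye": {"true_peak": -1.4, "loudness": -18.8},
    "Samurai": {"true_peak": 0.5, "loudness": -7.8},
}

ratios = {}
for title, m in tracks.items():
    gain = normalisation_gain_db(m["loudness"], TARGET_LUFS)
    ratios[title] = peak_to_loudness_ratio(m["true_peak"], m["loudness"])
    print(f"{title}: gain {gain:+.1f}dB, peak-to-loudness ratio {ratios[title]:.1f}dB")

advantage = ratios["Devil's Eye"] - ratios["Samurai"]
print(f"extra peak room used by the dynamic track: {advantage:.1f}dB")
```

The louder master needs 11dB more attenuation to reach the same target, and once both tracks are matched the more dynamic one is left with roughly 9dB more room for its peaks, in line with the difference described above.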

Conflicting Mastering

As you can probably imagine, during the music industry's transition from a peak-normalisation workflow to one driven by loudness-normalised consumer outlets, there will be conflicting mastering requirements. Should a track be mastered to sound as loud as last year's music when played on CD? Or should it be mixed and mastered to be a little more dynamic so that it fares better when played on loudness-normalised radio and streaming services? Decisions, decisions!

It's not uncommon at the moment for chart artists (or their labels) to release loud CD and download masters for the general public, along with separate, less squashed, radio-play versions for the broadcasters that will better survive current radio processing technologies. Where the material is released on vinyl as well, another even less compressed master is often produced too, simply because vinyl can't cope with a lot of heavy compression and limiting.

I suspect a similar multi-release arrangement will permeate the mastering industry for a few years to come, as loudness normalisation gradually becomes de rigueur, perhaps with separate mastered versions optimised for loudness-normalised and peak-normalised destinations. I can also foresee some artists and labels revisiting their back-catalogue material and having it remastered to sound better in loudness-normalised environments. I can't wait!

Practical Mixing With Loudness In Mind

As far as mixing is concerned, there's no need to change anything about the way you approach or balance your mixes; all of your existing skills still apply. The mix is whatever the music calls for, just as it has always been, whether that means dynamically varied or constrained. Some musical genres involve building the mix from the ground up deliberately to sound dense and powerful, and if that is what is appropriate to the work in hand then carry on in the same way. However, mixing a track to sound loud, purely for the sake of competing in the 'loudness wars', will clearly be a futile effort if the track is destined for loudness-normalised (ie. most) outlets. The practice of emphasising the mid-range energy of a mix, or curtailing the low end, which is commonly employed to maximise the perceived loudness, will be counter-productive.

Since loudness-normalised replay systems enjoy a reasonable headroom margin, the opportunity is provided to make use of that by allowing occasional loud transients, build-ups and break-downs. All of our senses respond to change, so transients are perceived as exciting and interesting. Consequently, mixes will have more impact and interest if you deliberately make use of transients such as emphatic drum breaks and general dynamic changes. I'll stress what I said earlier: the headroom is there to be employed to creative advantage! Just remember that loudness is determined by the average level — so occasional loud bits are fine. Continuous loud bits will give a higher Integrated Loudness value.

Referencing is the one area where some changes to working practices will be required. Ideally, reference tracks should be assessed for loudness and then auditioned at the same Target Loudness level as the mix-in-progress. That way, you won't feel obliged to compete in the loudness war, and you can concentrate on matching tonality and character. Of course, most back-catalogue reference tracks will have been produced to sound loud and so will be dynamically fairly flat. In comparison with that kind of reference a dynamic mix-in-progress will inevitably sound more sonically interesting. Most mix engineers and consumers will find that very refreshing!

To work in a loudness-normalised environment, I would urge the use of a BS.1770-compatible meter plug-in inserted in the stereo mix channel, and set up with an appropriate target level. In the absence of a recognised music industry standard at the moment, I suggest that the iTunes Sound Check level of around -16LUFS would be appropriate. The absolute target level is not particularly critical: it's matched loudness with your reference material that's the aim here, along with a reasonable headroom margin to accommodate wanted transients and dynamics.

The aim while mixing is to achieve an Integrated Loudness value for the entire track which is as close as possible to that target value, with the True Peak level not exceeding -1dBTP. If you already track and mix with a sensible headroom margin — as you should — this shouldn't be too different from your normal practice.

The difficulty most have when first starting to work with a loudness meter is in trying to hit the target level straight away. This is not necessary: the only loudness value that matters is the one showing when you get to the very end of the track. Most songs build gradually to a crescendo at the end, so initially you would expect the Integrated Loudness value to start low and gradually creep up as the mix progresses. At the end of the day, the meter is measuring loudness in a very similar way to our own perception, so if it sounds dynamically balanced to your ears the meter generally agrees! Also, don't obsess about hitting the target level precisely — that's the role of the consumer's replay system — just try to get it within a couple of LU.
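The two checks described above (Integrated Loudness within a couple of LU of the target, True Peak no higher than -1dBTP) are easy to express in code. This is only an illustrative Python sketch: the function name and default values are assumptions based on the figures suggested in this section, and the readings would come from whatever loudness meter you use.

```python
def check_mix(integrated_lufs, true_peak_dbtp,
              target_lufs=-16.0, tolerance_lu=2.0, max_peak_dbtp=-1.0):
    """Return a list of warnings; an empty list means the mix passes."""
    warnings = []
    offset = integrated_lufs - target_lufs
    if abs(offset) > tolerance_lu:
        warnings.append(f"Integrated loudness is {offset:+.1f} LU from target")
    if true_peak_dbtp > max_peak_dbtp:
        warnings.append(
            f"True peak {true_peak_dbtp:.1f} dBTP exceeds {max_peak_dbtp:.1f} dBTP")
    return warnings

# Readings taken at the very end of the track (hypothetical values).
print(check_mix(integrated_lufs=-15.2, true_peak_dbtp=-1.5))
print(check_mix(integrated_lufs=-11.0, true_peak_dbtp=-0.3))
```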

The biggest workflow difference is what happens after you profess yourself happy with the final mix. Currently the next stage for most people would be a 'mastering pass' where the headroom is stripped out and the track made to sound as loud as the reference mixes. Clearly, that competitive loudness processing will no longer be necessary or appropriate — at least not as far as radio, streaming and download consumers are concerned. Instead, the mastering process can focus on optimising the spectral balance and achieving a consistent sound character across related tracks — or to put it another way, genuine polish rather than heavy sanding!

Round Up

There's no doubt that large parts of the music industry are embracing loudness normalisation, and although it will take time to become the standard way of working everywhere, it looks certain that this change will become universal. In a loudness-normalised environment the current style of peak-normalised material doesn't fare well at all: the perceived loudness benefit is lost, and the sonically and musically destructive consequences of hyper-compression are clearly exposed.

Instead, loudness normalisation positively encourages the use of dynamics and transients. Tracks are made punchy by being dynamic rather than just loud, and compression and limiting become musical effects rather than essential competitive processing. Headroom is restored, inter-sample clipping is banished, and the digital environment finally achieves the sonic quality and dynamic range of which it is capable.

No-one likes change, and long-held working practices will take time to overcome, but loudness normalisation is a win-win from the perspectives of both musical and sonic quality. There will inevitably be some short-term practical difficulties with monitoring levels in some consumer personal music players, and until all the DAW manufacturers incorporate BS.1770-compliant meters, some third-party plug-ins may be required. Overall, though, the benefits far outweigh the difficulties, and I urge you to familiarise yourself with the new metering and the creative opportunities that loudness normalisation presents.

The New Normal: ITU-R BS.1770

In July 2006, the ITU published a paper with the catalogue number ITU-R BS.1770. The '-R' indicates that it is a 'recommendation' paper, and the 'BS' prefix means it falls within its Broadcast service (Sound) series. The code numbers don't mean much to anyone, of course, but the paper's title is considerably more informative: 'Algorithms To Measure Audio Programme Loudness And True Peak Audio Level'.

The original document has been superseded three times so far, with refinements being determined by the PLOUD group, which comprises 240 creative and technical expert members, including independent practitioners and members of a number of professional bodies and manufacturers. BS.1770-1 was released in September 2007, BS.1770-2 in March 2011, and the current edition BS.1770-3 in August 2012. All four versions are available from: http://www.itu.int/rec/R-REC-BS.1770 if you're interested in the evolution, but only BS.1770-3 is relevant today. The various updates reflect small evolutions of the loudness algorithm at the heart of the recommendation, mainly after feedback from the EBU and other interested parties. The current version of the algorithm is more sensitive to isolated loud peaks, and places less weight on low-level background sounds compared with the original. A 'loudness range' calculation to indicate the perceived dynamic range was also incorporated. Obviously, this means that any attempts to fool the system with clever mix trickery can be accounted for in future updates.

The bulk of BS.1770-3 is concerned with defining a reliable, objective measurement of the subjective loudness of a piece of audio material, such as a TV programme, film, or advertisement. The aim is to allow material from disparate sources to be compared impartially (and automatically, if required), so that the subjective loudness can be matched between them with a high degree of consistency. The recommendation also details, in an appendix, the requirement and methodology for True Peak metering in the digital domain, specifically to avoid missing inter-sample peaks and thus inadvertent clipping in subsequent signal processing or format conversion.

Loudness Metering Algorithm

This diagram works for both stereo and surround signals.

The loudness-metering algorithm detailed in the latest BS.1770-3 (and in EBU Tech Doc 3341) document involves four distinct stages: response filtering, average-power calculation, channel weighting and summation.

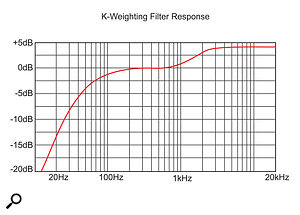

The response filtering stage is called 'K-weighting' and it combines a boosting high-shelf equaliser with a high-pass filter. The shelf equaliser provides 4dB boost above about 2kHz and is intended to replicate the acoustic effects of the head. The high-pass filter simulates our reduced sensitivity to low frequencies when it comes to assessing loudness, with a 12dB/octave filter turning over at roughly 100Hz.
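To get a feel for the shape of the K-weighting curve, the combined response of the two filters can be evaluated at a few spot frequencies. The Python sketch below uses the two biquad coefficient sets published for a 48kHz sample rate, quoted here as I recall them from the recommendation's tables, so treat them as an assumption and check them against the document itself before relying on them. The function names are hypothetical.

```python
import cmath
import math

FS = 48000.0  # the coefficients below are only valid at this sample rate

# (b0, b1, b2, a1, a2) for each biquad, with a0 normalised to 1.
# Assumed values: the 48kHz coefficients tabulated in BS.1770.
SHELF = (1.53512485958697, -2.69169618940638, 1.19839281085285,
         -1.69065929318241, 0.73248077421585)
HIGH_PASS = (1.0, -2.0, 1.0, -1.99004745483398, 0.99007225036621)

def biquad_gain(coeffs, freq_hz, fs=FS):
    """Linear magnitude response of one biquad at freq_hz."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-2j * math.pi * freq_hz / fs)  # z^-1 on the unit circle
    z2 = z1 * z1
    return abs((b0 + b1 * z1 + b2 * z2) / (1.0 + a1 * z1 + a2 * z2))

def k_weighting_db(freq_hz):
    """Combined gain of the shelf and high-pass stages, in dB."""
    gain = biquad_gain(SHELF, freq_hz) * biquad_gain(HIGH_PASS, freq_hz)
    return 20.0 * math.log10(gain)

for f in (20, 50, 100, 1000, 2000, 10000):
    print(f"{f:>6} Hz: {k_weighting_db(f):+6.2f} dB")
```

Running it shows the two features described above: progressively less weight given to the low bass, and a boost approaching 4dB at the top end.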

After this simple filtering, the next stage determines the average signal power using a mean-square calculation (ie. the average of the squared values of sample amplitudes). The power is averaged over a 400ms measuring period which is updated every 100ms, and the result may then be adjusted or 'weighted' before being summed with any other related audio channels. In a stereo system, the average power levels for the left and right channels are simply added together. In a surround system, the front three channels are added together, and the two rear channels are raised in level by 1.5dB relative to the front channels before they're added to the combination. This makes the metering slightly more sensitive to the rear-channel energy, which correlates more closely to human perception. In surround systems the LFE (low-frequency effects) contribution is ignored completely as far as loudness assessments are concerned.

Following this channel summation, the loudness value is given as a logarithmically scaled number in Loudness Units relative to digital full scale — so it will always be a negative number. The EBU prefer to use the absolute units of LUFS, while the ATSC in America use LKFS (loudness, K-weighted, relative to full scale), but the calculations are identical and the two terms are really interchangeable.

Where a relative (rather than absolute) loudness scale is more appropriate or convenient, the deviation in loudness from a given Target Loudness level can be given in Loudness Units or LU. For example, if the target level is -23LUFS but the material actually measures -20LUFS, its loudness offset would be described as +3LU.
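The power, weighting and summation stages described above reduce to a few lines of arithmetic. The Python sketch below assumes the channel samples have already been K-weighted, uses the recommendation's constant of -0.691 to align the scale, and represents the 1.5dB rear-channel lift as a power weight of 1.41. The function names and channel labels are hypothetical, and a real meter would apply this to each 400ms block rather than to a whole buffer.

```python
import math

# Front channels are weighted 1.0 and rear channels about +1.5dB (1.41).
# The LFE channel has no entry, so it is left out of the sum altogether.
CHANNEL_WEIGHTS = {"L": 1.0, "R": 1.0, "C": 1.0, "Ls": 1.41, "Rs": 1.41}

def mean_square(samples):
    return sum(s * s for s in samples) / len(samples)

def loudness_lufs(k_weighted_channels):
    """k_weighted_channels maps a channel name to its K-weighted samples."""
    total = sum(CHANNEL_WEIGHTS[name] * mean_square(samples)
                for name, samples in k_weighted_channels.items()
                if name in CHANNEL_WEIGHTS)
    return -0.691 + 10.0 * math.log10(total)

def offset_lu(measured_lufs, target_lufs):
    """Relative loudness in LU, positive when louder than the target."""
    return measured_lufs - target_lufs

# Ten cycles of a full-scale 1kHz sine at 48kHz, standing in for filtered audio.
tone = [math.sin(2.0 * math.pi * 1000.0 * n / 48000.0) for n in range(480)]
print(f"Stereo tone: {loudness_lufs({'L': tone, 'R': tone}):.2f} LUFS")
print(f"Offset for -20LUFS against a -23LUFS target: {offset_lu(-20.0, -23.0):+.0f} LU")
```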

As you can see, the K-filter that's applied to the signal removes much of the bass energy below 100Hz, as this doesn't usually affect our perception of loudness.

Normally, the loudness value of any pre-recorded material can be measured and the replay level adjusted to ensure precise conformity with the Target Loudness, but where exact loudness normalisation is not practical, such as for live broadcasts, the current EBU R128 HDTV broadcast standard calls for a loudness deviation of no more than ±1LU.

Gating & LRA: Although the basic principles of the loudness algorithm are fairly straightforward, there's some added complexity to make sure that quiet periods don't drag the average loudness value down unfairly. For a start, if the signal level lies below -70LUFS it plays no part in the loudness measurement process. This makes sure that silent sections at the start and end of a programme don't affect the loudness value.

Secondly, any audio that falls more than 10LU below the current programme loudness value is also excluded from the measurement, so that pauses in dialogue, or quiet sections between action sequences, don't drag the figure down. In this way the loudness algorithm focuses only on the 'foreground' sounds.
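The two gates can be sketched in a few lines of Python. This version works on a list of block loudness values (one per 400ms block) and assumes that the relative threshold is set 10LU below the loudness of everything that survived the -70LUFS gate, which is my reading of how the recommendation implements the idea. The function names are hypothetical.

```python
import math

ABSOLUTE_GATE_LUFS = -70.0
RELATIVE_GATE_LU = -10.0

def power_mean_db(levels):
    """Average a list of LUFS values in the power domain."""
    total = sum(10.0 ** (level / 10.0) for level in levels)
    return 10.0 * math.log10(total / len(levels))

def integrated_loudness(block_lufs):
    """Gated programme loudness from a list of 400ms block loudness values."""
    audible = [level for level in block_lufs if level > ABSOLUTE_GATE_LUFS]
    if not audible:
        return float("-inf")
    threshold = power_mean_db(audible) + RELATIVE_GATE_LU
    foreground = [level for level in audible if level > threshold]
    return power_mean_db(foreground)

# Loud section, digital silence, then a quiet background passage.
blocks = [-20.0] * 10 + [-80.0] * 10 + [-40.0] * 10
print(f"Ungated average: {power_mean_db(blocks):.1f} LUFS")
print(f"Gated loudness:  {integrated_loudness(blocks):.1f} LUFS")
```

In this made-up example the silence and the background passage pull the ungated average down by several LU, whereas the gated figure reflects only the loud foreground section.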

Another facet of the loudness algorithm is the calculation of a 'Loudness Range' or LRA figure, which essentially indicates by how much the programme dynamics are being controlled. The LRA value is derived by analysing a continuously sliding three-second loudness assessment window, with any values more than 20LU below the current programme loudness level gated out. Again, this ensures that the LRA figure reflects the range of foreground audio signals, and ignores low-level background noise and the system noise floor.

The distribution of these short-term loudness levels is further quantified by setting 10 and 95 percentile limits so that the LRA figure is actually the difference between the loudness levels at the 10 and 95 percent points in the total measured range. The lower 10 percent limit prevents, for example, the fade-out of a music track from dominating the LRA value, while the upper 95 percent limit ensures that a single, unusually loud, sound, such as a gunshot, cannot on its own be responsible for a large LRA value. A typical action feature film might have an LRA of 25, while most normal TV programmes would be closer to 10, and compressed pop music could have an LRA value of 3 or even less.
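The LRA calculation described above can be sketched as follows in Python. It takes a list of three-second short-term loudness readings, applies the -70LUFS and 20LU gates, and returns the spread between the 10th and 95th percentiles. The linear-interpolation percentile used here is a simplifying assumption, and the EBU's Tech 3342 document should be consulted for the exact method. The function names are hypothetical.

```python
import math

def percentile(sorted_values, pct):
    """Linearly interpolated percentile of an already sorted list."""
    pos = pct * (len(sorted_values) - 1) / 100.0
    lo = int(math.floor(pos))
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] + frac * (sorted_values[hi] - sorted_values[lo])

def loudness_range(short_term_lufs):
    """LRA in LU from a list of three-second short-term loudness values."""
    audible = [level for level in short_term_lufs if level > -70.0]
    if not audible:
        return 0.0
    mean_power = sum(10.0 ** (level / 10.0) for level in audible) / len(audible)
    threshold = 10.0 * math.log10(mean_power) - 20.0  # relative gate
    kept = sorted(level for level in audible if level > threshold)
    return percentile(kept, 95) - percentile(kept, 10)

# A steady build from -30 to -10LUFS, followed by a very quiet tail.
ramp = [-30.0 + 0.2 * i for i in range(101)]
print(f"LRA of the ramp:       {loudness_range(ramp):.1f} LU")
print(f"LRA with a quiet tail: {loudness_range(ramp + [-60.0] * 5):.1f} LU")
```

The quiet tail falls below the relative gate, so it has no effect on the result.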

True Peak Metering

A key element of the BS.1770 recommendation is a True Peak meter to evaluate the actual peak level of the reconstructed audio waveform. This is important because, although the replay level of material is determined by its loudness, and a generous headroom margin is usually allowed, the physical medium still has a finite clipping level which must be protected.

Critically, the True Peak meter algorithm recognises inter-sample peaks which are often missed by the simple sample-peak meters typically provided in DAW software and digital hardware. Sample-peak meters display only the highest amplitude value of a group of consecutive samples, and the scale is calibrated in dBFS (decibels relative to full scale), with 0dBFS at the top. To differentiate a True Peak meter reading from a conventional sample-peak meter, the new scale is marked in dBTP (decibels, true peak), and typically goes up to +3 or +6dBTP. The simpler Loudness meter plug-ins often don't show a True Peak meter at all, instead providing just an overload light which illuminates when the signal exceeds a defined threshold, usually -1dBTP.
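A classic way to see an inter-sample peak is a full-scale sine at a quarter of the sample rate, phased so that every sample lands 45 degrees either side of a crest. The Python sketch below is a deliberately simplified illustration with hypothetical function names. It evaluates the known sine on a grid four times denser, whereas a real True Peak meter has no such knowledge and must rebuild the waveform with an interpolation filter.

```python
import math

def db(x):
    return 20.0 * math.log10(x)

def sine_peak(samples_per_cycle, phase=math.pi / 4, cycles=8):
    """Highest absolute sample value of a full-scale sine on a given grid."""
    n = samples_per_cycle * cycles
    return max(abs(math.sin(2.0 * math.pi * i / samples_per_cycle + phase))
               for i in range(n))

sample_peak = sine_peak(4)   # fs/4 tone seen at the native sample rate
true_peak = sine_peak(16)    # the same tone on a grid four times denser

print(f"Sample-peak reading: {db(sample_peak):+.2f} dBFS")
print(f"Peak on the 4x grid: {db(true_peak):+.2f} dBTP")
```

The sample-peak reading sits about 3dB below the real crest of the waveform, which is exactly the kind of error a True Peak meter is there to catch.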

To detect inter-sample peaks a True Peak meter employs a relatively high oversampling ratio which allows it to more fully reconstruct the continuous audio waveform. BS.1770 calls for a minimum oversampling ratio of 4x, which gives a maximum possible under-read error of slightly less than 0.7dB. For that reason, the EBU recommends setting the permitted maximum level (PML) to -1dBTP, thus guaranteeing that the signal can never cause an overload or clipping in any following digital processing equipment or converters. (The ATSC A/85 recommendation sets a slightly more conservative PML of -2dBTP.)

Not surprisingly, the higher the oversampling ratio, the smaller the possible inter-sample under-read. An 8x oversampling ratio gives a maximum error of slightly less than 0.2dB, and 16x oversampling is accurate to within 0.05dB. However, higher oversampling ratios involve a greater processing overhead, and in practical terms the benefit is negligible in most cases. So the EBU's PML of -1dBTP, based on the more manageable 4x ratio called for by BS.1770, is a very pragmatic solution, and one adopted by the vast majority of loudness meter plug-in manufacturers.
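The under-read figures quoted above can be reproduced with a simple worst-case model. The Python sketch below assumes an ideal interpolator and a sine at half the original sample rate whose crest falls exactly midway between two oversampled points. That model is my assumption rather than something spelled out in the text, but it matches the quoted numbers. The function name is hypothetical.

```python
import math

def max_under_read_db(oversampling_ratio):
    """Worst-case under-read for a sine at half the original sample rate."""
    # The nearest oversampled points sit pi / (2 * ratio) radians from the crest.
    nearest = math.cos(math.pi / (2.0 * oversampling_ratio))
    return -20.0 * math.log10(nearest)

for ratio in (4, 8, 16):
    print(f"{ratio:>2}x oversampling: up to {max_under_read_db(ratio):.3f} dB under-read")
```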

Typical Loudness Meter Displays

We are accustomed to working with level meters that share very recognisable display characteristics. For example, VU meters are instantly familiar, with their typically yellow background scaled with 100 percent modulation under 0VU, with the red section of the scale stopping usually at +3dB. Quasi-peak meters are also quite recognisable, with their typically black background and white markings — especially the BBC's PPM, scaled simply from 1 to 7. Similarly, all digital sample meters are bar-graphs, and scaled with 0dBFS at the top, going down to -50dBFS or more, and often with an expanded range over the top 6dB or so.

However, none of the loudness meter recommendation documents or regional implementation standards dictate what a loudness meter should look like — they only specify how the numbers should be calculated! I personally think this is a major failing of the standards bodies, because the natural consequence is that every single manufacturer that produces a BS.1770-compliant loudness meter does so with its own unique layout and graphics. Not surprisingly, the resulting variation confuses the heck out of most users, and acts to discourage the uptake of loudness metering because no-one is quite sure which version to buy! The good news, though, is that all loudness meters calculate the displayed numbers in exactly the same way, and they all show the same things, just in different places and in different ways.

The single most important parameter is the 'Programme Loudness' or 'Integrated Loudness' (I) value, which is the overall loudness of the entire programme item. This parameter is usually emphasised in the meter display somehow, often by being larger than any other number or in a prime location. The Integrated Loudness value should equal the Target Loudness value by the end of the programme.

The Integrated Loudness value is always associated with a start/stop/reset timer function, which determines the duration of the measurement. The system should be reset and started before the start of the programme material, and stopped at the end, either manually or automatically by being linked to the DAW transport commands in some systems. The actual pre-roll start and post-roll stop times before and after the programme aren't critical because of the -70LUFS gate which ensures the programme loudness value isn't affected by silences.

In addition to the Integrated Loudness value, most loudness meters display four other parameters one way or another, most commonly as simple numeric values in boxes somewhere, but sometimes with bar-graphs. The first two are usually labelled M and S. The M value is 'Momentary' and based on a sliding 400ms window updated every 100ms, so that it gives an indication of the instantaneous loudness. This is intended to aid initial level-setting when starting a mix, and in many meters the M value is displayed as a bar-graph, where it behaves in a very similar way to a standard VU meter.

The S value is the 'Short-Term' or 'Sliding' loudness value, which is based on a sliding three-second window. When mixing material on the fly, the S value is the one to keep an eye on as it responds reasonably quickly to mixing adjustments and provides a good indication of where the mix loudness is in relation to the target value, moment to moment.
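The difference between the M and S readings is purely one of window length, as this Python sketch shows. It assumes the meter already has a K-weighted, channel-summed mean-square value for every 100ms step, and the function names are hypothetical.

```python
import math

STEP_MS = 100  # both readings are refreshed every 100ms in this sketch

def sliding_loudness(step_mean_squares, window_ms):
    """One loudness value per step, averaged over the preceding window."""
    width = window_ms // STEP_MS
    readings = []
    for end in range(width, len(step_mean_squares) + 1):
        window = step_mean_squares[end - width:end]
        power = max(sum(window) / width, 1e-12)  # floor avoids log10(0) on silence
        readings.append(-0.691 + 10.0 * math.log10(power))
    return readings

def momentary(step_mean_squares):
    return sliding_loudness(step_mean_squares, 400)

def short_term(step_mean_squares):
    return sliding_loudness(step_mean_squares, 3000)

# Four seconds of quiet material followed by four seconds 10dB louder.
steps = [0.01] * 40 + [0.1] * 40
m_values = momentary(steps)
s_values = short_term(steps)
print(f"M, 400ms after the jump: {m_values[40]:.1f} LUFS")
print(f"S, 400ms after the jump: {s_values[14]:.1f} LUFS")
```

The M reading has fully caught up with the level change within 400ms, while the S reading is still only part of the way there and needs the full three seconds to settle.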

The True Peak Level may also be displayed, either on a bar-graph or as a numeric value, and the normal maximum permitted value is -1dBTP (-2dBTP for ATSC A/85 installations). Anything higher than that indicates the presence of inter-sample peaks, which will cause internal overloads and clipping in any digital signal processing that involves oversampling, and that includes all modern D-A converters, sample-rate converters, lossy data-reduction codecs such as MP3 and AAC, and most EQ and dynamics plug-ins. Some meters don't display the actual True Peak value, but illuminate a warning indicator if the signal exceeds a preset PML threshold.

The final parameter, which not all meters provide, is the loudness range or LRA value, which is expressed in LU. Currently there is no prescribed acceptable range, but a typical action feature film might exhibit an LRA of around 25LU, while a typical TV programme might be about 10LU. The LRA of music varies widely depending on the style and genre, but I would suggest somewhere between 3 and 15LU would be acceptable for most pop music styles, and a little wider for classical and jazz, etc. To provide some points of reference, Chris de Burgh's 'Devil's Eye' used in the earlier illustration has a pretty big LRA of 14.4LU, while the main 'brick' body of the Grand Prix 'Samurai' track has an LRA of just 1.5LU.

Most loudness meter systems also include some form of 'history' chart. Often this is shown as a horizontally scrolling graph of loudness levels, sometimes overlaying the Integrated, Short-term and Momentary loudness values on the Target Loudness threshold. An alternative method, used by TC Electronic, is to display the history as a rotating 'radar display'.

Since no two manufacturers display the loudness and related data values in exactly the same way, the choice of meter comes down to entirely personal preferences of manufacturer and graphical presentation aesthetics. The only technical issues to consider are conformity with the latest ITU specifications (currently BS.1770-3) and any regional requirements such as EBU R128, or ATSC A/85, the latter affecting the display units and any preset Target Loudness and True Peak limits.

If you wish to check the accuracy and conformity of your chosen loudness meter, Prism Sound and Qualis Audio have jointly created an excellent and comprehensive suite of test signals, which are available on both of their websites (see 'Further Reading' box).

Many commercial loudness meter plug-ins include a facility to assess the loudness of files in the background and faster than real time. It may also be possible to work in a batch mode to assess a large group of files.

iTunes Sound Check

Apple's Sound Check facility was introduced with iTunes 3, way back in 2002, and it's included in all current iOS devices — iPods, iPhones, iPads and so on. The idea behind Sound Check is essentially to avoid the annoying (and potentially even dangerous) volume changes that can occur if your playlist moves from, say, a quiet jazz ballad into a full-on screaming metal track! It works in a broadly similar way to the ITU-R's BS.1770 algorithm but — as is Apple's usual policy — precise details of the system's inner workings are a closely guarded secret. Although Apple's algorithm appears to be not quite as precise or accurate as the BS.1770 algorithm, it is certainly close enough to give very usable results and benefits.

When Sound Check is activated for the first time, via a tick box in the Playback section of the Preferences dialogue in iTunes, the entire iTunes library is scanned and the loudness of each individual song or audio track measured and logged. This can take quite a while, depending on the size of your music library — expect somewhere in the region of 50-100 tracks scanned per minute. Once scanned, the loudness data is stored in the 'normalisation information' tag of the ID3 metadata associated with the individual MP3, AAC, AIFF or ALAC (Apple Lossless) files. Loudness metadata can also be stored in the iTunes XML database for supported file formats that don't include compatible metadata.

When a track is subsequently replayed with the Sound Check function switched on (enabled in most iOS devices via the Settings menu), the stored normalisation information is used to offset the internal replay level for that specific track, such that it ends up at broadly the same perceived loudness as every other track. The system is easy to use, easy to set up, and is generally very effective, particularly for anyone who uses the 'shuffle mode' with an eclectic music library, or who creates varied playlists!
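Apple don't publish how Sound Check works internally, so the following Python sketch shows only the general principle of a loudness-normalising player: each track's stored loudness is compared with a target, and the difference becomes that track's replay gain. The -16LUFS target is the approximate figure suggested elsewhere in this article, and the function names and track loudness values are hypothetical.

```python
def playback_gain_db(track_lufs, target_lufs=-16.0):
    """Gain offset that brings a track's loudness onto the target."""
    return target_lufs - track_lufs

def db_to_linear(gain_db):
    """Convert a gain in dB to the multiplier applied to the samples."""
    return 10.0 ** (gain_db / 20.0)

# Loudness values as they might be stored in each file's metadata.
library = {"quiet jazz ballad": -21.0, "screaming metal track": -7.5}

for title, lufs in library.items():
    gain = playback_gain_db(lufs)
    print(f"{title}: {gain:+.1f} dB (multiplier {db_to_linear(gain):.2f})")
```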

Album Mode

While Sound Check's loudness normalisation is ideal when listening to disparate tracks, to maintain reasonably consistent listening levels, it's not so good if you want to listen to a complete musical work where the relative loudness of sequential musical sections is an important factor in the overall experience. This applies to any musical genre where deliberate contrasts of quiet segments between louder sections are aesthetically important — such as in complete classical symphonies, concept prog-rock albums, or any albums containing a diverse selection of material.

Up until recently, if Sound Check was switched on but you chose to listen to a complete album, any intended loudness variances between tracks would be removed, destroying the intended effect and much of the musical enjoyment! Happily, Apple have now corrected this weakness: the latest version of iTunes now has an unannounced but very welcome Album Mode which intelligently applies loudness correction depending on how you have chosen to listen to your music.

If Sound Check is turned on with tracks played with the iTunes shuffle mode, via a playlist, or through the iTunes search screens for artist or genre and so on, loudness normalisation is applied and the relative loudness of each successive track remains pretty consistent. In contrast, if music is selected through the iTunes album listings, the player assumes you want to enjoy the tracks with their original relative levels, and so the Sound Check mode is temporarily modified. This is a useful step forward, but unfortunately it also means that switching between album selection and artist or track selection results in quite a major listening level shift because the latter is loudness normalised while the former isn't. So this isn't quite a perfect solution yet, but it's much better than the original options, and for the first time it is now quite acceptable to leave Sound Check turned on. In fact there are industry calls for Apple to activate Sound Check by default in future software updates.

Not Loud Enough!

A problem which is a little harder to resolve is that of inadequate playback volume when Sound Check is enabled. Clearly, for loudness normalisation to be effective, the Target Loudness level has to be low enough to accommodate the peaks of the most dynamic material likely to be encountered. Apple appear to have chosen a target level of around -16LUFS, and this inevitably means that the playback volume for highly compressed material will be considerably lower than its native level when auditioned with Sound Check switched off — in practice, as much as 12dB lower, which represents quite considerable attenuation.

The obvious answer is simply to increase the device's playback volume control to restore a comfortable listening level when Sound Check is enabled. Unfortunately, though, this isn't always possible, particularly for Apple device users in Europe where 'hearing safety' concerns led Apple and other manufacturers to restrict the maximum playback volume in iPods, iPhones, iPads and even laptop computers! Hopefully future firmware updates will enable increased playback gain when the Sound Check function is active, but until then the only pragmatic solutions are to invest in much more sensitive headphones/earphones, or to employ an external headphone amplifier of some kind. Both involve additional expense and will therefore dissuade many consumers from using the Sound Check facility, at least in the short term, which is regrettable.

Glossary: Loudness Terminology

Crest Factor: A measure of a signal's waveform, indicating the ratio of its peak value to its RMS (average power) value. A crest factor of 1 indicates no peaks (ie. a DC signal), while a sine wave has a crest factor of 1.414 and a triangle wave 1.732.

dBTP: True peak level in decibels, with reference to digital full scale. The maximum value of the reconstructed audio signal waveform, recognising any inter-sample peaks.

I, or Integrated Loudness: The loudness value indicated on a loudness meter, which is integrated across the entire programme duration. Also known as Programme Loudness. This value should equal the Target Loudness value by the end of the item.

LKFS: Loudness, K-weighted, with reference to digital full scale. ATSC preferred term for the absolute loudness value. (See LUFS).

Loudness Normalisation: A working practice in which an arbitrary loudness level defines the reference point, and the loudness of individual signals is assessed so that they can then be adjusted to match loudness on replay. This approach imposes a headroom margin and thus encourages dynamic variations. (See also Peak Normalisation).

LRA: Loudness Range. The distribution of loudness within a programme (an indicator of the programme's perceived dynamic range).

LU: Loudness Units. A loudness value expressed relative to the Target Loudness level.

LUFS: Loudness Units with reference to digital full scale. The EBU preferred term for the absolute loudness value. (See LKFS)

M, or Momentary Loudness: An instantaneous loudness value shown on a loudness meter. Assessed from a 400ms time window updated every 100ms. Used only for initial level setting.

Peak Normalisation: A practice whereby the highest peaks of different programmes are aligned to a defined reference level, which encourages engineers to use dynamic range compression to increase perceived loudness.

Programme: An individual audio programme, advert, music track, and so on.

Programme Loudness: The Integrated Loudness over the duration of the programme item, in LUFS. Also shown as the 'I' value on loudness meters.

Target Loudness Level: The intended loudness-normalisation level.

TP, or True Peak: The maximum peak value of the reconstructed audio waveform (which includes any inter-sample peaks).

Sample Peak: The typical digital sample-peak meter system which indicates the highest amplitude value represented within a batch of digital audio samples. It is typically lower than the True Peak value of the reconstructed analogue waveform, because it ignores any inter-sample peaks.

S, or Sliding or Short-term Loudness: A short-term loudness value shown on a loudness meter, assessed from a continuously updating three-second time window. Used for moment-by-moment mix adjustments.
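The crest factor figures given in the glossary can be checked numerically. This short Python sketch takes crest factor as the ratio of peak to RMS value and builds one cycle of each waveform. The function name is hypothetical.

```python
import math

def crest_factor(samples):
    """Ratio of the peak absolute value to the RMS value."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return peak / rms

N = 10000  # samples in one cycle
dc = [1.0] * N
sine = [math.sin(2.0 * math.pi * i / N) for i in range(N)]
triangle = [4.0 * abs(i / N - 0.5) - 1.0 for i in range(N)]

for name, wave in (("DC", dc), ("Sine", sine), ("Triangle", triangle)):
    print(f"{name}: {crest_factor(wave):.3f}")
```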

Further Reading

- ITU-R BS.1770 Documents:

http://www.itu.int/rec/R-REC-BS.1770

- ATSC A/85 Standards Document:

www.atsc.org/cms/standards/A_85-2013.pdf

- EBU R128 Standards Document:

- EBU Tech Documents (Loudness Metering EBU mode):

tech.ebu.ch/docs/tech/tech3341.pdf

- EBU Tech Documents (Loudness Range):

tech.ebu.ch/docs/tech/tech3342.pdf

- Prism Sound/Qualis Audio Loudness Meter Test Files:

http://resources.prismsound.com/tm/Loudness_Meter_Test_Signals.zip

www.qualisaudio.com/documents/TechNote-2-WaveFiles-5-12-2011.zip

- Qualis Audio Loudness Documents:

www.qualisaudio.com/documents/TechNote-1-5-31-2011.pdf

www.qualisaudio.com/documents/TechNote-2-5-31-2011.pdf

- Music Loudness Alliance: