If you want your soundcard to be connected to a sequencer for recording and playback, to an editor to tweak the sounds, and to a MIDI monitor for testing, then you will need a knowledge of multi‑client nodes.

Attaching several different applications to a single MIDI or Audio device can be very useful — once you've worked out how to do it! Martin Walker guides you through the procedure and points out the varied uses of the multi‑client approach.

I still get a steady trickle of emails concerning problems with multiple‑client MIDI drivers. Multi‑client capability is often needed for both MIDI inputs and outputs, to allow you to access them from several applications simultaneously. Given the number of soundcards with extensive synthesizers or samplers on board, it seems only natural that the supplied MIDI drivers should let you connect your sequencer for recording and playback, at the same time as a separate editor for loading, saving, or changing sounds. After all, many soundcards come complete with suitable editing software, so it would seem a foregone conclusion that the developers would ensure multi‑client capability. So why do the developers keep bundling multi‑client overlay utilities like Hubi's Loopback or Herman Seib's MultiMid with their soundcards? Surely if multi‑client utilities can be written by talented individuals and released as freeware, then soundcard developers can manage the same feat?

You may wonder why I'm grumbling, since these freeware overlay utilities are so widely available. Well, my reason is the same as in every email I receive on this subject: it's just so confusing. Depending on what you want to achieve, you may have to overlay existing driver inputs, outputs, or both to provide them with multi‑client capability. Then, when selecting sources and destinations inside your software applications, you have to ignore the original MIDI ports in favour of the overlays. Choosing the original port while an overlay is connected can sometimes crash your PC, so it pays to be careful.

As if this weren't enough, some applications like Cubase VST open up every available active MIDI device when first launched. To avoid problems you have to go into the SetupMME utility and deactivate the original ports, leaving only the overlays in the active list. Thankfully this does reduce confusion, since once inside Cubase VST, only the overlays appear, and you can also use SetupMME to give them names that actually relate to the connected MIDI devices. But why should we have to do all this? It isn't as if we're wanting to do anything unusual, after all.

The Audio Connection

Hubi's MIDI Cable utility is used in conjunction with his Loopback utility to give multi‑client capability to those MIDI ports that don't have it. Here there are four extra nodes (labelled LB1 to LB4), and currently the SW1000 #1 Synthesiser has been given multi‑client MIDI capability courtesy of LB1.

With the proliferation of software synths, those of us with multiple‑output soundcards face a similar situation with audio drivers. If you want to allocate some output channels to your audio sequencer, and others to a software synth, you also need audio drivers that have multi‑client capability. Although Windows can view soundcard audio ports as multi‑channel devices, most soundcard drivers are still written to be seen by applications as a series of stereo pairs (normally labelled Output 1/2, Output 3/4, and so on), and these are regarded by Windows as completely separate devices.

Whether or not each stereo pair can be accessed by a different music application depends on how the soundcard driver is written. Since this hasn't until recently been particularly important, few manufacturers trumpet this information, and it's often not clear whether your drivers have multi‑client capability until you try it out. It's becoming increasingly obvious that multi‑client capability is important to the way many PC musicians want to work, for both MIDI and audio. So, let's look further into what you can do, what you can't, and how to overcome some of the possible conflicts when putting theory into practice.

Setting Up Hubi's Loopback

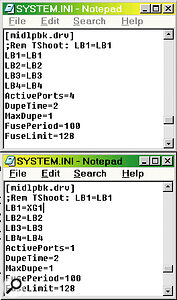

You can change various settings in Hubi's Loopback by editing the System.ini file. Here the default entries are shown in the upper window, while in the lower one I have reduced the number of active nodes to one and renamed it to the more meaningful XG1 for the example described.

As I mentioned in the introduction, the most common requirement for multi‑client MIDI ports is the ability to use a sequencer and synth editor simultaneously. Both applications then need to access the same MIDI output port, and you will soon find out whether you have multi‑client capability: without it, an error message will appear. Some applications simply report a rather cryptic Windows error message such as 'MMSYSTEM004 The Specified Device is already in use. Wait until it is free, and then try again', but most are more helpful, giving a message such as 'MIDI device already allocated'.

If you don't get an error message, you're in business. If you do, however — and many people will — you need a multi‑client overlay utility. Essentially, what these utilities do is provide a software MIDI merge facility which merges the outputs from your separate applications into a single stream of MIDI data. The most popular seems to be Hubi's Loopback, so I will use this in my examples. If you haven't already got this useful utility, there is a link to it from Synth Zone's software utilities page (www.synthzone.com/utilities.htm).

Hubi's Loopback consists of four 'nodes', normally labelled LB1, LB2, LB3, and LB4. These are designed to 'sit on top' of an existing MIDI input or output, and each node can handle up to four simultaneous In clients and up to 10 simultaneous Out clients. After you have installed the utility you should see these additional entries in your list of MIDI inputs and outputs.
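To make the idea of a software MIDI merge more concrete, here is a minimal Python model of a node. The class names, and the assumption that 'Out clients' are applications sending into the node while 'In clients' receive the merged stream, are my own for illustration; this is not how Hubi's driver is actually written. What it shows is that each complete message is appended in one go, so SysEx strings from two applications are never interleaved byte by byte, and that opening one client too many produces the familiar allocation error.

```python
class DeviceAllocatedError(Exception):
    """Stand-in for the 'MIDI device already allocated' error."""


class LoopbackNode:
    """Hypothetical model of a multi-client node such as LB1.

    Assumption: 'Out clients' send MIDI into the node, 'In clients'
    receive the merged stream from it.
    """

    def __init__(self, name, max_in_clients=4, max_out_clients=10):
        self.name = name
        self.max_in_clients = max_in_clients
        self.max_out_clients = max_out_clients
        self.senders = set()
        self.inboxes = {}   # receiving application -> list of messages
        self.merged = []    # the single merged stream, in arrival order

    def open_out(self, app):
        if len(self.senders) >= self.max_out_clients:
            raise DeviceAllocatedError(f"{self.name}: MIDI device already allocated")
        self.senders.add(app)

    def open_in(self, app):
        if len(self.inboxes) >= self.max_in_clients:
            raise DeviceAllocatedError(f"{self.name}: MIDI device already allocated")
        self.inboxes[app] = []

    def send(self, app, message):
        if app not in self.senders:
            raise ValueError(f"{app} has not opened {self.name} as an output")
        # Append the whole message at once: a SysEx string (F0 ... F7) from one
        # application is never split up by bytes from another.
        self.merged.append(bytes(message))
        for inbox in self.inboxes.values():
            inbox.append(bytes(message))


node = LoopbackNode("LB1")
node.open_out("Cubase VST")
node.open_out("XGedit95")
node.open_in("MIDI monitor")
node.send("Cubase VST", [0x90, 60, 100])  # note on, channel 1
node.send("XGedit95", [0xF0, 0x43, 0x10, 0x4C, 0x00, 0x00, 0x7E, 0x00, 0xF7])
```

The default limits simply mirror the four In and 10 Out clients quoted above.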

It's at this point that many people get confused, since to use any of the nodes you often (but not always) need to connect them to the appropriate MIDI input or output using a separate utility called Hubi's MIDI Cable. This can be found inside the same folder as the Loopback device (its filename is Hwmdcabl.exe). Multiple instances of this can be run, and each one appears on your Taskbar. Essentially, each one is a MIDI thru device. A practical example will make this clearer.

Let's make my Yamaha SW1000XG soundcard synth multi‑client. To do this, run Hwmdcabl.exe, and then right‑click on its Taskbar icon; a text window will appear (see screenshot). The left‑hand column contains various commands (I'll come to these in a moment), while the central and right‑hand columns show every MIDI Input and Output currently installed in your PC. In my example, you would click on Input 4 to select the first Loopback node (LB1) as the input, and Output 4 to connect it to the 'SW1000 #1 Synthesiser'. From now on you ignore the SW1000 #1 port inside MIDI applications, and use LB1 instead. If you want the same capability for MIDI channels 17 to 32, run a second MIDI Cable, but this time choose LB2 as the input and 'SW1000 #2 Synthesiser' as the output, and use LB2 inside your MIDI applications.

Hubi Tweaks

Soundscape's Mixtreme PCI card offers one of the most flexible multi‑client environments around.

Those of you running Windows 95 may already be panicking at the thought of adding four more inputs and outputs to your current list, for fear of exceeding the 11‑device MIDI limit. Thankfully Hubi has provided the means to reduce the number of nodes from the default four: you can do this by editing the appropriate text entries in the System.ini file (you can find this in your Windows folder, but the quickest way to view it is to click on the Run... command on the Start menu and type system.ini). You will find various options under the heading '[midlpbk]'. 'ActivePorts=N' can be set anywhere between 1 and 4, while to help you remember what you connect to what, the node names can also be edited. I have relabelled LB1 as XG1, by changing the line that currently reads 'LB1=LB1' to 'LB1=XG1'. The new values will take effect when you next boot.
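If you would rather script this change than make it by hand, the Python sketch below replaces existing keys in one named section of INI‑style text and leaves every other line alone. Only the '[midlpbk]' section name and the 'ActivePorts' and 'LB1' keys come from the text above; the function name and the sample file contents are assumptions. Since System.ini matters to Windows, keep a backup before writing anything back to it.

```python
def edit_ini_section(text, section, updates):
    """Return a copy of INI-style text with existing keys in [section] replaced.

    Lines outside the section, comments and other keys are left untouched,
    and keys that are not already present are NOT added.
    """
    wanted = {k.lower(): v for k, v in updates.items()}  # INI keys are case-insensitive
    in_section = False
    out = []
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            # A section header: note whether it is the section we want to edit
            in_section = stripped[1:-1].strip().lower() == section.lower()
        elif in_section and "=" in stripped and not stripped.startswith(";"):
            key = stripped.split("=", 1)[0].strip()
            if key.lower() in wanted:
                line = f"{key}={wanted[key.lower()]}"
        out.append(line)
    return "\n".join(out) + "\n"


# Assumed sample contents, not a copy of a real System.ini
sample = "[boot]\nshell=Explorer.exe\n[midlpbk]\nActivePorts=4\nLB1=LB1\nLB2=LB2\n"
print(edit_ini_section(sample, "midlpbk", {"ActivePorts": "1", "LB1": "XG1"}))
```

Working on lines rather than using a full INI parser keeps the rest of the file byte‑for‑byte as it was, which seems the safer choice for a system file.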

When the MIDI Cable utility is closed (either directly by you, or automatically when you next shut down your PC), its current input and output configuration is automatically saved in the Win.ini file (also in the Windows folder). This means that the next time you launch it the same settings apply. However, if you want to run more than one MIDI Cable, this doesn't work, so a second method can be used — adding parameters to a Command Line. Those familiar with DOS will be well used to this technique, but to save you typing in suitable lines of text, Hubi has added a semi‑automatic method. It does require that you create a Windows Shortcut, but if you do this and place it in the StartUp folder it also has the advantage of running automatically every time you reboot.

To create a Shortcut to the Hwmdcabl.exe utility in your StartUp folder, open Windows Explorer, and then open the Windows\Start Menu\Programs\StartUp folder. Right‑click in the files pane, and select New, then Shortcut. Use the Browse button in the small window that appears to locate the Hwmdcabl.exe program, and follow the instructions. When you have finished, right‑click on the new shortcut and select Properties. Now open the MIDI Cable text window and, once you have selected an input and output, click on the 'Cmd to clipboard' command in the left‑hand column. Then return to the open Properties window of your shortcut, and use the Ctrl‑V key combination to paste the Clipboard contents into the box marked Target. This should now contain an entry along the lines of 'C:\MIDI\UTILITY\HUBI\HWMDCABL.EXE OUT=4 IN=4', where the first part points to the utility file, and the final parts are commands to set the output to 4 and the input to 4. It sounds complicated, but is fairly straightforward, and only needs to be done once. Now, every time you switch on your PC, this same connection will be made automatically (and you can duplicate the shortcut, up to four times in all, if you need more autoload multi‑client cables, giving each its own input and output settings).
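The Target entry follows a simple pattern, so if you are setting up several cables it may help to generate the lines rather than type them. This Python sketch assumes only the 'OUT=n IN=n' form shown above; the function name is mine, and the port numbers must be the ones MIDI Cable itself lists on your PC, so the second pair in the example is purely hypothetical.

```python
from pathlib import PureWindowsPath


def cable_target(exe_path, out_port, in_port):
    """Build a MIDI Cable shortcut Target of the form '<exe> OUT=n IN=n'."""
    exe = str(PureWindowsPath(exe_path))  # normalise to backslash separators
    if " " in exe:
        exe = f'"{exe}"'  # Windows needs quotes round paths containing spaces
    return f"{exe} OUT={out_port} IN={in_port}"


exe = r"C:\MIDI\UTILITY\HUBI\HWMDCABL.EXE"
# First cable as in the text; the second pair of port numbers is hypothetical.
for out_port, in_port in [(4, 4), (5, 5)]:
    print(cable_target(exe, out_port, in_port))
```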

Real‑Time Recording Of Edits

Yamaha's SW1000XG card offers six stereo busses, each of which can be assigned to different software applications and processed using the card's built‑in DSP.

Now that you have multi‑client capability, there are two main uses. The first, running a synth editor alongside a sequencer, has already been discussed. For many people, however, a far more exciting option is being able to record the output of the synth editor as it sends SysEx data to your synth to (for instance) sweep the filter frequency, or change effect settings. To do this, we need to send the output from the synth editor into the input of the sequencer — but then to hear the changes in real time we also need to send the output of the same sequencer track into the synth itself.

It's actually possible to do this using just the nodes, without recourse to MIDI Cables. Here's how you do it; I'll use XGedit95, the SW1000XG #1 and Cubase VST for my example, but you can substitute your own editor, synth and sequencer.

Open the Steinberg SetupMME utility, make sure that LB1 is set active in the list of Inputs, and then close it (you don't need any of the 'LB' devices enabled in the list of Outputs, so these can be disabled). Then open Cubase VST, and you should find that LB1 is in the list of MIDI inputs in the MIDI Setup window; make sure that it's ticked. Next, open the Cubase MIDI Filter window, where a tick means that the corresponding data is filtered out, and ensure that the Sysex Thru box is unticked, so that your edits pass through Cubase and are sent on to the SW1000 #1 output. To record the SysEx data in Cubase you will also need to untick the Sysex Record box in the same filter window.

Now launch XGedit95, and in its Setup MIDI box select LB1 as MIDI Out A. Now, when you alter any parameter on the first 16 channels inside XGedit95, the SysEx data goes to LB1, which is also connected as a valid Cubase input, so the same data appears inside Cubase, where it is immediately sent Thru to the SW1000 #1 output to be auditioned. Once you start recording in Cubase, you can start twirling knobs to your heart's content in XGedit95, and every single movement will be recorded for posterity. You can either set up the mod wheel on your keyboard to control filter frequency in XGedit95 and record the filter sweeps as you play the part, or (which is often better) record the part first and then record the SysEx on a separate Cubase track.
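The routing above boils down to two decisions the sequencer makes for each incoming message: record it or not, and echo it to the output or not. This Python sketch models only the SysEx part, and assumes, as the text implies, that a tick in the filter window means 'filter this out'. The class and field names are hypothetical, not Cubase's, and the SysEx bytes are illustrative values.

```python
from dataclasses import dataclass, field

SYSEX_START = 0xF0


@dataclass
class SysExFilter:
    # True = box ticked = SysEx filtered out (assumed convention)
    record: bool
    thru: bool


@dataclass
class SequencerInput:
    filt: SysExFilter
    recorded: list = field(default_factory=list)  # what ends up on the track
    echoed: list = field(default_factory=list)    # what is sent on to the synth

    def receive(self, time_ms, message):
        is_sysex = message[0] == SYSEX_START
        if not (is_sysex and self.filt.record):
            self.recorded.append((time_ms, message))
        if not (is_sysex and self.filt.thru):
            self.echoed.append(message)


# Editor -> LB1 -> sequencer input, with both SysEx boxes unticked
seq = SequencerInput(SysExFilter(record=False, thru=False))
edit = bytes([0xF0, 0x43, 0x10, 0x4C, 0x08, 0x00, 0x18, 0x40, 0xF7])  # illustrative values
seq.receive(0, edit)
```

With either box left ticked, the edits would still reach Cubase through LB1 but would either not be recorded or not be heard.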

It's worth noting that even if your MIDI device has multi‑client capability you will still need a node to perform this feat, since you need a way to send the output from one piece of software into the input of another. The only alternative would be to route the output from your software synth editor to a physical MIDI output socket, and then patch a MIDI cable between this and a MIDI input socket that is available to your sequencer.

Cakewalk users should also be aware that versions up to and including 8.03 cannot send SysEx data thru to the output, so if you try recording your edits with these versions you won't hear any changes from your synth. This has been cured in Cakewalk 8.04: you need to tick the 'Echo System Exclusive' box on the MIDI page of Global Options.

Potential Problem Areas

If you want to select DirectSound drivers for use with a software synth such as Rebirth, you may see multiple entries. For instance, although the two entries for the AWE64G are fairly clear (one is the standard MME driver, and the other the DirectSound version), others such as the SW1000 show emulated DirectSound drivers, and these are best avoided.

Sending masses of SysEx data can, however, cause some otherwise perfectly behaved devices to throw a wobbly, and with certain combinations of gear you might get problems. In fact, there is a more fundamental problem when you are recording real‑time SysEx performance data. Each SysEx command consists of a string of data bytes of variable length, and a string is sent to your MIDI device each time a software knob is changed to a new value.

Unfortunately, some synth commands need to be sent in a certain predetermined order, and in the case of a few commands (like resets) you must wait a certain number of milliseconds for them to take effect before sending any subsequent commands. For instance, my DB50XG manual tells me that when the XG System On string is received, 50ms is required to execute the message, and any subsequent command sent to an XG device before this interval is up would be ignored. A more common example is the Program Change message. On many synths, if you insert one of these during a song you have to wait a short time before playing any notes, to make sure that the new sound has 'taken'.
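Here is one way a sequencer or editor could honour such a settling time, sketched in Python. The XG System On string (F0 43 10 4C 00 00 7E 00 F7, with the device‑number nibble set to 0) is the standard XG one, and the 50ms figure is the one quoted above; the function itself is a hypothetical illustration that pushes later events back until the synth is ready, while keeping their original order.

```python
XG_SYSTEM_ON = bytes([0xF0, 0x43, 0x10, 0x4C, 0x00, 0x00, 0x7E, 0x00, 0xF7])
XG_SETTLE_MS = 50  # execution time quoted in the DB50XG manual


def schedule(events, settle_ms=XG_SETTLE_MS):
    """Delay events so nothing is sent within settle_ms of an XG System On.

    events: list of (time_ms, message bytes) in the intended send order.
    """
    out = []
    not_before = 0
    for time_ms, message in events:
        time_ms = max(time_ms, not_before)  # never earlier than allowed
        out.append((time_ms, message))
        not_before = time_ms                # preserve the original order
        if message == XG_SYSTEM_ON:
            not_before = time_ms + settle_ms
    return out


events = [(0, XG_SYSTEM_ON), (10, bytes([0xC0, 5])), (20, bytes([0x90, 60, 100]))]
print(schedule(events))
```

The same idea could be applied after a Program Change, with a gap chosen to suit the synth.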

Normally the software synth editor will make sure that these delays are dealt with automatically, and when you record controller moves into your sequencer the order and timing of commands will adhere to all the rules for that particular MIDI device. However, if you attempt to record your real‑time moves in several passes then some of the SysEx data may end up in the wrong order, or be sent at the wrong time, causing some commands to be ignored, or even worse, scrambled. Sadly this can also happen in Cubase Mixermaps, Logic Environments, and Cakewalk Studioware panels, all of which send SysEx data based on the position of the control in the display, rather than the order and timing with which the synth should receive it to operate properly.
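The multi‑pass problem is easy to reproduce in miniature. The Python sketch below merges separately recorded passes purely by timestamp, which is effectively what happens on a sequencer track, and then checks the result against a settling‑time rule like the 50ms XG one described above. The event values are illustrative and the function names are my own.

```python
import heapq

XG_SYSTEM_ON = bytes([0xF0, 0x43, 0x10, 0x4C, 0x00, 0x00, 0x7E, 0x00, 0xF7])


def merge_passes(*passes):
    """Merge time-sorted lists of (time_ms, message) by timestamp only."""
    return list(heapq.merge(*passes, key=lambda event: event[0]))


def gap_violations(events, reset_message, settle_ms):
    """Return events that arrive while the device is still executing a reset."""
    busy_until = None
    violations = []
    for time_ms, message in events:
        if busy_until is not None and time_ms < busy_until:
            violations.append((time_ms, message))
        if message == reset_message:
            busy_until = time_ms + settle_ms
    return violations


first_pass = [(0, XG_SYSTEM_ON),
              (60, bytes([0xF0, 0x43, 0x10, 0x4C, 0x08, 0x00, 0x18, 0x40, 0xF7]))]
second_pass = [(30, bytes([0xF0, 0x43, 0x10, 0x4C, 0x08, 0x00, 0x19, 0x50, 0xF7]))]
merged = merge_passes(first_pass, second_pass)
print(gap_violations(merged, XG_SYSTEM_ON, settle_ms=50))
```

Each pass is valid on its own; only the merged result places a command inside the reset's execution time, which the synth would then ignore.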

You might have no problems, but if you start experiencing strange sounds emerging from your MIDI device or, more commonly, changes being ignored, this could be the reason. For real‑time movement of controls that require SysEx data rather than MIDI controllers, the safest course is to record them in a single pass using a dedicated editor that is aware of the correct ordering. One possible long‑term solution to this problem is discussed in the SysEx Solutions box.

One final point is worth making before I move on to audio matters. Multi‑client capability doesn't mean that an infinite number of MIDI applications can be connected to a port: there will be a maximum number beyond which you once again receive a 'MIDI device already allocated' error. In the case of Hubi's Loopback each node can have up to four In‑clients and 10 Out‑clients — you are hardly ever going to exceed these limits. However, while the latest Yamaha drivers for the SW1000XG (version 2.1.2) have multi‑client MIDI Outs, they can only cope with a maximum of two clients, as I found when I attempted to run both XG‑Wizard and XGedit95 alongside Cubase.

Merging Audio Clients

Having explained the ins and outs of multi‑client MIDI, let's now turn to multi‑client audio. Once again this causes a lot of confusion, partly because the one term is often used to refer to two distinct mechanisms. Following on from multi‑client MIDI, you might expect that multi‑client audio would be the ability to merge the audio output from two applications to run on a single stereo soundcard output. However, most soundcard manufacturers are actually referring to the ability for the individual stereo output pairs of multi‑channel soundcards to be allocated to different applications, which is rather different.

Many users of multi‑channel soundcards assume that this ability is automatically available — after all, if the vast majority of multi‑channel soundcard drivers appear to Windows as a set of stereo pairs, why wouldn't you be able to do just what you like with each pair? The reality with many drivers was that every stereo pair was grabbed by the first application that accessed them, leaving subsequent applications with a message reading 'Device could not be opened. Maybe card is already allocated by another application or this device does not support full duplex mode.' So, now that this feature is so desirable, manufacturers tend to make sure that they tell people when their drivers are capable of it.

In fact, 'merged audio' multi‑client capability is also available in many cases. An ASIO driver can only be used by a single application at a time, and the same normally applies to each MME output. However, there is a shareware utility called VAC (Virtual Audio Cable), distributed by Ntonyx (www.ntonyx.com), which is the MME audio equivalent of Hubi's Loopback, letting you send the outputs of multiple applications into the input of another, or a single output into several inputs.

The third type of Windows sound driver is Microsoft's DirectSound, which not only provides software manufacturers with more direct access to the soundcard (for lower latency than MME drivers), but also contains mixing functions. When using DirectSound, multiple audio streams are held in system RAM for a short time before being mixed and sent on to the soundcard. Each WAV file being played is sent to a Secondary Buffer, and each one can have a different sample rate or bit‑depth (though sadly 20‑ and 24‑bit modes are not supported).

DirectSound then mixes these together into a single Primary Buffer, the sample rate and bit depth of which determine the final output format. During this process volume, pan position, frequency shifting and 3D processing can also take place. You don't normally get access to all these features (although, ironically, some cheaper consumer cards like the SB Live! let you do it). However, you can certainly run a sequencer at 44.1kHz and a software synth at 32kHz or 48kHz, and play them both simultaneously through a basic stereo soundcard when using DirectSound drivers.
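As a rough picture of what happens between the Secondary Buffers and the Primary Buffer, the Python sketch below converts each stream to the primary sample rate, applies a volume, sums the results and clips them. It is a conceptual model only: it uses simple linear interpolation on mono floating‑point samples, leaves out pan and 3D processing, and uses none of the real DirectSound API. The function names are hypothetical.

```python
def resample_linear(samples, src_rate, dst_rate):
    """Resample a mono list of floats by linear interpolation."""
    if not samples:
        return []
    n_out = int(len(samples) * dst_rate / src_rate)
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate          # position in the source stream
        j = int(pos)
        frac = pos - j
        nxt = samples[min(j + 1, len(samples) - 1)]
        out.append(samples[j] * (1 - frac) + nxt * frac)
    return out


def mix_to_primary(buffers, primary_rate):
    """buffers: list of (samples, sample_rate, volume) 'secondary' streams.

    Each is converted to primary_rate, scaled, summed and clipped to [-1, 1].
    """
    converted = [[s * volume for s in resample_linear(samples, rate, primary_rate)]
                 for samples, rate, volume in buffers]
    length = max((len(c) for c in converted), default=0)
    mixed = []
    for i in range(length):
        total = sum(c[i] for c in converted if i < len(c))
        mixed.append(max(-1.0, min(1.0, total)))
    return mixed


sequencer = [0.5] * 441   # 10ms of a constant level at 44.1kHz
synth = [0.25] * 480      # 10ms at 48kHz
primary = mix_to_primary([(sequencer, 44100, 1.0), (synth, 48000, 1.0)], 44100)
```

The clipping step is a reminder that several full‑level streams summed into one stereo output can overload it.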

Before you rush off to start trying this out, it's important that you have 'native' rather than 'emulated' DirectSound drivers. If you have software capable of using DirectSound drivers (they will be in the list of available audio devices), you may find an entry labelled 'DirectSound (emulated)'. If you select this, all the DirectSound functions will be carried out by Microsoft's software emulation, which takes considerably longer than with native DirectSound drivers, where the functions are accelerated by the soundcard itself. In simple terms, unless you have true DirectSound drivers for your soundcard, you will get high latency and therefore very sluggish performance. Some applications (like Cubase and Reality) only show native DirectSound drivers in their list, which makes your choice easier. Others (like Reaktor and Rebirth) show every available option (see screenshot), while strangely the current version 1.2.1 of Bitheadz's Unity DS1 doesn't show the options, instead providing a single 'DirectSound' button which selects the default DirectSound driver.

I tried out a few experiments with the AWE64 Gold and Yamaha SW1000XG (beta version) DirectSound drivers. With Cubase VST running with its DirectX driver option, I managed, with each card in turn, to run NI's Reaktor or Propellerhead's Rebirth (used in stand‑alone rather than Rewire mode) simultaneously through the same output pair. I even managed to get all three running together, although my Pentium II 300MHz processor started to buckle under the strain. However, whenever I launched Reality, the audio output from Cubase was immediately muted. It would seem that some applications don't like sharing DirectSound drivers with others, but the principle of multi‑client merging was certainly proved.

Multiple Audio Clients

As I've already mentioned, many multi‑channel soundcards support multi‑client allocation, where individual stereo output pairs are each used by a different application. There is a short list of cards with such drivers in the Multi‑Client Allocation box.

This approach has the huge advantage of leaving the audio signals separate for further EQ and effects to be added to individual channels, either from external hardware, or by using the DSP resources built in to some cards. The Yamaha SW1000XG, for example, has six pairs of audio channels, each of which can be used by a different application and can have reverb, chorus and variation effects added from the wide selection available, as well as two insertion effects, without consuming any of your main CPU processing power. Unfortunately there is no individual EQ for audio channels, but you can get round this by patching in a 3‑band mono or 2‑band stereo EQ as an insertion effect.

Cards with onboard software‑based DSP systems, like Soundscape's Mixtreme or the Creamware Pulsar, are even more flexible, letting you use the available DSP power in any way you wish. The former, for instance, allows you to run different applications on each of its eight stereo pairs, and add whatever configuration of EQ and effects you need to each and every one using its DSP power. In addition, you can internally route any of its outputs back into recording inputs of other applications for further processing in real‑time, or mix them internally down to stereo and use a single stereo D‑A converter for output monitoring.

Summary

Running multiple MIDI or audio applications is becoming less of a dream and more of a necessity for many PC musicians using software synths and samplers, and thankfully more and more soundcard developers are listening to what their users want and releasing multi‑client drivers. The ability to split the resources of a multi‑channel soundcard between several applications provides great versatility, and lets you make the most of your investment. With other standards like Yamaha's MIDI Plugin and Propellerhead's Rewire gaining acceptance, having to accept compromises may soon become a thing of the past. Let's hope so.

SysEx Solutions

As discussed in the main text, SysEx commands sometimes need to adhere to certain timing and order protocols to work properly, and most software sequencers can't possibly know all the rules for every MIDI device. This can lead to strange problems when recording and editing SysEx data that can be very difficult to track down.

Yamaha have recognised this problem for some time, and have been discussing a solution with most of the major sequencer developers. The MIDI Plugin standard is a way for synth editors to be integrated into the sequencer environment, so that as far as the MIDI device is concerned, it is only dealing with a single application, and multi‑client drivers aren't needed at all. However, the more important aspect is that as part of the MIDI Plugin code, the timing rules would be known for each MIDI device. For example, a version of XGedit could be launched directly from the Cubase VST menu, and all SysEx edits made could be recorded into Cubase, which would be fully aware of the timing rules for XG commands. If subsequent edits and additions were made to SysEx data (such as real‑time control movements), these could be merged into any existing SysEx data stream without destroying the original timing requirements. Other MIDI synth manufacturers would have easy ways to hook into the MIDI Plugin code so that their own rules could be added.

This would not only remove any possibility of getting scrambled SysEx messages, but would finally remove the need for most people to edit SysEx data directly, since a software control would always be available to do the same job. Yamaha tell me that MIDI Plugin facilities should start to appear early in 2000.

Multi‑Client Allocation

It can sometimes be difficult to determine which multiple‑output soundcards allow multi‑client distribution of their inputs and outputs. All of the following now have drivers capable of multi‑client audio allocation, and although this is probably not an exhaustive list, it will get you started: Aark 20/20, E‑mu APS, Echo Layla, Gina, Darla and Darla24, Soundscape Mixtreme, Terratec EWS64 series and EWS88MT, and the Yamaha SW1000XG.

One thing for Cubase VST owners to watch out for is that it always grabs channels 1 and 2 automatically, so additional applications like software synths and samplers must be allocated to higher‑numbered channels. It's also worth noting that the different driver types can normally be mixed freely. For instance, you ought to be able to allocate ASIO outputs 1/2 and 3/4 inside Cubase VST for multi‑track audio playback, outputs 5/6 using MME drivers inside Reality, and outputs 7/8 using DirectSound drivers in Reaktor. As long as you don't try to re‑use the same output (except for multiple DirectSound applications), things should work OK, although you may have to experiment a little, since there seem to be few guarantees with soundcard drivers. Some, for instance, insist on all applications running at the same sample rate.
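When planning a setup like this, the rules in the last paragraph can be written down as a simple check. The Python sketch below is a planning aid only, with a hypothetical function name: it flags any stereo pair requested by more than one application unless all of them use DirectSound (which also assumes native DirectSound drivers), and can optionally flag mixed sample rates for drivers that insist on a single rate.

```python
from collections import defaultdict


def check_allocation(requests, same_rate_required=False):
    """Flag clashes in a planned multi-client setup.

    requests: iterable of (application, output_pair, driver_type, sample_rate).
    A pair may be shared only if every application on it uses DirectSound.
    """
    requests = list(requests)
    by_pair = defaultdict(list)
    for app, pair, driver, _rate in requests:
        by_pair[pair].append((app, driver))
    problems = []
    for pair, users in sorted(by_pair.items()):
        if len(users) > 1 and any(driver != "DirectSound" for _, driver in users):
            names = ", ".join(app for app, _ in users)
            problems.append(f"outputs {pair} shared by {names}")
    rates = {rate for *_, rate in requests}
    if same_rate_required and len(rates) > 1:
        listed = ", ".join(str(r) for r in sorted(rates))
        problems.append(f"driver needs one sample rate, got {listed}")
    return problems


# The example allocation from the text
plan = [("Cubase VST", "1/2", "ASIO", 44100),
        ("Cubase VST", "3/4", "ASIO", 44100),
        ("Reality", "5/6", "MME", 44100),
        ("Reaktor", "7/8", "DirectSound", 44100)]
print(check_allocation(plan))
```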