For the newcomer, mixing a multitrack recording can seem overwhelmingly complicated. The key is never to lose sight of the basic principles...

For me, the two most enjoyable parts of the entire recording process are the 'getting the sounds right' stage, where I'm choosing and setting up microphones, and the final mixing stage. The bit in between is often mostly about staying focused, watching levels and making tea! Of course, mixing never takes place without a recording stage, and what you record will have a huge influence on how smoothly, or otherwise, the mixing session is going to proceed. A well-played, well-arranged piece of music is always going to be a lot easier to mix than a session where nobody has thought very much about the arrangements and the sounds being used.

Mixing is a huge subject, and in this issue of Sound On Sound, we're hoping to have something to say about it both to beginners and more experienced engineers. In this article, I'll be looking at the basic skills you need to know in order to get a mix together, and drawing on advice from some of the biggest names in the business. In this month's Mix Rescue, meanwhile (see pages 44-53), Mike Senior introduces some of the more advanced techniques that are used in modern rock music.

Where Do I Start?

Whether you're using a hardware recorder or a computer-based DAW, your first job is to play through the recorded material, soloing individual tracks to check there are no problems such as clicks, pops, buzzes or overload distortion. If you still use analogue tape (we know where you live!), you may also want to check for dropouts. While doing this, you'll need to create a track sheet if one doesn't already exist, listing what parts are on what tracks. If you did the original recording, you may have done this already, though in the case of a DAW, the arrange window usually serves well enough as a virtual track sheet if you remember to label the tracks. If the track uses software instruments, it makes sense to freeze them, or bounce them to audio, once you've verified the sound is OK, as that frees up CPU power for the plug-ins you may need while mixing.

Mixing EQ Cookbook

Vocals

Most voices have little below 100Hz so use low cut to remove unwanted bass frequencies.

- Boxy at 200 to 400 Hz.

- Nasal at 800Hz to 1.5kHz.

- Penetrating at 2 to 4 kHz.

- Airy at 7 to 12 kHz.

Electric Guitar

Cut below 80Hz to reduce unnecessary bassy cabinet boom.

- Muddy at 150 to 300 Hz.

- Biting at 800Hz to 3kHz.

- Fizzy at 5 to 10 kHz.

Bass Guitar

- Deep bass at 50 to 100 Hz.

- Character at 200 to 400 Hz.

- Hard at 1 to 2 kHz.

- Rattly/fret noise at 2 to 7 kHz.

Acoustic Guitar

- Boomy at 80 to 150 Hz.

- Boxy at 150 to 300 Hz.

- Hard at 800Hz to 1.5kHz.

- Presence at 2.5 to 4 kHz.

- Bright/scratchy at 4 to 8 kHz.

- Airy above 8 kHz.

Drums

- Kick drum weight at 70 to 100 Hz.

- Kick drum click at 3 to 5 kHz.

- Boomy below 120Hz.

- Boxy at 150 to 300 Hz.

- Snare definition at 1 to 3 kHz.

- Stick impact at 2 to 4.5 kHz.

- Cymbal sizzle at 5 to 12 kHz.
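If you like to keep this sort of crib sheet to hand while mixing, the figures above are easy to turn into a small lookup table. The following is only a sketch: the `EQ_COOKBOOK` name and the `describe` helper are my own inventions for illustration, the drum entries and the open-ended 'airy above 8 kHz' entry are left out for brevity, and the ranges are rough guides rather than rules.

```python
# Hypothetical lookup table built from the cookbook ranges above (values in Hz).
# Drum entries and open-ended ranges are omitted to keep the sketch short.
EQ_COOKBOOK = {
    "vocals": [
        ("boxy", 200, 400),
        ("nasal", 800, 1500),
        ("penetrating", 2000, 4000),
        ("airy", 7000, 12000),
    ],
    "electric guitar": [
        ("muddy", 150, 300),
        ("biting", 800, 3000),
        ("fizzy", 5000, 10000),
    ],
    "bass guitar": [
        ("deep bass", 50, 100),
        ("character", 200, 400),
        ("hard", 1000, 2000),
        ("rattly/fret noise", 2000, 7000),
    ],
    "acoustic guitar": [
        ("boomy", 80, 150),
        ("boxy", 150, 300),
        ("hard", 800, 1500),
        ("presence", 2500, 4000),
        ("bright/scratchy", 4000, 8000),
    ],
}


def describe(instrument, freq_hz):
    """Return every cookbook descriptor whose range contains freq_hz."""
    return [name for name, low, high in EQ_COOKBOOK[instrument]
            if low <= freq_hz <= high]
```

Calling `describe("vocals", 300)` returns the 'boxy' label, while a frequency that sits on the boundary between two ranges returns both descriptors.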

My next step is to mute or delete any unwanted sections, such as the chair squeaking before the acoustic guitar starts or the finger noise on the electric guitar before the first note is played. Where a real drum kit is part of the mix, either gate the tom mics or use your waveform editor to physically cut out all the space between tom hits. It's usually easy to identify the 'real' hits in the waveform display even when there is a lot of spill, and if you're unsure, you can always audition the section just to confirm you're not cutting something you should be keeping. Toms tend to resonate all the time, so this stage is important. Any gated drum track tends to sound very unnatural in isolation as the spill comes and goes, but once the overheads and other close mics are added in, you'll find you can't hear the gates or edits at all.
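To make the gating idea concrete, here is a bare-bones sketch of what a gate does to a list of sample values. It is not how any particular plug-in works: the `gate` function, the threshold figure and the hold length are all assumptions chosen for illustration, and a real gate would add attack and release ramps to avoid clicks.

```python
def gate(samples, threshold=0.1, hold=3):
    """Silence samples below the threshold, keeping a short hold after each hit.

    threshold is a linear amplitude (1.0 = full scale) and hold is the number
    of samples kept open after the last sample that reached the threshold.
    Both are hypothetical values; tune by ear on real material.
    """
    output = []
    remaining_hold = 0
    for sample in samples:
        if abs(sample) >= threshold:
            remaining_hold = hold      # a real hit: reopen the gate
            output.append(sample)
        elif remaining_hold > 0:
            remaining_hold -= 1        # let the tail through for a moment
            output.append(sample)
        else:
            output.append(0.0)         # spill between hits is muted
    return output
```

The hold period plays the same role as leaving a little of the tom's decay in place when you cut the regions by hand.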

Divide And Conquer

Now that modern DAWs are capable of recording huge numbers of tracks, modern productions seem to want to use them all. You might find your mix initially seems unmanageable, but you can make life much easier by separating key elements of the mix into logical subgroups that can be controlled from a single fader. The obvious example is the drum kit, which may have as many as a dozen mics around it or multiple tracks of supplementary samples, and you clearly don't want to have to move a dozen faders every time you wish to adjust the overall drum kit level. There are two ways to do this in a typical DAW, one of which is to group the faders so that when you move one, the others move proportionally. The other way is to create an audio subgroup and route all your drums via that group, just as you would on a typical analogue studio console. If you do this in a DAW, remember to ensure that there is plug-in delay compensation for the groups as well as the individual tracks.

If you wish to add some form of global processing to the drums (I often use Noveltech's Character enhancer plug-in and maybe some overall compression), the subgroup option may be best, but keep in mind that if you have a reverb unit or plug-in being fed from sends on the individual drum tracks, this will need to be returned to the same group, otherwise the reverb level won't change when you change the overall drum part level. If you don't plan to use any global drum processing, the fader-grouping option is simpler, as you don't have to do anything special with your effects sends, and the drums can share the same reverb that you use on everything else if you want them to. Of course, once the faders are grouped, they will all move together, so if you need to make a subsequent balance change within the drum kit, you need to know the key command that disengages the currently selected fader from the group while you adjust it.
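The reverb routing point is easier to see with some numbers. The sketch below assumes the sends on the individual drum tracks are left untouched and that the reverb return sits at a hypothetical -12dB relative to the dry drums; the function names are mine, not those of any DAW.

```python
import math


def db_to_gain(db):
    """Convert a level in dB to a linear gain factor."""
    return 10 ** (db / 20)


def wet_to_dry_db(group_fader_db, reverb_in_group, reverb_return_db=-12.0):
    """Wet/dry ratio in dB after moving the drum group fader.

    reverb_return_db is an assumed starting level for the reverb return.
    """
    dry = db_to_gain(group_fader_db)           # dry drums follow the group fader
    wet = db_to_gain(reverb_return_db)
    if reverb_in_group:
        wet *= db_to_gain(group_fader_db)      # reverb follows the fader too
    return 20 * math.log10(wet / dry)
```

With the reverb returned to the drum group, pulling the fader down 6dB leaves the wet/dry ratio at -12dB. With the reverb returned elsewhere, the same move leaves the reverb 6dB louder relative to the drums, which is exactly the imbalance described above.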

Other obvious candidates for grouping are backing vocals, additional percussion, keyboard pads and any doubled guitar parts. With any luck, you'll be able to get your unwieldy mix down to eight main faders or fewer. Some or all of these might require stereo groups, of course.

The subgroup mixing process is simply to balance the components within each group, so that you can then balance the groups with each other. I often start out with everything in mono (panned centre) so that I don't rely too heavily on stereo spread to keep the sounds separate. I would also recommend that you don't go to town on processing and EQing individual tracks at this stage, as the requirements are invariably different when all the faders are up. Another very important tip here is not to set the track levels too high, otherwise you'll run out of headroom while mixing. A track level peaking at -10dB should be fine, and DAW mixes always sound cleaner to me if you do leave plenty of headroom.
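A quick calculation shows why conservative track levels matter once many tracks are summed. This is a rough sketch with a helper name of my own choosing: it treats every track as having the same level and gives two idealised cases, a worst case where the peaks line up and amplitudes add, and a more typical case where the signals are unrelated and their powers add.

```python
import math


def summed_level_db(track_level_db, n_tracks, coherent=True):
    """Estimate the level of n equal-level tracks summed on the mix bus.

    coherent=True:  peaks coincide, so the level rises by 20*log10(n).
    coherent=False: unrelated signals, so the level rises by 10*log10(n).
    """
    factor = 20 if coherent else 10
    return track_level_db + factor * math.log10(n_tracks)
```

Eight tracks at -10dB would overshoot full scale by about 8dB in the worst case and sit just under 0dB in the unrelated case, so real mixes fall somewhere in between, and the channel and group faders have to come down accordingly.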

A good pair of headphones can be invaluable, both when checking tracks for clicks and pops, and for ensuring that your mix will work on iPods and Walkmans.

It is interesting to note that different engineers have different approaches to setting up the initial balance. Some, including me, build a mix from the rhythm section up, while others push up all the faders and then start to balance all the parts with each other. I think the latter way takes a lot of experience, so if you're not an old hand at mixing then perhaps the building approach is more logical. For pop, rock and dance mixes, starting with the bass and drums makes perfect sense, and in my own projects I'll often bring in the vocals next to see what space is left for the other mid-range instruments. On the other hand, if it is an acoustic instrument ensemble with relatively few parts, I'll probably set up each instrument to be the same nominal level and then tweak the balance from there.

If the mix starts to sound good as soon as you push up the faders, you can be pretty certain the mixing process won't be too arduous. If it sounds messy from the start, then you may have some remedial work ahead. If you've read any of my previous articles touching upon the subject of mix balance, you'll probably already know that I recommend you listen to your mix from outside the room with the door open, as this somehow makes it more obvious if any element is too loud or too quiet. I find the same is true if you turn the monitors down quite low — if the vocals are too low in the mix, or the kick drum EQ is wrong, this will quickly reveal it! Do the same trick with commercial records and see how their balance sounds to you. Mixing is an art and as such has a degree of subjective leeway, but if something is clearly wrong, this trick will give you the best chance to hear it. It also helps to check both your mix and your commercial references on headphones, as a lot of people listen to their music on iPods and other portable players. Furthermore, headphones help you focus on little details such as clicks or patches of distortion that you might miss on speakers.

Once you have a reasonable initial balance, listen to the mix and also compare it with some commercial material in the same genre to see how the balance stands up; only once you've identified potential problems should you resort to compression or EQ.

Getting It Right At Source (1): Vocals

It can't be said often enough that a good mix begins at the recording stage, and it's a million times better to get things right at source than to try to fix them at the mix. Being in time and in tune is a good start, but the sounds you record also have to be right for the song (although those generated by software instruments or MIDI'd synths and samplers can always be changed at the last minute if necessary). In my experience, just about any instrument or voice can be made difficult to mix by problems generated at the recording stage.

A pop shield is essential for most vocalists.

Now that we have 24-bit recording on every product above toyshop level, there's really no need to compress while recording unless you have a particularly good-sounding outboard compressor and are confident that you can use it correctly. If you over-compress while recording, it can be difficult or impossible to compensate for in the mix. Just leave enough headroom to make sure that there is no danger of clipping: something like 10 or 12 dB would be safe, and it will often make mixing easier too.
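The arithmetic behind the 24-bit argument is simple enough to sketch. The function below gives the theoretical dynamic range of an ideal fixed-point format (roughly 6dB per bit); real converters manage rather less, and the function name is only for illustration.

```python
import math


def dynamic_range_db(bits):
    """Theoretical dynamic range of an ideal fixed-point format, in dB."""
    return 20 * math.log10(2 ** bits)


def range_left_db(bits, headroom_db):
    """Range remaining below the peaks after leaving some headroom."""
    return dynamic_range_db(bits) - headroom_db
```

On paper, 24 bits gives about 144dB, so leaving 12dB of headroom still leaves around 132dB below the peaks, far more than the roughly 96dB that 16 bits offers in total.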

Vocals are probably the most important part of any conventional song, and many dance records too. Pick the wrong mic or the wrong recording environment and you can end up with a terrible sound. Often the environment is more of an issue than the choice of mic, so the first step when recording at home is to create an acoustically dead zone around the mic and singer using blankets, duvets, sleeping bags — or even proper acoustic treatment! You may also find that putting something like an SE Reflexion Filter behind the microphone cuts down on room coloration, and in conjunction with absorbers behind and to the sides of the singer, you should be able to capture a decent performance in just about any room.

Always use a pop filter with whatever mic you use, even if it is the SM58 you gig with, as popping can't be easily removed once recorded. Most mics will give good results in certain applications, so the trick is to match the character of the mic to the sound of the vocalist. For example, a dull or soft voice might benefit from a mic with plenty of presence, whereas a thin or shrill voice can be made to sound richer using a warm-sounding tube mic or possibly even a live dynamic mic.

Level And Dynamics

Compression is a valuable mixing tool, but it should only be applied where there is a clear need either to stabilise levels or to add thickness and character. If the track was miked, compression will tend to make the room character more noticeable, so it can be counterproductive to use too much, especially if your recording was made in the type of room most home recordists have to work in. Compressing the drum overheads when you've made the recording in a great-sounding live room can really enhance the room's contribution to the sound, but if you struggled to record the kit in your bedroom or garage, excess compression will add a roomy character that probably doesn't flatter the kit sound. Far better to keep the sound as dry as possible by using absorbers and then add a good convolution room ambience afterwards.

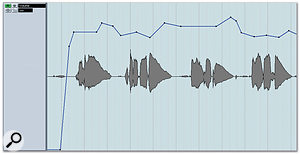

Especially on very dynamic sources such as vocals, you can often achieve more transparent level control by using volume automation instead of, or as well as, compression.

Compression also brings up background noise, such as clothing rustles in pauses, and in the case of acoustic instruments, players' breathing. It's frequently the case that the less EQ and compression you use, the more natural and open the mix sounds, so it's often a good idea to use track level automation to smooth out the largest level discrepancies instead of lowering the compression threshold or boosting the ratio. This is particularly true of vocals. You can also use track level automation to duck loud backing parts to make more space for the vocals; often a dB or two of gain change is all that's needed to give the mix more space. As a rule, though, don't change the drum and bass levels once you have your balance, as this will make the mix sound unnatural. Dynamics processing, like reverb, is subject to changes in fashion, and obvious compression that would be out of place in some musical styles can be a feature of others.

Vocals and acoustic guitar will probably need some degree of compression to keep the level consistent and to add density to the overall sound, but don't overdo the gain reduction — you may need as little as a couple of dBs, especially if you've also used level automation to deal with the worst excesses. Even where you want a hard-compressed sound for artistic reasons, you're unlikely to need more than 10dB of gain reduction on the loudest peaks.
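If you want a feel for how threshold and ratio translate into gain reduction, the static behaviour of a simple hard-knee compressor can be sketched in a few lines. This ignores attack, release, knee shape and make-up gain, and the function name is just an illustration.

```python
def gain_reduction_db(level_db, threshold_db, ratio):
    """Gain reduction (in dB) of an idealised hard-knee compressor.

    Above the threshold, every `ratio` dB of input yields 1 dB of output.
    """
    if level_db <= threshold_db:
        return 0.0                     # below threshold: signal is untouched
    overshoot = level_db - threshold_db
    return overshoot - overshoot / ratio
```

A peak 4dB over the threshold at a 2:1 ratio is pulled down by 2dB, which is in the 'couple of dBs' territory mentioned above; getting to 10dB of reduction takes either a much lower threshold or a much higher ratio.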

Getting It Right At Source (2): Guitars

Electric guitars deserve a feature-length article all on their own, but one of the most common mistakes is to use too much distortion on a continuous rhythm part. The resulting broad spectrum of noise soaks up all the space in your mix and makes it hard to find a good balance. If you can arrange to record a clean DI feed at the same time as the miked amp or DI'd amp simulator, that can be processed later on to replace or augment your original guitar part if things don't work out. If you're miking an amp, spend a while trying different mics and mic positions to ensure that you have the best possible sound at source.

Bass guitars are also trickier than they may seem. If you use a straightforward DI box, you may end up with a sound that seems great when you hear it on its own, but it simply disappears when the rest of the mix is playing. In pop and rock music, bass guitars benefit from a bit of dirt, so a miked amp or an amp simulator will probably give a more usable sound than a straight DI. In fact a lot of what you hear from a bass guitar on a record is often really lower-mid, not just bass, which is why you can still hear the bass line on a small transistor radio that probably doesn't reproduce frequencies much lower than 150Hz or so.
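The transistor radio point can be checked with some simple sums. The open low E string on a four-string bass has a fundamental of about 41.2Hz, and its harmonics fall at whole-number multiples of that. The sketch below, with a helper name of my own, lists which harmonics land above a given cutoff; the 150Hz figure is the rough one quoted above, not a measurement.

```python
def harmonics_above(fundamental_hz, cutoff_hz, count=8):
    """Return (harmonic number, frequency) pairs at or above the cutoff."""
    return [(n, fundamental_hz * n)
            for n in range(1, count + 1)
            if fundamental_hz * n >= cutoff_hz]
```

For the low E, the first three harmonics fall below 150Hz, so what a small radio reproduces starts at the fourth harmonic, at roughly 165Hz. Our ears infer the pitch from those upper harmonics, which is why a bass sound with some harmonic 'dirt' survives on small speakers.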

Spectral Spread And EQ

Getting the right balance is also a matter of thinking about how you want the different instruments and voices to work together. With most types of music, you have rhythm, melody and chordal parts, with drums and bass guitar providing the low end in conventional bands. These elements are spread across the audio spectrum, so you have kick drums, bass guitars and so on at the very low end, voices and guitars somewhere in the middle, and cymbals at the top. Some instruments cover a wide range, such as the acoustic guitar, which can produce a lot of high-frequency harmonics. A triangle, by contrast, has a fairly restricted frequency spectrum.

Try not to reach for EQ unless there's a definite need for it.

The conventional tools for adjusting the spectral balance of a mix and its individual elements are parametric EQs and filters. Like compression, EQ is something that shouldn't be applied automatically; it should be used only where you have a clear aim in mind, whether that aim is to fix a problem, sweeten a sound or introduce a creative effect. In most cases, problems are best addressed by cutting frequencies that you don't want, rather than boosting the ones you want more of. Where you must use boost, it sounds most natural if you use a fairly wide bandwidth and use absolutely no more than necessary. Cuts can be made much narrower and deeper without sounding unnatural.

As a rule, those instruments that occupy only a narrow part of the spectrum are easiest to place in a mix, as they don't obscure other parts. Rich synth pads and distorted guitars, on the other hand, cover a lot of spectral real estate and so are harder to place effectively. Bear in mind that distortion (of whatever kind) adds higher-frequency harmonics to the basic sound, so a distorted bass guitar will have harmonics across the mid-range, and a distorted electric guitar will have harmonics that reach into the higher end of the mid-range before the frequency response of the guitar speaker or speaker simulator rolls it off.
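The claim that distortion adds harmonics is easy to demonstrate. The sketch below hard-clips a pure sine wave and measures individual harmonics with a plain discrete Fourier transform. Symmetrical hard clipping is only a crude stand-in for a guitar amp or fuzz box, and the names and the 0.5 clip level are assumptions for the example.

```python
import cmath
import math


def harmonic_level(signal, bin_index):
    """Magnitude of one DFT bin, scaled so a full-scale sine reads 1.0."""
    n = len(signal)
    total = sum(x * cmath.exp(-2j * math.pi * bin_index * i / n)
                for i, x in enumerate(signal))
    return 2 * abs(total) / n


N = 1024
CYCLES = 8   # the fundamental sits exactly on bin 8, so harmonics sit on 16, 24...

clean = [math.sin(2 * math.pi * CYCLES * i / N) for i in range(N)]
clipped = [max(-0.5, min(0.5, x)) for x in clean]   # symmetrical hard clip
```

Comparing `harmonic_level(clean, 24)` with `harmonic_level(clipped, 24)` shows a third harmonic that is absent from the clean sine but clearly present after clipping. Because the clipping is symmetrical, the even harmonics (bin 16, for instance) stay essentially at zero.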

The mid-range is the most vulnerable part of the audio spectrum, where our ears are very sensitive and where many different instruments tend to overlap in the frequency domain. You will often find that some of these broad-spectrum sounds can be squeezed into a narrower part of the spectrum using high and low cut filters to trim away unwanted very low or very high frequencies. For example, a miked acoustic guitar with no EQ produces quite a lot of low-mid that sounds great in isolation, but might cloud a more complex pop mix. Taking out some of the low end makes the guitar sit better in the mix without spoiling its perceived character, even though you might think it now sounds thin in isolation. To some extent, a mix is an illusion and it is about what you believe you hear rather than what you actually hear.

It's often the case that filtering out the low frequencies on some of the elements in a mix can make the overall sound clearer, without noticeably affecting the sound of those instruments in the mix.

Where the mid-range is overpowering the rest of the mix, there's scope for cutting frequencies between 150Hz and 500Hz to take out any boxy congestion. By contrast, bass guitars often need a little boost in this area, especially if they've been DI'd, as that's the part of their spectrum that contains their sonic character. If a part of the mix, or the whole mix for that matter, needs a bit more high-end clarity, try combining a broad, gentle boost (+3dB) at around 12kHz with some subtle cutting in the 150 to 300 Hz region (perhaps just 1 or 2dB). You'll also find that some bass sounds can sometimes be made more manageable by actually taking out some of the very low end altogether using a high-pass filter; frequencies below 50Hz are rarely reproduced by domestic playback systems, yet they still take up headroom and can place unnecessary stress on loudspeakers. High and low cut filters are also ideal for narrowing the frequency range that a particular instrument occupies.

In addition to using filtering to narrow the space taken up by specific mix elements, you can also try creating more space between notes and phrases, for example, by muting or editing sustained guitar chords instead of just letting them decay naturally. Usually it is possible to arrive at a compromise solution combining EQ with cleaning up the arrangement to get all the parts to sit in the mix without getting in the way of each other. Again, use your CD library as a reference to see what sounds work on other people's records. Once you analyse them in detail, you'll quite often find they are rather different to how you originally perceived them.

It pays to be aware that not all equalisers sound the same, so try out whatever you have to hand to see which gives the most musical sound. It's usually a good idea to clean up the unused low extremes of the frequency range for non-bass instruments anyway. It's surprising how much LF rubbish can be present in recordings; even if this cannot easily be heard on small monitors, removing it will often make the mix sound much more open and cleaner!
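For the curious, a low-cut filter in its simplest form is only a few lines of code. The sketch below is a first-order (6dB per octave) high-pass filter of the textbook RC type. The low-cut filters in a typical EQ plug-in are usually steeper, so treat this as an illustration of the principle rather than a model of any real product; the function name and the 50Hz example are my own choices.

```python
import math


def high_pass(samples, cutoff_hz, sample_rate):
    """First-order high-pass filter (discrete version of a simple RC filter)."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    output = []
    previous_in = 0.0
    previous_out = 0.0
    for x in samples:
        # Pass changes in the input, let steady (low-frequency) content decay.
        y = alpha * (previous_out + x - previous_in)
        output.append(y)
        previous_in = x
        previous_out = y
    return output
```

Fed with a 50Hz cutoff at a 48kHz sample rate, this removes any DC offset completely, knocks a 20Hz rumble down to well under half its original level and leaves a 1kHz tone virtually untouched.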

Getting It Right At Source (3): Drums

Drums are particularly problematic in small rooms. If the kit is tuned well and played well, the close mics will probably sound pretty good but the overhead sound suffers, especially in rooms with low ceilings. Putting some acoustic absorbers between the mics and the ceiling will reduce the amount of coloration, and in most cases, you can roll out some low end from the overhead mics after recording to take away the low-frequency mush that tends to accumulate in bad rooms.

Adding Reverb

Because rock and pop music tends to be recorded in fairly dead acoustic spaces, it is nearly always necessary to add artificial reverb, but today's styles use a lot less obvious reverb than those of a couple of decades ago. Convolution reverbs that 'sample' real spaces can sound exceptionally good on acoustic instruments, whereas we've got so used to classic digital reverbs and plates on vocals that we tend to consider that as being the 'right' sound. This is a subjective decision, though, so if you don't have the confidence to decide what works best, go back to commercial mixes in the same genre, and try to hear what kind of reverb they've used. As a rule, don't add reverb to bass sounds or kick drums, with the possible exception of very short ambience treatments, and don't let the reverb fill in all the spaces in your mix, because the spaces are every bit as important as the notes that surround them.

A useful rule of thumb is to set the reverb level where you think it needs to be, then just back it off another 3 or 4 dB or so. And always check the reverb level in both mono and stereo — there can be a big difference if the reverb is a particularly spacious one. In that case, you'll have to judge the best compromise between the mono mix sounding too dry and the stereo mix sounding too wet. If all else fails, change the reverb program! I find that for most reverb plug-ins, the right starting point is with the reverb set at around the equivalent of a 20 percent wet mix. You can fine-tune either way from there. Combining a longer reverb at a lower level with a louder but shorter reverb can also create a nice effect without flooding all your space with unwanted reverb. It is not uncommon to filter out some low end from a reverb to prevent it clouding that vulnerable low-mid range, and I'll often apply some low cut starting at 200Hz or so.
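It can help to know what a wet/dry percentage means in level terms. The sketch below assumes the plug-in's mix control is a straight linear crossfade between dry and wet signals, which is a common arrangement but by no means universal, so check how your own reverb behaves. The function names are mine.

```python
import math


def wet_relative_to_dry_db(wet_fraction):
    """Level of the wet signal relative to the dry one for a linear mix control.

    wet_fraction is between 0 and 1 (exclusive); the dry signal is assumed
    to be scaled by (1 - wet_fraction).
    """
    return 20 * math.log10(wet_fraction / (1 - wet_fraction))


def back_off(level_db, amount_db=3.0):
    """Apply the 'set it, then pull it back a few dB' rule of thumb."""
    return level_db - amount_db
```

Under that assumption, a 20 percent wet mix puts the reverb about 12dB below the dry signal, and a 50 percent mix puts the two at equal level.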

Getting It Right At Source (4): Separation

Poor separation between instruments is often the enemy of a smooth mix, and achieving separation is always difficult when musicians are playing together in the same small room. Spill not only makes a mix more difficult to balance, but such spill as you do pick up will include more room coloration as the source is further from the mic. Add to this the fact that cardioid mics tend to sound quite dull off-axis anyway, and you'll appreciate why too much spill ends up making the mix sound muddy, like a painting where the colours have been worked too much. (Of course if you do have a great-sounding room and the right mics, spill can help contribute to the character of a recording, and on many early records it did exactly that.)

There are two main ways to maintain separation between miked instruments that are playing at the same time: one is to put lots of space between them, the other is to use acoustic screens or multiple rooms to separate them. It is also important to consider what the null of a mic's polar pattern is pointed at as well as what the front of the mic is aimed at! In other words, use the null of the polar pattern to reject the sound you don't want, reducing the spill by placing the mic with that in mind. For example, cardioid mics are least sensitive directly behind them, hypercardioid mics are least sensitive at roughly 110 degrees off-axis (around 70 degrees off their rear axis), and figure-of-eight mics at 90 degrees off their main axis.
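The null positions follow from the textbook first-order polar pattern equation, in which sensitivity is a mix of an omni component and a figure-of-eight component. The sketch below uses the idealised coefficients (0.5 for cardioid, 0.25 for hypercardioid, 0 for figure-of-eight); real microphones only approximate these, especially at the frequency extremes, and the function names are my own.

```python
import math


def sensitivity(omni_part, angle_deg):
    """Idealised first-order pattern: omni_part + (1 - omni_part) * cos(angle)."""
    return omni_part + (1 - omni_part) * math.cos(math.radians(angle_deg))


def null_angle_deg(omni_part):
    """Angle from the front axis at which the idealised pattern drops to zero."""
    return math.degrees(math.acos(-omni_part / (1 - omni_part)))
```

For the idealised patterns this puts the cardioid null at 180 degrees, the figure-of-eight null at 90 degrees and the hypercardioid null at about 109.5 degrees from the front, so it pays to angle a hypercardioid so that the offending source sits off to the rear sides rather than directly behind it.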

Miked acoustic instruments and voices can usually be added after recording the main tracks, and for some styles of music, guitars and bass can be recorded via power-soaks or Pod-style amp simulators, in which case the player can probably sit in the control room alongside the engineer. It's also true that in a smallish room you generally get a bigger and better sound miking a small guitar combo than a large stack. All this may seem a bit far removed from the actual art of mixing but trust me, it's a bit like painting and decorating: the preparation is the most important part of the job.

Separation Via Panning

At this stage you can start to think about pan positions. Depending on how the pan controls work on your system, the subjective balance may change very slightly when you adjust them, so recheck the balance after you've decided on your final pan positions. Bass sounds and lead vocal tend to stay pinned to the centre of the mix; vocals because they are the centre of attention and that's where you expect them to be, and bass sounds because it makes sense to share this heavy load equally between both playback speakers. If you're planning to cut vinyl from your final mix, keeping the bass in the centre will help avoid cutting problems.

Combining different reverbs can give results that wouldn't be possible with a single one. This mix uses a large hall sound as a basic instrumental reverb, with an early-reflections 'ambience' patch operating almost as a loudness enhancer, and a plate reverb and short delay to add richness to the lead vocal.

Stereo instruments, and I include drum kits in this category, can be panned to a suitable width, but ideally not entirely left and right as they will sound unnaturally wide. If you have a stereo piano and want to place it to one side of the mix, you could, for example, set the right channel's pan control fully clockwise and the left channel's to between 12 o'clock and two o'clock. Note that when you have a single pan control on a stereo channel in a DAW mixer, this won't do quite the same thing as a pair of offset mono pans. If you do as I've described with offset mono pan pots, the left and right piano tracks will be equally loud, but the left one will be panned centrally, making the piano as a whole sit somewhere between the centre and right of the stereo field. A single balance pot, by contrast, will keep the two tracks fully left and right, but offset their relative levels, in this example reducing the level of the left channel. This is a small but often important distinction.
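The difference between offset mono pans and a single balance control is clearer with numbers. The sketch below assumes a constant-power (sine/cosine) pan law and a very simple balance control that just attenuates the opposite side. Real DAWs offer various pan laws, so the exact figures will differ, and all of the function names are hypothetical.

```python
import math


def pan(sample, position):
    """Constant-power pan. position runs from -1 (hard left) to +1 (hard right)."""
    angle = (position + 1) * math.pi / 4
    return sample * math.cos(angle), sample * math.sin(angle)


def offset_mono_pans(left, right, left_position, right_position):
    """Pan each side of a stereo source separately and sum onto the mix bus."""
    left_to_l, left_to_r = pan(left, left_position)
    right_to_l, right_to_r = pan(right, right_position)
    return left_to_l + right_to_l, left_to_r + right_to_r


def balance(left, right, position):
    """Simple balance control: turning towards one side attenuates the other."""
    left_gain = 1.0 - max(0.0, position)
    right_gain = 1.0 + min(0.0, position)
    return left * left_gain, right * right_gain
```

Feeding a signal into the left input only, `offset_mono_pans(1.0, 0.0, 0.0, 1.0)` sends it equally to both outputs, so it has moved to the centre. `balance(1.0, 0.0, 0.5)` leaves it entirely in the left output at half the level, so it has stayed where it was and simply become quieter.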

Backing vocals can also be spread out, as can doubled guitar parts; again, look to commercial records for guidance if you're not sure how far to go. If you're mixing typical band material, trying to emulate the approximate on-stage positions of the band members is a good way to start — but keep that bass in the middle no matter where the bass player normally stands! Often, stereo effects such as reverb and delay add a lot of width to a mix, even when most of the raw mix elements are panned close to the centre. Headphones tend to exaggerate the stereo imaging of a mix, so it's a good idea to check that it works on phones too.

Keeping Your Head

By now you may have listened to the song so many times that you're not quite sure what you're listening to any more, so burn off a test CD, play it on as many different systems as possible and make notes about what you do and don't like. Don't worry if it sounds quieter than commercial mixes, because the pumping up of loudness is generally done at the mastering stage, but try to ensure that you are achieving a similarly satisfying overall tonal balance. Mastering can also make a mix sound a bit more dense and airy, so don't worry if you're a bit short of the mark there, but you should be aiming to get as close as possible. Come back to the mix after a day or two, make changes according to your notes and then repeat the process. If at all possible, try to live with the mix for a few days before calling it finished, rather than trying to do a final mix after a busy all-day session!

Mixing Tips From The Pros

Alan Parsons (The Beatles, Pink Floyd, Alan Parsons Project)

- I tend to regard mixing as a bit of a formality. I work hard to make tracks sound good as a monitor mix while recording. Of course sometimes a track comes alive at the mix stage, but normally the mix process is just a final tweak for me.

- Mixing is much easier in the DAW/hard disk recording world, where you can apply key effects and automate level changes as you go during the recording and overdub process. Then all you have to do is get a good instrument and spectral balance. I listen to a lot of other people's records at the end of a recording stint to assess fashions in spectral balance — ie. how much bass is fashionable and how bright are people mixing? I hate the level war, incidentally, and always go for dynamics rather than a squashed-up sound to make a track sound loud.

- I always know when a mix is right — I hate coming back, doing recalls and so on. But having said that, it always makes sense to run off a backing track or stems which can be revisited without much effort for raising or lowering key parts or vocals without having to repatch all the outboard gear. I never compress or limit a mix.

Pip Williams (Status Quo, Moody Blues, Elkie Brooks)

- I am of the school that likes to think of a mix as a performance, as much as the recording itself is a performance of the song. A DJ never has two gigs the same, even using the same records. He gives a performance.

- For me, the important thing is to get across the meaning of that band or artist. Should it be guitar-led? Should the vocals dominate or sit in the mix? Consequently, I consider mix planning as important as my pre-production planning for the actual recording sessions. For example, where do I want it to sound good? In a club, stadium, on radio, in the car?

- For me, it's important to push up a rough balance, then make a note of severe level peaks during the song. (In the early days, I once did a big orchestral mix and ran out of headroom as the track developed. I had to start again and ended up balancing the end first!)

- I hate relying too much on compression just to control levels. A bit of peak limiting is nearly always essential, however.

- Try pushing up the lead vocal and starting with that instead of the drums. A hugely successful female artist once said to me "Pip, I hope you're going to spend as much time on my vocal as on the drums!" Fair comment!

- If you're using a 'proper' desk, re-patch it so that if you're riding a lead vocal level, you're sitting in the centre of the monitors. Patch the effects returns in a tidy and logical fashion, for instance short reverbs, long reverbs, short delays, long delays and so on.

- If you're struggling to make a certain overdub sit in the track, chances are that the reason you're struggling is because it doesn't make it anyway!

- Take frequent short breaks and don't dwell too long on things that are probably insignificant anyway.

- Watch those powerful low mids. Often, taking a bit of the boxiness out (around 350-450 Hz) can free up the top and low end.

- Try to listen to a copy of the mix at home, while you're doing the cooking or ironing! Walk round the house. If the key elements are still audible, then the mix is well on the way. After all, that's how the ubiquitous Mrs Smith in Doncaster usually listens to music!

- I hear stuff on the radio that sounds 'over-automated'. In other words, every bit of on-the-fly excitement has been mixed out and it sounds safe and unspontaneous. Some of my best mixes have been manual, where you get the basics right and then just ride the faders. You may accidentally push the guitar solo up too much and find that it's magic! Beatles tracks were full of lucky surprises like that.

Spike Stent (Oasis, Björk, Massive Attack, Spice Girls and many, many more)

- I hate having faders all over the place. During my initial passes of the material through the SSL I will be pushing all the faders up and down and try to come to some basic balance settings. You'll see me make pencil marks everywhere as I'm pushing things around to get a little bit of a vibe going. After that I'll basically go back to the top of the track, and I'll null all the faders on the board. I will do this for different sections of the song, like the verse or the chorus. So when all the faders are aligned in all sections of the song, it's easier for me to see where I'm going and what changes I'm making. It helps me to get the right balance.

- I like to get things to sound in such a way that you can pick a sound and feel like you can grab it out of the speakers.

- I put the SSL Quad compressor across the stereo output buss of the mix. Basically what I do with every mix is put a GML EQ across the stereo buss of the SSL, and just lift the top end, above 10k, so I won't have to use as much high end from the SSL. The mid and high end of the SSL is a little harsh and cold. So I turn that down a bit, and then lift the high end with the Massenburg to make the mix sound warmer and add sheen. And I really get that SSL Quad compressor pumping. I'm not shy with it. I always set it the same way, 2:1, and off we go.
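
As a rough illustration of what a 2:1 ratio means in numbers, here is a minimal Python sketch of a hard-knee static compression curve. The -20 dB threshold and the function names are assumptions made for the example, not settings taken from any of the engineers quoted here, and a real buss compressor also has attack, release and knee behaviour that this ignores.

```python
def compressed_level_db(input_db, threshold_db=-20.0, ratio=2.0):
    """Static hard-knee compressor curve (threshold value is an assumption).

    Below the threshold the level passes unchanged; above it, every `ratio` dB
    of extra input yields only 1 dB of extra output.
    """
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio


def gain_reduction_db(input_db, threshold_db=-20.0, ratio=2.0):
    """How many dB the compressor is turning the signal down at this input level."""
    return input_db - compressed_level_db(input_db, threshold_db, ratio)


if __name__ == "__main__":
    for level in (-30.0, -20.0, -10.0, 0.0):
        print(level, compressed_level_db(level), gain_reduction_db(level))
```

At 2:1 with this assumed threshold, a peak 20 dB over the threshold comes out only 10 dB over it, which is why even a gentle ratio can be made to work hard if the threshold is set low enough.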

Tom Lord-Alge (Marilyn Manson, Hanson, Santana, Avril Lavigne and innumerable others)

- I put all the faders up, and listen to the whole song once or twice; usually only once. By the end of the song I have a clear picture of where I'd like it to go. Then I begin working. The possibilities are endless, so it can help to put up records that you like, compare them whilst you're working and try to copy the sound. I've done that.

- I begin with the rhythm section, and then gradually bring in all the instruments. I start with the rhythm section, because I always want it to be very prominent. I come to the vocal last, and I'm not sure I agree with people who say that they leave a hole for the vocal in the backing track. I want the vocal and the instrumental track to be strong, and I don't want there to be any holes. Once I'm familiar enough with the vocal to know where it's singing and what its range is, I generally shut it off for a good portion of the mixing process. When I then bring the vocals back in, I may sometimes go back and tone down some aspects of the instrumentation. The vocal is the most important thing, it's the personality of the song. It's what listeners are going for, so it's important to make it commanding. But I certainly don't want the accompaniment to take a back seat.

- The main thing about compressors is to forget what they tell you and just turn the knobs until it sounds good to you. Don't look at the meter; throw out the book. For me, bass, drums and vocals are really important, and I like to make them sound pronounced and aggressive. I try all sorts of things; there's no rule against using 20 or 30 dB of compression. I may even put compressors in series.

Bob Clearmountain (Chic, Roxy Music, the Rolling Stones and almost everyone else!)

- I usually try to put everything up at once and do a rough mix to listen to, just to see what's going on.

- With pop music, I tend to focus on the lyric and the lead vocal more than anything else, trying to get a sense of what the song is. That matters more than anything; more than what the drums or guitars are doing. I tend to start with the vocals, and then I might get into guitars and keyboards. I'll try to find effective pan settings for everything, thinking of it like a stage. Then I'll get a basic drum balance and build from there, but not really in any predetermined order. It all depends on the track.

- Once I've got a rough mix, then I'll go through it again and solo individual tracks until I get a really good idea of what's on each one and what the 'role' of each part is. I like to think of the instruments as characters, assessing what their contribution is, and what each thing adds to the song.

- I'll tend to use the automation to write extensive rides on the vocal faders to make sure that the vocal can always be heard, rather than using compression. I might compress for the sound, to get a certain kind of effect, but not to level it.

Steve Levine (Culture Club, Beach Boys, China Crisis, Gary Moore, Westworld)

- I don't start with just the bass drum or kick drum. I listen to the whole thing just to get the feel. Spending three hours on a kick sound leads to insanity!

- Take regular breaks. Tired ears produce tired mixes.

- Listen to other people's stuff on your system — even if you have cheap monitors at least you will get a reference point. Some of my best mixes were done on Auratones! Mastered or commercial CDs will always sound louder, but not necessarily better than your stuff.

- Balance the tracks for the style of music you are recording, but always get the vocal (if it has one) sitting well with the rest of the instruments.

- Don't over-compress and EQ everything, and just because you have a million plug-ins, it doesn't mean you have to use them! Often less is more.

- Break the rules! Original ideas win out, so try experimenting with the arrangement and if all else fails, consider hiring a pro! It might just be worth it.

Andy Jackson (Pink Floyd, David Gilmour)

- Joe Meek said "If it sounds right, it is right." I think this can be applied to reverb in particular. A lot of modern sampler/synths come with reverb on everything, even bass guitar samples, which is about selling gear, not what works in a mix. Try setting the whole mix up with no reverb at all, then see what you need. It really doesn't matter if something sounds too dry when soloed — does it need it in the track? Similarly, if you remove the reverb from some element in the mix and you can't hear the difference, leave it off. It'll help if you've recorded any 'real' things (things recorded with microphones) with some space around them.

- Short delays can also create the impression of space without cluttering up the track with reverb. As an alternative, try a stereo harmoniser with a tiny bit of pitch change and 20-50 ms of delay — very useful.

Photo: Andy Sidden

Midge Ure (Musician, composer, engineer)

- Mixing is totally subjective. In my case, sitting the vocal comfortably in the mix so it doesn't protrude but is still audible is the key to the mix. These days, mixing as you go seems to be the way forward instead of recording and mixing being two totally different processes. It also helps while recording to constantly 'tweak' the mix balance, so that when you come to record the vocals you have a very good representation of how the finished track will sound as you sing.

Michael Brook (Composer and world music producer)

- I get the best mixes using mix automation as it allows me to develop a mix and then come back to it as many times as I need to make adjustments.

- Taking elements out and trying to boil each section down to its essential elements is often a very effective way to improve a mix. In my experience, what you remove from a mix is absolutely as important as what you put in. This is even more relevant when there is a vocal involved.

- Soloing elements that may interfere, such as bass and kick drum, then making sure that they work together is often very important for achieving clarity. Bandwidth limiting by using high and low-pass filters can really help also in this area.

- Because I often work with uncommon musical styles, I don't start a mix with any particular instrument. I try to figure out what is the most important element and bring other components into the mix in descending order of priority.

- In my experience, mixing is always hard.

Louis Austin (Fleetwood Mac, Queen, Thin Lizzy, Leo Sayer, Deep Purple, Nazareth, Clannad, Alvin Lee, Slade, Judas Priest)

- Though I've now put engineering behind me, my method usually involved pushing up all the faders and then making a 15-30-second rough 'balance' to find out what was on the tape. Then I'd listen to the complete song at least a couple of times before focusing on the rhythm section: snare, kick, kit and bass guitar. This would be followed by the guitars and any other integral bits. Finally I'd move on to the remaining mix elements using just a rough vocal balance, then start thinking about effects, reverb, vocal sounds and so on. A fair amount of tinkering goes on here to get everything to sit well, as it affects what you did earlier.

- I spent anything from two to 10 hours or more perfecting fader moves and getting the vocals to work over the racket! This would include changing or fine-tuning effects.

Eddie Kramer (Jimi Hendrix, the Rolling Stones, Led Zeppelin, Traffic, Peter Frampton, Carly Simon, Joe Cocker, Johnny Winter, David Bowie, the Beatles, Bad Company)

- Do I simply push up all the faders and then start to balance from there? No! There are certain building blocks within a mix that to me are of prime importance. What does the style of the song tell you about the direction the mix should take? Is it folk, rock, R&B, pop, rap...?

- A typical mix will start with drums, then the bass, then the guitars, followed by keyboards, backing vocals, then the lead vocal. If I also tracked the song, I'll already be familiar with it, so the mix and final result is already formulated in my brain which makes it easier to deliver a mix. Don't forget: up is louder!

Dave Stewart (Musician and composer)

- Wherever possible I try to iron out discrepancies of level and tone when recording, rather than leaving it all to the mix.

- I was taught by the great engineer Tom Newman (of Tubular Bells fame) to start a mix by setting levels for 'anchor tracks' — drums, bass, basic guitars, keyboards and so on. Usually these are backing parts which stay at a fixed level throughout. I then mix the other tracks around these anchors. Obviously, the more anchor tracks you have, the easier it is to mix what's left!

- Most of the work in a mix concerns riding the vocal so that it 'sits' right and you can hear the words without obliterating the backing. If you're not using mix automation, this is very demanding and you might need a second person to take care of riding other tracks. Automation takes the strain out of mixing, but because you can edit every tiny detail, there's a danger of listening to the track too much and losing the freshness and simplicity of a 'live' mix.

- Here's a useful tip from my mate Ted Hayton: when you start mixing, turn the monitors up loud; that way, you won't end up with all your faders pushed full up!

Malcolm Toft (Head engineer at Trident Studios, mixed the Beatles track 'Hey Jude')

- I always start a mix from the rhythm section up, starting with the drums, then the bass, then rhythm guitar or piano and so on, adding the lead vocal last. After listening to instruments in isolation (especially drums, as I start with them), I will often change their sound, as they interact with other instruments as they are brought into the mix. That means your initial instrument sound is always only a guide, and will depend on the genre of music and the overall sound you are trying to achieve. I'm also 'old school' in that I like to record dry and then add reverb and effects as I mix, which I find is much more controllable. Often if you add reverb or compression before you've heard the entire mix, you'll want to modify it later on, and if it's 'printed' you can't do that.