Just some of the fascinating new instruments and controllers demonstrated at 2005's NIME conference (from top left): Dan Overholt's Overtone Violin, some of the Speak And Spell-based 'circuit-bent' instruments used by composer Giorgio Magnanensi in a NIME concert performance, a trio of MIT's Beatbug controllers, the Bangarama headbanging controller from the University of Aachen, and Stanford University's tuba-based SCUBA system.

Photo: (from top left) Dan Overholt, Bernardo Escalona Espinosa, Gil Weinberg, Bernardo Escalona Espinosa, Juan Pablo Cáceres

The New Interfaces for Musical Expression (NIME) conference has been running for five years, and is a great place to see and discuss new ideas that may provide the musical controllers of the future. SOS was in Vancouver to learn more...

Where are the musical instruments of tomorrow going to come from? Surely, now that every computer and game console has more musical capabilities than an entire synth studio of 10 years ago, there should be a flood of new electronic instruments coming from factories worldwide. But making new instruments is risky: you have to design them, build them, and perhaps hardest of all, teach people how to play them — and not lose your shirt in the process.

Fortunately, there are plenty of people in universities and research facilities who are employing new, cheaper technologies, and are hard at work thinking up and building the next several generations of electronic music controllers. How do you find these people? One way is to go to their conference: New Interfaces for Musical Expression, or NIME. This year's NIME, held at the University of British Columbia in Vancouver, was the fifth, and it offered plenty to see for the musicians and scientists who showed up from all over the Americas, Europe, and Asia. There are some very creative people out there — and some of them are really out there.

Keynote Speakers

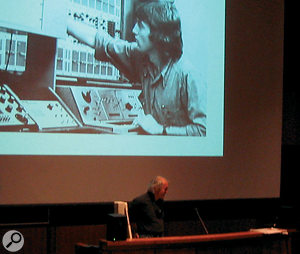

Music and video artist Bill Buxton on stage at NIME, 'then' (above) and 'now' (below). Photo: Bernardo Escalona Espinosa

The tone of the conference was set by Bill Buxton, a Canadian who has been one of the pioneering forces in both computer music and video for the last 25 years, and who was one of the first to propose the concept of 'gesture controllers' as musical instruments at another conference in Vancouver over 20 years ago (see the 'Digicon 83' box below). At the start of his keynote speech he threw up a slide of an old Revox reel-to-reel tape deck and proclaimed, "This is the enemy."

The problem with electronic music concerts historically, he said, is that someone would walk onto a stage, push a button on a tape deck, and walk off. Today, people do the same thing with laptops. "If you're sitting at a computer and typing, why should I care?" he lamented. If we're going to a live performance, he opined, we want to see the performer doing something interesting to create the music. "The goal of a performance system," he stated, "should be to make your audience understand the correlation of gesture and sound."

Another keynote speaker was Don Buchla, who has been actively developing new electronic instruments for four decades. He presented a comprehensive history of electronic performance instruments, including many less well-known devices like the Sal-Mar Construction. Built in the late 1960s by composer Salvatore Martirano, it resembled a giant switchboard surrounded by 24 ear-level speakers, and had almost 300 switches so sensitive they could be operated by brushes.

Buchla also discussed a number of his own designs, some of which made it to market and some didn't. One of these was an EEG-to-control-voltage converter that responded to alpha waves. "I did a performance in Copenhagen," he recalled, "but while I was up on stage, I found I couldn't generate any alpha waves, so I didn't make a sound for 15 minutes. It was the longest silence I've ever heard."

The Tools That Make This Possible

As you may gather, there's a lot going on at the college level in the world of new electronic musical instruments, partly because the tools for building custom performance systems are continuing to become cheaper, more plentiful, and easier to use.

On the hardware side, there's now a host of different sensors that respond to various physical actions, developed for hi-tech industrial, security, and medical applications, but easily adaptable to musical ones. These include force-sensing resistors (FSRs) such as you would find in MIDI drum pads, 'flex sensors' that change resistance as you bend them, like the fingers in a Nintendo Power Glove, and piezoelectric elements, which generate a voltage when they're struck, flicked, or vibrated. There are also tilt switches, airflow sensors, accelerometers, and infra-red and ultrasonic distance sensors. Slightly more expensive, but within the budgets of many education and research labs, are electromyography (EMG) sensors, which detect muscular tension. What's more, diagrams and tutorials for hooking these devices together are available in many locations on the Internet.
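To give a flavour of what those tutorials typically cover, here is a minimal Python sketch of one common hookup: an FSR (whose resistance falls as you press harder) wired as the top half of a voltage divider, read by an analogue-to-digital converter, and scaled to a 0-127 MIDI controller value. The 10kΩ fixed resistor, 5V supply, and 10-bit converter are assumptions chosen for illustration rather than figures from any NIME project, and the function names are hypothetical.

```python
def divider_voltage(r_sensor_ohms, r_fixed_ohms=10_000.0, vcc=5.0):
    """Voltage across the fixed resistor when the FSR sits between vcc and the output.

    Pressing harder lowers the FSR's resistance, so this output voltage rises.
    """
    return vcc * r_fixed_ohms / (r_fixed_ohms + r_sensor_ohms)


def adc_counts(voltage, vcc=5.0, bits=10):
    """Quantise a voltage as an ideal N-bit ADC referenced to vcc would (0 .. 2**bits - 1)."""
    max_count = (1 << bits) - 1
    return max(0, min(max_count, int(voltage / vcc * max_count)))


def to_midi_value(counts, bits=10):
    """Scale ADC counts down to the 7-bit (0-127) range of a MIDI controller or velocity value."""
    return counts >> (bits - 7)


if __name__ == "__main__":
    # Assumed resistances for a light touch, a moderate press, and a hard press.
    for r in (1_000_000.0, 10_000.0, 1_000.0):
        v = divider_voltage(r)
        counts = adc_counts(v)
        print(f"{r:>9.0f} ohms -> {v:.2f} V -> {counts} counts -> MIDI {to_midi_value(counts)}")
```

With these assumptions, an FSR reading 10kΩ under moderate pressure gives 2.5V, 511 ADC counts, and a controller value of 63; pressing hard enough to drive its resistance towards zero pushes the value up to 127.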

On the software side, most of the presenters used one of two musical 'toolkits'. Max, for Macintosh, is the older and more sophisticated of the two, and was originally written in the 1980s by American mathematician Miller Puckette. Max, now called Max/MSP, is a commercial program, frequently updated by and available from the San Francisco company Cycling '74 (one of the sponsors of this year's NIME). A few years ago, Puckette released a freeware program for Mac, Windows, Linux, and Irix called Pure Data, which is effectively a simplified version of Max. It lacks any fancy user-interface tools or formal tech support, but it comes at a price even university students can afford!

Instrumental Innovation

The three-day conference was packed with stuff to do and see: there were three dozen papers and reports, five large interactive sound installations, and four demo rooms showing a wealth of gadgetry. There were also jam sessions, which bore more than a passing resemblance to the cantina scene in Star Wars: Episode IV, and each night there was a concert.

The presentations were primarily about new one-of-a-kind instruments and performance systems, as well as new ways of thinking about performance. Some of the instruments were variations on conventional instruments. For example, Dan Overholt of the University of California Santa Barbara showed his Overtone Violin, a six-string solid-body violin with optical pickups, several assignable knobs on the body, and a keypad, two sliders, and a joystick where the tuning pegs usually go. In addition, an ultrasonic sensor keeps track of the instrument's position in space. It's played with a normal violin bow, but players wear a glove containing ultrasonic and acceleration sensors which allow them to make sounds without ever touching the strings. The whole thing is connected to a wireless USB system so performers don't have to worry about tripping over cables.

Golan Levin of Carnegie Mellon University demonstrating his projector-based Manual Input Sessions project on stage. Photo: Bernardo Escalona Espinosa

Then there was the Self-Contained Unified Bass Augmenter, or SCUBA, built by researchers at Stanford University. It starts with a tuba and adds buttons and pressure sensors to the valves. The sound of the instrument is picked up by a mic inside the bell, and sent through various signal processors such as filters, distortion, and vibrato, which are user-configurable and controllable. The processed sound is then piped through four bell-mounted speakers and a subwoofer under the player's chair.

A hotbed of development for new musical toys has been the Media Lab at Massachusetts Institute of Technology. One of the best known of these is the Beatbug, a hand-held gadget with piezo sensors that respond to tapping. A player can record a rhythmic pattern and then change it while it plays using two bendable 'ears'. An internal speaker makes players feel they are actually playing an instrument. But Beatbugs work best in packs, as Israeli-born Gil Weinberg, who is an MIT graduate and now director of a new music-technology program at Georgia Tech, demonstrated in a new system called 'iltur'. In this system, multiple Beatbugs are linked to a computer through Max/MSP software, and produce various sounds from modules in Propellerhead Reason. The Max program allows interaction and transformation of the input patterns, encouraging the players to bounce musical ideas off each other.

Some of the presentations used interfaces borrowed from completely different fields to produce musical sounds. Golan Levin, also recently of MIT and now at Carnegie Mellon University in Pittsburgh, Pennsylvania, used his keynote speech to describe his famous 'Dialtones: A Telesymphony'. In this project, some 200 members of the audience have their cell phones programmed with specific ringtones and are told where in the hall to sit. A computer performs the piece by dialling the phones' numbers in a pre-programmed order.

Describing himself as 'an artist whose medium is musical instruments' while admitting that he can't read or write a note of music, Levin told the audience, "Music engages you in a creative activity that tells you something about yourself, and is 'sticky': you want to stay with it. So the best musical instrument is one that is easy to learn and takes a lifetime to master." He also made the point that "the computer mouse is about the narrowest straw you can suck all human expression through."

As an example of a means of performing music that many non-musicians can master, Levin showed his 'Manual Input Sessions' project. Using an old-fashioned overhead projector and a video projector, the player of this system makes hand shadows on a screen, while a video camera analyses the image. When the fingers define a closed area, a bright rock-like object filling the area is projected, and when the fingers open, the rock 'drops' to the bottom of the screen. As it hits, it creates a musical sound whose pitch and timbre are proportional to the size and the speed of the falling rock. It's fascinating to watch.

Digicon 83

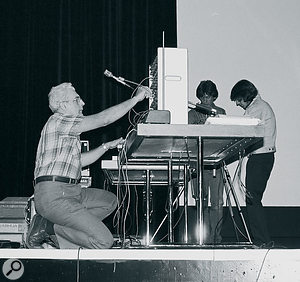

Bob Moog prepares to demonstrate the revolutionary new technology 'MIDI' in Vancouver, 1983. Photo: Paul D. Lehrman

For me personally, Vancouver has long been a symbol of dramatic change in the music industry. I was there once before, in 1983, at a meeting billed as the First International Conference on the Digital Arts, or Digicon, which was one of the most important events of its time. Before computers had taken over NAMM and Musikmesse, Digicon demonstrated many of the developments that would revolutionise every part of our industry, including the Sound Droid, an early (and never released) digital multi-channel editing and mixing system, and the video-processing technology behind the first long-form movie CGI sequence, the Genesis Project sequence from the then-recent Star Trek II: The Wrath Of Khan.

Who was there? Bob Moog, Herbie Hancock, Bill Buxton (who first articulated the concept of 'gesture controllers' at Digicon), Todd Rundgren, and pioneering computer composers Barry Truax and Herbert Brün. And what was there? The first Fairlight CMI with hard disk recording, PPG's Wave, and pre-release models of the Yamaha DX7 and DX9.

Oh yes: and a demonstration by Bob Moog, which caused jaws to drop in astonishment and delight all through the lecture hall, of a brand-new technology called 'MIDI'.

You can read a full report on Digicon 83 at http://paul-lehrman.com/digicon.

Music By Any Other Name

Some of the systems shown at NIME didn't require the 'player' to use any hardware at all. A group from ATR Intelligent Robotics in Kyoto, Japan, showed a system for creating music by changing one's facial expressions. A camera image of the face is divided into seven zones, and a computer continuously tracks changes in the image, triggering different MIDI notes in response.
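The ATR team's exact method wasn't described in detail, but the general idea of watching zones of a face image for change can be sketched with simple frame differencing. This minimal Python sketch assumes grayscale frames supplied as lists of pixel rows, splits each frame into seven equal-width vertical strips (a hypothetical layout; a real system would more likely follow facial features such as the eyes and mouth), and uses a hypothetical change threshold and note table.

```python
# Hypothetical note table: one MIDI note per zone (a C major scale starting at middle C).
ZONE_NOTES = [60, 62, 64, 65, 67, 69, 71]
NUM_ZONES = len(ZONE_NOTES)
CHANGE_THRESHOLD = 20.0  # hypothetical mean pixel change (0-255 scale) that counts as movement


def zone_bounds(width, num_zones=NUM_ZONES):
    """Split the image width into num_zones vertical strips as [(x_start, x_end), ...]."""
    if width < num_zones:
        raise ValueError("image must be at least one pixel wide per zone")
    edges = [i * width // num_zones for i in range(num_zones + 1)]
    return list(zip(edges[:-1], edges[1:]))


def zone_changes(prev, curr):
    """Mean absolute pixel difference between two same-sized grayscale frames, per zone."""
    height, width = len(curr), len(curr[0])
    changes = []
    for x0, x1 in zone_bounds(width):
        total = sum(abs(curr[y][x] - prev[y][x]) for y in range(height) for x in range(x0, x1))
        changes.append(total / (height * (x1 - x0)))
    return changes


def notes_to_trigger(prev, curr, threshold=CHANGE_THRESHOLD):
    """MIDI note numbers for every zone whose change since the last frame exceeds the threshold."""
    return [ZONE_NOTES[i] for i, change in enumerate(zone_changes(prev, curr)) if change > threshold]


if __name__ == "__main__":
    prev = [[0] * 14 for _ in range(7)]   # blank frame, 14 pixels wide by 7 high
    curr = [row[:] for row in prev]
    for row in curr:
        row[6] = row[7] = 255             # movement only in the fourth strip (columns 6-7)
    print(notes_to_trigger(prev, curr))   # [65]
```

A working system would send a note-on (and later a note-off) through a MIDI library for each triggered zone; the sketch just returns note numbers to stay self-contained.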

And then there was 'Bangarama: Creating Music With Headbanging', from Germany. This extremely low-budget project uses a guitar-shaped plywood controller. Along the neck are 26 small rectangles of aluminum arranged in pairs, which are used to select from a group of pre-recorded samples, most of them guitar power chords. Players wrap aluminum foil around their fingers so that moving them along the neck closes one of the circuits. The headbanging part consists of a coin mounted on a metal seesaw-like contraption, which is attached to a Velcro strip on top of a baseball cap. When players swing their heads forward, the metal piece under the coin makes contact with another metal piece in front of it, closing a circuit, which triggers the sample. Moving your head back up breaks the circuit, and ends the note.
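The Bangarama logic described above amounts to edge detection on a switch: the note starts when the head switch closes and stops when it opens, with the neck contacts choosing which sample plays. Here is a minimal Python sketch of that behaviour. It assumes each of the 13 pairs of neck contacts acts as one selector position and that the sensors are polled repeatedly, and it uses hypothetical sample names in place of the real recordings.

```python
# Hypothetical sample names: one per pair of neck contacts (26 contacts / 2 = 13 positions assumed).
SAMPLES = [f"power_chord_{i:02d}" for i in range(13)]


class HeadbangController:
    """Turns polled switch readings into start/stop events for pre-recorded samples."""

    def __init__(self, samples):
        self.samples = samples
        self.head_was_closed = False  # state of the cap-mounted coin switch at the last poll
        self.playing = None           # name of the sample currently sounding, if any

    def poll(self, neck_pair, head_closed):
        """Process one reading.

        neck_pair: index of the neck contact pair bridged by the foil, or None.
        head_closed: True while the coin switch on the cap is closed.
        Returns a list of (event, sample_name) tuples.
        """
        events = []
        if head_closed and not self.head_was_closed:
            # Head swung forward and closed the circuit: trigger the selected sample.
            if neck_pair is not None:
                self.playing = self.samples[neck_pair]
                events.append(("start", self.playing))
        elif not head_closed and self.head_was_closed:
            # Head came back up and broke the circuit: end the note.
            if self.playing is not None:
                events.append(("stop", self.playing))
                self.playing = None
        self.head_was_closed = head_closed
        return events


if __name__ == "__main__":
    rig = HeadbangController(SAMPLES)
    readings = [(2, False), (2, True), (2, True), (5, False), (5, True)]
    for neck, head in readings:
        for event in rig.poll(neck, head):
            print(event)
```

Reacting only to changes in the head switch, rather than to its level, means a held-down head plays one note instead of retriggering the sample on every poll.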

There was much, much more at NIME, but the last item I have room to mention here is McBlare, a MIDI-controlled bagpipe designed by Roger Dannenberg of Carnegie Mellon University as a humorous side-project. Based around a genuine set of bagpipes, McBlare incorporates a computer-controllable air compressor which will precisely match the breathing and arm pressure of a human piper, and a set of electrically controlled mechanical keys on the 'chanter' pipe. Under the control of an old Yamaha QY10 sequencer, McBlare could not only do a convincing imitation of a real piper, but could also create frenetic, complex riffs that no human could play (or would ever want to). At the end of Dannenberg's performance, a wag in the audience (OK, it was me) asked him, "Why are bagpipers always walking?" and straight away, he responded correctly: "To get away from the sound!"

Glimpsing The Future

The NIME conference wasn't the biggest, or the longest, but it was certainly among the most informative conferences I've ever attended. As happens at any good meeting of like-minded creative types, I made new friends, heard new theories and concepts, saw some amazing performances, and most importantly, left with my head buzzing with things to try out in my own work. The NIME conference is not for everybody — but all of us who work with music and electronics will be hearing from the people who were there in years to come.

Controllers & Games

The market for new musical instruments is not an easy one to break into. Very few of the instruments shown at NIME will ever make it into commercial production. But there is a strong market for the ideas being presented, as one NIME speaker, Tina Blaine, a percussionist, vocalist, and inventor from Carnegie Mellon University, explained.

Blaine's presentation was called 'The Convergence of Alternate Controllers and Musical Interfaces in Interactive Entertainment', which translates to 'Everything you're doing, the game industry can use'. There are a number of categories of games that have interactive musical components, she explained, and they are growing all the time. The most common are beat-matching games, but there are also games that require one of a variety of specific actions by the player in response to musical cues. And there are other games that make music when players move their bodies or hands in free space, like Sony's Groove and Discovery's Motion Music Maker.

But Blaine's most salient point for NIME attendees was that none of the game manufacturers develop their own controllers: all of their technology is licensed from someone else. And who better to develop that technology than the musicians and scientists who are tinkering away in their labs trying to build the next generation of electronic musical instruments?