If your track is distorting on consumer playback devices, it might be that there are ‘true peaks’ in the file that the device can’t cope with — even if it sounds OK via your audio interface. Careful use of a true-peak meter and limiter can help you avoid this, but note that this isn’t the only possible cause of playback distortion.

A client recently sent me a mastered album for some additional EQ tweaks as he wasn’t completely happy with the balance when playing back via an iPhone on his car stereo. He also noticed distortion at certain points. For various reasons I had to work with the mastered 16-bit/44.1kHz file as my source, and I immediately noticed that this mastered version was full of inter-sample peaks, which I assumed was the cause of the distortion my client heard.

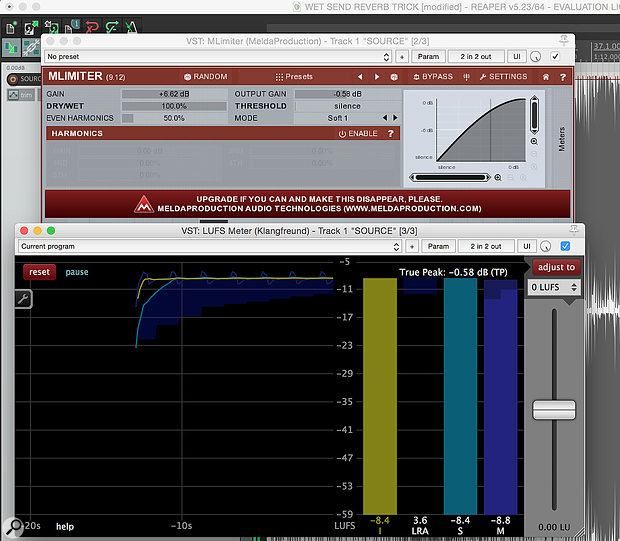

To give myself room to work, I reduced the level of the original track, which I believe eliminates the inter-sample peaks, and then made a few EQ tweaks (mostly a low cut at 35Hz) and some other minor adjustments. I then used a limiter with a -1dBFS threshold and checked that there were no inter-sample peaks in my remastered file.

However, my client tells me that when he played it back via the iPhone and car speakers he heard the distortion again, especially when the volume was turned up. I asked him to play various pro albums over the same system and he reported no distortion. The track sounds great over my own playback system, so do you have any ideas about what’s going on? This is a relatively dynamic solo acoustic guitar album, by the way.

SOS Forum post

Technical Editor Hugh Robjohns replies: It’s certainly the case that inter-sample peaks (ISPs) only occur during a conversion process when the audio waveform is being reconstructed — during D-A conversion, sample-rate conversion, lossy codec processing, and so forth. However, the digital data itself is not inherently corrupt, and reducing the level before conversion processing will avoid the problem.
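To see why ISPs only show up once the waveform is reconstructed, it helps to look at roughly how a true-peak meter estimates them: it interpolates extra points between the stored samples and reports the highest one. The Python below is a minimal sketch, assuming 4x oversampling with a Hann-windowed sinc interpolator. That is similar in spirit to the 4x oversampling used by BS.1770-style true-peak meters, but it is not their reference filter, and all the function names are hypothetical. The test signal is the classic worst case: a sine at a quarter of the sample rate, phased so that every sample lands at about 0.707 of the waveform’s real amplitude.

```python
import math


def sinc(x: float) -> float:
    """Normalised sinc: sin(pi x) / (pi x)."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def sample_peak_dbfs(samples):
    """Largest stored sample value in dBFS (what a basic peak meter shows)."""
    return 20 * math.log10(max(abs(s) for s in samples))


def true_peak_dbfs(samples, oversample=4, half_width=16):
    """Estimate the reconstructed ('true') peak by windowed-sinc oversampling.

    Samples outside the buffer are treated as silence.
    """
    n_samples = len(samples)
    peak = 0.0
    for i in range(n_samples):
        for k in range(oversample):
            t = i + k / oversample  # evaluation point between samples i and i+1
            acc = 0.0
            for n in range(i - half_width + 1, i + half_width + 1):
                if 0 <= n < n_samples:
                    d = t - n
                    window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))  # Hann taper
                    acc += samples[n] * sinc(d) * window
            peak = max(peak, abs(acc))
    return 20 * math.log10(peak)


def quarter_rate_tone(n=512, fade=64):
    """Sine at fs/4 with 45-degree phase, faded in/out, normalised to 0dBFS samples."""
    tone = [math.sin(math.pi / 4 + math.pi * i / 2) for i in range(n)]
    for i in range(fade):
        g = 0.5 * (1.0 - math.cos(math.pi * i / fade))  # raised-cosine fade
        tone[i] *= g
        tone[n - 1 - i] *= g
    peak = max(abs(s) for s in tone)
    return [s / peak for s in tone]


tone = quarter_rate_tone()
print(f"sample peak: {sample_peak_dbfs(tone):.2f} dBFS")
print(f"true peak:   {true_peak_dbfs(tone):.2f} dBTP")
```

On this tone the sample peak reads 0dBFS, yet the interpolated estimate comes out close to +3dB: the stored data is perfectly valid, but a converter or codec that reconstructs it has to produce a waveform about 3dB above full scale. The fade-in and fade-out simply stop the buffer edges from dominating the result.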

In your case, loading the 16-bit source file into a 24-bit (or higher) project and knocking the level back by 6dB (or so) ensures that you avoid creating any ISPs during your subsequent EQ processing or conversion, so there shouldn’t be any peak distortion. Moreover, you don’t lose any low-level detail because of the ‘foot-room’ in the 24-bit (or higher) project, and when you remaster as a 16-bit file the re-dithering will retain that original low-level audio information, albeit theoretically with a small rise in the noise floor — although I very much doubt anyone will notice!
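The ‘foot-room’ argument is simple arithmetic using the usual rule of thumb of roughly 6dB of dynamic range per bit. The Python below is an illustrative sketch (the names are mine), treating each format’s floor as 6.02dB × bits below full scale and ignoring dither and the finer points of noise-floor conventions.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02dB of range per bit


def nominal_range_db(bits: int) -> float:
    """Nominal dynamic range of an N-bit linear PCM word."""
    return bits * DB_PER_BIT


def db_to_gain(db: float) -> float:
    """Convert a level change in dB to a linear gain factor."""
    return 10 ** (db / 20)


trim_db = -6.0                                    # level knocked back before processing
source_floor = -nominal_range_db(16) + trim_db    # 16-bit source floor after the trim
project_floor = -nominal_range_db(24)             # floor of the 24-bit project
footroom = source_floor - project_floor           # space left below the trimmed source

print(f"linear gain for {trim_db}dB: {db_to_gain(trim_db):.3f}")
print(f"16-bit floor after trim: {source_floor:.1f}dBFS")
print(f"24-bit project floor:    {project_floor:.1f}dBFS")
print(f"foot-room remaining:     {footroom:.1f}dB")
```

Even after the 6dB trim, the 16-bit source’s own floor sits about 42dB above the 24-bit project’s floor, which is why the level reduction costs nothing while you work in the 24-bit domain.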

So where is this distortion coming from if you have removed all the inter-sample peaks? Well, there are any number of possible sources, I’m afraid, but the clues all point to an issue with your client’s in-car system rather than the remastered file (especially since you appear to have already given it a thorough check on your own system). Possible candidates include an impedance mismatch between the iPhone output and the car hi-fi’s input (see my reply to the next Q&A for more on that), a low battery in the iPhone, an overloaded car hi-fi, or a damaged or inadequate car hi-fi amp or speakers. My best guess, based on what you’ve said, is that the phone/car system is generally unable to cope with high-dynamic-range audio, and that the nature of the source material makes it particularly revealing of peak transient distortion artifacts.
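One way to put a number on how transient-heavy material is would be its crest factor: the peak level relative to the RMS level. The answer itself doesn’t use this measure; the Python below is my illustrative sketch using synthetic test signals (including a hypothetical decaying burst as a crude stand-in for a plucked note), not a measurement of the album.

```python
import math


def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB; higher values mean spikier, more transient material."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(peak / rms)


n = 64 * 10  # ten whole cycles of a 64-sample period
sine = [math.sin(2 * math.pi * i / 64) for i in range(n)]
square = [1.0 if i % 64 < 32 else -1.0 for i in range(n)]
# Hypothetical 'plucked' burst: the same sine with a fast exponential decay.
pluck = [math.exp(-i / 100) * math.sin(2 * math.pi * i / 64) for i in range(n)]

for name, sig in (("square", square), ("sine", sine), ("pluck", pluck)):
    print(f"{name:>6}: {crest_factor_db(sig):5.2f}dB")
```

At the same RMS level (a rough proxy for loudness), material with a higher crest factor pushes the playback chain’s peaks harder, so a phone output or car amp with little headroom will clip on the transients first.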

However, a couple of other possibilities are worth investigating at your end. First, I would check for high levels of subsonic signals in the track. If present, they will probably remain inaudible, but they may well cause headroom issues in the in-car replay system; to remove them, apply a third-order (18dB/oct) high-pass filter at around 30Hz.

Second, if your client is listening to an MP3 version of the file, it’s possible that inter-sample peaks are being created within the MP3 codec and/or that the codec is struggling with the dynamic and spectrally sparse material and introducing its own distortion as a result. This is actually a surprisingly common occurrence. To check, you could ask him to play a WAV file instead, and/or remake the MP3 version from a slightly quieter version of the source track (remaster it with your peak limiter set to -3dBFS; the extra headroom should cure the problem if it lies in the codec).
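The third-order high-pass filter suggested above for the subsonic check can be built as a cascade of a first-order section and a second-order (biquad) section. The answer only specifies the order, slope and corner frequency. The Butterworth alignment, the 44.1kHz sample rate and the function names below are my assumptions, so treat this as a sketch; any decent DAW EQ plug-in with an 18dB/oct high-pass will do the same job.

```python
import cmath
import math

FS = 44100.0  # assumed sample rate of the master


def butter3_highpass(fc, fs=FS):
    """(b, a) coefficient pairs for a 3rd-order Butterworth high-pass at fc.

    First-order section plus a biquad with Q = 1, both designed with the
    bilinear transform and pre-warped so the corner lands exactly on fc.
    """
    k = math.tan(math.pi * fc / fs)  # pre-warped corner frequency
    first = ([1 / (1 + k), -1 / (1 + k)], [1.0, (k - 1) / (1 + k)])

    w0 = 2 * math.pi * fc / fs
    cw = math.cos(w0)
    alpha = math.sin(w0) / (2 * 1.0)  # Q = 1 for the Butterworth pole pair
    a0 = 1 + alpha
    second = (
        [(1 + cw) / 2 / a0, -(1 + cw) / a0, (1 + cw) / 2 / a0],
        [1.0, -2 * cw / a0, (1 - alpha) / a0],
    )
    return [first, second]


def response_db(f, fc=30.0, fs=FS):
    """Magnitude response of the cascade at frequency f (Hz), in dB."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)  # z^-1 on the unit circle
    h = 1 + 0j
    for b, a in butter3_highpass(fc, fs):
        num = sum(c * z_inv ** i for i, c in enumerate(b))
        den = sum(c * z_inv ** i for i, c in enumerate(a))
        h *= num / den
    return 20 * math.log10(abs(h))


def highpass(samples, fc=30.0, fs=FS):
    """Run samples through each section in turn (direct form I)."""
    out = list(samples)
    for b, a in butter3_highpass(fc, fs):
        x_hist = [0.0] * len(b)        # x[n], x[n-1], ...
        y_prev = [0.0] * (len(a) - 1)  # y[n-1], y[n-2], ...
        filtered = []
        for x in out:
            x_hist = [x] + x_hist[:-1]
            y = sum(c * v for c, v in zip(b, x_hist))
            y -= sum(c * v for c, v in zip(a[1:], y_prev))
            y_prev = [y] + y_prev[:-1]
            filtered.append(y)
        out = filtered
    return out


for f in (15, 30, 60, 1000):
    print(f"{f:>5}Hz: {response_db(f):6.2f}dB")
```

A third-order Butterworth response is 3dB down at the corner and about 18dB down an octave below it, so content at 15Hz is heavily reduced while the lowest note of a guitar in standard tuning (about 82Hz) is essentially untouched.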