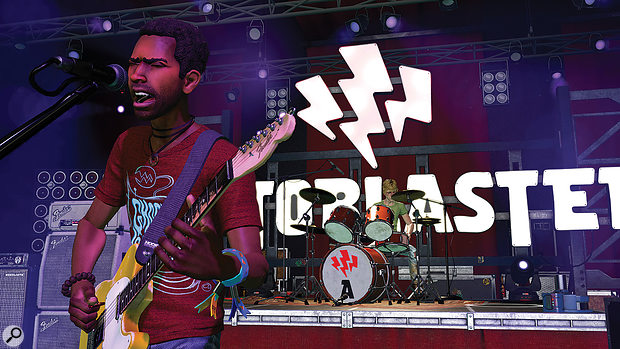

When it comes to making virtual reality convincing, sound has a vital role to play — especially when you’re creating a virtual rock gig!

Strapping on your headset and wireless plastic guitar controller, you step up to the virtual microphone. The virtual audience scream their approval as you turn to your virtual drummer and tear into the first number. And although it’s obvious that the virtual people jumping up and down in the front row aren’t real, that somehow doesn’t seem to matter: every subconscious cue is telling you you’re on stage in front of hundreds of fans.

This uncanny experience can be enjoyed by anyone with a powerful PC and an Oculus Rift headset, thanks to the latest in Harmonix’s Rock Band series of video games. The Rock Band game format is arguably the perfect vehicle for virtual reality: there’s no need for the player to walk around the virtual world, with all the pitfalls that can bring, yet it benefits hugely from the sense of ‘being there’. And central to creating that sense of realism is the work of Harmonix’s audio team, led by Steve Pardo.

“There is a whole host of performance challenges with developing for VR,” explains Pardo. “On Rock Band VR, our challenge was to get the guitar instrument latency as low as possible, which was challenging due to the nature of Bluetooth, the audio engine, and HDMI all working against it. Thankfully, our engineering team was able to reduce this latency to around 70ms, which for us was a huge triumph, and feels quite comfortable for a plastic guitar instrument.

“Hitting 90 frames per second, the standard frame rate for PC VR, ended up being the biggest performance challenge. We ended up with a unique audio mix treatment per computer performance setting in order to help achieve this on lower-spec’ed computers. For these, we had to be fairly conservative with the use of reverbs/HRTF/reflections, as well as maintaining a lower voice count (how many instances of each sound are playing at a given time) across the board. But thankfully, all computers that run VR are inherently quite powerful, so we on the audio team never felt technically limited and were able to achieve our creative goals.”
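The voice-count budget Pardo mentions can be pictured as a per-sound cap that shrinks on lower performance settings. The sketch below is illustrative only: the `VoiceLimiter` class, the tier names and the limits are hypothetical, and stealing the oldest voice is one common policy, not necessarily the one Harmonix used.

```python
from __future__ import annotations

from collections import deque

# Hypothetical caps on simultaneous instances of any one sound, per tier.
TIER_LIMITS = {"low": 2, "medium": 4, "high": 8}


class VoiceLimiter:
    """Caps how many instances of each sound can play at once.

    When a sound is already at its cap, the oldest instance is stolen
    (stopped) to make room for the new one.
    """

    def __init__(self, tier: str) -> None:
        self.limit = TIER_LIMITS[tier]
        self.active: dict[str, deque[int]] = {}
        self._next_id = 0

    def play(self, sound: str) -> tuple[int, int | None]:
        """Start a new instance; return (new_voice_id, stolen_voice_id or None)."""
        voices = self.active.setdefault(sound, deque())
        stolen = voices.popleft() if len(voices) >= self.limit else None
        voice_id = self._next_id
        self._next_id += 1
        voices.append(voice_id)
        return voice_id, stolen

    def count(self, sound: str) -> int:
        """Number of instances of `sound` currently playing."""
        return len(self.active.get(sound, ()))
```

Stealing the oldest instance keeps new hits, such as a fresh cymbal crash, audible at the cost of cutting off the tail of an earlier one.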

The ‘VR room’ at Harmonix’s development site.

Rift Riffing

One thing that makes developing for the Oculus Rift easier is uniformity: whereas 2D gamers could be using any combination of screen resolution and audio playback system, all Oculus headsets use the same high-definition stereoscopic display and open-backed headphones. In fact, although third-party upgrades are available for the latter, Pardo says, “I found that mixing using the built-in Oculus Rift open-back headphones — yes, the ones that come in the box — was the best way to mix our game, and allowed me to focus on delivering the best auditory experience for 99 percent of the audience. It’s exactly what just about every single player is going to be using, so, why not? Knowing exactly what listening device most everyone is going to be using is not a luxury we audio designers have in most mediums.”

Creating the convincing and all-encompassing sound world of Rock Band VR required Steve and his team to knit together several different types of audio. First of all, of course, there are the backing tracks themselves. As with previous Rock Band games, these are stems taken from original recordings of hit rock songs. “Thankfully, we have been able to use our tried-and-true methods for receiving songs and their respective stems for existing Rock Band games in Rock Band VR. This process involves direct communication with the artists and their representatives in order to facilitate getting those original stems for our use. We don’t ask for anything out of the ordinary from the artists: nothing unique for VR treatment.”

Second, there’s the environment that surrounds the player, with its reflective surfaces and lively crowd. Third, there are the guitar parts that have to be generated in real time in response to the player’s input. Finally, there are also miscellaneous sounds and music that accompany things like menu browsing.

Steve Pardo led the audio team working on Rock Band VR. Virtual saxophone has yet to make an appearance!

“Any sound event can be integrated in the game engine in two primary ways: 2D sounds and 3D sounds. A 2D sound plays back into your headphones or speaker without any pan or attenuation rules applied. A stereo sound will play back as you would expect: left channel through left speaker, right channel through right speaker. Menu sounds and music, for example, are traditionally implemented as 2D sounds.

“A 3D sound allows for the sound to be placed and moved around the player in a 3D space in order to craft a more immersive sensory experience. The use of an audio emitter — a snippet of code placed on a game object that a single or multiple sound events are assigned to — allows for real-time panning, attenuation, EQ, and so on to be applied to the sounds through communication with the game engine.

“Each stem of the song is sent into a unique emitter in our game engine, allowing for a dynamic 3D mix. It’s exactly as you would expect in the venue: drum overheads are placed roughly on the crash and ride cymbal, bass guitar through the bass amp, vocals through the house mix, and so on. This is the first time we’ve attempted panning/attenuating the stems in real time depending on the player’s position, so you can move around the stage and hear different parts of the song up close. For me, hearing this in-game for the first time was a truly unforgettable experience.”
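Pardo’s distinction between 2D and 3D sounds, and his per-stem emitters, can be pictured with a few lines of geometry. In this sketch, which is an assumption throughout, each stem sits on a hypothetical emitter position and gets inverse-distance attenuation plus a constant-power pan relative to the player; the coordinates, the attenuation curve and the pan law are textbook choices, not the actual processing in Harmonix’s engine or the Oculus Audio Spatializer. A 2D sound, by contrast, would skip all of this and send its channels straight to the headphones.

```python
import math

# Hypothetical stage layout in metres, viewed from above. The player faces
# +y (toward the audience) and +x is to their right.
STEM_EMITTERS = {
    "drum_overheads": (0.0, -3.0),  # over the kit's crash and ride
    "bass": (-3.0, -2.0),           # on the bass amp
    "guitar": (3.0, -2.0),          # on the guitar amp
    "vocals": (0.0, 4.0),           # house PA out front
}


def emitter_gains(source: tuple[float, float], listener: tuple[float, float],
                  min_distance: float = 1.0) -> tuple[float, float]:
    """(left, right) gains for a mono 3D emitter.

    Inverse-distance attenuation (clamped so gain never exceeds 1.0) times a
    constant-power pan, so left**2 + right**2 == gain**2 at any position.
    Sources straight behind pan the same as straight ahead; a real
    spatialiser would add HRTF filtering to tell them apart.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    distance = math.hypot(dx, dy)
    gain = min_distance / max(distance, min_distance)
    pan = dx / distance if distance > 0 else 0.0  # -1 hard left, +1 hard right
    theta = (pan + 1.0) * math.pi / 4.0
    return gain * math.cos(theta), gain * math.sin(theta)


def stem_mix(listener: tuple[float, float]) -> dict[str, tuple[float, float]]:
    """Re-evaluate every stem's gains for the player's current position."""
    return {stem: emitter_gains(pos, listener) for stem, pos in STEM_EMITTERS.items()}


# Moving from centre stage towards the bass amp brings the bass up and
# pulls the guitar down and further to the right.
for spot in [(0.0, 0.0), (-2.5, -1.5)]:
    mix = stem_mix(spot)
    print(spot, {s: (round(left, 2), round(right, 2)) for s, (left, right) in mix.items()})
```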

Engine Ears

The terms ‘game engine’ and ‘audio engine’ might be unfamiliar to those outside the world of games development. They refer to development tools that allow sound designers and others to integrate their work into the game. As Steve Pardo explains, “A game engine is the interface between the developer and the fundamental code base that runs the game. Almost everyone on a development team will interact with the game engine on a daily basis, hooking up art assets, animations, scripts, and, in our case, sounds.

“The audio engine, or audio middleware, is a robust tool that composers and sound designers use to implement their content in a more focussed, robust and familiar environment. In these tools, a sound designer builds soundbanks for use in the game engine that house all the game’s audio content into events that can then be modulated by game parameters. Through implementation within the game engine, a sound designer can craft a truly interactive and dynamic sound experience. And while a game can certainly have a great audio treatment without the use of middleware, driving interactive audio via an audio engine is certainly the preferred method in the game audio industry.

“We at Harmonix have a host of proprietary tools that are more focussed for dynamic, generative music with events based on music-time (bars and beats). We run most of our games using Forge, our in-house game engine, which has an incredible amount of audio and music technology built right in. Also, we have an incredibly robust sampling engine, Fusion, that runs outside or in parallel with the audio engine, allowing for super low-latency events to occur on a sample-accurate timeline.”
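Scheduling events in music-time ultimately means turning bars and beats into a position on a sample-accurate timeline. A minimal sketch, assuming a constant tempo and time signature and a 48kHz output rate; the function name is hypothetical and says nothing about how Forge or Fusion handle tempo internally.

```python
def music_time_to_samples(bar: int, beat: float, bpm: float,
                          beats_per_bar: int = 4,
                          sample_rate: int = 48_000) -> int:
    """Convert a 1-based bar/beat position to a sample offset from the song start.

    Assumes one tempo and one time signature for the whole song.
    """
    beats_elapsed = (bar - 1) * beats_per_bar + (beat - 1)
    seconds = beats_elapsed * 60.0 / bpm
    return round(seconds * sample_rate)
```

At 120bpm in 4/4, bar 2 beat 1 lands exactly two seconds (96,000 samples) in. A real song with tempo changes would need a tempo map, summing each constant-tempo segment in turn.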

Inner Space

Oculus themselves also make available various development tools to aid the process, and Steve and his team made full use of these: “Oculus allow developers to utilise their Oculus Audio Spatializer in order to deliver 3D spatialised audio. This tool gives audio designers a number of fantastic tools that make VR audio truly shine, namely a built-in HRTF (head-related transfer function) which allows for binaural audio. We used this plug-in on most every sound source we could in Rock Band VR.

Developer Dan Chace models the Oculus Rift headset while at work on Rock Band VR at Harmonix HQ.

“The Oculus Audio Spatializer plug-in allows for traditional sound content to be rendered in 3D space in real time. In practical use, the sound designer would create their sounds as they would for a traditional game, and then use the game engine and, optionally, an audio engine to place and/or move the sounds in 3D space. The Oculus Audio Spatializer is placed on the sound event like you would a plug-in on a track.

“This allows for real-time panning, 3D spatialisation, and the use of the HRTF as the player moves around a given source. So, for example, if a car is moving from your left-hand side to your right, you could have a binaurally processed treatment of an engine loop moving in real time from your left to your right, recreating something that is truly lifelike. And with VR-specific tools like the Oculus Audio Spatializer, we can also drive near and late reflections for binaural rendering and room simulation.”
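A full HRTF is measured filter data and is beyond a short example, but one of the cues it encodes, the interaural time difference (ITD) between the ears, has a classic spherical-head approximation due to Woodworth: ITD = (a/c)(θ + sin θ) for a distant source at azimuth θ. This sketch traces Pardo’s passing-car example with that formula alone; the head radius and speed of sound are assumed typical values, and this is not how the Oculus Audio Spatializer computes its output.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees C


def woodworth_itd(azimuth_rad: float) -> float:
    """Interaural time difference in seconds for a distant source.

    Azimuth is 0 straight ahead and positive to the right; the formula holds
    for |azimuth| <= pi/2, so larger angles are clamped. Positive ITD means
    the sound reaches the right ear first.
    """
    theta = max(-math.pi / 2, min(math.pi / 2, azimuth_rad))
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


# A car passing from hard left to hard right: the ITD sweeps smoothly
# from about -0.66 ms to +0.66 ms.
for degrees in (-90, -45, 0, 45, 90):
    itd_ms = woodworth_itd(math.radians(degrees)) * 1000
    print(f"{degrees:+4d} deg -> {itd_ms:+.3f} ms")
```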

In The Club

So important was room simulation to the experience of being on stage in a rock venue that the Harmonix team ended up implementing their own tools. “Venue treatment was one of the most technical and time-consuming challenges; and while we could have relied on the Oculus Audio Spatializer, early on we came up with our own solution for late-reflection reverb for venues in Rock Band VR. In game, the player does not move off of the virtual stage, and is always stationed at one of the game’s pre-determined teleport spots. We were able to build a dynamic reverb emitter solution around the player that mimics real-world reflections and delay that is very lifelike for every venue.

“We have seven venues of various shapes and sizes, the smallest being a garage-rock club and the largest being a theatre. I’d say that each venue is at least partially inspired by real-life venues, including some of our favourite spots, past and present. But getting each venue to sound just right was super-challenging. If the mix sounded too good and too clear, it didn’t feel natural. So we ended up leaving a lot of overtones, low-end bass, and noise you’d hear in a truer ‘live’ mix. That actually gave the game significantly more presence, funnily enough.

“Every venue has unique reverb settings, emitter placement — ceiling, behind the drums, left wall, right wall, back of the room, and so on — and dynamic pre-delays in order to make all this happen. We ended up automating the volumes of every stem of every song for every individual venue so that no matter where you are and what you are playing it would sound realistic.

“For crowd, there are three close-range crowd emitters towards the front of the stage — one to your left, one to your right, and one right in front of you — and two more far-range crowd emitters behind them. Every crowd source is unique and mono, in order to steer clear of phasing issues. The crowd noise is strategically sent through the reverb emitters around the venue for a fuller, 360-degree room sound. Balancing music with crowd was a struggle, and due to the nature of VR, we found that traditional side-chain compression methods felt totally unnatural, which meant being more sensitive to volume/dynamic attenuation. It was a dance we were doing throughout production, fine-tuning the mix every day until ship.”
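The ‘dynamic pre-delays’ can be illustrated as distances turned into times. A rough sketch, assuming hypothetical emitter coordinates for one venue and a deliberately simple model in which sound leaves the player’s position, reaches a surface and returns, so each pre-delay is twice the distance divided by the speed of sound. Harmonix’s actual per-venue settings are not described at this level of detail.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees C

# Hypothetical reverb emitter positions for one venue, in metres (x, y, z):
# +x stage right, +y toward the back of the room, z is height.
REVERB_EMITTERS = {
    "ceiling": (0.0, 4.0, 7.0),
    "behind_drums": (0.0, -5.0, 1.5),
    "left_wall": (-8.0, 6.0, 2.0),
    "right_wall": (8.0, 6.0, 2.0),
    "back_of_room": (0.0, 25.0, 3.0),
}


def pre_delays_ms(player: tuple[float, float, float]) -> dict[str, float]:
    """Pre-delay per reverb emitter, recomputed whenever the player teleports.

    Simplification: the sound starts near the player, travels out to the
    emitter's surface and back, so the path is twice the straight-line
    distance.
    """
    return {
        name: 2.0 * math.dist(player, pos) / SPEED_OF_SOUND_M_S * 1000.0
        for name, pos in REVERB_EMITTERS.items()
    }
```

With these made-up numbers, the back wall of a theatre-sized room returns well over 100ms after the wall behind the drums, which is the kind of spread that separates a big venue from a garage club.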

To recreate the experience of playing on stage, stems from original band recordings are placed on ‘emitters’ in the virtual environment. The drums stem, for instance, appears to come from the drum kit, while guitar and bass stems are positioned on their respective amplifiers.

Down The Line

At present, VR platforms such as the Oculus Rift are still the province of hardcore gamers, and it will be a while yet before they are found in every household in the land. However, games like Rock Band VR make a powerful case for the technology, and Harmonix are clearly excited by the potential that virtual reality offers — and not only for gaming. “I think we are already seeing some amazing and innovative use cases with VR — as well as AR, augmented reality,” concludes Steve Pardo. “Besides Harmonix games like Rock Band VR and SingSpace, musicians are breaking new ground with VR music videos. DJs are performing live sets using apps like The Wave. Musicians are composing and performing in apps like SoundStage. There is absolutely momentum happening in this space, and it’s certainly not just within the context of games.

“The technology will continue to mature, and I think we’ll be seeing more and more unique use cases for the medium, experiences we can’t even imagine. The platforms will continue to evolve to be smaller, more personal, more comfortable, and more tuned to everyday use. It’s hard to say where we’ll be in 10 years, but I think we will see more products that refine this experience and continue to work their way into the mainstream.”

Virtual Reality, Real Guitars

At the core of the Rock Band playing experience is the game’s dedicated guitar controller. The guitar part that you ‘play’ is intelligently generated in response to user input, automatically following the song’s chord sequence but varying the playing style according to the shape you make with your left hand on the ‘fret buttons’ and the way in which you attack the right-hand ‘strum bar’.

“The generative guitar for Rock Band VR was undoubtedly the most significant assignment on the audio team,” says Steve Pardo. “It all came from a prototype of mine early on in development that influenced the outcome of the entire game design. The back end relies on a code base migrated over from Rock Band 4, the Freestyle Guitar system, and we pretty much took it apart and re-engineered much of it to allow for a more fluid, dynamic system. The system is built on the backs of two multi-channel samplers and driven by a ton of MIDI data. The first sampler contains various articulations of single notes including sustain, hammer-ons, palm mutes, and so on. The second sampler houses all the various chord shapes — power chords, barre chords, muted power chords, higher fret voicings and so on — and harmonic variations such as major, minor, diminished, major seventh and dominant seventh. With that much to work with, we found we had the content needed for most any playing style within our library of songs and genres in the game.

Executive Producer DeVron Warner shows off his chops on the Rock Band VR guitar controller.

“In game, the system is actively listening to the player’s input in order to generate the part. We tried to make this as intuitive as possible. On the guitar controller, if you hold the first and third button, you are essentially making a shape that feels like a power chord. Seeing that logic through, if you hold the first and second button, that could be a muted power chord. Three buttons in a row would be a barre chord. First, second, and fourth button would be arpeggio, and so on. There are seven possible chord shapes, as well as single notes, on both the low and high sets of fret buttons on the guitar.

“Couple that with the strum speed on the strum bar, and the game has enough information to generate a guitar part. So, if you play sustain, we’ll hold out a chord. Play super-fast and you hear tremolo. And for more common strum speeds, say, for eighth-note and 16th-note strumming, we’ll actually ‘unmute’ a full-song length MIDI track that generates a guitar part that totally fits with every moment of the song you are in. It’s a super-smart technology that my team is definitely proud of, and one I hope to see in future products.”
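The two inputs Pardo describes, fret shape and strum speed, can be sketched as two small classifiers. Everything here is an assumption for illustration: the function names, the idea that shapes are relative to the lowest held button, the beat thresholds, and the use of separate eighth-note and 16th-note tracks (the source mentions a full-song-length MIDI track without saying how many there are). Only the shapes the article names are mapped.

```python
from __future__ import annotations

# Shapes named in the article, keyed by button offsets from the lowest held
# button. The source mentions seven shapes but names only these, so the
# rest are left out rather than guessed.
SHAPES_BY_OFFSETS = {
    (0,): "single_note",
    (0, 1): "muted_power_chord",  # first + second button
    (0, 2): "power_chord",        # first + third button
    (0, 1, 2): "barre_chord",     # three buttons in a row
    (0, 1, 3): "arpeggio",        # first, second and fourth button
}


def classify_shape(held: set[int]) -> str | None:
    """Name the shape formed by the held fret buttons, or None if unknown.

    Assumption: shapes are relative, so the same pattern higher up the
    neck keeps its name.
    """
    if not held:
        return None
    lowest = min(held)
    return SHAPES_BY_OFFSETS.get(tuple(sorted(b - lowest for b in held)))


def classify_strum(interval_s: float | None, bpm: float) -> str:
    """Pick a playing style from the time since the previous strum.

    interval_s is None for a single strum that is held. The beat thresholds
    are illustrative guesses, not Harmonix's tuning.
    """
    if interval_s is None:
        return "sustain"          # hold the chord out
    beats = interval_s * bpm / 60.0
    if beats < 0.18:
        return "tremolo"          # faster than 16th notes
    if beats < 0.375:
        return "sixteenth_track"  # unmute a pre-authored 16th-note part
    if beats < 0.75:
        return "eighth_track"     # unmute a pre-authored 8th-note part
    return "sustain"


def generator_input(held: set[int], interval_s: float | None, bpm: float,
                    high_set: bool = False) -> tuple[str, str | None, str]:
    """Everything this sketch needs to choose samples: register, shape, style."""
    register = "high" if high_set else "low"
    return register, classify_shape(held), classify_strum(interval_s, bpm)
```

At 120bpm a strum every 0.125 seconds is a quarter of a beat, so holding the first and third buttons on the high set yields `("high", "power_chord", "sixteenth_track")`.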

With such a wide range of articulations and phrases required, the decision was taken early on to have only a single bank of samples and modify the sounds in real time using effects and amp simulation, rather than separately sampling different guitar rigs for each song. “Every sample we use in the dynamic guitar system in Rock Band VR comes from my 1975 Fender Telecaster, middle setting on the pickup selector. One of our sound designers on the Rock Band VR team, Chris Wilson, spent months building a library of single notes and chords for this sampler, recorded line-in and super-clean. Building even one more library of these samples from another guitar would have meant significantly more development time and a larger memory footprint on the shipping game.

“On top of spending most of our time tailoring every song for every venue, we added some nifty things like guitar pedals that can be activated and stacked, just like real life. I had a lot of fun crafting these, and you can get into creating some pretty psychedelic guitar solos! You can also sing or speak into the microphone, and you’ll hear your voice reverberate through the venue. If you’re feeling bold you can also go over to the drummer and crash the cymbals with your guitar neck! All this stuff definitely adds to the experience of being on stage and feeling like you are really there.”
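Stacking pedals amounts to function composition: each effect takes a buffer of samples and returns a processed one, in chain order. The sketch uses two textbook effects, tanh soft clipping for overdrive and a feedback echo, on plain Python lists; the names and parameters are hypothetical and the DSP is far simpler than anything that would ship in a game.

```python
import math
from typing import Callable, List, Sequence

Pedal = Callable[[List[float]], List[float]]


def overdrive(drive: float) -> Pedal:
    """Soft clipping via tanh; higher drive means more distortion."""
    def process(samples: List[float]) -> List[float]:
        return [math.tanh(drive * s) for s in samples]
    return process


def echo(delay_samples: int, feedback: float) -> Pedal:
    """Feedback delay: each output sample is fed back in after delay_samples."""
    def process(samples: List[float]) -> List[float]:
        out = list(samples)
        for i in range(delay_samples, len(out)):
            out[i] += feedback * out[i - delay_samples]
        return out
    return process


def pedalboard(pedals: Sequence[Pedal]) -> Pedal:
    """Chain pedals in order, like patch cables between stompboxes."""
    def process(samples: List[float]) -> List[float]:
        for pedal in pedals:
            samples = pedal(samples)
        return samples
    return process
```

Swapping the order changes the sound, as on a real pedalboard: overdrive into echo repeats an already-distorted signal, while echo into overdrive distorts the repeats along with the original.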