Developing a new plug‑in is never straightforward. We go behind the scenes to follow the process from idea to finished product.

It’s September 2020, and the stage is set for a major product launch. Press releases are being prepared, manuals written, tutorial videos edited. To busy engineers working in audio post‑production, NUGEN Audio’s announcement might seem like manna from heaven. For NUGEN themselves, however, Paragon is the culmination of nearly three years’ hard work.

The story began in February 2018. Like most plug‑in companies, NUGEN Audio receive many suggestions for new products. Some come from existing users who’ve thought of ways their lives could be easier, others from people who’ve just had a great idea that they’re convinced will change the world. Many of the latter are wildly impractical, but when a researcher from a leading university got in touch to discuss a new technology he’d developed, NUGEN were naturally intrigued. The researcher was York University scientist Jez Wells, and the novel technology was the application of additive resynthesis to the impulse responses used to generate artificial reverberation.

Lab Tests

Jez Wells is a researcher at York University.

When Jez Wells first approached NUGEN Audio, he’d solved the mind‑boggling mathematical problems involved in resynthesizing impulse responses. But the fruits of that research bore little resemblance to a functioning VST plug‑in. Wells had done his work in Matlab, a coding environment designed for solving academic maths problems rather than real‑time DSP. So the first step was for NUGEN’s engineers to inspect the Matlab code and decide whether the same algorithms could be coded efficiently enough in C++ to form the basis of a useful, practical plug‑in.

There were two elements to Wells’ code: the ‘modeller’, which analyses and models an impulse response, and the ‘interactor’, which allows the user to change the properties of the modelled IR and apply them to recorded audio. It quickly became apparent that any viable plug‑in would need to be based only on the interactor. The modelling process requires expert handholding, and is so resource‑intensive that even the fastest implementation can take more than a day to process a complex IR. So, rather than attempt to create a plug‑in that could resynthesize any IR offered to it, NUGEN decided that the modelling would take place in‑house, with the resulting models forming the basis of the factory preset library.

In the early part of the development process, the modeller was refined within Matlab rather than being ported to C++, not least because Jez Wells was still improving his algorithms. By May 2018, however, NUGEN were able to show Wells a basic version of the interactor plug‑in operating in real time. Four months’ work had convinced them that Wells’ technology had the potential to become a viable commercial product, but there were still many decisions left to make and problems to solve.

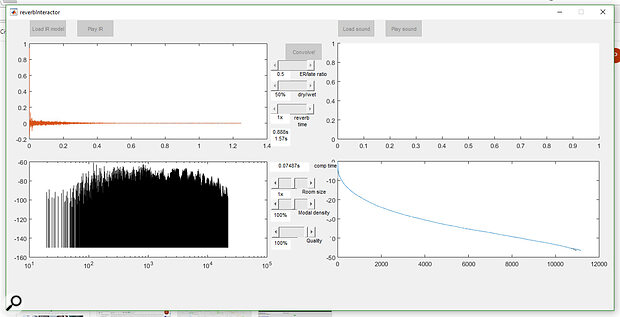

Not quite a plug‑in yet: Jez Wells’ original Interactor.

Speed Of Sound

The model itself is not an impulse response, but a blueprint for creating IRs. What the interactor plug‑in does is to generate an impulse response from the model according to the parameters chosen by the user. Each time a control is adjusted, a new impulse response must be produced. For example, if the user decides the decay of the reverb should be longer, a fresh IR is calculated, with slower decay times for each individual sine wave.
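As a rough illustration of what generating an IR from a model might involve, the sketch below treats a model as a list of decaying sine‑wave partials and renders a fresh IR whenever a decay control changes. The `Partial` type, the `render_ir` function and the `decay_scale` parameter are hypothetical names chosen for this example, and the real Paragon model is not described in enough detail to say how closely this resembles it.

```python
import math
from dataclasses import dataclass

@dataclass
class Partial:
    freq_hz: float      # frequency of this sine-wave component
    amplitude: float    # level at time zero
    decay_s: float      # time taken for the component to fall by 60 dB

def render_ir(model, sample_rate=48000, length_s=0.5, decay_scale=1.0):
    """Render an impulse response by summing exponentially decaying sines."""
    n = int(sample_rate * length_s)
    ir = [0.0] * n
    for p in model:
        rt60 = p.decay_s * decay_scale
        # The envelope reaches -60 dB (a factor of 0.001) after rt60 seconds.
        k = math.log(1000.0) / rt60
        w = 2.0 * math.pi * p.freq_hz / sample_rate
        for i in range(n):
            t = i / sample_rate
            ir[i] += p.amplitude * math.exp(-k * t) * math.sin(w * i)
    return ir

# A toy two-partial model (assumed values), rendered twice with different decay.
model = [Partial(220.0, 1.0, 0.4), Partial(1500.0, 0.5, 0.2)]
short_ir = render_ir(model, sample_rate=8000, length_s=0.25)
long_ir = render_ir(model, sample_rate=8000, length_s=0.25, decay_scale=2.0)
```

Doubling `decay_scale` stretches every partial’s envelope, so the whole IR has to be recomputed from scratch, which is why the cost of this step matters so much.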

Before this could form the basis of a usable plug‑in reverb, a key technical problem had to be solved: the recalculation was too slow. The supposed advantages of resynthesis would be completely lost if users had to wait 30 seconds to hear the result of each parameter change. Improving recalculation speed was the focus of intensive development work throughout the second half of 2018 and early 2019. Many ideas were tried and found wanting, but most of the successful ones worked on a ‘divide and conquer’ principle. Rather than have the entire model recalculated in one go, it was found that efficiency gains could be made by using separate models for early reflections and tail, and for different frequency bands.
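One way to picture the ‘divide and conquer’ idea is a cache of separately rendered sections, where adjusting a control re‑renders only the section it affects. The following is a sketch under that assumption and not NUGEN’s implementation; `SectionedReverb`, the section names and the toy `toy_render` callback are all invented for illustration.

```python
class SectionedReverb:
    """Keeps one rendered IR per section and re-renders only what changed."""

    def __init__(self, render, sections, length):
        self.render = render            # hypothetical: (section, params) -> list of floats
        self.length = length
        self.params = {name: {} for name in sections}
        self.cache = {}                 # section name -> rendered samples
        self.render_count = 0           # counts the expensive calls, for illustration

    def set_param(self, section, key, value):
        self.params[section][key] = value
        self.cache.pop(section, None)   # invalidate only the affected section

    def impulse_response(self):
        total = [0.0] * self.length
        for name, params in self.params.items():
            if name not in self.cache:
                self.cache[name] = self.render(name, params)
                self.render_count += 1
            for i, s in enumerate(self.cache[name]):
                total[i] += s
        return total


def toy_render(section, params):
    # Stand-in for the costly resynthesis step: a constant-valued "IR".
    return [params.get("gain", 1.0)] * 4

reverb = SectionedReverb(toy_render, ["early", "tail_low", "tail_high"], length=4)
first = reverb.impulse_response()        # renders all three sections
reverb.set_param("tail_high", "gain", 0.5)
second = reverb.impulse_response()       # re-renders only "tail_high"
```

In this toy version, the second call performs one expensive render instead of three, because the untouched sections are served from the cache.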

Alongside the technical challenge of improving recalculation speed, NUGEN Audio had to make two other key decisions, which were somewhat related. To what sort of user should this plug‑in be marketed? And what sort of graphical interface would present its novel technology in a way that realised its potential whilst being familiar to users of other reverbs?

In The Round

Many of NUGEN Audio’s existing tools are targeted primarily at post‑production users, so it was natural to optimise the new reverb for the same market. Knowing this world as they did, NUGEN were aware of a general lack of high‑quality surround reverbs that could be applied in immersive audio mixing, especially Dolby Atmos. There was no reason in principle why the resynthesis process should be restricted to stereo, so it was decided that surround compatibility should be a key feature from the off.

This, however, meant that NUGEN would need a library of multichannel impulse response recordings to serve as the basis for resynthesized models. Rather than importing these IRs in channel‑specific formats such as 5.1 or 7.1, it seemed logical to use the first‑order Ambisonic B‑format, which is channel‑agnostic and can be decoded to suit any destination listening format. There was no readily available source of Ambisonic impulse response recordings, so NUGEN had to commission their own library. This, in turn, meant writing a detailed brief as to how the impulses should be captured, and even so, early efforts threw up a number of unforeseen technical issues. To improve the efficiency of their modelling process, NUGEN moved each channel’s processing into its own thread, which then required rewriting sections of code to share resources safely between threads executing simultaneously and avoid collisions.
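To show why B‑format can be called channel‑agnostic, here is a minimal sketch that encodes a mono sample into first‑order B‑format and decodes it with virtual cardioid microphones pointed at arbitrary loudspeaker directions. It assumes the traditional convention in which the W channel is attenuated by 1/√2; the function names and the square speaker layout are illustrative, and practical decoders are considerably more sophisticated.

```python
import math

def encode_b_format(sample, azimuth_deg, elevation_deg=0.0):
    """Encode a mono sample arriving from the given direction as (W, X, Y, Z)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return w, x, y, z

def decode_virtual_mic(wxyz, azimuth_deg, elevation_deg=0.0, pattern=0.5):
    """Point a virtual microphone at a direction; pattern=0.5 gives a cardioid."""
    w, x, y, z = wxyz
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    directional = (x * math.cos(az) * math.cos(el)
                   + y * math.sin(az) * math.cos(el)
                   + z * math.sin(el))
    return pattern * math.sqrt(2.0) * w + (1.0 - pattern) * directional

# The same four-channel signal can feed any layout; here, a square of four speakers.
speakers_deg = [45.0, 135.0, -135.0, -45.0]
b_format = encode_b_format(1.0, azimuth_deg=45.0)
feeds = [decode_virtual_mic(b_format, az) for az in speakers_deg]
```

Changing `speakers_deg` to a different layout requires no change to the stored B‑format signal, which is the property that makes the format attractive for a library of impulse responses.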

This mock‑up user interface from early 2020 introduces many design elements that would be retained and refined, but is still a long way from being the finished article.

Only once the underlying technology was working relatively well did NUGEN turn their attention to developing a graphical user interface. In their words: “The user interface usually comes about after the main algorithm has been implemented. The user experience comes first, deciding what a plug‑in can do to help the end user. The algorithm design is usually next, to give us an idea of what is possible. Then the user interface marries the two together. We usually find that the algorithm can do way more than the interface allows, but not everything that an algorithm can produce will be good. There is a balance to be struck between providing functionality and making something easy to use.”

In this case, most of the functionality could be implemented using parameters familiar from conventional reverb plug‑ins, such as decay time, pre‑delay and room size. Parameters fell reasonably naturally into three groups, so rather than cram everything into one huge window, these groups were assigned to different pages. A major concern was how the output of the reverb could most helpfully be visualised. Given that this might span 10 or more channels, conventional level metering could easily become messy and confusing. Several options were tried, and in the end, an animated circular display proved the most effective way of portraying reverb decay in an intelligible fashion.

Coming Together

The Ambisonic impulse response recordings commissioned from third parties began to arrive in February 2020, and work on the user interface began in March. With the main elements of the GUI in place by the end of May, a provisional release date of October was set. It was time to start planning a marketing campaign, and beta versions of the plug‑in were shared with trusted testers in July. Even then, however, development work was still being done, notably on a concept that was eventually called ‘crosstalk’, which determined how audio feeding one input channel in the plug‑in would be propagated through adjacent channels.

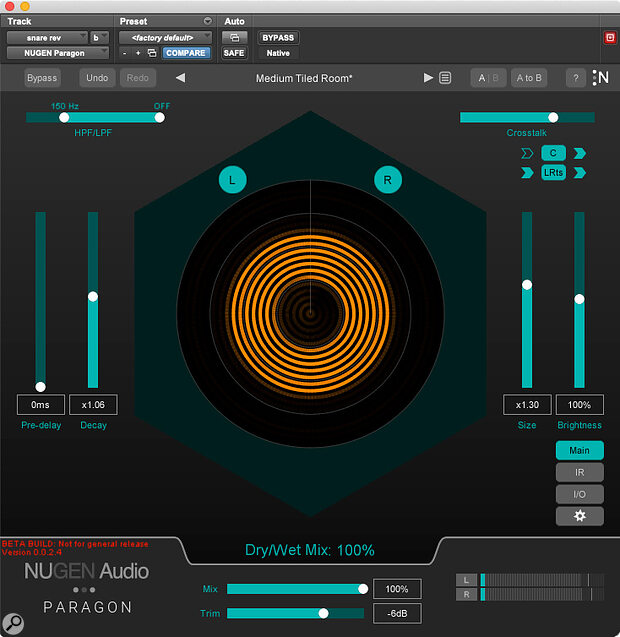

The final Paragon user interface, with its distinctive ‘target’ display.

There was also the minor detail of deciding on a name for the new plug‑in. The product name is at the heart of any marketing campaign, so it’s vital to choose something appropriate; but with hundreds of other reverbs already on the market, it was hard to find a name that wasn’t already in use, that would appeal both inside and outside the English‑speaking world, and which conveyed the impression of high quality. ‘Mint’ being North British slang for quality, the working title of Mint Reverb (chosen partly due to the original UI colour) persisted for a long time, but was eventually deemed too obscure and gave way to Paragon, with its convenient associations of virtue and leadership.

At the time of writing, NUGEN Audio estimate that some 5000 person‑hours have gone into the development of Paragon. The cost of this development has to be borne up‑front, before even a single penny can be recouped through product sales. It’s a big risk for a relatively small business like NUGEN Audio — but in a world saturated with me‑too products, it’s a risk worth taking in order to be first to market with a novel technology.

For more info on Paragon, visit nugenaudio.com

Additive Synthesis & Resynthesis

Until now, additive synthesis has been used mainly to power musical instruments, the most obvious current example being Apple’s Alchemy. Let’s suppose we want to recreate the characteristic timbre of a clarinet. This comprises a fundamental pitch, plus a series of overtones at related frequencies. To mimic this using conventional subtractive synthesis, we’d start with a raw waveform that was rich in overtone content, such as a square or sawtooth, and use filters to remove the overtones we don’t want. Additive synthesis works the other way around: we build upwards from a simple sine‑wave oscillator at the fundamental frequency, adding further sine‑wave oscillators with different amplitudes to generate each of the wanted overtones.
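The additive approach can be sketched in a few lines: one sine oscillator per harmonic, each with its own amplitude, summed into a single waveform. The function name `additive_tone` and the amplitude values are assumptions made for this example and are not measured from a real instrument.

```python
import math

def additive_tone(fundamental_hz, harmonic_amps, sample_rate=48000, length_s=1.0):
    """Sum one sine oscillator per harmonic; harmonic_amps[0] is the fundamental."""
    n = int(sample_rate * length_s)
    out = [0.0] * n
    for h, amp in enumerate(harmonic_amps, start=1):
        w = 2.0 * math.pi * fundamental_hz * h / sample_rate
        for i in range(n):
            out[i] += amp * math.sin(w * i)
    return out

# Illustrative amplitudes only, with the odd harmonics emphasised.
amps = [1.0, 0.05, 0.5, 0.05, 0.3]
tone = additive_tone(220.0, amps, sample_rate=8000, length_s=0.1)
```

A fuller version would give each oscillator its own envelope so that the overtones could evolve over time.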

Manually recreating a complex sound in this way is laborious, so instruments such as Alchemy implement a technique called resynthesis. An additive resynthesizer can inspect an audio file, identify the fundamental and overtones, note how they decay over time, and automatically generate the appropriate array of oscillators and envelopes.
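The analysis half of resynthesis can be illustrated by measuring how strongly each harmonic is present in a recording. This sketch assumes the fundamental frequency is already known and that amplitudes are steady; a real resynthesizer would also have to detect the pitch and track each partial as it changes over time. `measure_harmonics` is a hypothetical helper, not a function from any product.

```python
import math

def measure_harmonics(signal, fundamental_hz, count, sample_rate):
    """Estimate the amplitude of each harmonic by correlating with sine and cosine."""
    n = len(signal)
    amps = []
    for h in range(1, count + 1):
        w = 2.0 * math.pi * fundamental_hz * h / sample_rate
        re = sum(s * math.cos(w * i) for i, s in enumerate(signal))
        im = sum(s * math.sin(w * i) for i, s in enumerate(signal))
        amps.append(2.0 * math.hypot(re, im) / n)
    return amps

# Build a test tone from known amplitudes, then recover them by analysis.
sample_rate, f0, known = 8000, 200.0, [1.0, 0.0, 0.5]
signal = [sum(a * math.sin(2.0 * math.pi * f0 * (h + 1) * i / sample_rate)
              for h, a in enumerate(known))
          for i in range(800)]
recovered = measure_harmonics(signal, f0, len(known), sample_rate)
```

The recovered amplitudes can then be handed to a bank of oscillators, closing the loop from recording to editable synthesis parameters.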

Why go to all this trouble, rather than simply sample the clarinet? Because a resynthesized sound is infinitely mutable, in a way that a sample is not. Radical changes to pitch, duration and timbre are easy to achieve with no loss of sound quality. Resynthesized instruments can be morphed into one another, or you could create a hybrid instrument with, say, the tone of a clarinet and the dynamic envelope of a violin.

In theory, any sound recording can be broken down and reconstructed using additive resynthesis. An impulse response is a type of sound recording: in essence, a ‘sample’ of the acoustic properties of a space. The key insight behind Jez Wells’ research was that applying resynthesis to IRs has the potential to bring similar benefits. Resynthesized impulse responses can be modified far more freely than simple IR recordings, allowing the realism of convolution to be combined with the flexibility of algorithmic reverb.
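For readers unfamiliar with convolution, the sketch below shows the basic operation in its simplest direct form: every sample of the dry audio triggers a scaled copy of the impulse response, and the copies are summed. The values are toy numbers, and real‑time convolution reverbs generally use much faster FFT‑based methods rather than this nested loop.

```python
def convolve(audio, ir):
    """Direct-form convolution: every input sample triggers a scaled copy of the IR."""
    out = [0.0] * (len(audio) + len(ir) - 1)
    for i, a in enumerate(audio):
        for j, h in enumerate(ir):
            out[i + j] += a * h
    return out

dry = [1.0, 0.0, 0.5]          # two "clicks", the second one quieter
ir = [1.0, 0.6, 0.3]           # a toy three-sample decay
wet = convolve(dry, ir)
```

Whether the IR comes from a recording or from a resynthesized model makes no difference to this step; the flexibility lies entirely in how the IR is produced.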

However, there’s a significant difference between using additive synthesis to mimic a clarinet and using it to recreate an impulse response. The reason that a clarinet sounds musical is that a handful of frequencies — the fundamental and its overtones — are much more strongly represented than any others. As a consequence, many pitched sounds can be recreated using only a few tens of sine‑wave oscillators. By contrast, accurately recreating a broadband, non‑pitched sound such as the decay of an impulse in a room can require tens of thousands of oscillators.
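A back‑of‑envelope calculation gives a feel for the difference in scale. The oscillator counts, the three‑second IR length and the 48kHz sample rate below are assumed figures chosen purely for illustration, and the count ignores any optimisation a real implementation would apply.

```python
def oscillator_sample_count(oscillators, ir_seconds, sample_rate=48000, channels=1):
    """Number of individual sine-wave samples a naive render must compute."""
    return oscillators * int(ir_seconds * sample_rate) * channels

pitched = oscillator_sample_count(oscillators=50, ir_seconds=3.0)
room = oscillator_sample_count(oscillators=20_000, ir_seconds=3.0)
ratio = room // pitched
```

Under these assumptions, the room IR needs several hundred times more sine evaluations than the pitched sound for every channel rendered, before any multichannel output is taken into account.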