If you're a musician, podcasting is the perfect application for the studio skills and equipment you already have.

The term 'podcast' has been around for 15 years, and the basic concept dates back to at least the turn of the millennium, but the last few years have seen podcasting really hit the mainstream. For many, the unprecedented success of award-winning true-crime show Serial really blew the lid off in 2014, and we've since seen concerted development by mainstream media outlets such as the BBC, NPR, the New York Times, the Wall Street Journal and Audible, as well as an explosion of niche channels developed by grass-roots enthusiasts. But the podcasting scene still feels a bit like the Wild West, with plenty of opportunities for creative new shows to carve out their own piece of this rapidly expanding audience.

In this article, I'll provide some down-to-earth tips for SOS readers who are considering putting together a podcast for the first time. The good news for project-studio owners is that your music equipment and technological skills give you a massive head start. Indeed, I only got into podcasting myself a few years ago, but have managed to produce a couple of different monthly podcasts — the Cambridge-MT Patrons Podcast (www.cambridge-mt.com/podcast) and Project Studio Tea Break (www.projectstudioteabreak.com) — without the benefit of any traditional broadcasting experience.

Going Solo

The simplest podcast format is where you speak directly to your listeners about a topic. This requires minimal gear: a mic, a stand, and a cable; an audio interface with at least one mic input; and some DAW software. And if you're recording direct from your studio chair, you can combine the workflow of recording and editing in a very natural way. Just record a few phrases; edit and quality-check them; re-record and patch up anything substandard; then rinse and repeat! You quickly learn the habit of backtracking a few words whenever you make a mixtape... er... whenever you make a mistake, so that it's easy to edit seamless repairs. It also becomes second nature to favour slice-points in gaps, breaths, or noisy consonants, and you soon realise how much quicker it is to rerecord dodgy sections than spend ages editing audio snippets around.

If you need to use a dynamic mic without getting too close, an inline signal-booster such as the Triton Audio FetHead can help by providing clean gain before your interface's mic preamp.

The mechanics of speech recording should be straightforward for SOS readers, since it's not a million miles away from capturing sung vocals, but a crucial difference is that the voice will usually be more exposed in podcasts. This means paying greater attention to background details, so large-diaphragm capacitor mics are a decent first choice, on account of their typically high output and low noise floor. A down side is that many such mics are designed to emphasise a vocalist's high frequencies, bringing a danger of excessive sibilance and lip smacks (those little clicks you get when the speaker's lips and tongue briefly stick to each other), both of which are more distracting in the absence of a backing band!

One way to square this circle is to use a dynamic mic (the Shure SM7B and Electro-Voice RE-20 are popular choices), minimising the noise floor by miking up close and perhaps using an inline gain booster such as a Cloudlifter, sE Electronics DM1 or Triton Audio FetHead (pictured) to help out the mic preamp.

The dynamic mic's heavier diaphragm will reduce lip noise and sibilance, and typically gives a more rounded 'radio DJ' tone that many people like. I prefer the high-frequency 'air' and detail of a capacitor mic, so I use the least forward-sounding of my large-diaphragm mics instead, miking above my mouth height to reduce sibilance (which tends to be worst in a horizontal plane at lip height). The secret to keeping lip smacks at bay is to take a sip of water every few phrases so that the vocal apparatus remains well hydrated. But the biggest reason I like using a capacitor mic is that its higher sensitivity allows me to work further away without noise problems: maybe 12-18 inches, with the mic around forehead height. This keeps the mic out of my line of sight while editing, but also allows me to move around a fair bit without the mic's proximity-effect bass boost or off-axis frequency-response variations unduly affecting the vocal tone. For me, this makes it easier to talk freely and naturally while recording, without causing mix difficulties later on.

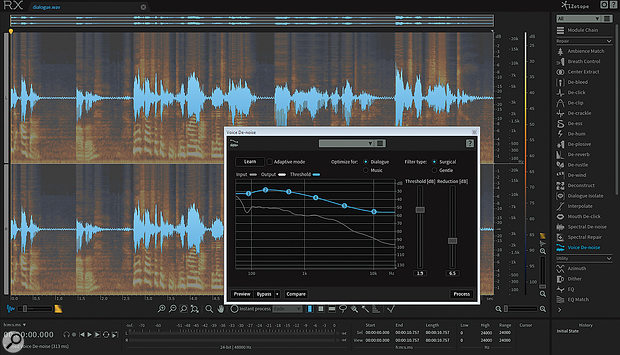

Voices are typically more exposed in podcasts than music, and no matter how much attention you pay when recording, there will likely be times when de-noising software such as iZotope's RX can really help you to deliver a polished result.

Whatever gain-management precautions you take while recording, noise-reduction processing can prove beneficial at the editing stage. Most real-world project studios aren't particularly well soundproofed against external noise (especially from traffic), and there will frequently also be sources of unwanted noise in the room, such as fans, central-heating systems, lights and other appliances. Fortunately, products like iZotope's RX Elements make removing steady-state background noise (hiss, buzz, hum, and the like) straightforward and affordable. My main advice here is to remember, when recording, to capture some of the background noise in isolation, so that you can use it to train the noise-reduction algorithm for the best results. (Don't leave this until later, as some noise sources will vary in character at different times of day.)

Adding Music

One way to make a podcast feel instantly more polished is to add theme music — and this is where being a recording musician really works in your favour! Not only does using your own music sidestep the potentially thorny issue of licensing fees, but it also allows you to generate multiple variants of your theme music for different purposes. You might have an intro theme ending with a simple rhythm-section 'bed' that fades away gradually under your opening comments, and a selection of short five-second 'stings' to place between your podcast's different thematic segments — things like readers' questions, product tests, interviews, quizzes and (if you're lucky!) advertising spots. And, finally, you might have an outro version of the theme, with a slow fade-in and a strong, clear ending to round out the show. This is one area where music producers are uniquely placed to set their podcasts apart, both because plenty of new podcasts can't afford to use music at all, and because your spoken content will be easier for the listener to digest if you use regular musical 'punctuation' to give listeners a bit of a breather from the sound of your voice!

There are also plenty of shows that make much greater use of musical underscore and sound design, especially in the documentary/drama space. However, those are typically much more editing-intensive, so I'm not sure I'd recommend that route for your first foray into podcasting — save it for when you're already comfortable with the basic mechanics and can better gauge the work involved. After all, one of the most frequently cited tenets of online content generation is that your output should appear regularly, so every extra 'production hour' you spend per episode increases the likelihood that you'll have to start postponing or skipping episodes.

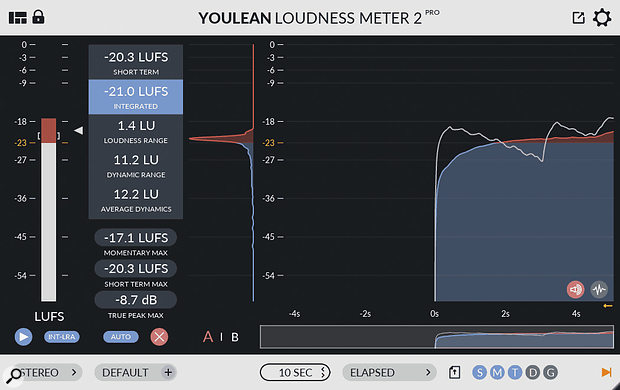

A good loudness meter can be valuable — you can use it, for example, to check the loudness of commercial podcasts (for which there's currently no standard), or to set the relative levels of speech and any critical audio/music examples in your podcast, whose peak levels will naturally differ.

Adding music has some loudness implications, given that no clear loudness standard has yet been agreed for podcast files, and there are plenty of podcast players that don't yet implement loudness-normalisation routines. In general, my advice for choosing a loudness level would be to download some of your favourite podcasts, run them through a loudness meter, compare their loudness-matched sonics, and then take your cues from that. (If you don't already have access to loudness metering, Youlean and Melda both produce decent freeware options, but my own preference is Klangfreund's affordable and more fully-featured LUFS Meter.) However, when there's music in your show, an important additional consideration is how you set the relative levels of the voiceover and music.

You see, it makes sense to mix speech and music elements at similar subjective loudness levels, so that listeners won't have to keep adjusting their volume dials during your episode. But music typically delivers significantly higher peak levels than speech, and if you subsequently try to use mastering processing to match your voiceover levels with mainstream speech-only podcasts, you'll likely squash your music examples to a pulp! So if you plan to use music tracks in your podcast and you want them to sound good, you'll have to keep the speech level lower. For instance, my Cambridge‑MT Patrons Podcast relies heavily on audio examples to illustrate mixing techniques, and I deliberately keep the voiceover level quite low (around -18 LUFS), so that I can avoid using loudness processing, which would mangle the dynamics of my audio examples. On the other hand, Project Studio Tea Break is a more traditional (and rather rowdy!) speech-based discussion format, which means I can afford to have the voiceover levels quite a lot higher, around -13dBFS.

[Since this article was originally published, the podcast industry has now pretty much standardised on -16 LUFS as the optimum level - Ed.]
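To put some rough numbers on the speech-versus-music trade-off, here's a minimal Python sketch. It assumes that a static gain change of 1dB shifts the integrated loudness reading by 1 LU (which holds for a simple level change), and every meter reading in it is invented purely for illustration. The function names are my own and don't come from any particular metering tool.

```python
def gain_to_target(measured_lufs, target_lufs):
    """Static gain (dB) that moves a clip from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db):
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


def limiting_needed(peak_dbfs, gain_db, ceiling_dbfs=0.0):
    """How many dB a limiter would have to shave off the peaks after the gain."""
    return max(0.0, peak_dbfs + gain_db - ceiling_dbfs)


# Hypothetical meter readings: both clips are equally loud, but the
# music clip has much higher peaks than the speech clip.
clips = {
    "speech": {"lufs": -18.0, "peak_dbfs": -7.0},
    "music": {"lufs": -18.0, "peak_dbfs": -1.0},
}
target_lufs = -13.0  # an assumed 'loud speech-only show' target

for name, clip in clips.items():
    gain = gain_to_target(clip["lufs"], target_lufs)
    squash = limiting_needed(clip["peak_dbfs"], gain)
    print(f"{name}: {gain:+.1f} dB gain (x{db_to_linear(gain):.2f}), "
          f"limiter must remove {squash:.1f} dB of peaks")
```

With these made-up figures, the speech reaches the louder target with headroom to spare, whereas the music would need several decibels of peak limiting to get there, which is exactly the 'squashed to a pulp' scenario.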

Two's Company

The main problem with one-person podcasting is that it's limited by the creativity of the host, and there's a risk of monotony, on account of the single voice and single point of view. For this reason, one of the most popular formats involves two people conversing about a given topic. One variant is where they're both permanent co-hosts, for example in mainstream shows like The Dollop history podcast and the BBC's Curious Cases Of Rutherford & Fry, or (in the music-production niche) Ian Shepherd's The Mastering Show or The UBK Happy Funtime Hour.

Although this kind of show can enliven its subject matter by virtue of conversational interplay and personal chemistry between the co-hosts, the trade-off is that you have to develop new content every episode to keep the listener's interest. An alternative two-hander format that mitigates this is where a regular host interviews a series of guests. Because each participant will naturally bring their own perspective to the table, you continually get fresh content without having to research or script very much for yourself. Mainstream shows such as The Joe Rogan Experience or Marc Maron's WTF provide good examples of this, while shows like Working Class Audio and Recording Studio Rockstars — and SOS's own new podcasts, of course — cater directly to the project-studio niche by featuring a wide range of audio engineers and producers. The down sides here are the logistical challenges of finding and scheduling a regular supply of suitable guests; there's no such thing as a free lunch!

Whichever model you go for, easily the most common recording method is to use some kind of Internet telephony service, like Skype or FaceTime. The simplest approach is to use a telephony service with built-in recording functionality, and this may be the only practical option for many host-guest scenarios — you may be an audio specialist, but your interviewee may not! The main thing in that case is just to make sure that both parties are using headphones for the call, so as to avoid problems with echo-back (when your voice emerges from your guest's phone speaker, re-enters their phone mic, and you hear it repeated back to you following the system's transmission delay).

If your guest is techno-savvy enough to be able to route a proper vocal mic through their call software, that's a bonus, but the sound will still be at the mercy of their recording technique/environment and the audio-streaming data compression. My main advice would be to ask your guest, if possible, to avoid/reduce any obvious sources of background noise and to hang up a duvet behind them (an open clothes closet works well too) to reduce room-reverb pickup. Duo podcasts tend to feel most natural and intimate when the listener can imagine both protagonists in the same physical space, and that illusion's a lot trickier to achieve if one side of the conversation is swathed in tumble-dryer noise or honky small-room ambience! This is also a situation where, if asked for technical input, I'd normally suggest the guest adopts the 'eating a dynamic mic' recording approach, simply because I think there's less to go wrong with that method, especially in untreated acoustics.

To be honest, though, I prefer to record my own duo podcast in a different way: my co-host and I track our voices independently to our own local DAW systems, leaving the telephony only to fulfil a communication function — we use the little earbud/mic headset for the phone call, but each of us also has a separate studio mic actually picking up our voices for the podcast recording. That way, at the end of the call, we've each captured just our side of the conversation, and my co-host can send me a WAV of his contribution to line up against my own for editing purposes.

This offers a few advantages. It allows us to keep the audio recording software independent of the telephony software, so the audio quality and reliability of the Skype connection don't compromise the production values of the podcast. In fact, I also like to use a completely separate device for the telephony, rather than running it on my DAW system, to reduce the likelihood of driver conflicts between the different applications. Trust me, you really don't want to have to re-record any conversation on account of audio glitching or computer crashes, because it's a nightmare trying to conjure any sense of spontaneity the second time around.

Having each voice on its own track, free from spill or overlaps, gives you much greater freedom at editing time. I find this useful when compensating for the time lag from a poor telephony connection, because it means I can edit out all the awkward pauses and overlaps that inevitably result from that rather unnatural conversational situation. I'd recommend recording the telephony audio as well, though — this not only provides a guide for sync'ing the two hi-res audio files, it can also save your bacon if one of the DAW systems crashes mid-take or either person forgets to press Record! On one occasion, I was able to salvage a podcast take during which my DAW PC blue-screened, simply by re-recording my side of the conversation (which was captured on the telephony audio) at the editing stage. Yes, it inevitably involved some slightly hammy voice acting on my part, but it was still preferable to trying to restage the whole unscripted segment from scratch.
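If you'd rather not line up the files by eye, the telephony guide track can in principle be used to estimate each file's offset automatically. The following Python sketch shows the idea using a brute-force cross-correlation on short lists of samples. It's an illustration under simplifying assumptions (both recordings at the same sample rate, and a hypothetical function name `best_lag`), not a description of how any particular DAW does it. For real, full-length audio you'd want an FFT-based routine such as `scipy.signal.correlate` instead.

```python
def best_lag(reference, other, max_lag):
    """Return the lag (in samples) at which `other` best matches `reference`.

    A positive result means the shared material occurs later in `other`,
    so `other` must be slid earlier by that many samples to line up.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, ref_sample in enumerate(reference):
            j = i + lag
            if 0 <= j < len(other):
                score += ref_sample * other[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# Toy data: the same short 'syllable' appears in both recordings,
# five samples later in the local DAW file than in the guide track.
syllable = [1.0, -0.8, 0.5, -0.2]
guide = [0.0] * 3 + syllable + [0.0] * 10
local = [0.0] * 8 + syllable + [0.0] * 5

sample_rate = 48000  # assumed; use whatever your session runs at
lag = best_lag(guide, local, max_lag=8)
print(f"local file is offset by {lag} samples "
      f"({1000 * lag / sample_rate:.3f} ms) relative to the guide")
```

Running the same check for each contributor's file against the guide gives you the offsets needed to bring everyone into sync before you start trimming pauses and overlaps.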

Panel Podcasting

Once you have more than two people on a podcast, Internet telephony can become really cumbersome. It's not just that the unavoidable delays interfere with conversational fluency, but also that the subconscious body-language cues we rely on so heavily in real-world interactions are weakened, making it harder for each speaker to sense the non-verbalised reactions and intentions of the others. If you want three or more voices on your podcast, you'll likely get more natural and engaging results if you record all those people in the same room.

As this is a type of podcasting I have less first-hand experience with, I asked Randy M Salo of Moonbase Studios (www.moonbasemunich.com) for some specialist tips. He runs a dedicated podcasting space that caters for larger panel discussions, as well as producing and hosting Progcast on the FREQS network (https://freqs.stewismedia.com).

Randy M Salo (right) with ProgCast co-host Dario Albrecht.

An obvious complication with group recordings is spill, and to combat this Randy uses a good deal of acoustic foam on his room's walls, more than you'd expect in a music-studio live room. So don't be afraid to jury-rig extra temporary deadening measures in your own space if you're trying this kind of session. It also makes sense to work with dynamic mics up close, as well as reminding contributors to stay on-mic. "With people who aren't used to podcasting, they sometimes move away from the mic, or don't talk right into it," explains Randy. "It just doesn't feel natural. And they don't necessarily hear that when they're talking, because they're not paying attention to what they sound like from a technical point of view. So I like to use a large foam windscreen on the mic and try to get them comfortable with being right up close to that. That helps me control the levels, so I don't have to do too much rebalancing work later."

With those simple measures in place, "bleed between microphones is almost no issue when we're in the room together," says Randy. "We often do podcasts with people sitting directly across from each other, less than a metre apart. There is still bleed, but it doesn't affect the podcast quality." But what if people talk over each other? "That happens all the time," he laughs. "Somebody's about to make a point and somebody else cuts in, but then the person that cuts in doesn't continue. I simply mute the person cutting in, and although you do then hear some bleed, it's not a problem in practice. With podcasts, I always think of sound in three dimensions, just as I would when doing movies. So I always want the thing that's telling the story to be in the front. If the bleed from the speaker cutting in is behind the main voice, then it sounds natural and doesn't distract the listener. And I hear that trick all the time on podcasts now, because I'm so used to using it myself!"

The podcasting room at Moonbase Studios — a much drier-sounding environment than a typical music-studio live room, and with an informal atmosphere that helps to put inexperienced guests at ease.

Beyond technical considerations, Randy repeatedly emphasises the importance of making the recording environment as comfortable as possible. "I try to position people so that they can see one another and engage naturally, and I tend to worry about that more than I worry about bleed. Especially if you're there for an hour or two, the comfort and body language is critical. People can be really close to one another — in my opinion, the closer the better — because with podcasting you want to create an environment that allows the people to have some intimacy."

Desk-mounted 'anglepoise' mic stands such as the K&M 23860 or Rode PSA1 can be handy, because their freedom of movement makes it possible to vary seating arrangements to suit the mood. "People can sit on barstools, chairs, or literally lay back on the couch," Randy explains, "and I can still pick them up really nicely. We've even had three people on that couch together because it's all about comfort. Many people aren't used to being in a podcast, having their voice recorded, so it helps them with that. But if we need to switch into more 'business casual' mode, then we just use some nicer stools or chairs instead."

That's not the only psychology involved in getting people to relax in the studio environment. "I always remind people that we're not doing a live broadcast," says Randy, "and tell them that anything can be edited out. If you don't like something we can stop. We can repeat anything and then edit it down. If you forget to plug somebody or mention something like your website, we can always tack that on at the end. I just want to put people at their ease, so they don't feel like anything they say now is immediately going out live."

Although full headphone monitoring is far from essential with one- or two-person shows (beyond hearing the other person's Skype feed where telephony's involved), Randy extols its virtues for group podcasts. "The closer you get people to their mics, the louder they are in their headphones. And it's funny — you can hear it in their voices. Everybody gets really quiet, and it's almost like they forget maybe that the microphones are there, because the sound is so direct, and they don't have to shout. It changes how you speak to somebody. It's like they're not really across at the other side of the room any more."

Mix Processing

When it comes to mixing, SOS readers should encounter few serious challenges, not least because complicated send effects such as delay and reverb rarely have a role to play in traditional speech podcasts. As far as EQ is concerned, there's very little I can generalise about, as it depends so much on the voice, the mic choice, and how the mic was used. Again, the most solid advice I can offer is to compare your podcast against some of the established competition. That said, given that many podcasters work very close to the mic, it's not uncommon to need a few decibels of low shelving cut to rein in excess proximity-effect bass boost below about 300Hz. And I'd also add that the high-frequency 'air' EQ boosts that are so often used for singers to combat masking in music mixing shouldn't be necessary in isolated pure-speech applications. In fact, I'd actively advise against boosting the upper spectrum any more than necessary, to avoid the risk of making consonants fatiguing on the ear.

Usually, the biggest mixing task is reducing each speaker's dynamic range. This is important, because most people listen to podcasts while doing something else, which often means there's a lot of background noise to contend with. Furthermore, if a listener turns up their headphones to battle against environmental noise, any sporadic loudness peaks within the audio file may make for uncomfortable listening. With this in mind, I'd suggest layering compression plug‑ins in series, because trying to get too much gain reduction from a single processor is more likely to give undesirable gain-pumping artifacts.

I first like to compress with a very soft-knee 2:1 ratio using a fast attack and slow release (as well as a few milliseconds of lookahead if the compressor offers that). My aim is to trigger gain reduction the whole time, letting the slow release gently even out medium-term level variations. I'll follow that with a second, higher-ratio compressor with a faster release, setting its threshold to catch individual louder syllables. I might even put a dedicated peak limiter after that to catch unusually large signal peaks. The main decision then is how hard to push each processor — another situation where referencing against competing podcasts is helpful.

The heavier the compression, the more likely you are to suffer from excessive sibilance, because compressors tend to reduce the level of HF-rich 's' and 'sh' sounds less than they do the levels of other vocal components. If your compressors have side-chain EQ functionality, you can mitigate the problem by firmly boosting the side-chain's upper spectrum above about 5kHz or so. If that doesn't do the trick, a dedicated de-esser may be necessary, especially if you've been close-miking with a brighter-sounding capacitor mic.
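To show why two gentle compressors in series can tame a wide dynamic range, here's a Python sketch of the static (steady-state) input/output curves only. It deliberately ignores attack, release and lookahead, and the thresholds, ratios and knee width are hypothetical values chosen for illustration rather than recommended settings. The soft-knee formula is the common quadratic-knee gain computer, which may differ from what your own plug-ins use.

```python
def compress_db(level_db, threshold_db, ratio, knee_db=0.0):
    """Static compressor curve: output level (dB) for a given input level (dB)."""
    over = level_db - threshold_db
    if knee_db > 0 and 2 * abs(over) <= knee_db:
        # Inside the knee: blend smoothly from 1:1 into the full ratio.
        return level_db + (1 / ratio - 1) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    if over > 0:
        # Above the knee: the full ratio applies.
        return threshold_db + over / ratio
    return level_db  # below the knee: untouched


def two_stage(level_db):
    """Gentle soft-knee 2:1 stage followed by a firmer 4:1 stage."""
    stage1 = compress_db(level_db, threshold_db=-30.0, ratio=2.0, knee_db=12.0)
    return compress_db(stage1, threshold_db=-20.0, ratio=4.0)


for level in (-30.0, -20.0, -10.0, 0.0):
    print(f"in {level:6.1f} dB -> out {two_stage(level):6.2f} dB")
```

With these assumed settings, a 30dB spread of input levels comes out spanning about 12dB, yet neither stage on its own is working at more than a moderate ratio. Make-up gain (and any final limiter) would then bring the overall level back up.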

Randy also warns against losing focus on the main voice. "One thing that doesn't come naturally to people who haven't been doing this for a while, is the idea of balancing the voices appropriately against the intro music and any sound effects. For me, that's part of the story-telling. It's important to make sure that the voice is always the star. So, for example, in Progcast we use a foghorn sample whenever someone says anything really nerdy, and it's super-clean, so in order to keep it behind the voice I always run that through a hall or a short delay, and make sure I keep it low enough in the mix so that it never overshadows the voice. It can be really jarring if a sound effect comes in and it's way too loud."

Cast Away!

There's never been a better time to podcast to the world, and I hope I've been able to demonstrate that, for SOS readers in particular, technical hurdles need not get in the way. All you really need is something worth saying...

Check out the new SOS Podcast channels that we launched on 21 May 2020:

https://www.soundonsound.com/podcasts

The Tone Of Podcasting

If you're new to podcasting, it'd be easy to write it off simply as on-demand radio. However, although there have been some mainstream radio-show spin-offs, podcasts tend on the whole to have a different character from traditional broadcasting. Partly, this is because on-demand podcasts are more often consumed with active attention by mobile-device users wearing earbuds, rather than being heard sporadically in the background in the way that many people consume radio. This puts the podcaster much closer to the listener psychologically, as if actually speaking into their ear. In this context, a more casual, low-key vocal delivery feels more natural than the traditional 'projected' radio-announcer voice — not least because it's in keeping with typical audio-book recordings, which share a similar listener base.

The strong indie roots of podcasting (and its low bar of entry, technologically speaking) mean that it's also more associated with real-world people than with professional broadcasters. It's precisely the unvarnished authenticity that sets podcasting apart for many people who've grown bored or cynical of over-polished mainstream media. So where traditional talk-radio would typically have edited out 'um's/'er's, conversational tangents, and performer/technology goofs, those are things that podcasts increasingly wear as a badge of honour. A great example is Americast, in which veteran broadcast journalists Emily Maitlis and Jon Sopel call each other by nicknames, crack in-jokes, struggle (more or less successfully) with their podcasting technology, interview correspondents at dinner, shoo guests off mid-show so they don't miss their flights... basically a whole bunch of things that used to be left 'behind the curtain', but which audiences increasingly enjoy sharing.

Indeed, it's often the tangential, personal elements of a podcast that end up carrying the most appeal. The BBC's Fortunately... podcast, for instance, started off as a kind of highlights round-up from the week's talk radio, but quickly transformed into a free-wheeling conversational show, once they realised that the main reason people were tuning in was to hear UK national treasures Fi Glover and Jane Garvey giggling irreverently off-script about everyday Broadcasting House minutiae!

Another hallmark of podcasting is direct audience participation, which doesn't just mean fielding listener questions (despite the popularity of question-led shows such as Answer Me This or My Brother My Brother And Me). It's about allowing listeners to get involved with the direction of the show, especially where the subject is a topical one. For example, Jamie Bartlett's recent The Missing Cryptoqueen documentary podcast deliberately started releasing weekly episodes without a complete series plan, because they wanted to seek out and follow up additional investigative leads from their listeners. The Maximum Fun network's The Adventure Zone (a real-time Dungeons & Dragons podcast) used Twitter followers' names for non-player characters and allowed listeners to design absurd magical items for use in the game. And one of my favourite podcasts ever, Reply All #109, featured host Alex Goldman trying to persuade a series of listeners that Facebook isn't illicitly spying on them through their phone mic, leaving co‑host P J Vogt speechless with hysterical laughter at his total lack of success. Mainstream radio was already doing this kind of thing, but successful podcast creators typically take it further — perhaps because they're more interested in connecting directly with individual listeners than in pitching their content for licensing to large media conglomerates.

Specialist Podcast Recorders

Recent months have seen the birth of a new breed of dedicated hardware designed to streamline podcast production, such as the Rode RodeCaster Pro v2 [reviewed in the SOS June 2020 issue] or the new Zoom LiveTrack L8 that Randy M Salo has at the centre of his podcasting rig.

In addition to multi-channel mic preamplification and audio interfacing, these offer useful functions such as fader-based hardware control, battery-powered operation, internal SD card data storage, and dedicated one-shot sample-trigger buttons for stings and sound effects — useful if you plan to adapt your podcast into a live show. You get specialised monitoring facilities for more complicated session setups, which allow in-room contributors to hear your full mix (including their own mic), while any telephonically connected contributors get a 'mix minus', without the distracting echo-back of their own phone mic.
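The 'mix minus' idea is simple enough to express in a few lines of Python: each remote contributor hears the sum of every source except their own. This is only a conceptual sketch with hypothetical source names and sample values, and it says nothing about how any specific hardware unit implements the feature internally.

```python
def mix(sources, exclude=None):
    """Sum all sources sample by sample, optionally leaving one out."""
    length = max(len(samples) for samples in sources.values())
    out = [0.0] * length
    for name, samples in sources.items():
        if name == exclude:
            continue  # this is the 'minus' in mix minus
        for i, value in enumerate(samples):
            out[i] += value
    return out


# Hypothetical three-sample snippets from each source.
sources = {
    "host_mic": [0.10, 0.20, 0.00],
    "guest_mic": [0.00, 0.10, 0.30],
    "phone_caller": [0.25, 0.00, 0.05],
}

room_feed = mix(sources)                            # in-room headphones: everything
caller_feed = mix(sources, exclude="phone_caller")  # sent back down the phone line

print("room feed:  ", room_feed)
print("caller feed:", caller_feed)
```

Because the caller's own signal never appears in the feed that's sent back to them, there's nothing to return as a delayed echo.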

Things That Go Bump On The Mic

A problem with close-miking speech is that some consonants shoot out jets of air which can cause air-blast noises if they hit the mic's diaphragm directly. Common culprits tend to be the plosives ('p' and 'b'), but I've also encountered similar blasts on 'w', 'th' and 'f' sounds. A common solution when recording singers is to use a pop shield. That'll work with speech, too, but note that bulkier models can obstruct important sight lines. (If you're recording yourself in front of your DAW, this is another advantage of using a more distant off-axis capacitor mic-placement; wind blasts are normally very directional, jetting forward from the speaker's mouth.)

In the real world, you'll probably find yourself occasionally having to sort out wind-blast noise after the fact: you may not have enough pop shields available for a panel discussion, or a guest recording remotely may not have one. Because speech wind-blasts are usually mostly low-frequency energy, high-pass filtering is an effective solution. A filter slope of 12dB/octave or more makes sense, but you should adjust the cutoff frequency by ear so it's just high enough to rein in the low-end thud without thinning the vocal tone. If the plosives are mild, you may be able to high-pass filter the whole recording, but for more extreme problems I'd suggest using your DAW's region-specific processing to restrict the low-cut to the offending moments. You'll find you can raise the high-pass filter frequency for the worst segments higher than you'd be able to get away with on the whole track.
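For anyone curious about what region-specific high-pass filtering amounts to under the hood, here's a rough Python sketch. It isn't how any particular DAW does it. It assumes a second-order (12dB/octave) Butterworth-style high-pass built from the widely published 'Audio EQ Cookbook' biquad formulas, and the function names, the 120Hz cutoff and the region boundaries are all placeholders chosen for illustration. In practice you'd still set the cutoff by ear.

```python
import math
from typing import List, Tuple


def highpass_coeffs(cutoff_hz: float, sample_rate: int,
                    q: float = math.sqrt(0.5)) -> Tuple[float, ...]:
    """Normalised biquad coefficients for a 12dB/octave high-pass filter."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b0 = (1.0 + cos_w0) / 2.0 / a0
    b1 = -(1.0 + cos_w0) / a0
    b2 = b0
    a1 = -2.0 * cos_w0 / a0
    a2 = (1.0 - alpha) / a0
    return b0, b1, b2, a1, a2


def highpass(samples: List[float], cutoff_hz: float,
             sample_rate: int) -> List[float]:
    """Run a mono signal through the high-pass filter (direct form I)."""
    b0, b1, b2, a1, a2 = highpass_coeffs(cutoff_hz, sample_rate)
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def highpass_region(samples: List[float], start: int, end: int,
                    cutoff_hz: float, sample_rate: int) -> List[float]:
    """Filter only samples[start:end]; leave everything else untouched."""
    filtered = highpass(samples[start:end], cutoff_hz, sample_rate)
    return samples[:start] + filtered + samples[end:]


# Example: a constant offset stands in for a low-frequency thud, and
# only the middle half-second of a two-second clip is filtered.
sample_rate = 48000
audio = [1.0] * (2 * sample_rate)
cleaned = highpass_region(audio, sample_rate // 2, sample_rate,
                          cutoff_hz=120.0, sample_rate=sample_rate)
```

Note that this sketch switches the filter in and out abruptly at the region boundaries, which could itself cause a click on real audio, so a short crossfade at each edge would be a sensible addition.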

High-pass filtering can also come in handy if you need to remove low-frequency thumps and rumbles transmitted to the mic mechanically via the stand or cable. Traffic rumble is common on home-studio recordings, and it's not unknown for podcast contributors to hit the mic while speaking, or bump the stand legs with their feet. You can also avoid this kind of thing by using a decent suspension shockmount and making sure that the mic cable isn't held under tension (which can subvert the shockmount's operation).

How Long Should Each Episode Be?

One of the great freedoms of the podcasting world is that there are no standard lengths for podcasts: you'll find everything from audio snacks like Scientific American's 60-Second Science or NPR's Up First news round-up, all the way to sprawling 'slow media' banquets such as Sam Harris's Making Sense or Dan Carlin's Hardcore History. This fact, along with the importance of upload regularity, is a big reason why I think podcasts in general are less tightly edited than radio, where it's vital that the material be shoehorned into a fixed duration for scheduling.

Bear in mind, though, that editing can also play a large role in setting the subjective pace of your podcast, and there are, unfortunately, far too many podcasts that use the lack of length restrictions as an excuse for poor structuring and general waffle. Or, to put it another way: how cool do you think you'll sound when the listener loses patience and switches to 1.5x playback?

Can You Make Money?

When it comes to making money from your podcasts, there are various possible business models — but possibly the easiest way to start is to use a donation/subscription service such as Patreon, and offer bonus content for subscribers.

How can you make money from this? There's no industry-standard funding model, but niche programmes which would once have struggled to find mainstream support can finance themselves directly from their small, dedicated worldwide fanbases.

Traditional radio-style recorded adverts haven't gone away, and you'll hear those on shows from the larger podcast networks such as Earwolf, Gimlet, Radiotopia and Wondery or from individual top-tier personalities like Phil McGraw, Joe Rogan and Dave Ramsey. However, advertisers tend to look more for listener numbers than listener enthusiasm, so specialist podcasts will need a different approach.

The primary indie funding model is to solicit money straight from the audience. At its simplest, this might be a website with a PayPal 'Donate' button, but most podcasters now gravitate towards a donation/subscription model, offering things like early access, additional content, in-person hang-outs, or limited-edition merchandise in return for a monthly payment. If you don't fancy the technical headache of setting up your own subscription site, there are third-party services that will manage it for you. Probably the best-known is Patreon, but smaller services such as Subscribestar and Liberapay offer alternatives, depending on the facilities you need and the commission fees you're willing to pay.

Another approach is to treat the podcast as a kind of loss-leader, designed primarily to direct listeners to your other paid products. This is popular with podcasters who sell digital products or professional services, because they can tailor the podcast content to a precise target market. Let's say you sell specialist parts for vintage cars. Why not start a podcast with tips about repairing classic vehicles? If you sound like you know your stuff, listeners are much more likely to come to your store for their Morris Minor's replacement gangle pin. Or if you give out great answers to home-knitting questions, your audience will inevitably be well-disposed to your 12 Steps To Wonderful Woollens online course or e‑book.

A more recent development is for podcasters to take their show on the road. Professional actors and comedians have been in the vanguard here: The Dollop, The Bugle, and The Adventure Zone all immediately spring to mind. However, the rise of independent podcast festivals such as Podfest and Now Hear This, and the proliferation of adjunct podcast stages at live-music and literary events, offer the opportunity for many smaller shows to connect more directly with their own listeners, and expand their reach to a wider audience.