Mono Modernity

In my family home, we listen to music on a single-speaker DAB radio in the kitchen or a Bluetooth Sonos speaker that we move to where it's wanted, and my teenage daughter uses her iPhone's speaker! Such small-speaker mono systems are probably typical today. When a stereo mix is folded down to mono, centre-panned mono sources such as a typical lead vocal become louder relative to elements panned out to the sides, and I've tended to find that 'tucking vocals in' a little more and working harder at fine-tuning the balance helps the mix translate better on such playback devices. Of course, more listeners than ever are also listening in stereo on headphones, so I wondered how, if at all, the engineers felt recent changes in consumer listening habits had affected how they approached the mix and judged the vocal levels.
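To put a rough number on that fold-down effect, here is a minimal Python sketch. It assumes a constant-power (-3 dB centre) pan law and a simple (L + R) / 2 mono sum. Both are assumptions made for illustration, since DAWs offer several pan laws and playback devices sum to mono in their own ways, and the function names are hypothetical.

```python
import math

def pan_gains(pan):
    """Assumed constant-power pan law; pan runs from -1 (hard left) to +1 (hard right)."""
    theta = (pan + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)

def mono_fold_db(pan):
    """Level in dB of a unit-amplitude source after an assumed (L + R) / 2 mono sum."""
    left, right = pan_gains(pan)
    return 20.0 * math.log10((left + right) / 2.0)

centre_vocal_db = mono_fold_db(0.0)    # centre-panned lead vocal
hard_panned_db = mono_fold_db(-1.0)    # a part panned hard left
vocal_lift_db = centre_vocal_db - hard_panned_db

print(f"Centre source sits {vocal_lift_db:.1f} dB above a hard-panned one in mono")
```

Under these assumptions the centre-panned vocal ends up about 3 dB above a hard-panned part in the mono sum, even though the two carry equal power in stereo. A different pan law gives a different figure, and genuinely stereo sources such as reverbs behave differently again.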

All the engineers discussed the importance of good monitoring when working on vocals, and each of them recommended different tools to help in that, ranging from old studio stalwarts such as the Auratones and Yamaha NS10 speakers (pictured), through more modern consumer speakers with bass enhancement, such as Sonos Bluetooth speakers, to 'room-correction' software like Sonarworks Reference.

Tony Hoffer has always valued monitoring in mono: "I'll mostly mix in stereo on the ProAcs, but I'll definitely spend at least 20 percent of the mix on one Auratone speaker listening quietly in mono. I came up working in audio post-production and learned the importance early on of having music sound good in both stereo and mono. I think that's helped a great deal with not only getting a good balance but also getting my work to sound good on TV, films and commercials."

Jack Ruston was keen to stress the primacy of the stereo mix: "We don't want to 'break' the mix on headphones because of the way it sounds on a phone speaker." And his sentiment was echoed by Romesh Dodangoda: "I will keep [mono] in mind, but I want a mix that sounds good in stereo, not a compromised mix in case someone listens in mono." Julian Kindred was, if anything, more emphatic: "Stereo is everything! It's far more representative of the real world than mono. I work in mono a bit to ensure that the impact of a stereo mix that's turning out well still has mid-range impact, punch and overall 'glue'; but stereo comes first, because when the vocal punches in stereo and mono in equal measure, then you've properly presented it!"

For all five engineers, headphones play only a small part in the mix process. Romesh Dodangoda: "I don't do a lot of mixing on headphones really and I think it's really important to do your vocal balances on a pair of speakers if possible." While acknowledging how helpful they can be for checking elements in a mix, Jack Ruston also recommended "trusting the balance you got on your speakers". Tony Hoffer had a similar message: "I don't personally spend a lot of time on headphones. I check the mix on my Sennheiser HD600s when I'm like 95 percent there. It's good to check the balance and how the low, mid and top sound, but I don't like automating on them."

Julian Kindred also uses them sparingly: "I love checking on my Beyer MD770s, but I don't spend lots of time with them, just enough to get a sense of that perspective. I feel it's much more effective to get the vocal's presence and position correct on speakers, because when you listen that way the mix moves the air around you. It leads to far more lifelike judgement calls about the vocal impact."

Clip Gain & Automation

Many inexperienced mixers are aware of vocal level riding and, I suspect, feel they should be doing more of it, but often don't really understand how to do it effectively. All the engineers explained that this is an important part of how they mix vocals, although they revealed slightly different ways of getting the desired result: a dynamic vocal that moves with the music.

Tony Hoffer explained: "I always automate the vocal with a fader over a number of passes of the song. If I need more or less, I'll then trim an area down but keep the relative rides I did." I got the impression he uses a physical fader: "Riding the faders in real time seems to get more dynamic results than systematically raising certain parts of a mix by fixed amounts."
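The idea of trimming an area while keeping the relative rides can be sketched in a few lines of Python. This is only an illustration, not how any particular DAW implements trim automation: the breakpoint format, the `trim_rides` name and the ride values are all hypothetical, and the sketch simply shifts existing breakpoints without adding new ones at the region boundaries.

```python
def trim_rides(points, start_s, end_s, trim_db):
    """Offset every breakpoint whose time lies in [start_s, end_s] by trim_db.

    points is a list of (time_s, level_db) tuples. Adding a dB offset scales
    the linear gain, so the shape of the rides inside the region is preserved.
    """
    return [
        (t, level + trim_db) if start_s <= t <= end_s else (t, level)
        for t, level in points
    ]

# Hypothetical fader rides written across a chorus, in dB relative to unity
rides = [(30.0, 0.0), (32.0, 1.5), (34.0, -0.5), (36.0, 2.0), (40.0, 0.0)]

# Pull the whole chorus down by 1 dB without redrawing the moves
trimmed = trim_rides(rides, 32.0, 36.0, -1.0)

for (t, before), (_, after) in zip(rides, trimmed):
    print(f"{t:5.1f} s  {before:+.1f} dB -> {after:+.1f} dB")
```

The 2 dB swing between the breakpoints at 32 and 34 seconds survives the trim; only the overall level of the region changes.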

Boe Weaver also automate but eschew the physical fader: "Assuming the vocal is processed and sounding good overall, we will do rides, which we just draw in by hand, normally focusing on the right amount of change needed for a chorus, etc. Generally, throughout our Pro Tools sessions, though, we have VCA channels moving all over the place!"

Romesh Dodangoda described another approach: "I will quite often have my verse and chorus vocals going to identical buses. Same plug-ins, same EQ, but the chorus on a separate bus allows me to globally push the volume up when the arrangement gets busier. I prefer this to drawing in automation as it just keeps it a little clearer for me visually. I also do more automation where needed, though."

Compression can obviously be a big part of mixing a vocal, and doing some 'evening out' of a signal before a compressor is quite a common approach, as Jack Ruston explained: "I usually start by using a clip-gain–like process to address any passages or phrases that are clearly too loud, or too quiet. It's likely that I'm going to compress the vocal, but I'm doing that for sonics more than level so I don't want anything to hit that compression too hard and introduce esses or unwanted distortion." But he'll also apply level changes after the compressor: "Finally, after whatever sonic processing I settle on, there will almost always be automation, sometimes quite a bit and often to replace any dynamics that the previous processes have flattened out."

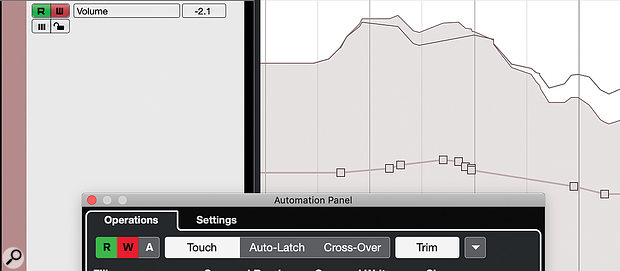

A real fader control surface can make it quicker and easier to write detailed vocal level automation, particularly if your DAW supports relative trim automation, which allows you to scale rather than overwrite existing automation.

Julian Kindred agreed: "I use clip-gain quite a bit because I love its influence on the final contour and shape of a vocal's character, so the performance is more dynamically consistent going into any bus or parallel processing like compression, levelling or distortion. However, that doesn't mean it's automatically a substitution for automation. Recorded music is two types of dynamics blended: controlled sonic dynamics, and variation in the song's arranged dynamics. The two strengthen each other in the final mix. The controlled aspect provides the punch and power, while the variation shows off the natural dynamics of the arrangement." He continued: "There's a lot of interest in plug-ins that 'ride' a vocal. That's fine, if it's not a lazy crutch and the song gets 'massaged' as it deserves... Sometimes the draw view in your DAW is a flat line; other times it's as intricate as the Manhattan skyline. And, depending on the song, both are correct."

Tony Hoffer described another tactic for manipulating the pre-compressor level, to shape the vocal sound: "I'll put a trim plug-in with the bypass and trim automated in case I want to hit the compressors less or more for certain sections of a song."
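To see why a trim ahead of the compressor changes how hard it works, consider the static curve of a simple hard-knee compressor. The Python sketch below is a simplified model under stated assumptions: the threshold, ratio and phrase level are hypothetical values chosen for illustration, and attack, release and knee behaviour are ignored entirely.

```python
def gain_reduction_db(input_db, threshold_db=-18.0, ratio=4.0):
    """Static gain reduction in dB of an idealised hard-knee compressor.

    Returns 0 when the input is at or below the threshold.
    """
    over = max(0.0, input_db - threshold_db)
    return over - over / ratio

phrase_peak_db = -10.0  # hypothetical level of a loud vocal phrase

# Compare no trim with a 4 dB cut and a 4 dB boost ahead of the compressor
for trim_db in (0.0, -4.0, 4.0):
    reduction = gain_reduction_db(phrase_peak_db + trim_db)
    print(f"trim {trim_db:+.0f} dB -> {reduction:.1f} dB of gain reduction")
```

With these numbers, a 4 dB cut before the compressor halves the gain reduction on that phrase, from 6 dB to 3 dB, while a 4 dB boost raises it to 9 dB. The same reasoning applies to clip gain used ahead of compression.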

I think the key 'take away' is that micro-managing vocal levels matters: you need to find a technique that works for you and encourages you to use your ears as much as possible. Some draw automation in, others use faders, but notably those who use faders don't try to write all the automation in a single pass. If you want to go down that road, it's well worth getting your head around your DAW's different options for recording new automation over what already exists.

There seems to be no doubt that detailed level automation is of huge importance in getting a vocal to sit in the mix.
