Erica Synths Syntrx

The Syntrx is not a clone, but its inspiration is clear. Can it recapture the spirit of the legendary EMS Synthi?

The physics of loudspeakers have hardly changed in a century — but DSP and material science have come a long way, and the Pulsar takes full advantage.

ZoneMatrix transforms a single instance of Kontakt into a multi‑instrument performance environment.

Recording an instrument you’ve not encountered before can seem daunting. Here’s a rule of thumb that guarantees a decent result every time.

We check out IK’s latest refresh of their popular amp and effects modelling suite.

Famed for its speed of use, the latest version of FL Studio is a highly sophisticated music production environment.

From sketches to final mixes, engineer Jonathan Low spent 2020 overseeing Taylor Swift’s hit lockdown albums folklore and evermore.

No‑one can tell you exactly what to buy without knowing more about your circumstances, your preferences, your studio and the things you’re likely to be recording. And the best person to come up with those answers is you.

In Auto Sampler, the main features behind the automatic creation of sample sets are presented in a very intuitive fashion and work extremely well...

We put Mackie’s diminutive multimedia speakers to the test.

Choose a mic with the optimum polar pattern for the job, and you’re halfway to capturing a great recording.

For anyone with an interest in recording classical performances of any form — whether they’re an amateur, a professional or an academic — this is absolutely essential reading.

The concept behind the Soundevice Digital DIFIX is, as the name implies, to ‘fix’ your DI recordings.

This delicious‑sounding phaser should fill the needs of funk players or those trying to replicate some of the gentler Jimi Hendrix and David Gilmour effects.

Synth fans of a certain age will remember the Keyfax books by musician and sometime SOS contributor Julian Colbeck. Back in...

The SWN is a fairly big 26HP six‑voice module capable of creating slowly morphing drones, polyphonic melodies, evolving sequences and a vast range of rich textures.

Qu‑Bit’s Surface collects together several percussive‑style physical modelling algorithms in one 10HP module.

Euporie is a ‘multi‑response’ analogue low‑pass filter whose resonance can be switched between a Moog and Sallen & Key characteristic, giving you two filters for the price of one.

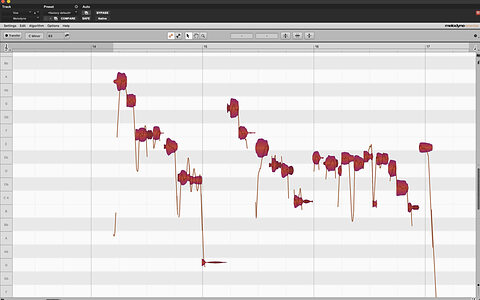

Melodyne Essential now comes bundled with Pro Tools 2020.11, so let's explore what it offers.

I wish to do some surround mixing in my home studio. I already have stereo monitors... Can I just add three more of the same speaker?